Sonic Images and Visual Poetics

Recommended publications

THX Room EQ Manual

Home THX Audio System Room Equalization Manual Rev. 1.5. This document is Copyright 1995 by Lucasfilm Ltd. THX and Home THX are Registered Trademarks of Lucasfilm Ltd. Dolby Stereo, Dolby Pro Logic, and Dolby AC-3 are trademarks of Dolby Laboratories Licensing Corporation. Table of Contents: Introduction and Background: Why Room Equalization?; Test Equipment Requirements; The Home THX Equalizer; Equalization Procedures. Section 1: EQ Procedure Using the THX R-2 Audio Analyzer: 1.1 - 1.3 Test Equipment Set-Up; 1.4 - 1.7 LCR Equalization; 1.8 - 1.10 Subwoofer Equalization; 1.11 Listening Tests. Section 2: EQ Procedure Using a Conventional RTA: 2.1 - 2.3 Test Equipment Set-Up; 2.4 - 2.6 LCR Equalization -

Digital Dialectics: The Paradox of Cinema in a Studio Without Walls

Scott McQuire, ‘Digital dialectics: the paradox of cinema in a studio without walls’, Historical Journal of Film, Radio and Television, vol. 19, no. 3 (1999), pp. 379 – 397. This is an electronic, pre-publication version of an article published in Historical Journal of Film, Radio and Television. Historical Journal of Film, Radio and Television is available online at http://www.informaworld.com/smpp/title~content=g713423963~db=all. There’s a scene in Forrest Gump (Robert Zemeckis, Paramount Pictures; USA, 1994) which encapsulates the novel potential of the digital threshold. The scene itself is nothing spectacular. It involves neither exploding spaceships, marauding dinosaurs, nor even the apocalyptic destruction of a postmodern cityscape. Rather, it depends entirely on what has been made invisible within the image. The scene, in which actor Gary Sinise is shown in hospital after having his legs blown off in battle, is noteworthy partly because of the way that director Robert Zemeckis handles it. Sinise has been clearly established as a full-bodied character in earlier scenes. When we first see him in hospital, he is seated on a bed with the stumps of his legs resting at its edge. The assumption made by most spectators, whether consciously or unconsciously, is that the shot is tricked up; that Sinise’s legs are hidden beneath the bed, concealed by a hole cut through the mattress. This would follow a long line of film practice in faking amputations, inaugurated by the famous stop-motion beheading in the Edison Company’s Death of Mary Queen of Scots (aka The Execution of Mary Stuart, Thomas A. -

Frank Zappa and His Conception of Civilization Phaze Iii

University of Kentucky, UKnowledge. Theses and Dissertations--Music, 2018. FRANK ZAPPA AND HIS CONCEPTION OF CIVILIZATION PHAZE III. Jeffrey Daniel Jones, University of Kentucky, [email protected]. Digital Object Identifier: https://doi.org/10.13023/ETD.2018.031. Right click to open a feedback form in a new tab to let us know how this document benefits you. Recommended Citation: Jones, Jeffrey Daniel, "FRANK ZAPPA AND HIS CONCEPTION OF CIVILIZATION PHAZE III" (2018). Theses and Dissertations--Music. 108. https://uknowledge.uky.edu/music_etds/108 This Doctoral Dissertation is brought to you for free and open access by the Music at UKnowledge. It has been accepted for inclusion in Theses and Dissertations--Music by an authorized administrator of UKnowledge. For more information, please contact [email protected]. STUDENT AGREEMENT: I represent that my thesis or dissertation and abstract are my original work. Proper attribution has been given to all outside sources. I understand that I am solely responsible for obtaining any needed copyright permissions. I have obtained needed written permission statement(s) from the owner(s) of each third-party copyrighted matter to be included in my work, allowing electronic distribution (if such use is not permitted by the fair use doctrine) which will be submitted to UKnowledge as Additional File. I hereby grant to The University of Kentucky and its agents the irrevocable, non-exclusive, and royalty-free license to archive and make accessible my work in whole or in part in all forms of media, now or hereafter known. I agree that the document mentioned above may be made available immediately for worldwide access unless an embargo applies. -

&Blues GUITAR SHORTY

jazz & blues report, now in our 32nd year. september/october 2006, issue 286, free. www.jazz-blues.com. GUITAR SHORTY INTERVIEWED, PLAYING HOUSE OF BLUES, ARMED WITH NEW ALLIGATOR CD. INSIDE: 2006 Gift Guide: Pt.1. Published by Martin Wahl Communications. Editor & Founder: Bill Wahl. Layout & Design: Bill Wahl. Operations: Jim Martin, Pilar Martin. Contributors: Michael Braxton, Mark Cole, Dewey Forward, Steve Homick, Chris Hovan, Nancy Ann Lee, Peanuts, Mark Smith, Dave Sunde, Duane Verh and Ron Weinstock. Photos of Guitar Shorty courtesy of Alligator Records. Check out our constantly updated website. Now you can search for CD Reviews by artists, Titles, Record Labels, keyword or JBR Writers. 15 years of reviews are up and we’ll be going all the way back to 1974. GUITAR SHORTY INTERVIEWED, By Dave Sunde: geles on a rare off day from the road. “I would come home from school and sneak in to my uncle Willie’s bedroom and try my best to imitate him playing the guitar. I couldn’t hardly get my arms over the guitar, so I would fall down on the floor and throw tantrums because I couldn’t do what I wanted. Grandma finally had enough of all that and one morning she told my Uncle Willie point blank, I want you to teach this boy how to ‘really’ play the guitar before I kill him,” said Shorty. Fast forward through years of late night static filled AM broadcasts crackling the southbound airwaves out of Cincinnati that helped further develop David’s appreciative musical ear. T. Bone Walker, B.B. King and Gospel innovator Sister Rosetta Tharpe were the late night companions who spent -

Middle Years (6-9) 2625 Books

South Australia (https://www.education.sa.gov.au/) Department for Education. Middle Years (6-9): 2625 books.

| Title | Author | Category | Series | Description | Year Level | Aus |
| --- | --- | --- | --- | --- | --- | --- |
| 10 Rules for Detectives | MEEHAN, Kierin | Adventure | | Kev and Boris' detective agency is on the trail of a bushranger's hidden treasure. | 6 to 9 | 1 |
| 100 Great Poems | PARKER, Vic | Poetry | | An all encompassing collection of favourite poems from mainly the USA and England, including the Ballad of Reading Gaol, Sea... | 6 to 9 | 0 |
| 1914 | MASSON, Sophie | Historical | Australia's Great War | The Julian brothers yearn for careers as journalists and the visit of the Austrian Archduke Franz Ferdinand affords them the... | 6 to 9 | 1 |
| 1915 | MURPHY, Sally | Historical | Australia's Great War | Stan, a young teacher from rural Western Australia at Gallipoli in 1915. His battalion lands on that shore ready to... | 6 to 9 | 0 |
| 1917 | GARDINER, Kelly | Historical | Australia's Great War | Flying above the trenches during World War One, Alex mapped what he saw, gathering information for the troops below him.... | 6 to 9 | 1 |
| 1918 | GLEESON, Libby | Historical | Australia's Great War | The story of Villers-Bretonneux is described as when the Australians held out against the Germans in the last years of... | 6 to 9 | 1 |
| 20,000 Leagues Under the Sea | VERNE, Jules | Classics | Indiana Illustrated Classics | An expedition to destroy a terrifying sea monster becomes a mission involving a visit to the sunken city of Atlantis... | 6 to 9 | 0 |
| 200 Minutes of Danger | HEATH, Jack | Adventure | Minutes of Danger | Each book in this series consists of 10 short stories each taking place in dangerous situations. | 6 to 9 | 1 |

-

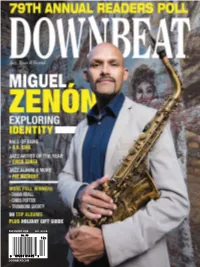

Downbeat.Com December 2014 U.K. £3.50

DOWNBEAT, DECEMBER 2014. DOWNBEAT.COM. U.K. £3.50. 79TH ANNUAL READERS POLL WINNERS | MIGUEL ZENÓN | CHICK COREA | PAT METHENY | DIANA KRALL. DECEMBER 2014, VOLUME 81 / NUMBER 12. President: Kevin Maher. Publisher: Frank Alkyer. Editor: Bobby Reed. Associate Editor: Davis Inman. Contributing Editor: Ed Enright. Art Director: LoriAnne Nelson. Contributing Designer: Žaneta Čuntová. Bookkeeper: Margaret Stevens. Circulation Manager: Sue Mahal. Circulation Associate: Kevin R. Maher. Circulation Assistant: Evelyn Oakes. ADVERTISING SALES: Record Companies & Schools: Jennifer Ruban-Gentile, 630-941-2030, [email protected]. Musical Instruments & East Coast Schools: Ritche Deraney, 201-445-6260, [email protected]. Advertising Sales Associate: Pete Fenech, 630-941-2030, [email protected]. OFFICES: 102 N. Haven Road, Elmhurst, IL 60126–2970. 630-941-2030 / Fax: 630-941-3210. http://downbeat.com [email protected]. CUSTOMER SERVICE: 877-904-5299 / [email protected]. CONTRIBUTORS: Senior Contributors: Michael Bourne, Aaron Cohen, Howard Mandel, John McDonough. Atlanta: Jon Ross; Austin: Kevin Whitehead; Boston: Fred Bouchard, Frank-John Hadley; Chicago: John Corbett, Alain Drouot, Michael Jackson, Peter Margasak, Bill Meyer, Mitch Myers, Paul Natkin, Howard Reich; Denver: Norman Provizer; Indiana: Mark Sheldon; Iowa: Will Smith; Los Angeles: Earl Gibson, Todd Jenkins, Kirk Silsbee, Chris Walker, Joe Woodard; Michigan: John Ephland; Minneapolis: Robin James; Nashville: Bob Doerschuk; New Orleans: Erika Goldring, David Kunian, Jennifer Odell; New York: Alan Bergman, -

Electronic Labyrinth: THX 1138 4EB

Electronic Labyrinth: THX 1138 4EB. By Matthew Holtmeier. Considering at least one “Star Wars” film has been released every decade since the 1970’s, this epic science fiction franchise likely jumps to mind upon hearing the name George Lucas. Avid filmgoers might think, instead, ‘Industrial Light and Magic,’ which has contributed to the special effects of over 275 feature films since it was founded by Lucas in 1975. Either way, Lucas’s penchant for the creation of fantastic worlds has radically shaped the cinematic landscape of the past 40 years, particularly where big budget films are concerned. Like many filmmakers since the growth of film schools in the 60’s, he started with a student film, however: “Electronic Labyrinth: THX1138 4EB” (1967). “Electronic Labyrinth” provides a strikingly different vision of what the cinematic medium is capable of, particularly when compared to the modern fairytales of the “Star Wars” films. It too has a science fictional setting, but one without heroes or princesses, and instead delves into the dystopian potential of technology itself. Giving vision to a world

The Yardbirds, “I know. I know.” As the Gregorian chant of The Yardbirds track begins, the camera tracks across an extreme close up of electronics equipment, revealing the technocracy of the future. The rest of the film will oscillate between the ‘operators’ of this technocracy, and THX 1138’s attempted escape from the smooth, featureless white walls of a compound, presumably a world without desire.

Photo caption: Dan Natchsheim as a character known simply as 1138 attempts to escape a dystopian future society. Courtesy Library of Congress Collection. -

Walter Murch and the Art of Editing Film (2002) and Behind the Seen by Charles Koppelman (2005)

Born in 1943 to Canadian parents in New York City, Murch graduated Phi Beta Kappa from Johns Hopkins University with a B.A. in Liberal Arts, and subsequently from the Graduate Program of USC’s School of Cinema-Television. His 50-year career in cinema stretches back to 1969 (Francis Coppola’s The Rain People) and includes work on THX-1138, The Godfather I, II, and III, American Graffiti, The Conversation, Apocalypse Now, The English Patient, The Talented Mr. Ripley, Cold Mountain, and many other films with directors such as George Lucas, Fred Zinnemann, Philip Kaufman, Anthony Minghella, Kathryn Bigelow, Sam Mendes and Brad Bird. Murch's pioneering achievements in sound were acknowledged by Coppola in his 1979 Palme d'Or winner Apocalypse Now, for which Murch was granted, in a film-history first, the screen credit of Sound Designer. Murch has been nominated for nine Academy Awards (six for editing and three for sound) and has won three Oscars: for best sound on Apocalypse Now (for which he and his collaborators devised the now-standard 5.1 sound format), and for Best Film Editing and Best Sound for his work on The English Patient. Although this double win in 1997 was unprecedented in the history of Oscars, Murch had previously won a double BAFTA for best sound and best picture editing for The Conversation in 1974. The English Patient was, coincidentally, the first digitally-edited film to win an editing Oscar. Murch has also edited documentaries: Particle Fever (2013), on the search for the Higgs Boson (winner of the Grierson Award and the Stephen Hawking Medal for Science Communication), and his most recent work, the recently-completed Coup 53 (2019), an independently-financed feature-length documentary on western interference in the politics of Iran, which had its world premiere at the 2019 Telluride Film Festival. -

George Lucas and the Impact Star Wars Had on Modern Society

George Lucas and the Impact Star Wars Had on Modern Society. By: Rahmon Garcia. George Lucas is a very famous director who has made many different films, but the most famous film he ever made is Star Wars. The effect Star Wars had on modern society is remarkable, as is the way he created an amazing story from a very abstract idea. If you walked up to almost any person and asked whether they knew the Star Wars movie series, the answer would almost certainly be yes. His movies changed our modern society and the way we look at aspects of life. George Walton Lucas Jr. was born May 14, 1944, in Modesto, California. In his youth he attended the University of Southern California to study film. He was the screenwriter and director of his school project and first major film, THX 1138 4EB, which was his first step on the path to becoming a great director. Although George Lucas is mostly known for movies such as ‘Star Wars’ and ‘Indiana Jones’, he also made many other films, such as ‘Twice Upon A Time’, ‘The Land Before Time’, ‘Willow’, ‘Strange Magic’, etc. Even though we now think of Star Wars as a great movie series, when it was in the making it was very hard to picture that it would turn into such an incredible saga. From his high school days, George Lucas was obsessed with car racing and would often try to repair or build a new engine for his car, until one day when he was racing he got into a really bad accident and was nearly killed; he survived only because his seat ejected him after his seat belt broke. -

Press Kit (Dossier de presse)

Press kit. http://www.amr-geneve.ch/amr-jazz-festival (Compilation of the festival's groups available on request). Media contact: Leïla Kramis [email protected], tel: 022 716 56 37 / 078 793 50 72. AMR / Sud des Alpes, 10, rue des Alpes, 1201 Genève. T + 41 22 716 56 30 / F + 41 22 716 56 39. 35th AMR Jazz Festival: press kit. Table of contents: I. AMR in brief; II. Overview of the concerts; III. Artist files: PARALOG; JOE LOVANO QUARTET; PLAISTOW; J KLEBA -

6Th Annual Kaiser Permanente San Jose Jazz Winter Fest Presented By

***For Immediate Release: Thursday, January 14, 2016*** 6th Annual Kaiser Permanente San Jose Jazz Winter Fest Presented by Metro. Thursday, February 25 - Tuesday, March 8, 2016. Cafe Stritch, The Continental, Schultz Cultural Arts Hall at Oshman Family JCC (Palo Alto), Trianon Theatre, MACLA, Jade Leaf Eatery & Lounge and other venues in Downtown San Jose, CA. Event Info: sanjosejazz.org/winterfest. Tickets: $10 - $65. "Winter Fest has turned into an opportunity to reprise the summer's most exciting acts, while reaching out to new audiences with a jazz-and-beyond sensibility." –KQED Arts. National Headliners: John Scofield, Joe Lovano Quartet, Regina Carter, Nicholas Payton Trio, Delfeayo & Ellis Marsalis Quartet, Marquis Hill Blacktet, Bria Skonberg. Regional Artists: Jackie Ryan, J.C. Smith Band, Chester ‘CT’ Thompson. Jazz Beyond Series Co-Curated with Universal Grammar: KING, Kneedelus, Kadhja Bonet. Next Gen Bay Area Student Ensembles: Lincoln Jazz Band, SFJAZZ High School All Stars Combo, Homestead High School Jazz Combo, Los Gatos High School Jazz Band, San Jose State University Jazz Combo, San Jose Jazz High School All Stars. San Jose, CA -- Renowned for its annual Summer Fest, the iconic Bay Area institution San Jose Jazz kicks off 2016 with dynamic arts programming honoring the jazz tradition and ever-expanding definitions of the genre with singular concerts curated for audiences within the heart of Silicon Valley. Kaiser Permanente San Jose Jazz Winter Fest 2016 presented by Metro continues its steadfast commitment of presenting a diverse array of some of today’s most distinguished artists alongside leading edge emerging musicians with an ambitious lineup of more than 25 concerts from February 25 through March 8, 2016. -

Once Upon a Time in Hollywood

THE MAGAZINE FOR FILM & TELEVISION EDITORS, ASSISTANTS & POST-PRODUCTION PROFESSIONALS. THE SUMMER MOVIE ISSUE. IN THIS ISSUE: Once Upon a Time in Hollywood, PLUS John Wick: Chapter 3 – Parabellum, Rocketman, Toy Story 4 AND MUCH MORE! US $8.95 / Canada $8.95. QTR 2 / 2019 / VOL 69. PETITION FOR EDITORS RECOGNITION. The American Cinema Editors Board of Directors has been actively pursuing film festivals and awards presentations, domestic and international, that do not currently recognize the category of Film Editing. The Motion Picture Editors Guild has joined with ACE in an unprecedented alliance to reach out to editors and industry people around the world. The organizations listed on the petition already recognize cinematography and/or production design in their annual awards presentations. Given the essential role film editors play in the creative process of making a film, acknowledging them is long overdue. We would like to send that message in solidarity.

• Sundance Film Festival
• Shanghai International Film Festival, China
• San Sebastian Film Festival, Spain
• Byron Bay International Film Festival, Australia
• New York Film Critics Circle
• New York Film Critics Online
• National Society of Film Critics

We would like to thank the organizations that have recently added the Film Editing category to their Annual Awards:

• Durban International Film Festival, South Africa
• New Orleans Film Festival
• Tribeca Film Festival