Advanced Storage Area Network Design

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Connectrix Switch MDS-9000S Tech Specs

Specification Sheet: Connectrix MDS-9148S and MDS-9396S 16Gb/s Fibre Channel Switches. The Dell EMC Connectrix MDS 9000S Switch series supports up to 16 Gigabit per second (Gb/s) Fibre Channel performance, which accommodates mission-critical applications and today’s all-flash storage systems. Both models are NVMe over Fabric (NVMe oF) capable with no change required in the SAN. Connectrix MDS 16Gb/s Fibre Channel Switch Models. MDS-9148S: This switch scales from 12 to 48 line-rate 16 Gb/s Fibre Channel ports in a single compact one rack-unit (RU) form factor, providing high performance with exceptional flexibility at effective cost. The MDS-9148S is an ideal solution for a standalone Storage Area Network (SAN) in small departmental storage environments, a top-of-rack switch in medium-sized redundant fabrics, or an edge switch in larger-scale enterprise data center core-edge topologies. The MDS-9148S delivers speeds of 2, 4, 8 and 16 Gb/s, with 16 Gb/s of dedicated bandwidth for each port. Individual ports can be configured with either short or long wavelength optics. MDS-9396S: This 96-port switch is a highly reliable, flexible fabric switch. It combines high performance with exceptional flexibility and cost effectiveness. This powerful, compact two rack-unit (2RU) switch scales from 48 to 96 line-rate 16 Gb/s Fibre Channel ports. For ultimate flexibility, there are two MDS-9396S models to support options for airflow. You can choose port side intake (PSI), where air flows front to back, or port side exhaust (PSE), where air flows back to front.

Cisco Nexus 9000 Series NX-OS SAN Switching Configuration Guide, Release 9.3(X)

Cisco Nexus 9000 Series NX-OS SAN Switching Configuration Guide, Release 9.3(x). First Published: 2019-12-23. Last Modified: 2021-03-15. Americas Headquarters: Cisco Systems, Inc., 170 West Tasman Drive, San Jose, CA 95134-1706 USA, http://www.cisco.com, Tel: 408 526-4000, 800 553-NETS (6387), Fax: 408 527-0883. © 2020–2021 Cisco Systems, Inc. All rights reserved. Contents. Preface: Audience; Document Conventions; Related Documentation for Cisco Nexus 9000 Series Switches; Documentation Feedback; Communications, Services, and Additional Information. Chapter 1, New and Changed Information. Chapter 2, Hardware Support for SAN Switching. Chapter 3, Overview: Licensing Requirements; SAN Switching Overview; SAN Switching General Guidelines and Limitations. Chapter 4, Enabling FC/FCoE Switch Mode: Enabling FC/FCoE; Disabling FC/FCoE; Disabling LAN Traffic on an FCoE Link; Configuring the FC-Map; Configuring the Fabric Priority; Setting the Advertisement Interval. Chapter 5, Configuring FCoE: FCoE Topologies; Directly Connected CNA Topology; Remotely Connected CNA Topology; FCoE Best Practices; Directly Connected CNA Best Practice; Remotely Connected CNA Best Practice; Guidelines and Limitations; Configuring FC/FCoE; Perform TCAM Carving; Configuring LLDP; Configuring Default QoS; Configuring User Defined QoS; Configuring Traffic

Fibre Channel Storage Area Network Design Considerations for Deploying Virtualized SAP HANA

WHITE PAPER: Fibre Channel Storage Area Network Design Considerations for Deploying Virtualized SAP HANA. Business Case: Enterprises are striving to virtualize their mission-critical applications such as SAP HANA, Oracle Applications, and others to meet their goals in terms of performance, availability, and cost. The benefits of virtualization help organizations optimize and utilize resources, but they also bring new challenges. Often, organizations that move to virtualized environments still rely on storage systems that were not designed with the flexibility and ease of deployment and management that a virtualized data center requires. As a result, their application performance is impacted and they are forced to sacrifice efficiency and deployment time for the agility and cost advantages that virtualization offers. To compensate, organizations commonly over-provision storage to address latency and application performance; however, this increases costs and does not solve the need for the scalability, speed, and resilience required by virtualized environments. Enterprises need a storage and networking solution that is easy to deploy and user-friendly while also supporting application performance and evolving as the environment scales. Solution Overview: This solution provides a reference architecture to deploy virtualized SAP HANA in a VMware environment based on VMware vSphere and VMware NSX, offering customers agility, resource Scope: This solution focuses on virtualized SAP HANA with a TDI environment. Best practices for virtualizing SAP HANA for scale-up and scale-out configurations HANA Tailored Data Center Integration (TDI)-Certified hardware. Definition and testing of this solution followed the process and best practices as per Application Workload Guidance (formerly VMware Validated Design).

Introduction to Storage Area Networks

Introduction to Storage Area Networks. Jon Tate, Pall Beck, Hector Hugo Ibarra, Shanmuganathan Kumaravel, Libor Miklas. Redbooks, International Technical Support Organization. December 2017. SG24-5470-08. Note: Before using this information and the product it supports, read the information in “Notices” on page ix. Ninth Edition (December 2017). This edition applies to the products in the IBM Storage Area Networks (SAN) portfolio. © Copyright International Business Machines Corporation 2017. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks; Summary of changes; December 2017, Ninth Edition. Chapter 1. Introduction: 1.1 Networks; 1.1.1 The importance of communication; 1.2 Interconnection models; 1.2.1 The open systems interconnection model; 1.2.2 Translating the OSI model to the physical world; 1.3 Storage; 1.3.1 Storing data; 1.3.2 Redundant Array of Independent Disks; 1.4 Storage area networks; 1.5 Storage area network components; 1.5.1 Storage area network connectivity; 1.5.2 Storage area network storage; 1.5.3 Storage area network servers; 1.6 The importance of standards or models. Chapter 2. Storage area networks: 2.1 Storage area networks; 2.1.1 The problem; 2.1.2 Requirements

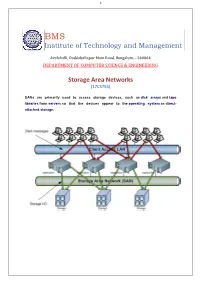

Institute of Technology and Management Storage Area Networks

BMS Institute of Technology and Management, Avalahalli, Doddaballapur Main Road, Bengaluru – 560064. DEPARTMENT OF COMPUTER SCIENCE & ENGINEERING. Storage Area Networks (17CS754). SANs are primarily used to access storage devices, such as disk arrays and tape libraries, from servers so that the devices appear to the operating system as direct-attached storage. STORAGE AREA NETWORKS [As per Choice Based Credit System (CBCS) scheme] (Effective from the academic year 2017 - 2018) SEMESTER – VII. Subject Code: 17CS754. IA Marks: 40. Number of Lecture Hours/Week: 3. Exam Marks: 60. Total Number of Lecture Hours: 40. Exam Hours: 03. CREDITS – 03. Module – 1 (Teaching Hours: 8 Hours): Storage System. Introduction to evolution of storage architecture, key data centre elements, virtualization, and cloud computing. Key data centre elements – host (or compute), connectivity, storage, and application in both classic and virtual environments. RAID implementations, techniques, and levels along with the impact of RAID on application performance. Components of intelligent storage systems and virtual storage provisioning and intelligent storage system implementations. Module – 2 (Teaching Hours: 8 Hours): Storage Networking Technologies and Virtualization. Fibre Channel SAN components, connectivity options, and topologies including the access protection mechanism “zoning”, FC protocol stack, addressing and operations, SAN-based virtualization and VSAN technology, iSCSI and FCIP (Fibre Channel over IP) protocols for storage access over IP network, converged protocol FCoE and its components, Network Attached Storage (NAS) - components, protocol and operations, file-level storage virtualization, object-based storage and unified storage platform. Module – 3 (Teaching Hours: 8 Hours): Backup, Archive, and Replication. This unit focuses on information availability and business continuity solutions in both virtualized and non-virtualized environments.

HPE C-Series SN6500C Multiservice Switch Overview

QuickSpecs: HPE C-series SN6500C Multiservice Switch. Overview: HPE SN6500C 16Gb FC/FCIP/FCoE Multi-service Switch (MDS 9250i). The HPE SN6500C 16Gb Multi-service Switch (MDS 9250i) brings the most flexible storage networking capability available in the fabric switch market today. Sharing a consistent architecture with the MDS 9700 (SN8500C) Directors, the HPE SN6500C 16Gb Multi-service Switch integrates both Fibre Channel and IP Storage Services in a single system to allow maximum flexibility in user configurations. With twenty 16-Gbps Fibre Channel ports active by default, two 10 Gigabit Ethernet IP Storage Services ports, and eight 10 Gigabit Ethernet FCoE ports, the SN6500C 16Gb Multi-service Switch is a comprehensive package, ideally suited for enterprise storage networks that require high-performance SAN extension or cost-effective IP Storage connectivity for applications such as Business Continuity using Fibre Channel over IP or iSCSI host attachment to Fibre Channel storage devices. Also, using the eight 10 Gigabit Ethernet FCoE ports, the SN6500C 16Gb Multi-service Switch attaches to directly connected FCoE and Fibre Channel storage devices and supports multi-tiered unified network fabric connectivity directly over FCoE. Standard Features. Key Features and Benefits: Please note that some features require the optional HPE SN6500C Enterprise Package license to be activated. • Integrated Fibre Channel and IP Storage Services in a single optimized form factor: supports up to forty 16-Gbps Fibre Channel interfaces for high-performance storage area network (SAN) connectivity, plus two 10 Gigabit Ethernet ports for Fibre Channel over IP (FCIP) and Small Computer System Interface over IP (iSCSI) storage services, plus eight 10 Gigabit Ethernet FCoE ports.

Cisco Mds 9216A Multilayer Fabric Switch

DATA SHEET: CISCO MDS 9216A MULTILAYER FABRIC SWITCH. PRODUCT OVERVIEW: Scalable 16-Port Multilayer Fabric Switch. The Cisco MDS 9216A Multilayer Fabric Switch (Figure 1) brings new capability and investment protection to the fabric switch market. Sharing a consistent architecture with the Cisco MDS 9500 Series, the Cisco MDS 9216A combines multilayer intelligence with a modular chassis, making it an extremely capable and flexible fabric switch. Starting with sixteen 2-Gbps Fibre Channel ports, the expansion slot on the Cisco MDS 9216A allows for the addition of any current or future Cisco MDS 9000 Family module for up to 64 total ports. Figure 1. Cisco MDS 9216A Multilayer Fabric Switch. KEY FEATURES AND BENEFITS: The Cisco® MDS 9216A Multilayer Fabric Switch is designed for building mission-critical enterprise storage area networks (SANs) where scalability, multilayer capability, resiliency, robust security, and ease of management are imperative. The Cisco MDS 9216A offers the following important features: ● Compelling economics: A modular design provides a 3-rack unit (RU) base system consisting of sixteen 2-Gbps Fibre Channel ports and can be expanded with a variety of optional switching modules to up to 64 total Fibre Channel ports. ● Cost-effective design: The Cisco MDS 9216A offers advanced management tools for overall lower total cost of ownership (TCO). It includes virtual SAN (VSAN) technology for hardware-enforced isolated environments within a single physical fabric for secure sharing of physical infrastructure, further decreasing TCO. ● Multiprotocol and multitransport: The multilayer architecture of the Cisco MDS 9216A helps enable a consistent feature set over a protocol-independent switch fabric and easily integrates Fibre Channel, IBM Fiber Connection (FICON), Small Computer System Interface over IP (iSCSI), and Fibre Channel over IP (FCIP) in one system.

HPE C-Series SN6620C Fibre Channel Switch Overview

QuickSpecs HPE C-series SN6620C Fibre Channel Switch Overview HPE C-series SN6620C Fibre Channel Switch The HPE C-series SN6620C Fibre Channel Switch (MDS 9148T) is the next generation 48-port 32Gbps Fibre Channel Switch providing high-speed Fibre Channel connectivity from the server rack to the SAN core. It empowers small, midsize, and large enterprises that are rapidly deploying cloud-scale applications providing the benefits of greater bandwidth, scale, and consolidation. The switch allows seamless transition to Fibre Channel Non-Volatile Memory Express (FC-NVMe) workloads whenever available without any hardware upgrade in the SAN. For flexibility, the HPE SN6620C Fibre Channel Switch scales from 24-48 ports. Additionally, investing in this switch for the lower-speed (8 or 16 Gbps) server rack gives you the flexibility to upgrade to 32 Gbps performance in the future. The SN6620C can be provisioned, managed, monitored, and troubleshot using Cisco Data Center Network Manager (DCNM), which currently manages the entire suite of Cisco data center products. Powered by C-series MDS 9000 NX-OS Software, it includes storage networking features and functions and is compatible with C-series SN8500C (MDS 9700) Series Multilayer Directors, C-series SN6610C (MDS 9132T) Multilayer Fabric Switches, C-series SN6010C (MDS 9148S) Multilayer Fabric Switches and C-series SN6500C (MDS 9250i) Multi-service Fabric Switches, providing transparent, end-to-end service delivery in core-edge deployments. 
Standard Features. Key Features and Benefits: • High performance for AFA and virtualized workloads: up to 1536 Gbps of aggregate bandwidth in a 1 rack unit (RU); up to 48 autosensing Fibre Channel ports capable of speeds of 4/8/16/32 Gbps; pay-as-you-grow flexibility in increments of 8 ports with on-demand port activation licenses; users can deploy them with 32Gb, 16Gb or 8Gb optics to accommodate their budget while being fully prepared for tomorrow.
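
The headline figures in the SN6620C excerpt above can be cross-checked with simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and it assumes the aggregate figure is simply ports times the top port speed, and that port activation starts at the 24-port base quoted in the excerpt.

```python
def aggregate_bandwidth_gbps(ports: int, port_speed_gbps: int) -> int:
    """Aggregate bandwidth, assumed here to be ports x per-port line rate."""
    return ports * port_speed_gbps


def activation_steps(base_ports: int, max_ports: int, increment: int) -> list[int]:
    """Port counts reachable by adding fixed-size port activation licenses."""
    return list(range(base_ports, max_ports + 1, increment))


if __name__ == "__main__":
    # 48 ports at 32 Gbps each
    print(aggregate_bandwidth_gbps(48, 32))
    # 24-port base, grown in 8-port increments up to 48
    print(activation_steps(24, 48, 8))
```

Under these assumptions the product of 48 ports and 32 Gbps matches the 1536 Gbps quoted in the excerpt, and the 8-port increments give three upgrade steps between the 24-port base and the fully populated 48-port switch.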

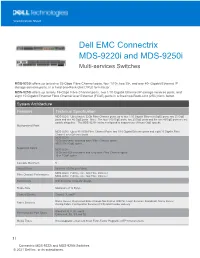

Connectrix Mds-9250I Switch

Specification Sheet: Dell EMC Connectrix MDS-9220i and MDS-9250i Multi-services Switches. MDS-9220i offers up to twelve 32-Gbps Fibre Channel ports, four 1/10-, two 25-, and one 40-Gigabit Ethernet IP storage services ports, in a fixed one-Rack-Unit (1RU) form factor. MDS-9250i offers up to forty 16-Gbps Fibre Channel ports, two 1/10 Gigabit Ethernet IP storage services ports, and eight 10 Gigabit Ethernet Fibre Channel over Ethernet (FCoE) ports in a fixed two-Rack-Unit (2RU) form factor. System Architecture Features and Technical Specification. Multi-protocol Ports: MDS-9220i: up to twelve 32Gb Fibre Channel ports, up to four 1/10 Gigabit Ethernet (GigE) ports, two 25 GigE ports and one 40 GigE port (Note: the four 1/10 GigE ports, two 25 GigE ports and the one 40 GigE port cannot all be used at the same time; the MDS-9220i can be configured to support one of these GigE speeds); MDS-9250i: up to forty 16Gb Fibre Channel ports, two 1/10 Gigabit Ethernet ports and eight 10 Gigabit Fibre Channel over Ethernet ports. Supported Optics: MDS-9220i: 32Gb shortwave and long wave Fibre Channel optics, 40/25/10/1 GigE optics; MDS-9250i: 16Gb and 8Gb shortwave and long wave Fibre Channel optics, 10 or 1 GigE optics. Cascade: Maximum 5. Virtual SANs: Up to 80 VSANs per Fabric. Fibre Channel Performance: MDS-9220i: full line rate 32G Fibre Channel; MDS-9250i: full line rate 16G Fibre Channel. Switch Core: Non-blocking, cross-bar design. Frame Size: Maximum 2112 Bytes. Class of Service: Class 2, 3, and F. Fabric Services: Name Server, Registered State Change Notification (RSCN), Login Services, Broadcast, Name Server Zoning, Fabric Configuration Services (FCS) and In-order delivery. Fibre Channel Port Types: Standard: E, F, FL and B; Enhanced: SD, ST and TE. Media Types: Hot-swappable enhanced Small Form-Factor Pluggable (SFP+) transceivers. Connectrix MDS-9222i and MDS-9250i Switches © 2021 Dell Inc.

Cisco MDS 9000 Family 4-Gbps Fibre Channel Switching Modules

Data Sheet: Cisco MDS 9000 Family 4-Gbps Fibre Channel Switching Modules. Cisco® MDS 9000 Family 4-Gbps Fibre Channel switching modules deliver the intelligence and advanced features required to build truly scalable storage area networks (SANs). Delivering twice the link bandwidth of 2-Gbps Fibre Channel products, the Cisco Fibre Channel switching modules include hardware-enabled innovations designed to dramatically improve performance, scalability, availability, security, and manageability of storage networks, resulting in increased utility and lower total cost of ownership (TCO). Cisco MDS 9000 Family 4-Gbps Fibre Channel switching modules are available in three configurations. The Cisco 12-Port 4-Gbps Fibre Channel Switching Module delivers the highest performance for the most demanding storage networking applications. The Cisco 24-Port 4-Gbps Fibre Channel Switching Module delivers the optimal performance and port density for connection of today’s high-performance servers and storage arrays. The Cisco 48-Port 4-Gbps Fibre Channel Switching Module is the ideal solution for consolidating large numbers of server connections into the smallest number of SAN switches, in many cases eliminating the need for core-edge topologies. The Cisco MDS 9000 Family 4-Gbps Fibre Channel switching modules are compatible with all MDS 9500 Series Multilayer Directors as well as MDS 9216A and MDS 9216i multilayer fabric switches, providing outstanding value and investment protection. Product Overview: 12-Port 4-Gbps Fibre Channel Switching Module. For the most demanding storage networking environments, the Cisco MDS 9000 Family 12-Port 4-Gbps Fibre Channel Switching Module delivers uncompromising performance. The 4-Gbps ports deliver up to 96 Gbps of full-duplex bandwidth, making the Cisco 12-Port Fibre Channel Switching Module ideal for attachment of the highest performance 4-Gbps-enabled storage subsystems and for ISL connections between switches.
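
The 96 Gbps figure for the 12-port module is consistent with counting both directions of every port. A minimal sketch of that calculation follows; the function name is hypothetical, and the assumption that the data sheet counts transmit plus receive on each port is an interpretation, not something the excerpt states.

```python
def full_duplex_bandwidth_gbps(ports: int, port_speed_gbps: int) -> int:
    """Full-duplex bandwidth, assuming each port is counted once per direction."""
    directions = 2  # transmit and receive
    return ports * port_speed_gbps * directions


if __name__ == "__main__":
    # 12 ports x 4 Gbps x 2 directions
    print(full_duplex_bandwidth_gbps(12, 4))
```

The excerpt gives no bandwidth figure for the 24-port and 48-port modules, so the same formula should not be assumed to apply to them.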

Fibre Channel Never Dies, Inside Sans

Fibre Channel Never Dies. Inside SANs. Learn the differentiating features of Fibre Channel. Explore how Fibre Channel compares to other storage networking protocols. Understand why Fibre Channel is here to stay. By Juan Tarrio. About Brocade: Brocade, a Broadcom Inc. Company, is the proven leader in Fibre Channel storage networks that serve as the foundation for virtualized, all-flash data centers. Brocade Fibre Channel solutions deliver innovative, high-performance networks that are highly resilient and easier to deploy, manage and scale for the most demanding environments. The network matters for storage, and Brocade Fibre Channel storage networking solutions are the most trusted, widely deployed network infrastructure for enterprise storage. www.broadcom.com Table of Contents. Chapter 1, The Multiple Deaths of Fibre Channel: A Little Backstory; The Convergence Wars; The Converged Promised Land; The Cycle of Life (and Technology). Chapter 2, The Flash Revolution and the NVMe Era - Fibre Channel Plays a Role: The Flash Revolution; The SAN Déjà Vu

The 2015 SNIA Dictionary Was Underwritten by the Generous Contributions Of

SNIA Dictionary. SNIA: Advancing Storage and Information Technology. The SNIA Dictionary contains terms and definitions related to storage and other information technologies, and is the storage networking industry’s most comprehensive attempt to date to arrive at a common body of terminology for the technologies it represents. The terms go through a rigorous technical review and approval process by the SNIA Technical Council to assure their accuracy. The SNIA Technical Council is a group of industry technical experts elected by the members of the SNIA to guide the SNIA’s technical efforts. Their extensive individual technical backgrounds cover all aspects of storage. SNIA Dictionary, 2015 European Edition: A glossary of storage networking, data, and information management terminology. Storage Networking Industry Association Europe Ltd., Suite 4B Greencoat House, Francis Street, London SW1P 1DH, United Kingdom, www.snia-europe.org. Publication sponsors: The 2015 SNIA Dictionary was underwritten by the generous contributions of: If your organization is a SNIA Europe member and is interested in sponsoring the 2016 edition of this dictionary, please contact [email protected]. Storage Networking Industry Association Europe: SNIA Europe has supported the storage industry for more than 15 years. As a regional affiliate of the global SNIA organization, SNIA Europe’s mission is to empower organizations to translate data and information into business value by promoting the adoption of enabling technologies and standards. With a large base of member companies spanning the global storage market, SNIA Europe is a true industry catalyst connecting IT professionals to enable knowledge exchange and thought-leadership for the benefit of both the industry and storage professionals.