Development of a Conversing and Body Temperature Scanning Autonomously Navigating Robot to Help Screen for COVID-19 Ryan H

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Barometer of Mobile Internet Connections in Russia Publication of Th May 06 , 2020

Barometer of Mobile Internet Connections in Russia Publication of th May 06 , 2020 2019 Report nPerf is a trademark owned by nPerf SAS, 87 rue de Sèze 69006 LYON – France. Contents 1 Summary of results ...................................................................................................................... 2 1.1 Summary table and nPerf score, all technologies combined .............................................. 2 1.2 Our analysis ........................................................................................................................... 3 2 Overall results 2G/3G/4G ............................................................................................................. 4 2.1 Data amount and distribution ............................................................................................... 4 2.2 Success rate 2G/3G/4G ........................................................................................................ 4 2.3 Download speed 2G/3G/4G .................................................................................................. 5 2.4 Upload speed 2G/3G/4G ....................................................................................................... 6 2.5 Latency 2G/3G/4G ................................................................................................................ 7 2.6 Browsing test 2G/3G/4G....................................................................................................... 8 2.7 Streaming test 2G/3G/4G .................................................................................................... -

Electronic 3D Models Catalogue (On July 26, 2019)

Electronic 3D models Catalogue (on July 26, 2019) Acer 001 Acer Iconia Tab A510 002 Acer Liquid Z5 003 Acer Liquid S2 Red 004 Acer Liquid S2 Black 005 Acer Iconia Tab A3 White 006 Acer Iconia Tab A1-810 White 007 Acer Iconia W4 008 Acer Liquid E3 Black 009 Acer Liquid E3 Silver 010 Acer Iconia B1-720 Iron Gray 011 Acer Iconia B1-720 Red 012 Acer Iconia B1-720 White 013 Acer Liquid Z3 Rock Black 014 Acer Liquid Z3 Classic White 015 Acer Iconia One 7 B1-730 Black 016 Acer Iconia One 7 B1-730 Red 017 Acer Iconia One 7 B1-730 Yellow 018 Acer Iconia One 7 B1-730 Green 019 Acer Iconia One 7 B1-730 Pink 020 Acer Iconia One 7 B1-730 Orange 021 Acer Iconia One 7 B1-730 Purple 022 Acer Iconia One 7 B1-730 White 023 Acer Iconia One 7 B1-730 Blue 024 Acer Iconia One 7 B1-730 Cyan 025 Acer Aspire Switch 10 026 Acer Iconia Tab A1-810 Red 027 Acer Iconia Tab A1-810 Black 028 Acer Iconia A1-830 White 029 Acer Liquid Z4 White 030 Acer Liquid Z4 Black 031 Acer Liquid Z200 Essential White 032 Acer Liquid Z200 Titanium Black 033 Acer Liquid Z200 Fragrant Pink 034 Acer Liquid Z200 Sky Blue 035 Acer Liquid Z200 Sunshine Yellow 036 Acer Liquid Jade Black 037 Acer Liquid Jade Green 038 Acer Liquid Jade White 039 Acer Liquid Z500 Sandy Silver 040 Acer Liquid Z500 Aquamarine Green 041 Acer Liquid Z500 Titanium Black 042 Acer Iconia Tab 7 (A1-713) 043 Acer Iconia Tab 7 (A1-713HD) 044 Acer Liquid E700 Burgundy Red 045 Acer Liquid E700 Titan Black 046 Acer Iconia Tab 8 047 Acer Liquid X1 Graphite Black 048 Acer Liquid X1 Wine Red 049 Acer Iconia Tab 8 W 050 Acer -

![Arxiv:1910.06663V1 [Cs.PF] 15 Oct 2019](https://docslib.b-cdn.net/cover/5599/arxiv-1910-06663v1-cs-pf-15-oct-2019-1465599.webp)

Arxiv:1910.06663V1 [Cs.PF] 15 Oct 2019

AI Benchmark: All About Deep Learning on Smartphones in 2019 Andrey Ignatov Radu Timofte Andrei Kulik ETH Zurich ETH Zurich Google Research [email protected] [email protected] [email protected] Seungsoo Yang Ke Wang Felix Baum Max Wu Samsung, Inc. Huawei, Inc. Qualcomm, Inc. MediaTek, Inc. [email protected] [email protected] [email protected] [email protected] Lirong Xu Luc Van Gool∗ Unisoc, Inc. ETH Zurich [email protected] [email protected] Abstract compact models as they were running at best on devices with a single-core 600 MHz Arm CPU and 8-128 MB of The performance of mobile AI accelerators has been evolv- RAM. The situation changed after 2010, when mobile de- ing rapidly in the past two years, nearly doubling with each vices started to get multi-core processors, as well as power- new generation of SoCs. The current 4th generation of mo- ful GPUs, DSPs and NPUs, well suitable for machine and bile NPUs is already approaching the results of CUDA- deep learning tasks. At the same time, there was a fast de- compatible Nvidia graphics cards presented not long ago, velopment of the deep learning field, with numerous novel which together with the increased capabilities of mobile approaches and models that were achieving a fundamentally deep learning frameworks makes it possible to run com- new level of performance for many practical tasks, such as plex and deep AI models on mobile devices. In this pa- image classification, photo and speech processing, neural per, we evaluate the performance and compare the results of language understanding, etc. -

Samsung Products (Wholesale and Retail)

PRICE LIST FOR SAMSUNG PRODUCTS (WHOLESALE AND RETAIL) Samsung S-Series Wholesale Retail Price Samsung Galaxy Note 20 Ultra 256GB 8GB $700 $1,100 Samsung Galaxy Note 20 256GB 8GB $600 $950 Samsung Galaxy S20 Ultra 128GB 12GB $600 $950 Samsung Galaxy S20+ 28GB 8GB $550 $850 Samsung Galaxy S20 128GB 8GB $500 $800 Samsung Galaxy Note10+ 5G 256GB $600 $950 Samsung Galaxy Note10+ 256GB $550 $850 Samsung Galaxy Note10 5G 256GB $500 $800 Samsung Galaxy Note10 256GB $450 $750 Samsung Galaxy S10 5G 128GB $500 $850 Samsung Galaxy S10+ 128GB $350 $600 Samsung Galaxy S10 128GB $260 $440 Samsung Galaxy Note 9 128GB $300 $500 Samsung Galaxy Note 8 64GB $280 $450 Samsung Galaxy S9 64GB $250 $420 Samsung Galaxy S8+ 64GB $220 $400 Samsung Galaxy S8 64GB $200 $350 Samsung Galaxy Note 7 64GB $180 $300 Samsung Galaxy S7 Edge 64GB $180 $300 Samsung Galaxy S7 64GB $150 $280 Samsung Galaxy S6 Edge + 64GB $120 $250 Samsung Galaxy S6 Edge Unlocked 64GB $110 $230 Samsung Galaxy S6 Unlocked 64GB $100 $200 Samsung A-Series Wholesale Retail Price Samsung Galaxy A70s 128GB $350 $550 Samsung Galaxy A20s 64GB $90 $150 Samsung Galaxy A30s 128GB $220 $400 Samsung Galaxy A50s 128GB $230 $430 Samsung Galaxy A90 5G 128GB $230 $430 Samsung Galaxy A90 128GB $250 $450 Samsung Galaxy A10s 64GB $100 $200 Samsung Galaxy A80 64GB $150 $250 Samsung Galaxy A70 64GB $120 $170 Samsung Galaxy A60 64GB $90 $150 Samsung Galaxy A50 128GB $120 $170 Samsung Galaxy A40 64GB $210 $380 Samsung Galaxy A30 64GB $70 $120 Samsung Galaxy A20e 64GB $170 $270 Samsung Galaxy A20 64GB $60 $100 Samsung -

Get Additional ₹ 3000 on Exchange. Kind Offer

Offer Title: Get Additional ₹3000 on Exchange Kind Offer: Exchange price is subject to physical check. Offer Description: Upgrade to the latest Galaxy A72 and get additional ₹3000 on exchange of your old Samsung smartphone device. Offer available on select Samsung devices only and availability of the Offer is subject to area pin codes of customers. Exchange for devices purchased via Samsung.com - Important Information: 1. You can exchange your old select Samsung smartphone device with Galaxy A72. 2. The Exchange Offer is provided to you by Samsung in collaboration with “Manak Waste Management Pvt Ltd., (“Cashify”)” and the evaluation and exchange of your old Samsung smartphone device shall be subject to the terms and conditions of Cashify, for details refer https://www.cashify.in/terms-of-use & https://www.cashify.in/terms-conditions. 3. Exchange can be done on "Samsung Shop". Galaxy A72 Galaxy A72 (8/128 GB) (8/256 GB) ₹ 3000 ₹ 3000 4. Please give correct inputs, with regards to screen condition & availability of accessories, at the time of evaluating your old Smartphone. Any incorrect information provided by you shall result in cancellation/rejection of the Exchange Offer. In such scenario, you shall not be eligible to receive the exchange value and/or any benefit/Offer from Samsung or the exchange partner in lieu of the Exchange Offer or exchange value and no claims shall be entertained in this regard. 5. Exchange Price shown is the Maximum Price subject to physical check at the time of exchange. 6. The screen condition and accessories declared by you will be verified at the time of exchange. -

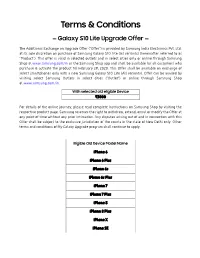

Terms & Conditions

Terms & Conditions — Galaxy S10 Lite Upgrade Offer — The Additional Exchange on Upgrade Offer ("Offer") is provided by Samsung India Electronics Pvt. Ltd. at its sole discretion on purchase of Samsung Galaxy S10 Lite (all variants) (hereinafter referred to as "Product"). This offer is valid in selected outlets and in select cities only or online through Samsung Shop at www.samsung.com/in or the Samsung Shop app and shall be available for all customers who purchase & activate the product till February 29, 2020. This Offer shall be available on exchange of select smartphones only with a new Samsung Galaxy S10 Lite (All variants). Offer can be availed by visiting select Samsung Outlets in select cities ("Outlet") or online through Samsung Shop at www.samsung.com/in. With selected old eligible Device ₹3000 For details of the online journey, please read complete instructions on Samsung Shop by visiting the respective product page. Samsung reserves the right to withdraw, extend, annul or modify the Offer at any point of time without any prior intimation. Any disputes arising out of and in connection with this Offer shall be subject to the exclusive jurisdiction of the courts in the state of New Delhi only. Other terms and conditions of My Galaxy Upgrade program shall continue to apply. Eligible Old Device Model Name iPhone 6 iPhone 6 Plus iPhone 6s iPhone 6s Plus iPhone 7 iPhone 7 Plus iPhone 8 iPhone 8 Plus iPhone X iPhone SE iPhone XR iPhone Xs iPhone Xs Max iPhone 11 iPhone 11 Pro iPhone 11 Max Google Pixel Google Pixel Google Pixel 2 Google -

Get up to Additional ₹ 10000 on Exchange. Kind Offer

Offer Title: Get Up to Additional ₹ 10000 on Exchange. Kind Offer: Exchange price is subject to physical check. Offer Description: Upgrade to the latest Galaxy S21 | S21 Ultra and get up to additional ₹ 10000 on exchange of your old Samsung smartphone device. Offer available on select devices only and availability of the Offer is subject to area pin codes of customers. Exchange for devices purchased via Samsung.com - Important Information: 1. You can exchange your old select Samsung smartphone device with Galaxy S21 | S21 Ultra. 2. The Exchange Offer is provided to you by Samsung in collaboration with “Manak Waste Management Pvt Ltd., (“Cashify”) “ and the evaluation and exchange of your old Samsung smartphone device shall be subject to the terms and conditions of Cashify, for details refer https://www.cashify.in/terms-of-use & https://www.cashify.in/terms-conditions 3. Exchange can be done on "Samsung Shop". Model Normal Upgrade Bonus Focus Model Upgrade Bonus Galaxy S21 ₹ 5000 ₹ 5000 Galaxy S21 Ultra ₹ 7000 ₹ 10000 4. Please give correct inputs, with regards to screen condition & availability of accessories, at the time of evaluating your old Smartphone. Any incorrect information provided by you shall result in cancellation/rejection of the Exchange Offer. In such scenario, you shall not be eligible to receive the exchange value and/or any benefit/Offer from Samsung or the exchange partner in lieu of the Exchange Offer or exchange value and no claims shall be entertained in this regard. 5. Exchange Price shown is the Maximum Price subject to physical check at the time of exchange. -

Størrelsesskema for Universal Smartphone Holdere

Størrelsesskema for universal smartphone holdere iPhone 6 INCH 6.5 INCH > 6.5 INCH Apple iPhone 11 Pro 5.8" Apple iPhone 11 Pro Max 6.5" iPhone XS 5.8" Apple iPhone XS Max 6.5" iPhone X 5.8" Apple iPhone XR 6.1" iPhone 8 Plus 5.5" Apple iPhone 11 6.1" iPhone 8 4.7" iPhone 7 Plus 5.5" iPhone 7 4.7" iPhone SE 4.0" iPhone 6s Plus 5.5" iPhone 6s 4.7" iPhone 6 Plus 5.5" iPhone 6 4.7" iPhone 5s 4.0" iPhone 5c 4.0" iPhone 5 4.0" iPhone 4s 3.5" Samsung 6 INCH 6.5 INCH > 6.5 INCH Samsung Galaxy Xcover 4s 5.0" Samsung Galaxy A20s 6.5" Samsung Galaxy A70s 6.7" Samsung Galaxy A2 Core 5.0" Samsung Galaxy M30s 6.4" Samsung Galaxy Fold 5G 7.3" Samsung Galaxy S10e 5.8" Samsung Galaxy M10s 6.4" Samsung Galaxy Fold 7.3" Samsung Galaxy A40 5.9" Samsung Galaxy A30s 6.4" Samsung Galaxy A90 5G 6.7" Samsung Galaxy A20e 5.8" Samsung Galaxy A50s 6.4" Samsung Galaxy Note10+ 5G 6.8" Samsung Galaxy J2 Core 5.0" Samsung Galaxy Note10 5G 6.3" Samsung Galaxy Note10+ 6.8" Samsung Galaxy On6 5.6" Samsung Galaxy Note10 6.3" Samsung Galaxy S10 5G 6.7" Samsung Galaxy A10s 6.2" Samsung Galaxy A80 6.7" Samsung Galaxy A10e 5.83" Samsung Galaxy A70 6.7" Samsung Galaxy S10+ 6.4" Samsung Galaxy S10 6.1" Samsung Galaxy M40 6.3" Samsung Galaxy M30 6.4" Samsung Galaxy M20 6.3" Samsung Galaxy M10 6.22" Samsung Galaxy A60 6.3" Samsung Galaxy A50 6.4" Samsung Galaxy A30 6.4" Samsung Galaxy A20 6.4" Samsung Galaxy A10 6.2" Samsung Galaxy A8s 6.4" Samsung Galaxy A6s 6.0" Samsung Galaxy A9 6.3" Samsung Galaxy A7 6.0" Samsung Galaxy Note9 6.4" Samsung Galaxy J6+ 6.0" Samsung Galaxy J4 -

SKU EAN Brand Description Model

SKU EAN Brand Description Model IT-20001 8720153552486 Impact Tempered Glass Apple iPhone 5/5S/SE IT-20002 8720153552493 Impact Tempered Glass Apple iPhone 6/6S/7/8 IT-20003 8720153552509 Impact Curved Glass Apple iPhone 6/6S/7/8 IT-20004 8720153552516 Impact Tempered Glass Apple iPhone 6/6S/7/8 Plus IT-20005 8720153552523 Impact Curved Glass Apple iPhone 6/6S/7/8 Plus IT-20006 8720153552530 Impact Tempered Glass Apple iPhone X/XS With Applicator IT-20007 8720153552547 Impact Curved Glass Apple iPhone X/XS/11 Pro IT-20008 8720153552554 Impact Tempered Glass Apple iPhone XR With Applicator IT-20009 8720153552561 Impact Curved Glass Apple iPhone XR/11 IT-20010 8720153552578 Impact Tempered Glass Apple iPhone XS Max With Applicator IT-20011 8720153552585 Impact Curved Glass Apple iPhone X/XS/11 Pro Max IT-20012 8720153552592 Impact Tempered Glass Huawei Mate 20 IT-20013 8720153552608 Impact Tempered Glass Huawei Mate 20 Lite IT-20014 8720153552615 Impact Curved Glass Huawei Mate 20 Pro IT-20015 8720153552622 Impact Tempered Glass Huawei P Smart (2019) IT-20016 8720153552639 Impact Tempered Glass Huawei P Smart Plus (2019) IT-20017 8720153552646 Impact Tempered Glass Huawei P20 IT-20018 8720153552653 Impact Tempered Glass Huawei P20 Lite IT-20019 8720153552660 Impact Tempered Glass Huawei P20 Pro IT-20020 8720153552677 Impact Tempered Glass Huawei P30 With Applicator IT-20021 8720153552684 Impact Tempered Glass Huawei P30 Lite With Applicator IT-20022 8720153552691 Impact Curved Glass Huawei P30 Pro With Applicator IT-20023 8720153552707 Impact -

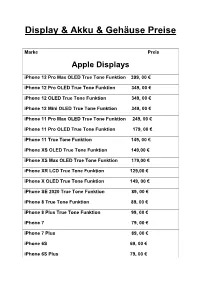

Display & Akku & Gehäuse Preise

Display & Akku & Gehäuse Preise Marke Preis Apple Displays iPhone 12 Pro Max OLED True Tone Funktion 399, 00 € iPhone 12 Pro OLED True Tone Funktion 349, 00 € iPhone 12 OLED True Tone Funktion 349, 00 € iPhone 12 Mini OLED True Tone Funktion 349, 00 € iPhone 11 Pro Max OLED True Tone Funktion 249, 00 € iPhone 11 Pro OLED True Tone Funktion 179, 00 € iPhone 11 True Tone Funktion 149, 00 € iPhone XS OLED True Tone Funktion 149,00 € iPhone XS Max OLED True Tone Funktion 179,00 € iPhone XR LCD True Tone Funktion 129,00 € iPhone X OLED True Tone Funktion 149, 00 € iPhone SE 2020 True Tone Funktion 89, 00 € iPhone 8 True Tone Funktion 89, 00 € iPhone 8 Plus True Tone Funktion 99, 00 € iPhone 7 79, 00 € iPhone 7 Plus 89, 00 € iPhone 6S 69, 00 € iPhone 6S Plus 79, 00 € iPhone 6 59, 00 € iPhone 6 Plus 69, 00 € iPhone SE 59, 00 € iPhone 5 S 59,00 € iPhone 5C 59, 00 € iPhone 5 59,00 € iPhone 4S 59, 00 € iPhone 4 59, 00 € iPhone 4 &4S Farbeumbau 69, 00 € iPhone 3G & 3GS 39, 00 € iPod 2G 49, 00 € iPod 4G 59 ,00 € iPod 5G 99, 00 € iPod 6G 99, 00 € iPad 2 79,00 € iPad 3 79,00 € iPad 4 79,00 € iPad Air 89, 00 € iPad Air 2 179, 00 € iPad Air 3 179, 00 € iPad Pro 9;7 239, 00 € iPad Pro 10,5 269, 00 € iPad Pro 12.9 – 2020 399, 00 € iPad Pro 12.9 - 2018 399, 00 € iPad Pro 11- 2020 379, 00 € iPad Pro 11- 2018 339, 00 € iPad Pro 12.9 - 2017 579, 00 € iPad Pro 12.9 - 2015 269, 00 € iPad 2017 99, 00 € iPad 2018 99, 00 € iPad 10.2 2019 119, 00 € iPad 10.2 2020 119, 00 € iPad Mini 86, 00 € iPad Mini 2 79, 00 € iPad Mini 3 89, 00 € iPad Mini 4 169, 00 € Appel Gehäuse -

Barometer of Mobile Internet Connections in Russia

Barometer of mobile Internet connections in Russia Publication of July 9th, 2021 H2 2020 & H1 2021 nPerf is a trademark owned by nPerf SAS, 87 rue de Sèze 69006 LYON – France. ussi Contents 1 Summary ...................................................................................................................................... 2 1.1 2G/3G/4G nPerf Score .......................................................................................................... 2 1.2 Our analysis ........................................................................................................................... 3 2 Overall results ............................................................................................................................... 4 2.1 Data amount and distribution ............................................................................................... 4 2.2 2G/3G/4G Success rate ........................................................................................................ 4 2.3 2G/3G/4G download speed .................................................................................................. 5 2.4 2G/3G/4G Upload speed ....................................................................................................... 6 2.5 2G/3G/4G Latency ................................................................................................................ 7 2.6 2G/3G/4G Browsing test....................................................................................................... 7 2.7 2G/3G/4G -

Тотальный Trade-In (Гаджет По Обмену)» (Далее По Тексту – «Правила»)

ПРАВИЛА Акции «Тотальный Trade-In (Гаджет по обмену)» (далее по тексту – «Правила») 1. Общие положения. 1.1. Акция – рекламное мероприятие, проводимое Организатором в соответствии с настоящими Правилами. Акция не является стимулирующим мероприятием, подпадающего под действие ст.9 ФЗ «О рекламе». 1.2. Организатор – юридическое лицо, указанное в соответствии с настоящими Правилами как Организатор Акции. 1.3. Программа Трейд-ин – постоянная услуга, которая включает процесс сдачи покупателем Бывшего в употреблении Товара с одновременным приобретением Нового Товара. 1.4. Белмонт – ООО «БЕЛМОНТ», ОГРН: 5167746493547, 115035, г. Москва, Овчинниковская набережная, д. 20, стр. 2, ком. 23, являющееся авторизованным партнером компании Apple, организующее на территории РФ Программу Трейд-ин. 1.5. Смарт-Базар - ООО «Цифровые девайсы», ОГРН: 1197746757944, 127006, г. Москва, переулок Оружейный, д.41, ЭТАЖ 6 ОФИС 6.18, организующее на территории РФ Программу Трейд-ин. 1.6. Потребитель – дееспособное физическое лицо, достигшее возраста 18-ти лет (либо не достигшее 18 лет, но приобретшее дееспособность в полном объёме в порядке, предусмотренном действующим законодательством РФ), владеющее на праве собственности Бывшим в употреблении Товаром. 1.7. Участник – Потребитель, получивший статус Участника в соответствии с настоящими Правилами. 1.8. Салоны сети Организатора – салоны связи, работающие под товарным знаком «Связной», «Связной3» и/или «Евросеть» и перечисленные на сайте Организатора. 1.9. Бывший в употреблении Товар (далее также Б/у Товар) – смартфоны и планшеты под товарными знаками Apple, Samsung, Huawei, LG и Sony, перечисленные в Приложении № 1 к настоящим Правилам, принадлежащие Потребителям на праве собственности, который Потребитель вправе сдать Белмонту или Смарт-Базару, получив за него Выкупную цену, с учётом ограничений и требований, указанных в Приложении № 2 к настоящим Правилам.