Contribution Barriers to Free/Libre and Open Source Software Development Projects

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Comparative Analysis and Framework Evaluating Web Single Sign-On Systems

Comparative Analysis and Framework Evaluating Web Single Sign-On Systems FURKAN ALACA, School of Computing, Queen’s University, [email protected] PAUL C. VAN OORSCHOT, School of Computer Science, Carleton University, [email protected] We perform a comprehensive analysis and comparison of 14 web single sign-on (SSO) systems proposed and/or deployed over the last decade, including federated identity and credential/password management schemes. We identify common design properties and use them to develop a taxonomy for SSO schemes, highlighting the associated trade-offs in benefits (positive attributes) offered. We develop a framework to evaluate the schemes, in which we identify 14 security, usability, deployability, and privacy benefits. We also discuss how differences in priorities between users, service providers (SPs), and identity providers (IdPs) impact the design and deployment of SSO schemes. 1 INTRODUCTION Password-based authentication has numerous documented drawbacks. Despite the many password alternatives, no scheme has thus far offered improved security without trading off deployability or usability [8]. Single Sign-On (SSO) systems have the potential to strengthen web authentication while largely retaining the benefits of conventional password-based authentication. We analyze in depth the state of the art of web SSO through the lens of usability, deployability, security, and privacy, to construct a taxonomy and evaluation framework based on different SSO architecture design properties and their associated benefits and drawbacks. SSO is an umbrella term for schemes that allow users to rely on a single master credential to access a multitude of online accounts. We categorize SSO schemes across two broad categories: federated identity systems (FIS) and credential managers (CM).

Heidemarie Hanekop, Volker Wittke: The Role of Users in Internet-Based

Heidemarie Hanekop, Volker Wittke The Role of Users in Internet-based Innovation Processes The discussion of innovations on the Internet is increasingly focusing on the active role of customers and users. Especially in the development and improvement of digital products and services (such as software, information services or online trade), adapted to consumer demands, we observe an expansion of autonomous activities of customers and users. The division of labour between suppliers and users, producers and consumers is no longer definite. This also brings into question long-standing and firmly established boundaries between employment, which is still structured by the capitalist law of profit, and a private world following other principles. The digital mode of production and distribution, together with the necessary means of production (personal computer) in the hands of numerous private users, provide these users with expanding possibilities to develop and produce digital products and services. In addition, the Internet as a platform of communication facilitates world-wide production with participation of a great number of users ("mass collaboration" – see Tapscott 2006). The development of Open Source Software (OSS) and the online encyclopaedia Wikipedia represent a particularly far-reaching participation of users. It is true that we are dealing with the production of public goods here and therefore specific basic conditions for "mass collaboration". However, concepts like "interactive value creation" (Reichwald/Piller 2006) or "open innovation" (Chesbrough 2003, Chesbrough et al. 2006) propagate an opening of innovation processes for users unrelated to companies also in the framework of commercial value creation, as a central new dimension of promising organisation of innovation processes.

Download the Google Play App for Firefox

Firefox Browser. No shady privacy policies or back doors for advertisers. Just a lightning fast browser that doesn’t sell you out. Latest Firefox features. Picture-in-Picture. Pop a video out of the browser window so you can stream and multitask. Expanded Dark Mode. Take it easy on your eyes every time you go online. An extra layer of protection. DNS over HTTPS (DoH) helps keep internet service providers from selling your data. Do what you do online. Firefox Browser isn’t watching. How Firefox compares to other browsers. Get all the speed and tools with none of the invasions of privacy. Firefox Browser collects so little data about you, we don’t even require your email address to download.

FOSDEM 2017 Schedule

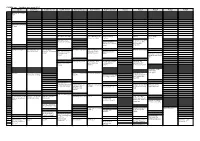

FOSDEM 2017 - Saturday 2017-02-04 (1/9) Janson K.1.105 (La H.2215 (Ferrer) H.1301 (Cornil) H.1302 (Depage) H.1308 (Rolin) H.1309 (Van Rijn) H.2111 H.2213 H.2214 H.3227 H.3228 Fontaine)… 09:30 Welcome to FOSDEM 2017 09:45 10:00 Kubernetes on the road to GIFEE 10:15 10:30 Welcome to the Legal Python Winding Itself MySQL & Friends Opening Intro to Graph … Around Datacubes Devroom databases Free/open source Portability of containers software and drones Optimizing MySQL across diverse HPC 10:45 without SQL or touching resources with my.cnf Singularity Welcome! 11:00 Software Heritage The Veripeditus AR Let's talk about The State of OpenJDK MSS - Software for The birth of HPC Cuba Game Framework hardware: The POWER Make your Corporate planning research Applying profilers to of open. CLA easy to use, aircraft missions MySQL Using graph databases please! 11:15 in popular open source CMSs 11:30 Jockeying the Jigsaw The power of duck Instrumenting plugins Optimized and Mixed License FOSS typing and linear for Performance reproducible HPC Projects algrebra Schema Software deployment 11:45 Incremental Graph Queries with 12:00 CloudABI LoRaWAN for exploring Open J9 - The Next Free It's time for datetime Reproducible HPC openCypher the Internet of Things Java VM sysbench 1.0: teaching Software Installation on an old dog new tricks Cray Systems with EasyBuild 12:15 Making License 12:30 Compliance Easy: Step Diagnosing Issues in Webpush notifications Putting Your Jobs Under Twitter Streaming by Open Source Step. Java Apps using for Kinto Introducing gh-ost the Microscope using Graph with Gephi Thermostat and OGRT Byteman. -

SeisIO: a Fast, Efficient Geophysical Data Architecture for the Julia

SeisIO: a fast, efficient geophysical data architecture for the Julia language. Joshua P. Jones (1, *), Kurama Okubo (2), Tim Clements (2), and Marine A. Denolle (2). (1) 4509 NE Sumner St., Portland, OR, USA; (2) Department of Earth and Planetary Sciences, Harvard University, MA, USA; (*) Corresponding author: Joshua P. Jones ([email protected]). Abstract: SeisIO for the Julia language is a new geophysical data framework that combines the intuitive syntax of a high-level language with performance comparable to FORTRAN or C. Benchmark comparisons with recent versions of popular programs for seismic data download and analysis demonstrate significant improvements in file read speed and orders-of-magnitude improvements in memory overhead. Because the Julia language natively supports parallel computing with an intuitive syntax, we benchmark test parallel download and processing of multi-week segments of contiguous data from two sets of 10 broadband seismic stations, and find that SeisIO outperforms two popular Python-based tools for data downloads. The current capabilities of SeisIO include file read support for several geophysical data formats, online data access using FDSN web services, IRIS web services, and SeisComP SeedLink, with optimized versions of several common data processing operations. Tutorial notebooks and extensive documentation are available to improve the user experience (UX). As an accessible example of performant scientific computing for the next generation of researchers, SeisIO offers ease of use and rapid learning without sacrificing computational performance. 1 Introduction: The dramatic growth in the volume of collected geophysical data has the potential to lead to tremendous advances in the science (https://ds.iris.edu/data/distribution/).

Analisi Del Progetto Mozilla

University of Padua (Università degli studi di Padova), Faculty of Mathematical, Physical and Natural Sciences, Degree Course in Computer Science. Report for the course Open Source Technologies: Analisi del progetto Mozilla (Analysis of the Mozilla Project). Author: Marco Teoli. Academic year 2008/09. Submitted: 30/06/2009. “ Open source does work, but it is most definitely not a panacea. If there's a cautionary tale here, it is that you can't take a dying project, sprinkle it with the magic pixie dust of "open source", and have everything magically work out. Software is hard. The issues aren't that simple. ” Jamie Zawinski. Contents: Introduction; Vision; Mozilla Labs; History; Mozilla Labs and the R&D projects; Market; Types of market and users; Market share (Firefox)

Linux Homework: Setting Up a Sendmail Virtual User (Huiswerk Linux: Sendmail Virtual User Instellen)

Linux homework: setting up a Sendmail virtual user. As a professional administrator you want as few users as possible on your Linux machine. Real Linux users have login rights on your server and can cause damage to your machine. In most cases it is not necessary to create a user account for every customer. Most customers only want their e-mail to work. This week's assignment: create a virtual user for the domain testdomein.nl. About virtual users: The drawback of setting up virtual hosts is that all users are valid for every e-mail domain you create. Suppose you create an e-mail domain (virtual host) for a customer so that she can receive e-mail through that domain. This means that other users can also receive e-mail for that same domain, which causes a lot of extra spam. The handy thing about virtual users is that they exist only for the domain for which they were created. The sudo mechanism: We configure Linux on behalf of the super-user, so we must temporarily log in as administrator. Sudo for Cygwin users: right-click the icon of the Cygwin terminal and choose "Run as administrator" (Als administrator uitvoeren). Also make sure the Sendmail daemon has been started: service sendmail start. Sudo for users of other Linux versions (Ubuntu, Lubuntu, Kubuntu, Android, Gentoo, Debian, etc.): start a terminal with the key combination <Ctrl><Alt>-T. We use the sudo command to log in with the su (become Super User) command, so that we stay logged in: sudo su. Checking the feature: Sendmail is configured through the file /etc/mail/sendmail.cf.

THE NIGHTINGALE SONG of DAVID by Octavius Winslow, 1876

www.biblesnet.com - Online Christian Library THE NIGHTINGALE SONG OF DAVID by Octavius Winslow, 1876 Preface Christ the Shepherd Green Pastures Still Waters The Restored Sheep The Valley The Rod and the Staff The Banquet The Anointing The Overflowing Cup Heaven, at Last and Forever Hell, at Last and Forever THE NIGHTINGALE SONG OF DAVID PREFACE Of the Divine Inspiration of the Book of Psalms the Psalmist himself shall speak: "These are the last words of David: David, the son of Jesse, speaks- David, the man to whom God gave such wonderful success, David, the man anointed by the God of Jacob, David, the sweet psalmist of Israel. The Spirit of the Lord speaks through me; his words are upon my tongue." 2 Samuel 23:1-2. Fortified with such a testimony, we may accept without hesitation the Twenty-third of this "Hymn Book for all times" as not only a divine, but as the divinest, richest, and most musical of all the songs which breathed from David's inspired harp; and to which, by the consent of the universal Church, the palm of distinction has been awarded. No individual, competent to form a judgment in the matter, and possessing any pretension to a taste for that which is pastoral in composition- rich in imagery- tender in pathos- and sublime in revelation- will fail to study this Psalm without the profoundest instruction and the most exquisite delight. Its melodies- divine and entrancing, and which may well suggest the expressive title we have ventured to give it- have echoed through all ages of the Christian Church- instructing more minds, soothing more hearts, quelling more fears, and inspiring more hopes- than, perhaps, any other composition in any language, or of any age.

THE POWER of CLOUD COMPUTING COMES to SMARTPHONES Neeraj B

THE POWER OF CLOUD COMPUTING COMES TO SMARTPHONES Neeraj B. Bharwani B.E. Student (Information Science and Engineering) SJB Institute of Technology, Bangalore 60 Table of Contents: Introduction; Need for Clone Cloud; Augmented Execution; Primary functionality outsourcing; Background augmentation; Mainline augmentation; Hardware augmentation; Augmentation through multiplicity; Architecture; Snow Flock: Rapid Virtual Machine Cloning for Cloud Computing

What Every Engineer Should Know About Software Engineering

WHAT EVERY ENGINEER SHOULD KNOW ABOUT SOFTWARE ENGINEERING Phillip A. Laplante Boca Raton London New York CRC Press is an imprint of the Taylor & Francis Group, an informa business © 2007 by Taylor & Francis Group, LLC CRC Press Taylor & Francis Group 6000 Broken Sound Parkway NW, Suite 300 Boca Raton, FL 33487-2742 No claim to original U.S. Government works Printed in the United States of America on acid-free paper 10 9 8 7 6 5 4 3 2 1 International Standard Book Number-10: 0-8493-7228-3 (Softcover) International Standard Book Number-13: 978-0-8493-7228-5 (Softcover) This book contains information obtained from authentic and highly regarded sources. Reprinted material is quoted with permission, and sources are indicated. A wide variety of references are listed. Reasonable efforts have been made to publish reliable data and information, but the author and the publisher cannot assume responsibility for the validity of all materials or for the consequences of their use. No part of this book may be reprinted, reproduced, transmitted, or utilized in any form by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying, microfilming, and recording, or in any information storage or retrieval system, without written permission from the publishers. For permission to photocopy or use material electronically from this work, please access www.

Technical White Paper: Running Applications Under

Technical White Paper: Running Applications Under CrossOver: An Analysis of Security Risks. Wine, Viruses, and Methods of Achieving Security. Overview: Wine is a Windows compatibility technology that allows a wide variety of Windows software to run as-if-natively on Unix-based operating systems like Linux and Mac OS X. From a theoretical standpoint, Wine should also enable malware and viruses to run, thereby (unfortunately) exposing Wine users to these same hazards. However, CrossOver (based on Wine) also incorporates security features that bring this risk down to almost zero. This White Paper examines the reasons behind the enhanced safety that CrossOver provides. (Sidebar: Running Windows software via CrossOver is, on average, much safer than running them under Windows.) With the increasing popularity of running Windows software on Linux and Mac OS X via compatibility solutions such as Wine, VMWare, and Parallels, users have been able to enjoy a degree of computing freedom heretofore unseen. Yet with that freedom has come peril. As many VMWare and Parallels users have discovered, running applications like Outlook and IE under those PC emulation solutions also opens up their machine to the same viruses and malware they faced under Windows. Indeed, one of the first things any VMWare or Parallels customer should do is install a commercial anti-virus package. Failure to do so can result in a host of dire consequences for their Windows partition, just as it would if they were running a Windows PC. Not surprisingly, a question we sometimes hear is whether or not Wine exposes users to the same level of risk.

Empirical Study of Restarted and Flaky Builds on Travis CI

Empirical Study of Restarted and Flaky Builds on Travis CI. Thomas Durieux, INESC-ID and IST, University of Lisbon, Portugal, [email protected]; Claire Le Goues, Carnegie Mellon University, [email protected]; Michael Hilton, Carnegie Mellon University, [email protected]; Rui Abreu, INESC-ID and IST, University of Lisbon, Portugal, [email protected]. ABSTRACT Continuous Integration (CI) is a development practice where developers frequently integrate code into a common codebase. After the code is integrated, the CI server runs a test suite and other tools to produce a set of reports (e.g., the output of linters and tests). If the result of a CI test run is unexpected, developers have the option to manually restart the build, re-running the same test suite on the same code; this can reveal build flakiness, if the restarted build outcome differs from the original build. In this study, we analyze restarted builds, flaky builds, and their impact on the development workflow. Continuous integration is an automatic process that typically executes the test suite, may further analyze code quality using static analyzers, and can automatically deploy new software versions. It is generally triggered for each new software change, or at a regular interval, such as daily. When a CI build fails, the developer is notified, and they typically debug the failing build. Once the reason for failure is identified, the developer can address the problem, generally by modifying or revoking the code changes that triggered the CI process in the first place. However, there are situations in which the CI outcome is unex-