Encrypted Data Identification by Information Entropy Fingerprinting

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Performance Analysis of Advanced IoT Encryption on Serialization Concept: Application Optimization Approach

(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 11, No. 12, 2020

Performance Analysis of Advanced IoT Encryption on Serialization Concept: Application Optimization Approach

Johan Setiawan1, Muhammad Taufiq Nuruzzaman2*, Department of Informatics, Universitas Islam Negeri Sunan Kalijaga Yogyakarta, Yogyakarta, Indonesia

Abstract—This study investigates the effect of serialization concepts with cipher algorithms and block mode on structured data on execution time in low-level computing IoT devices. The research was conducted based on IoT devices, which are currently widely used in online transactions. The result of overheating on the CPU is fatal if the encryption load is not reduced. One of the consequences is an increase in the maintenance obligations. So that from this influence, the user experience level will have bad influence. This study uses experimental methods by exploring serialization, ciphers, and block mode using benchmarks to get better data combination algorithms. The four test data groups used in benchmarking will produce an experimental benchmark dataset on the selected AES, Serpent, Rijndael, BlowFish, and block mode ciphers. -

... running [8][12][14]. In tackling these threats, data encryption is required [15]. Encryption is a method used to convert original data into artificial data to become rugged and not accessible for humans to read. The encryption process's drawback tends to impose more processing on the microprocessor embedded in an IoT device. It can result from small and limited microprocessor capabilities [16][17] and large amounts of data in the encryption process [18]–[20]. As a result of an encryption algorithm's complexity, the microprocessor on the IoT device is more burdened.

The direct effect of microprocessor devices that get high loads or pressures to overheat is the length of the computation -

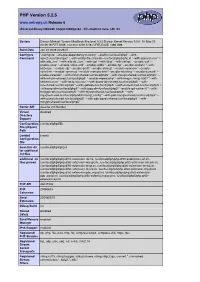

PHP Credits Configuration

PHP Version 5.0.1 www.entropy.ch Release 1

System: Darwin G4-500.local 7.7.0 Darwin Kernel Version 7.7.0: Sun Nov 7 16:06:51 PST 2004; root:xnu/xnu-517.9.5.obj~1/RELEASE_PPC Power Macintosh

Build Date: Aug 13 2004 15:03:31

Configure Command: './configure' '--prefix=/usr/local/php5' '--with-config-file-path=/usr/local/php5/lib' '--with-apxs' '--with-iconv' '--with-openssl=/usr' '--with-zlib=/usr' '--with-mysql=/Users/marc/cvs/entropy/php-module/src/mysql-standard-*' '--with-mysqli=/usr/local/mysql/bin/mysql_config' '--with-xsl=/usr/local/php5' '--with-pdflib=/usr/local/php5' '--with-pgsql=/Users/marc/cvs/entropy/php-module/build/postgresql-build' '--with-gd' '--with-jpeg-dir=/usr/local/php5' '--with-png-dir=/usr/local/php5' '--with-zlib-dir=/usr' '--with-freetype-dir=/usr/local/php5' '--with-t1lib=/usr/local/php5' '--with-imap=../imap-2002d' '--with-imap-ssl=/usr' '--with-gettext=/usr/local/php5' '--with-ming=/Users/marc/cvs/entropy/php-module/build/ming-build' '--with-ldap' '--with-mime-magic=/usr/local/php5/etc/magic.mime' '--with-iodbc=/usr' '--with-xmlrpc' '--with-expat-dir=/usr/local/php5' '--with-iconv-dir=/usr' '--with-curl=/usr/local/php5' '--enable-exif' '--enable-wddx' '--enable-soap' '--enable-sqlite-utf8' '--enable-ftp' '--enable-sockets' '--enable-dbx' '--enable-dbase' '--enable-mbstring' '--enable-calendar' '--with-bz2=/usr' '--with-mcrypt=/usr/local/php5' '--with-mhash=/usr/local/php5' '--with-mssql=/usr/local/php5' '--with-fbsql=/Users/marc/cvs/entropy/php-module/build/frontbase-build/Library/FrontBase'

Server -

Cryptography Made Easy with Zend Framework 2

Cryptography made easy with Zend Framework 2

by Enrico Zimuel ([email protected]), Senior Software Engineer, Zend Framework Core Team, Zend Technologies Ltd

© All rights reserved. Zend Technologies, Inc.

About me

● Enrico Zimuel
● Software Engineer since 1996
● Senior PHP Engineer at Zend Technologies, in the Zend Framework Team
● Author of articles and books on cryptography, PHP, and secure software
● International speaker at PHP conferences
● B.Sc. (Hons) in Computer Science and Economics from the University "G. d'Annunzio" of Pescara (Italy)

@ezimuel
[email protected]

Cryptography in Zend Framework

● In 2.0.0beta4 we released Zend\Crypt to help developers use cryptography in PHP projects
● In PHP we have built-in functions and extensions for cryptography purposes:
▶ crypt()
▶ Mcrypt
▶ OpenSSL
▶ Hash (by default in PHP 5.1.2)
▶ Mhash (emulated by Hash from PHP 5.3)

Cryptography is not so easy to use

● To implement cryptography in PHP we need a solid background in cryptography engineering
● Mcrypt, OpenSSL, and the other PHP libraries are good primitives, but you need to know how to use them
● This can be a barrier that discourages PHP developers
● We decided to offer a simplified API for cryptography with security best practices built in
● The goal is to support strong cryptography in ZF2

Cryptography in Zend Framework

● Zend\Crypt components:
▶ Zend\Crypt\Password
▶ Zend\Crypt\Key\Derivation
▶ Zend\Crypt\Symmetric
▶ Zend\Crypt\PublicKey
▶ Zend\Crypt\Hash
▶ Zend\Crypt\Hmac
▶ Zend\Crypt\BlockCipher

-

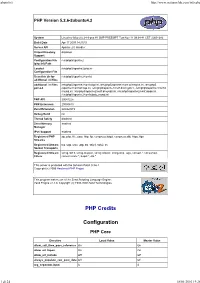

PHP Version 5.2.5 Release 6

PHP Version 5.2.5 www.entropy.ch Release 6

Universal Binary i386/x86_64/ppc7400/ppc64 - this machine runs: x86_64

System: Darwin Michael-Tysons-MacBook-Pro.local 9.3.0 Darwin Kernel Version 9.3.0: Fri May 23 00:49:16 PDT 2008; root:xnu-1228.5.18~1/RELEASE_I386 i386

Build Date: Jan 24 2008 00:26:01

Configure Command: './configure' '--disable-dependency-tracking' '--prefix=/usr/local/php5' '--with-apxs2=/usr/sbin/apxs' '--with-config-file-scan-dir=/usr/local/php5/php.d' '--with-openssl=/usr' '--with-zlib=/usr' '--with-zlib-dir=/usr' '--with-gd' '--with-ldap' '--with-xmlrpc' '--enable-exif' '--enable-soap' '--enable-sqlite-utf8' '--enable-wddx' '--enable-ftp' '--enable-sockets' '--with-bz2=/usr' '--enable-zip' '--enable-pcntl' '--enable-shmop' '--enable-sysvsem' '--enable-sysvshm' '--enable-sysvmsg' '--enable-memory-limit' '--enable-mbstring' '--enable-bcmath' '--enable-calendar' '--with-ming=shared,/usr/local/php5' '--with-mssql=shared,/usr/local/php5' '--with-mhash=shared,/usr/local/php5' '--enable-memcache' '--with-imap=../imap-2007' '--with-kerberos=/usr' '--with-imap-ssl=/usr' '--with-libxml-dir=shared,/usr/local/php5' '--with-xsl=shared,/usr/local/php5' '--with-gettext=/usr/local/php5' '--with-curl=shared,/usr/local/php5' '--with-png-dir=/usr/local/php5' '--with-jpeg-dir=/usr/local/php5' '--enable-gd-native-ttf' '--with-freetype-dir=/usr/local/php5' '--with-mysql=shared,/usr/local/php5' '--with-mysqli=shared,/usr/local/php5/bin/mysql_config' '--with-pdo-mysql=shared,/usr/local/php5' '--with-pgsql=shared,/usr/local/php5' '--with-pdo-pgsql=shared,/usr/local/php5' -

Playing Safe

Playing Safe

Vancouver PHP Conference, January 22nd, 2004. Vancouver, Canada

Derick Rethans <[email protected]>

Slide 1/29: Questions (May 16 2004)

?

Slide 2/29: Why use encryption?

Slide 3/29: Quote

"People who are willing to rely on the government to keep them safe are pretty much standing on Darwin's mat, pounding on the door, screaming, 'Take me, take me!'" Carl Jacobs, Alt.Sysadmin.Recovery

Slide 4/29: Cipher groups

o Rotational (Caesar)
o Transposition (xor)
o Symmetric (DES, Blowfish, AES)
o Asymmetric (RSA, Diffie Hellman)
o Hashes

Slide 5/29: Rotational ciphers

o Rotate characters depending on key
o Easy to crack unless key is same size as plain text
o But we always have str_rot13() :-)

Slide 6/29: Transposition ciphers

o XOR plain text with key
o Easy to crack with small key
o Impossible to crack when strlen(plain) == strlen(key)

Slide 7/29: Quote

"Perl - The only language that looks the same before and after RSA encryption." Keith Bostic

Slide 8/29: Asymmetric ciphers

o Key to encrypt is different from the key to decrypt
o ElGamal, Diffie Hellman, RSA
o Used for signatures and key distribution

Slide 9/29: Hash algorithms

o Not reversible
o PHP: sha1(), md5(); mhash: GOST, HAVAL
o Used in signatures and validation

Slide 10/29: Hash Example

<?php if (isset($_COOKIE['stamp'])) { if ($_COOKIE['stamp'] == sha1($_COOKIE['data'] . -
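The XOR claims in the "Playing Safe" excerpt above (easy to crack with a small key, uncrackable when the key is as long as the plain text) can be illustrated with a minimal Python sketch. It is not taken from the slides: the helper name `xor_bytes` and the sample message are hypothetical, and the "impossible to crack" property additionally assumes the key is truly random and never reused.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR every byte of data with the key, repeating the key if it is shorter."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


plain = b"attack at dawn"  # hypothetical sample message

# Key as long as the message (one-time-pad style): XOR twice restores the text.
key = secrets.token_bytes(len(plain))
cipher = xor_bytes(plain, key)
assert xor_bytes(cipher, key) == plain

# Short repeating key: knowing a few plain-text bytes reveals the key,
# because cipher[i] ^ plain[i] == key[i].
short_key = b"abc"
weak_cipher = xor_bytes(plain, short_key)
recovered = xor_bytes(weak_cipher[:3], plain[:3])
assert recovered == short_key
```

The second half shows why a small key is weak: three known plain-text bytes are enough to recover the whole three-byte key and, with it, the rest of the message.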

A Review on the Design of Lightweight Symmetric Block Ciphers for Cyber Physical Systems

International Journal of Recent Technology and Engineering (IJRTE), ISSN: 2277-3878, Volume-7, Issue-6, March 2019

A Review on the Design of Lightweight Symmetric Block Ciphers for Cyber Physical Systems

Prathiba A, Kanchana Bhaaskaran V S

Abstract: Selection and deployment of security hardware for Cyber Physical Systems (CPS) necessitate a smart choice. Lightweight security algorithms are viable choices for such applications. The study presented, will give an overview of lightweight symmetric block cipher algorithms and provide a summary for algorithm designers of the parameters that influence the design of a cipher algorithm and its impact on security and implementation. Comprehensive review of lightweight, symmetric, Substitution Permutation Network (SPN) type of block ciphers aids the lightweight cryptographic algorithm designer in selection of operations suitable for Cyber Physical Systems. An overall survey on existing lightweight SPN type symmetric block ciphers pertaining to design, security and hardware performance as the three corners that trade-off cipher design is made. The design composition of cipher based on security and hardware cost is the highlight of this paper.

Index Terms: Lightweight block ciphers, security, performance and design.

Every operation involved in the cipher decides its security properties as well the performance characteristics. Existing studies focus on either structural composition or involved operations in algorithms to achieve a demanded level of security. The literature lacks a study relating design, security and hardware performance of the SPN type of block ciphers [7], [8]. A comparative analysis of design, security and hardware architecture as three corners is the motive of the review presented. Overview of the tradeoff parameters is shown in Fig. 1.

[Fig. 1: tradeoff parameters; surviving labels: "Cipher Design", "Choice of Design"] -

Encryption and Decryption of Data Replication Using Advanced Encryption Standard (AES)

ENCRYPTION AND DECRYPTION OF DATA REPLICATION USING ADVANCED ENCRYPTION STANDARD (AES)

FARAH ZURAIN BINTI MOHD FOIZI

Bachelor of Computer Science (Computer Network Security) with Honours, Faculty of Informatics and Computing, Universiti Sultan Zainal Abidin, Terengganu, Malaysia, 2018

DECLARATION

It is declared that the project titled Encryption and Decryption of Data Replication Using Advanced Encryption Standard (AES) algorithm is originally proposed by me. However, further research and exploration onto this project is granted and encouraged for contribution upon this topic.

__________________________ (Farah Zurain Binti Mohd Foizi) BTBL15041003 Date:

CONFIRMATION

This project, entitled Encryption and Decryption of Data Replication using Advanced Encryption Standard (AES), was prepared and submitted by Farah Zurain binti Mohd Foizi, matric number BTBL15041003, and has been satisfactory in terms of scope, quality and presentation as a partial fulfilment of the requirement for Bachelor of Computer Science (Computer Network Security) in Universiti Sultan Zainal Abidin (UniSZA).

Signature : ……………………… Supervisor : ……………………… Date : ………………………

DEDICATION

In the name of Allah, the Most Gracious and the Most Merciful, Alhamdulilah, thanks to Allah for giving me the opportunity to complete the Final Year Project proposal report entitled "Encryption and Decryption of Data Replication Using Advanced Encryption Standard (AES)". I would like to thank Dr Zarina bt Mohamad, my supervisor, who guided me and gave valuable information and useful suggestions during compilation and preparation of this research. Also thanks to my family and friends at the instigation of the completion of this project. -

Secure Coding Guide

Secure Coding Guide

Version 53.0, Winter '22

@salesforcedocs

Last updated: July 21, 2021

© Copyright 2000–2021 salesforce.com, inc. All rights reserved. Salesforce is a registered trademark of salesforce.com, inc., as are other names and marks. Other marks appearing herein may be trademarks of their respective owners.

CONTENTS

Chapter 1: Secure Coding Guidelines
Chapter 2: Secure Coding Cross Site Scripting
Chapter 3: Secure Coding SQL Injection
Chapter 4: Secure Coding Cross Site Request Forgery
Chapter 5: Secure Coding Secure Communications
Chapter 6: Storing Sensitive Data
Chapter 7: Arbitrary Redirect
Chapter 8: Authorization and Access Control
Chapter 9: Lightning Security
Chapter 10: Marketing Cloud API Integration Security
Chapter 11: Secure Coding PostMessage
Chapter 12: Secure Coding WebSockets
Chapter 13: Platform Security FAQs

CHAPTER 1 Secure Coding Guidelines

This guide walks you through the most common security issues Salesforce has identified while auditing applications built on or integrated with the Lightning Platform. This guide takes into account that many of our developers write integration pieces with the Lightning Platform and includes examples from other web platforms such as Java, ASP.NET, PHP and Ruby on Rails. The Lightning Platform provides full or partial protection against many of these issues. It is noted when this is the case. Consider this to be an easy to read reference and not a thorough documentation of all web application security flaws. More details on a broader spectrum of web application security problems can be found on the OWASP (Open Web Application Security Project) site. -

Design and Implementation of a Lock-Key Image Encryption and Decryption, Based on a User Provided Password

International Journal of Computer Applications (0975 – 8887), Volume 85 – No 11, January 2014

Design and Implementation of a Lock-Key Image Encryption and Decryption, based on a User Provided Password

Harinandan Tunga, Akash Ghosh, Arnab Saha, Swashata Ghosh

Computer Science & Engineering Department, RCC Institute of Information Technology, Kolkata, India

ABSTRACT

This paper is about encryption and decryption of images using a secret password provided by the user. The encryption machine takes the password and the source image as input and generates a key pattern image by using Secure Hash Algorithm (SHA) and a lock image by using Mcrypt algorithm. It provides additional security using Image scrambling. The decryption machine takes the lock image, key image and the password as input to generate the original image. It also checks if the input is valid and blocks the user when an invalid input is provided.

2. REVIEW OF LITERATURE

As security and integrity of data has become the main concern in past few years due to exponential rise of threats from third party [3]. And in the present scenario almost all the data is transferred via network pathways and so are vulnerable to various threats. [1] [2] Has given a brilliant approach to image encryption based on blowfish algorithm. This approach can be used on both color and black & white images (.TIF images only). [5] Has given a performance analysis on [1] and was concluded that the -

PHP Credits Configuration

phpinfo() http://www.nettunoclub.com/info.php

PHP Version 5.2.6-2ubuntu4.2

System: Linux lnx-falco 2.6.24-9-pve #1 SMP PREEMPT Tue Nov 17 09:34:41 CET 2009 i686

Build Date: Apr 17 2009 14:15:51

Server API: Apache 2.0 Handler

Virtual Directory Support: disabled

Configuration File (php.ini) Path: /etc/php5/apache2

Loaded Configuration File: /etc/php5/apache2/php.ini

Scan this dir for additional .ini files: /etc/php5/apache2/conf.d

additional .ini files parsed: /etc/php5/apache2/conf.d/gd.ini, /etc/php5/apache2/conf.d/imagick.ini, /etc/php5/apache2/conf.d/imap.ini, /etc/php5/apache2/conf.d/mcrypt.ini, /etc/php5/apache2/conf.d/mysql.ini, /etc/php5/apache2/conf.d/mysqli.ini, /etc/php5/apache2/conf.d/pdo.ini, /etc/php5/apache2/conf.d/pdo_mysql.ini

PHP API: 20041225

PHP Extension: 20060613

Zend Extension: 220060519

Debug Build: no

Thread Safety: disabled

Zend Memory Manager: enabled

IPv6 Support: enabled

Registered PHP Streams: zip, php, file, data, http, ftp, compress.bzip2, compress.zlib, https, ftps

Registered Stream Socket Transports: tcp, udp, unix, udg, ssl, sslv3, sslv2, tls

Registered Stream Filters: string.rot13, string.toupper, string.tolower, string.strip_tags, convert.*, consumed, convert.iconv.*, bzip2.*, zlib.*

This server is protected with the Suhosin Patch 0.9.6.2 Copyright (c) 2006 Hardened-PHP Project

This program makes use of the Zend Scripting Language Engine: Zend Engine v2.2.0, Copyright (c) 1998-2008 Zend Technologies

PHP Credits Configuration

PHP Core

Directive / Local Value / Master Value

allow_call_time_pass_reference: On / On

allow_url_fopen -

PHP Info by Chris Flannagan PHP Version 7.0.24

PHP Info by Chris Flannagan

PHP Version: 7.0.24

WordPress Version: 4.8.2

Server Software: Apache

PHP Version 7.0.24

System: Linux whm45.smartseohosting.net 2.6.32-673.26.1.lve1.4.30.el6.x86_64 #1 SMP Wed Jun 21 19:37:37 EDT 2017 x86_64

Build Date: Sep 28 2017 18:11:54

Configure Command: './configure' '--build=x86_64-redhat-linux-gnu' '--host=x86_64-redhat-linux-gnu' '--target=x86_64-redhat-linux-gnu' '--program-prefix=' '--prefix=/opt/cpanel/ea-php70/root/usr' '--exec-prefix=/opt/cpanel/ea-php70/root/usr' '--bindir=/opt/cpanel/ea-php70/root/usr/bin' '--sbindir=/opt/cpanel/ea-php70/root/usr/sbin' '--sysconfdir=/opt/cpanel/ea-php70/root/etc' '--datadir=/opt/cpanel/ea-php70/root/usr/share' '--includedir=/opt/cpanel/ea-php70/root/usr/include' '--libdir=/opt/cpanel/ea-php70/root/usr/lib64' '--libexecdir=/opt/cpanel/ea-php70/root/usr/libexec' '--localstatedir=/opt/cpanel/ea-php70/root/usr/var' '--sharedstatedir=/opt/cpanel/ea-php70/root/usr/com' '--mandir=/opt/cpanel/ea-php70/root/usr/share/man' '--infodir=/opt/cpanel/ea-php70/root/usr/share/info' '--cache-file=../config.cache' '--with-libdir=lib64' '--with-config-file-path=/opt/cpanel/ea- -

PHP Version 5.4.33

phpinfo() http://crm.fedlock.com/getinfo.php

Current PHP version: 5.4.33

PHP Version 5.4.33

System: Windows NT FL-APP-01 6.1 build 7601 (Windows Server 2008 R2 Standard Edition Service Pack 1) i586

Build Date: Sep 17 2014 20:05:18

Compiler: MSVC9 (Visual C++ 2008)

Architecture: x86

Configure Command: cscript /nologo configure.js "--enable-snapshot-build" "--disable-isapi" "--enable-debug-pack" "--without-mssql" "--without-pdo-mssql" "--without-pi3web" "--with-pdo-oci=C:\php-sdk\oracle\instantclient10\sdk,shared" "--with-oci8=C:\php-sdk\oracle\instantclient10\sdk,shared" "--with-oci8-11g=C:\php-sdk\oracle\instantclient11\sdk,shared" "--enable-object-out-dir=../obj/" "--enable-com-dotnet=shared" "--with-mcrypt=static" "--disable-static-analyze" "--with-pgo"

Server API: Apache 2.4 Handler Apache Lounge

Virtual Directory Support: enabled

Configuration File (php.ini) Path: C:\Windows

Loaded Configuration File: C:\Bitnami\suitecrm-7.1.4-0\php\php.ini

Scan this dir for additional .ini files: (none)

Additional .ini files parsed: (none)

PHP API: 20100412

PHP Extension: 20100525

Zend Extension: 220100525

Zend Extension Build: API220100525,TS,VC9

PHP Extension Build: API20100525,TS,VC9

Debug Build: no

Thread Safety: enabled

Zend Signal Handling: disabled

Zend Memory Manager: enabled

Zend Multibyte Support: provided by mbstring

IPv6 Support: enabled

DTrace Support: disabled

Registered PHP Streams: php, file, glob, data, http, ftp, zip, compress.zlib, https, ftps, phar

Registered Stream Socket Transports: tcp, udp, ssl, sslv3, sslv2, tls