D2.1 Cyber Incident Handling Trend Analysis

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Cisco NAC Network Module for Integrated Services Routers

Data Sheet. The Cisco® NAC Network Module for Integrated Services Routers (NME-NAC-K9) brings the feature-rich Cisco NAC Appliance Server capabilities to Cisco 2800, 2900, 3800 and 3900 Series Integrated Services Routers. Cisco NAC Appliance (also known as Cisco Clean Access) is a rapidly deployable Network Admission Control (NAC) product that allows network administrators to authenticate, authorize, evaluate, and remediate wired, wireless, and remote users and their machines prior to allowing them onto the network. Product Overview: The Cisco NAC Network Module for Integrated Services Routers (NME-NAC-K9) extends the Cisco NAC Appliance portfolio of products to smaller locations, helping enable network admission control (NAC) capabilities from the headquarters to the branch office (Figure 1). The integration of NAC Appliance Server capabilities into a network module for Integrated Services Routers allows network administrators to manage a single device in the branch office for data, voice, and security requirements, reducing network complexity, IT staff training needs, equipment sparing requirements, and maintenance costs. The Cisco NAC Network Module for Integrated Services Routers deployed at the branch office remediates potential threats locally before they traverse the WAN and potentially infect the network. [Figure 1. Cisco NAC Network Module for Integrated Services Routers (NME-NAC-K9)] The Cisco NAC Network Module for Integrated Services Routers is an advanced network security product that:
● Recognizes users, their devices, and their roles in the network: This first step occurs at the point of authentication, before malicious code can cause damage.
● Evaluates machines to determine their compliance with security policies: Security policies can vary by user type, device type, or operating system.

Using Web Honeypots to Study the Attackers Behavior

2017 ENST 0065. EDITE - ED 130. Doctorat ParisTech. Thesis submitted for the degree of Doctor awarded by TELECOM ParisTech, specialty "Computer Science", publicly presented and defended by Onur CATAKOGLU on 30 November 2017: Using Web Honeypots to Study the Attackers Behavior. Thesis advisor: Prof. Dr. Davide BALZAROTTI, EURECOM, Sophia-Antipolis. Jury: Prof. Dr. William ROBERTSON, Northeastern University (reviewer); Prof. Dr. Magnus ALMGREN, Chalmers University of Technology (reviewer); Prof. Dr. Yves ROUDIER, University of Nice Sophia Antipolis (examiner); Prof. Dr. Albert LEVI, Sabanci University (examiner); Dr. Leyla BILGE, Symantec Research Labs (examiner). TELECOM ParisTech, École de l'Institut Télécom, member of ParisTech. École Doctorale Informatique, Télécommunication et Électronique, Paris (ED 130). Acknowledgements: First and foremost I would like to express my gratitude to my supervisor, Davide Balzarotti. He was always welcoming whenever I need to ask questions, discuss any ideas and even when he was losing in our long table tennis matches. I am very thankful for his guidance throughout my PhD and I will always keep the mental image of him staring at me for various reasons as a motivation to move on when things will get tough in the future. I would also like to thank my reviewers for their constructive comments regarding this thesis.

OSSIM: a Careful, Free and Always Available Guardian for Your Network

Feature. Monitor your network's security 24/7 with a free and open-source solution that collects, analyzes and reports logs of the events on your network. By Marco Alamanni. Linux Journal, June 2014, p. 68 (www.linuxjournal.com). Networks and information systems are increasingly exposed to attacks that are becoming more sophisticated and sustained over time, such as the so-called APT (Advanced Persistent Threats). Information security experts agree on the fact that no organization, even the best equipped to protect itself from these attacks, can be considered immune, and that the issue is not whether its systems will be compromised, but rather when and how it will happen. It is essential to be able to detect attacks in a timely manner and implement the relative countermeasures, following appropriate procedures to respond to incidents, thus minimizing the effects and the damages they can cause. [...] check them all, one by one, to obtain meaningful information. A further difficulty is that there is no single standard used to record the logs and often, depending on the type and size, they are not immediate or easy to understand. It is even more difficult to relate other logs produced by many different systems to each other manually, to highlight anomalies in the network that would not be detectable by analyzing the logs of each machine separately. SIEM (Security Information and Event Management) software, therefore, is not limited to being a centralized solution for log management, but also (and especially) it has the ability to standardize logs in a single format, analyze the recorded events,
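The two SIEM capabilities named in this excerpt, standardizing logs in a single format and relating events from different systems, can be illustrated with a minimal Python sketch. It is not OSSIM's implementation: the sample log lines, the field names (`time`, `source_ip`, `type`, `detail`), the helper names `normalize` and `correlate`, and the 60-second window are all assumptions chosen for illustration.

```python
import re
from datetime import datetime, timezone

# Hypothetical raw log lines in two unrelated formats (illustrative only).
SSH_LINE = "Jun  3 14:02:11 host1 sshd[812]: Failed password for root from 203.0.113.7 port 4242 ssh2"
WEB_LINE = '203.0.113.7 - - [03/Jun/2014:14:02:15 +0000] "GET /admin HTTP/1.1" 403 199'

SSH_RE = re.compile(
    r"^(?P<ts>\w{3}\s+\d+\s[\d:]{8})\s(?P<host>\S+)\ssshd\[\d+\]:\s"
    r"Failed password for (?P<user>\S+) from (?P<src>\S+)"
)
WEB_RE = re.compile(
    r'^(?P<src>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3})'
)


def normalize(line, year=2014):
    """Map a raw log line to one common event dict, or None if unrecognized.

    Syslog timestamps carry no year or zone, so both are assumed here.
    Month names are parsed with %b, which expects an English/C locale.
    """
    m = SSH_RE.match(line)
    if m:
        ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
        return {"time": ts.replace(tzinfo=timezone.utc), "source_ip": m["src"],
                "type": "auth_failure", "detail": m["user"]}
    m = WEB_RE.match(line)
    if m:
        ts = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z")
        kind = "web_denied" if m["status"] == "403" else "web_request"
        return {"time": ts, "source_ip": m["src"], "type": kind, "detail": m["path"]}
    return None


def correlate(events, window_seconds=60):
    """Return source IPs that produced more than one event type within the window."""
    by_ip = {}
    for event in events:
        by_ip.setdefault(event["source_ip"], []).append(event)
    alerts = []
    for ip, evs in by_ip.items():
        evs.sort(key=lambda e: e["time"])
        span = (evs[-1]["time"] - evs[0]["time"]).total_seconds()
        if len({e["type"] for e in evs}) > 1 and span <= window_seconds:
            alerts.append(ip)
    return alerts


events = [e for e in (normalize(SSH_LINE), normalize(WEB_LINE)) if e is not None]
suspicious = correlate(events)
```

Neither log line is alarming on its own; only after both are reduced to the same fields does the shared source address become visible, which is the point the excerpt makes about analyzing each machine's logs separately.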

Cisco Self Defending Network

Cisco Self Defending Network, May 2007. © 2006 Cisco Systems, Inc. All rights reserved. Cisco Confidential. Intelligent Networking: Using the Network to Enable Business Processes. Cisco Network Strategy: Utilize the Network to Unite Isolated Layers and Domains to Enable Business Processes. [Diagram labels: Connectivity; Intelligent Networking; Business Processes; Networked Infrastructure; Resilient, Integrated, Adaptive; Applications and Services.] • Active participation in application and service delivery • A systems approach integrates technology layers to reduce complexity • Flexible policy controls adapt this intelligent system to your business through business rules. When it comes to information security, what are the objectives? [Diagram labels: Adaptive Organization; On Demand Organization; Agile.] Align security practice and policy to business requirements. Security that's a business enabler, not an inhibitor. Keep costs appropriate: It's not necessarily about reducing costs, but rather, spending where it counts the most. Reduce complexity of the overall environment. Control and contain threats so they don't control you. • The network touches all parts of the infrastructure • It is uniquely positioned to help solve these issues. Self-Defending Network Defined: Efficient Security Management, Control, and Response; Operational Management and Policy Control; Advanced technologies and security services to

Popular OSSIM Plugins Sidebar for a Brief Agent by Running the Command: Listing of the Leading Ones)

To read more Linux Journal or start your subscription, please visit http://www.linuxjournal.com. AlienVault: the Future of Security Information Management. Meet AlienVault OSSIM, a complex security system designed to make your life simpler. By Jeramiah Bowling. Security Information Management (SIM) systems have made many security administrators' lives easier over the years. SIMs organize an enterprise's security environment and provide a common interface to manage that environment. Many SIM products are available today that perform well in this role, but none are as ambitious as AlienVault's Open Source Security Information Management (OSSIM). With OSSIM, AlienVault has harnessed the capabilities of several popular security packages and created an "intelligence" that translates, analyzes and organizes the data in unique and customizable ways that most SIMs cannot. It uses a process called correlation to make threat judgments dynamically and report in real time on the state of risk in your environment. The end result is a design approach that makes risk management an organized and observable process that security administrators and managers alike can appreciate. In this article, I explain the installation of an all-in-one OSSIM agent/server into a test network, add hosts, deploy a third-party agent, set up a custom security directive and take a quick tour of the built-in incident response system. In addition to the OSSIM server, I have placed a CentOS-based Apache [...] AlienVault site in .iso form (version 2.1 at the time of this writing) and booted my VM from the media. [Figure 1. A little tough to read, but this is where everything starts.]

OSSIM Fast Guide

OSSIM Fast Guide. February 8, 2004. Julio Casal, http://www.ossim.net
WHAT IS OSSIM? In three phrases:
- VERIFICATION may be OSSIM's most valuable contribution at this time. Using its correlation engine, OSSIM screens out a large percentage of false positives.
- The second advantage is that of INTEGRATION: we have a series of security tools that enable us to perform a range of tasks from auditing, pattern matching and anomaly detection to forensic analysis in one single platform. We take responsibility for testing the stability of these programs and providing patches for them to work together.
- The third is RISK ASSESSMENT: OSSIM offers high level state indicators that allow us to guide inspection and measure the security situation of our network.
DISTRIBUTION
- OSSIM integrates a number of powerful open source security tools in a single distribution. These include: Snort, Nessus, Ntop, Snortcenter, Acid, Riskmeter, Spade, RRD, Nmap, P0f, Arpwatch, etc.
- These tools are linked together in OSSIM's console giving the user a single, integrated navigation environment.
ARCHITECTURE
- OSSIM is organized into 3 layers: Sensors, Servers, Console.
- The database is independent of these layers and could be considered to be a fourth layer.
Sensors
- Sensors integrate powerful software in order to provide three capabilities: IDS, Anomaly Detection, Real time Monitoring.
- Sensors can also perform other functions including traffic consolidation on a segment and event normalization.
- Sensors communicate and receive orders from the server using a proprietary protocol.
Server
- OSSIM's server has the following capabilities: Correlation, Prioritization, Online inventory, Risk assessment, Normalization.
Console
- The console's interface is structured hierarchically with the following functions:

Security Operations and Incident Management Knowledge Area (Draft for Comment)

Security Operations and Incident Management Knowledge Area (Draft for Comment). Author: Hervé Debar, Telecom SudParis. Editor: Howard Chivers, University of York. Reviewers: Douglas Weimer, RHEA Group; Magnus Almgren, Chalmers University of Technology; Marc Dacier, EURECOM; Sushil Jajodia, George Mason University. © Crown Copyright, The National Cyber Security Centre 2018. September 2018. INTRODUCTION: The roots of Security Operations and Incident Management (SOIM) can be traced to the original report by James Anderson [5] in 1981. This report theorises that full protection of the information and communication infrastructure is impossible. From a technical perspective, it would require complete and ubiquitous control and certification, which would block or limit usefulness and usability. From an economic perspective, the cost of protection measures and the loss related to limited use effectively require an equilibrium between openness and protection, generally in favour of openness. From there on, the report promotes the use of detection techniques to complement protection. The first ten years afterwards saw the development of the original theory of intrusion detection by Denning [18], which still forms the theoretical basis of most of the work detailed in this KA. Security Operations and Incident Management can be seen as an application and automation of the Monitor Analyze Plan Execute - Knowledge (MAPE-K) autonomic computing loop to cybersecurity [30], even if this loop was defined later than the initial developments of SOIM. Autonomic computing aims to adapt ICT systems to changing operating conditions. The loop, described in figure 1, is driven by events that provide information about the current behaviour of the system.

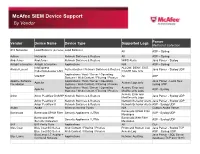

McAfee SIEM Device Support by Vendor

Vendor Device Name Device Type Supported Logs Parser Method of Collection A10 Networks Load Balancer (AX Series) Load Balancer All ASP – Syslog Adtran NetVanta Network Switches & Routers All ASP – Syslog Airdefense Airdefense Network Switches & Routers WIPS Alerts Java Parser - Syslog Airtight Interactive Airtight Interactive Applications N/A ASP – Syslog InfoExpress ALLOW, DENY, EXIT, Alcatel-Lucent Authentication / Network Switches & Routers Java Parser - Syslog UDP CyberGatekeeper LAN CGATE type only Applications / Host / Server / Operating VitalQIP All ASP Systems / Web Content / Filtering / Proxies Apache Software Applications / Host / Server / Operating Java Parser - Local files; Apache Access Logs only Foundation Systems / Web Content / Filtering / Proxies syslog UDP Applications / Host / Server / Operating Access, Error and Apache ASP - Syslog Systems / Web Content / Filtering / Proxies ModSecurity Logs Access, Error and Arbor Arbor Peakflow DoS/SP Network Switches & Routers Java Parser - Syslog UDP ModSecurity Logs Arbor Peakflow X Network Switches & Routers Network Behavior Alerts Java Parser - Syslog UDP Arbor Peakflow X Network Switches & Routers Network Behavior Alerts ASP - Syslog UDP Aruba Aruba Wireless Access Points N/A Custom Aruba Parser Barracuda SPAM Filter Barracuda Barracuda SPAM Filter Security Appliances / UTMs ASP - Syslog UDP Messages Barracuda Web Barracuda Web Filter Security Appliances / UTMs ASP - Syslog UDP Security Gateways Messages Bit9 Bit9 Parity Suite Applications

OSSIM, Una Alternativa Para La Integración De La Gestión De Seguridad En La Red

Revista Telem@tica, Vol. 11, No. 1, January-April 2012, pp. 11-19, ISSN 1729-3804. OSSIM, an alternative for integrating security management in the network. Walter Baluja García (1), Cesar Camilo Caro Reina (2), Frank Abel Cancio Bello (3). (1) ISPJAE, Telecommunications and Electronics Engineer, Dr. C. Tec.; (2) ISPJAE, fifth-year student of Telecommunications and Electronics; (3) ISPJAE, Computer Engineer. Abstract: Staying protected against the different attacks that can occur on a network is becoming increasingly difficult, which makes security management much more complex for network administrators. To fulfil that task, managers use a set of solutions that automate their work by handling large volumes of information. This article briefly summarizes Security Information and Event Management (SIEM) systems, reviews their main characteristics, and continues with a description of the OSSIM AlienVault system, showing its architecture and components. Keywords: Management, Security, SIEM, OSSIM.

Cisco NAC Guest Server Installation and Configuration Guide, Release 2.1

Release 2.1, November 2012. Americas Headquarters: Cisco Systems, Inc., 170 West Tasman Drive, San Jose, CA 95134-1706, USA. http://www.cisco.com. Tel: 408 526-4000, 800 553-NETS (6387). Fax: 408 527-0883. Text Part Number: OL-28256-01. THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED "AS IS" WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

AlienVault OSSIM Installation Guide

AlienVault Installation Guide. AlienVault LC, 1901 S Bascom Avenue, Suite 220, Campbell, CA, 95008. T +1 408 465-9989. www.alienvault.com. Juan Manuel Lorenzo. Version 1.5. Copyright © AlienVault 2010. All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and publisher. Any trademarks referenced herein are the property of their respective holders. Table of Contents: Introduction; About this Installation Guide; AlienVault Professional SIEM; What is AlienVault Professional SIEM?; Basic Operation; Components; Detector; Collector; SIEM; Logger; Web interface; Before installing AlienVault; Installation Profiles; Sensor; Server; Framework; Database; All-in-one; Overview of the AlienVault installation procedure; Automated Installation; Custom Installation; What you will need; Professional Key; Role of the installed system; Network configuration for the Management Network card; Requirements; Hardware requirements; Network requirements; Obtaining AlienVault Installation Media; Downloading the installer from AlienVault Website; Creating a boot CD; Booting the installer; AlienVault Installation

Community College Security Design Considerations SBA

As community colleges embrace new communication and collaboration tools, transitioning from traditional classroom teaching into an Internet-based, media-rich education and learning environment, a whole new set of network security challenges arises. Community college network infrastructures must be adequately secured to protect students, staff, and faculty from harmful content, to guarantee confidentiality of private data, and to ensure the availability and integrity of the systems and data. Providing a safe and secure network environment is a top responsibility for community college administrators and community leaders. Security Design: Within the Cisco Community College reference design, the service fabric network provides the foundation on which all solutions and services are built to solve the business challenges facing community colleges. These business challenges include building a virtual learning environment, providing secure connected classrooms, ensuring safety and security, and operational efficiencies. [...]
• Network abuse: Use of non-approved applications by students, faculty, and staff; peer-to-peer file sharing and instant messaging abuse; and access to forbidden content
• Unauthorized access: Intrusions, unauthorized users, escalation of privileges, IP spoofing, and unauthorized access to restricted learning and administrative resources
• Data loss: Loss or leakage of student, staff, and faculty private data from servers and user endpoints
• Identity theft and fraud: Theft of student, staff, and faculty identity or fraud on servers and end users through phishing and E-mail spam
The Community College reference design accommodates a main campus and one or more remote smaller campuses interconnected over a metro Ethernet or managed WAN service. Each of these campuses may contain one or more buildings of varying sizes, as