Opinionated Software

Total Pages: 16

File Type: PDF, Size: 1020Kb


Recommended publications


Libertarian Party National Convention | First Sitting May 22-24, 2020 Online Via Zoom

Libertarian Party National Convention | First Sitting, May 22-24, 2020, Online via Zoom. Current status: Final. Approval date: 9/12/20. Prepared by Caryn Ann Harlos, LNC Secretary.

Table of contents:

- Convention First Sitting, Day 1: Opening; Call to Order; Convention Officials and Committee Chairs; Credentials Committee Report; Adoption of the Agenda for the First Sitting; Adjournment
- Convention First Sitting, Day 2: Opening; Credentials Committee Update; Presidential Nomination; Presidential Nomination Qualification Tokens; Presidential Nomination Speeches; Presidential Nomination Ballots 1, 2, 3, and 4; Adjournment
- Convention First Sitting, Day 3: Opening; Credentials Committee Update; Vice-Presidential Nomination; Vice-Presidential Nomination Qualification Tokens; Vice-Presidential Nomination Speeches; Address by Presidential Nominee Dr. Jo Jorgensen; Vice-Presidential Nomination Ballots 1, 2, and 3; Status of Taxation; Adjournment to Convention Second Sitting
- Special Thanks
- Appendix A: State-by-State Detail for Election Results
- Appendix B: Election Anomalies and Other Convention Observations

Legend: text to be inserted, text to be deleted, unchanged existing text. All vote results, points of order, substantive objections, and rulings will be set off by bold italics. The LPedia article for this convention can be found at: https://lpedia.org/wiki/NationalConvention2020 Recordings for this meeting can be found at the LPedia link.

UOCAVA Election Notice

Official Election Notice County of ALAMANCE 2020 PRIMARY Election Date: 03/03/2020 This is an official notice of an election to be conducted in ALAMANCE County on 03/03/2020. This notice contains a list of all of the ballot measures and federal, State, and local offices this county expects, as of this date, to be on the ballot on the date of the election. (See Attachment) An Election Notice will be prepared not later than 100 days before a regularly scheduled election that permits absentee voting, and as soon as practicable in the case of an election or vacancy election not regularly scheduled. For a second primary, an Election Notice will be prepared, no later than the day following the date the appropriate board of elections orders that a second primary be held. As soon as ballot styles are printed, this county board of elections will update this notice with the certified candidates for each office and ballot measures and referenda questions that will be on the ballot. For General Elections during even-numbered years, ballots will be printed 60 days prior to the election. For statewide primaries and other elections (except municipal elections), ballots will be printed 50 days prior to the election. Municipal ballots are available 30 days prior to Election Day. You must request an updated Election Notice. Transmitting a Federal Write-in Absentee Ballot: This notice may be used in conjunction with the federal write-in absentee ballot (FWAB). Covered military & overseas voters seeking to vote by absentee ballot may use the FWAB to register to vote, request an absentee ballot, and vote an official military-overseas ballot. -

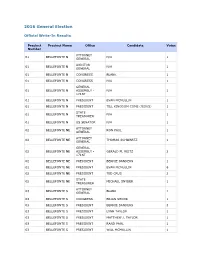

2016 General Write-In Results

2016 General Election Official Write-In Results

| Precinct Number | Precinct Name | Office | Candidate | Votes |
|---|---|---|---|---|
| 01 | BELLEFONTE N | ATTORNEY GENERAL | N/A | 1 |
| 01 | BELLEFONTE N | AUDITOR GENERAL | N/A | 1 |
| 01 | BELLEFONTE N | CONGRESS | BLANK | 1 |
| 01 | BELLEFONTE N | CONGRESS | N/A | 1 |
| 01 | BELLEFONTE N | GENERAL ASSEMBLY - 171ST | N/A | 1 |
| 01 | BELLEFONTE N | PRESIDENT | EVAN MCMULLIN | 1 |
| 01 | BELLEFONTE N | PRESIDENT | TILL KINGDOM COME (JESUS) | 1 |
| 01 | BELLEFONTE N | STATE TREASURER | N/A | 1 |
| 01 | BELLEFONTE N | US SENATOR | N/A | 1 |
| 02 | BELLEFONTE NE | ATTORNEY GENERAL | RON PAUL | 1 |
| 02 | BELLEFONTE NE | ATTORNEY GENERAL | THOMAS SCHWARTZ | 1 |
| 02 | BELLEFONTE NE | GENERAL ASSEMBLY - 171ST | GERALD M. REITZ | 2 |
| 02 | BELLEFONTE NE | PRESIDENT | BERNIE SANDERS | 1 |
| 02 | BELLEFONTE NE | PRESIDENT | EVAN MCMULLIN | 6 |
| 02 | BELLEFONTE NE | PRESIDENT | TED CRUS | 2 |
| 02 | BELLEFONTE NE | STATE TREASURER | MICHAEL SNYDER | 1 |
| 03 | BELLEFONTE S | ATTORNEY GENERAL | BLANK | 1 |
| 03 | BELLEFONTE S | CONGRESS | BRIAN SHOOK | 1 |
| 03 | BELLEFONTE S | PRESIDENT | BERNIE SANDERS | 3 |
| 03 | BELLEFONTE S | PRESIDENT | LYNN TAYLOR | 1 |
| 03 | BELLEFONTE S | PRESIDENT | MATTHEW J. TAYLOR | 1 |
| 03 | BELLEFONTE S | PRESIDENT | RAND PAUL | 1 |
| 03 | BELLEFONTE S | PRESIDENT | WILL MCMULLIN | 1 |
| 04 | BELLEFONTE SE | ATTORNEY GENERAL | JORDAN D. DEVIER | 1 |
| 04 | BELLEFONTE SE | CONGRESS | JORDAN D. DEVIER | 1 |
| 04 | BELLEFONTE SE | PRESIDENT | BERNIE SANDERS | 1 |
| 04 | BELLEFONTE SE | PRESIDENT | BURNEY SANDERS/MICHELLE OBAMA | 1 |
| 04 | BELLEFONTE SE | PRESIDENT | DR. BEN CARSON | 1 |
| 04 | BELLEFONTE SE | PRESIDENT | ELEMER FUDD | 1 |
| 04 | BELLEFONTE SE | PRESIDENT | EVAN MCMULLAN | 1 |
| 04 | BELLEFONTE SE | PRESIDENT | EVAN MCMULLIN | 2 |
| 04 | BELLEFONTE SE | PRESIDENT | JIMMY CARTER/GEORGE M.W. | |

Completeandleft

MEN WOMEN 1. JA Jason Aldean=American singer=188,534=33 Julia Alexandratou=Model, singer and actress=129,945=69 Jin Akanishi=Singer-songwriter, actor, voice actor, Julie Anne+San+Jose=Filipino actress and radio host=31,926=197 singer=67,087=129 John Abraham=Film actor=118,346=54 Julie Andrews=Actress, singer, author=55,954=162 Jensen Ackles=American actor=453,578=10 Julie Adams=American actress=54,598=166 Jonas Armstrong=Irish, Actor=20,732=288 Jenny Agutter=British film and television actress=72,810=122 COMPLETEandLEFT Jessica Alba=actress=893,599=3 JA,Jack Anderson Jaimie Alexander=Actress=59,371=151 JA,James Agee June Allyson=Actress=28,006=290 JA,James Arness Jennifer Aniston=American actress=1,005,243=2 JA,Jane Austen Julia Ann=American pornographic actress=47,874=184 JA,Jean Arthur Judy Ann+Santos=Filipino, Actress=39,619=212 JA,Jennifer Aniston Jean Arthur=Actress=45,356=192 JA,Jessica Alba JA,Joan Van Ark Jane Asher=Actress, author=53,663=168 …….. JA,Joan of Arc José González JA,John Adams Janelle Monáe JA,John Amos Joseph Arthur JA,John Astin James Arthur JA,John James Audubon Jann Arden JA,John Quincy Adams Jessica Andrews JA,Jon Anderson John Anderson JA,Julie Andrews Jefferson Airplane JA,June Allyson Jane's Addiction Jacob ,Abbott ,Author ,Franconia Stories Jim ,Abbott ,Baseball ,One-handed MLB pitcher John ,Abbott ,Actor ,The Woman in White John ,Abbott ,Head of State ,Prime Minister of Canada, 1891-93 James ,Abdnor ,Politician ,US Senator from South Dakota, 1981-87 John ,Abizaid ,Military ,C-in-C, US Central Command, 2003- -

Missouri House of Representatives

STATE REPRESENTATIVES 147 Missouri House of Representatives CATHERINE HANAWAY ROD JETTON House of Representatives Officers Speaker, Missouri House of Speaker Pro Tem, Missouri Representatives House of Representatives Catherine Hanaway, Speaker Rod Jetton, Speaker Pro Tem Jason Crowell, Majority Floor Leader Mark Wright, Assistant Majority Floor Leader Chuck Portwood, Majority Caucus Chair Annie Reinhart, Majority Caucus Secretary Chuck Purgason, Majority Whip Mark Abel, Minority Floor Leader Bill Ransdall, Assistant Minority Floor Leader Russ Carnahan, Minority Caucus Chair Terry Young, Minority Caucus Secretary Rick Johnson, Minority Whip Stephen S. Davis, Chief Clerk JASON CROWELL MARK ABEL Ralph Robinett, Sergeant-at-Arms Majority Floor Leader Minority Floor Leader Missouri House of Missouri House of Father David Buescher, Chaplain Representatives Representatives Rev. James Earl Jackson, Chaplain Goodman; Liese; Lipke; Luetkemeyer; Muckler; Committees of the House 2003 Salva; Smith (118); Spreng; Sutherland; Villa; Administration and Accounts: Miller, chair; Yates; Young Morris, vice chair; Behnen; Cooper (120); Appropriations–Health, Mental Health and Cunningham (145); Davis (122); Hampton; Social Services: Purgason, chair; Holand, vice Haywood; McKenna; Reinhart; Richard; Salva; chair; Bean; Brooks; Campbell; Cooper (155); Sander; Wagner Curls; Donnelly; El-Amin; Johnson (61); May; Agriculture: Myers, chair; Sander, vice chair; Page; Phillips; Portwood; Reinhart; Schaaf; Barnitz; Bean; Black; Bringer; Davis (122); Skaggs; Stefanick; -

UOCAVA Election Notice

Official Election Notice County of DARE 2020 PRIMARY Election Date: 03/03/2020 This is an official notice of an election to be conducted in DARE County on 03/03/2020. This notice contains a list of all of the ballot measures and federal, State, and local offices this county expects, as of this date, to be on the ballot on the date of the election. (See Attachment) An Election Notice will be prepared not later than 100 days before a regularly scheduled election that permits absentee voting, and as soon as practicable in the case of an election or vacancy election not regularly scheduled. For a second primary, an Election Notice will be prepared, no later than the day following the date the appropriate board of elections orders that a second primary be held. As soon as ballot styles are printed, this county board of elections will update this notice with the certified candidates for each office and ballot measures and referenda questions that will be on the ballot. For General Elections during even-numbered years, ballots will be printed 60 days prior to the election. For statewide primaries and other elections (except municipal elections), ballots will be printed 50 days prior to the election. Municipal ballots are available 30 days prior to Election Day. You must request an updated Election Notice. Transmitting a Federal Write-in Absentee Ballot: This notice may be used in conjunction with the federal write-in absentee ballot (FWAB). Covered military & overseas voters seeking to vote by absentee ballot may use the FWAB to register to vote, request an absentee ballot, and vote an official military-overseas ballot. -

SEC Cryptocurrency Enforcement: Q3 2013–Q4 2020

Economic and Financial Consulting and Expert Testimony SEC Cryptocurrency Enforcement Q3 2013–Q4 2020 Table of Contents Executive Summary 1 Number of Enforcement Actions 2 Allegations in Enforcement Actions 3 Allegations in Litigations vs. Administrative Proceedings 4 ICOs as Unregistered Securities Offering Allegations 6 Defendants in Litigations vs. Administrative Proceedings 7 Litigation Duration 9 Litigation Venue 10 Litigation Allegations by Venue 11 Appendices 12 Methodology 22 Endnotes 23 About the Author 26 i Cornerstone Research | SEC Cryptocurrency Enforcement: Q3 2013–Q4 2020 Table of Figures Figure 1: Number of SEC Cryptocurrency Enforcement Actions and Trading Suspensions 2 Figure 2: Allegations in SEC Cryptocurrency Enforcement Actions 3 Figure 3a: Allegations of Fraud in the Offer or Sale of Securities in SEC Cryptocurrency Enforcement Actions 4 Figure 3b: Allegations in SEC Cryptocurrency Litigations 5 Figure 3c: Allegations in SEC Cryptocurrency Administrative Proceedings 5 Figure 4: ICOs as Unregistered Securities Offering Allegations in SEC Cryptocurrency Enforcement Actions 6 Figure 5a: Defendants in SEC Cryptocurrency Litigations 7 Figure 5b: Respondents in SEC Cryptocurrency Administrative Proceedings 8 Figure 6: Time from Complaint to Resolution in SEC Cryptocurrency Litigations 9 Figure 7: Courts and Presiding Judges in SEC Cryptocurrency Litigations 10 Figure 8: Types of Allegations in SEC Cryptocurrency Litigations by Court Venue 11 Appendix 1: SEC Cryptocurrency Enforcement Actions 12 Appendix 2: SEC Cryptocurrency Trading Suspension Orders 19 Appendix 3: SEC Cryptocurrency Press Releases, Public Statements and Speeches, and Investor Alerts 20 ii Cornerstone Research | SEC Cryptocurrency Enforcement: Q3 2013–Q4 2020 Executive Summary The U.S. Securities and Exchange Commission (SEC) brought its first cryptocurrency-related enforcement action in July 2013. -

A Virtuális Tér Geopolitikája (Geopolitics of the Virtual Space)

Geopolitikai Tanács Közhasznú Alapítvány (Council on Geopolitics Public Benefit Foundation), www.cgeopol.hu. Authors: dr. Dornfeld László, Keleti Arthur, Barsy Miklós, Kilin Józsefné, Berki Gábor, dr. Pintér István. Műhelymunkák (Working Papers): A virtuális tér geopolitikája (Geopolitics of the Virtual Space), collected studies, issue 2016/1. In memoriam Rémy Leveau. Produced with the support of the Pallas Athéné Geopolitikai Alapítvány. Geopolitikai Tanács Közhasznú Alapítvány, Budapest, 2016. Edited by Pintér István. The studies published in this volume reflect the independent views of their authors, so their contents cannot be regarded as the official position of the Council on Geopolitics. These are open-access articles, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited. Citation: Name of the author (2016) Name of the article. In: PINTER, Istvan: Geopolitics of the Virtual Space. Council on Geopolitics Working Papers, 2016/1. pp. HU ISSN 1788-7895. ISBN 978-963-9816-34-3.

Eccles Exchange: David Eccles School of Business Alumni Magazine, Summer 2013

Eccles Exchange, David Eccles School of Business alumni magazine, Summer 2013. A Growing Legacy: James Lee Sorenson introduces the Sorenson Global Impact Investing Center. Former Fortune Brands CEO Norm Wesley's lifelong dedication to learning (page 10). Flying high with Azul Brazilian Airlines' David Neeleman (page 12). Meet new Undergraduate Dean Mark Parker (page 14).

Welcome to the second edition of Eccles Exchange, the alumni magazine for the David Eccles School of Business. The timing couldn't be more perfect for our second issue, as we are spending this summer eagerly awaiting the opening of Phase II of the Spencer Fox Eccles Business Building on September 6. But as you can see reading through these pages, we're not letting the anticipation keep us from working every day to make the David Eccles School of Business a place you can be proud of, through the noteworthy efforts of its students, staff, faculty and alumni. In this issue, you will read about how alumni like Norm Wesley, David Neeleman and Kyre Malkemes turned their educations at the University of Utah into the careers of their dreams. You'll see how student Chris Manning started working to support the David Eccles School of Business by organizing fundraising challenges with his peers for Student Giving Week. You'll meet our new associate dean of undergraduate affairs, Mark Parker, who brings exciting new ideas to the college this fall, and read about Dr. Kristina Diekmann's groundbreaking research on harassment in the workplace that garnered the school national media attention. Most prominently, in reading our cover story you'll learn about our new Sorenson Global Impact Investing Center, which will give students the chance to put their classroom learning to the test through hands-on work experiences around the world in places like India, Uganda and Guatemala, areas that will gain a better standard of living thanks to the efforts of students from the David Eccles School of Business.

Kriptoeszközök Világa a Jog És Gazdaság Szemszögéből (The World of Crypto-Assets from the Perspective of Law and Economics)

The World of Crypto-Assets from the Perspective of Law and Economics (Kriptoeszközök világa a jog és gazdaság szemszögéből): conference volume, selected studies. Pécsi Tudományegyetem Állam- és Jogtudományi Kar (University of Pécs, Faculty of Law), Pécs, 19 March 2021. Publisher: Pécsi Tudományegyetem Állam- és Jogtudományi Kar. Editorial board: Dr. Bujtár Zsolt, Dr. Szívós Alexander Roland, Dr. Gáspár Zsolt, Dr. Szilovics Csaba, Dr. Breszkovics Botond. Reviewed by: Dr. Glavanits Judit, Dr. Kuti Mónika, Dr. Gál István László, Dr. Szilovics Csaba, Dr. Bujtár Zsolt. ISBN: 978-963-429-684-3. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the publisher and the authors. Table of contents: Foreword; Yurt Enver: The colour of money; Szívós Alexander: A kriptoeszközök

D0000 Guilford County, North Carolina SAMPLE BALLOT March 3, 2020 *D0000*

Composite SAMPLE Ballot - Democratic Party Primary COMPOSITE DEMOCRATIC D0000 Guilford County, North Carolina SAMPLE BALLOT March 3, 2020 *D0000* BALLOT MARKING INSTRUCTIONS A. With the marking device provided or a black ball point pen, completely fill in the oval to the left of each candidate or selection of your choice as shown. B. If you tear, deface or wrongly mark this ballot, return it to request a replacement. FEDERAL OFFICES NC Lieutenant Governor NC District Court Judge (You may vote for ONE) District 18 Presidential Preference Seat 12 (You may vote for ONE) Allen Thomas (You may vote for ONE) Deval Patrick Bill Toole Gavin Reardon Bernie Sanders Terry Van Duyn Kelvin Smith Tom Steyer Chaz Beasley NC District Court Judge District 18 Elizabeth Warren Yvonne Lewis Holley Seat 13 Marianne Williamson Ron Newton (You may vote for ONE) Andrew Yang NC Auditor Brian Tomlin (You may vote for ONE) Michael Bennet Moshera Mills Joseph R. Biden Luis A. Toledo COUNTY OFFICES Michael R. Bloomberg Beth A. Wood Board of Commissioners District 5 Cory Booker NC Commissioner of Agriculture (You may vote for ONE) (You may vote for ONE) Pete Buttigieg Macon Sullivan Julian Castro Walter Smith Carly Cooke John K. Delaney Jenna Wadsworth Board of Commissioners District 8 Tulsi Gabbard Donovan Alexander Watson (You may vote for ONE) Amy Klobuchar NC Superintendent of Skip Alston Public Instruction No Preference (You may vote for ONE) Fahiym Hanna Board of Education US Senate Keith A. Sutton District 1 (You may vote for ONE) James Barrett (You may vote for ONE) Erica D. -

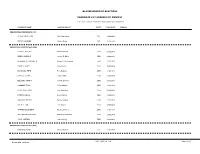

Candidate List Grouped by Contest Bladen Board Of

BLADEN BOARD OF ELECTIONS CANDIDATE LIST GROUPED BY CONTEST CRITERIA: Election: 03/03/2020, Show Contest w/o Candidate: N CANDIDATE NAME NAME ON BALLOT PARTY FILING DATE ADDRESS PRESIDENTIAL PREFERENCE (CST) BLANKENSHIP, DON Don Blankenship CST 12/04/2019 KRAUT, CHARLES Charles Kraut CST 12/04/2019 PRESIDENTIAL PREFERENCE (DEM) BENNET, MICHAEL Michael Bennet DEM 12/03/2019 BIDEN, JOSEPH R Joseph R. Biden DEM 12/03/2019 BLOOMBERG, MICHAEL R Michael R. Bloomberg DEM 12/03/2019 BOOKER, CORY Cory Booker DEM 12/03/2019 BUTTIGIEG, PETE Pete Buttigieg DEM 12/03/2019 CASTRO, JULIAN Julian Castro DEM 12/03/2019 DELANEY, JOHN K John K. Delaney DEM 12/03/2019 GABBARD, TULSI Tulsi Gabbard DEM 12/03/2019 KLOBUCHAR, AMY Amy Klobuchar DEM 12/03/2019 PATRICK, DEVAL Deval Patrick DEM 12/03/2019 SANDERS, BERNIE Bernie Sanders DEM 12/03/2019 STEYER, TOM Tom Steyer DEM 12/03/2019 WARREN, ELIZABETH Elizabeth Warren DEM 12/03/2019 WILLIAMSON, MARIANNE Marianne Williamson DEM 12/03/2019 YANG, ANDREW Andrew Yang DEM 12/03/2019 PRESIDENTIAL PREFERENCE (GRE) HAWKINS, HOWIE Howie Hawkins GRE 12/02/2019 CONT_CAND_rpt_3.rpt Page 1 of 11 Dec 20, 2019 12:10 pm BLADEN BOARD OF ELECTIONS CANDIDATE LIST GROUPED BY CONTEST CANDIDATE NAME NAME ON BALLOT PARTY FILING DATE ADDRESS PRESIDENTIAL PREFERENCE (LIB) ABRAMSON, MAX Max Abramson LIB 12/03/2019 PO BOX 746 SEABROOK, NH 03874 ARMSTRONG, KEN Ken Armstrong LIB 12/03/2019 10135 GOODWOOD BLVD BATON ROUGE, LA 70815 BEHRMAN, DAN Dan Behrman LIB 12/03/2019 PO BOX 29502 LAS VEGAS, NV 89126 18275 BLEVINS, KENNETH Kenneth Blevins