Modeling Student Learning and Forgetting for Optimally Scheduling Distributed Practice of Skills

Recommended publications

Memorization & Practice

York University, Department of Theatre. MEMORIZATION & PRACTICE. THEA 2010 VOICE I. Eric Armstrong, [email protected]. CFT 306, 4700 Keele St., Toronto ON M3J 1P3 • telephone: 416.736-2100 x77353 • http://www.yorku.ca/earmstro Memorization. What is Memorization? Committing something to memory is a process that all actors working outside of Improvisation need. Memory is a complicated process whereby images, sounds, ideas, words, phrases, and even times and places are encoded, so we can recall them later. To effectively learn “lines,” one may use several different kinds of memory at different times in the process of encoding, storing and recalling the text at hand. The basic learning of a single line begins with you using your working memory to get the line off the page and into your head. At this stage of the process, you can repeat the line while looking at an acting partner, or moving around the space, but it won’t last more than a few seconds. The next phase is when the text gets encoded into the short-term memory. These kinds of memories will stay in your mind for a few minutes at most.

Universidad De Almería

Universidad de Almería. Master's in Teaching for Compulsory Secondary Education and Baccalaureate, Vocational Training, and Language Teaching. Specialization in English Language. Academic year: 2015/2016. Exam session: June. Master's thesis: Spaced Retrieval Practice Applied to Vocabulary Learning in Secondary Education. Author: Héctor Daniel León Romero. Supervisor: Susana Nicolás Román. ABSTRACT Spaced retrieval practice is a learning technique which has been long studied (Ebbinghaus, 1885/1913; Gates, 1917) and long forgotten at the same time in education. It is based on the spacing and the testing effects. In recent reviews, spacing and retrieving practices have been highly recommended as there is ample evidence of their long-term retention benefits, even in educational contexts (Dunlosky, Rawson, Marsh, Nathan & Willingham, 2013). An experiment in a real secondary education classroom was conducted in order to show spaced retrieval practice effects in retention and students’ motivation. Results confirm the evidence: spaced retrieval practice showed higher long-term retention (26 days since first study session) of English vocabulary words compared to massed practice. Also, students’ motivation remained high at the end of the experiment. There is enough evidence to suggest educational institutions should promote the use of spaced retrieval practice in classrooms. RESUMEN (translated from Spanish) Spaced retrieval is a learning technique that has been studied for many years (Ebbinghaus, 1885/1913; Gates, 1917) and that has at the same time remained largely forgotten in education systems. It is based on the effects of spaced review and of testing. Recent literature reviews strongly recommend these practices, since they increase the retention of memories in long-term memory, even in educational contexts (Dunlosky, Rawson, Marsh, Nathan & Willingham, 2013).

The Spaced Interval Repetition Technique

The Spaced Interval Repetition Technique. What is it? The Spaced Interval Repetition (SIR) technique is a memorization technique largely developed in the 1960s and is a phenomenal way of learning information very efficiently that almost nobody knows about. It is based on the landmark research in memory conducted by the famous psychologist Hermann Ebbinghaus in the late 19th century. Ebbinghaus discovered that when we learn new information, we actually forget it very quickly (within a matter of minutes and hours) and the more time that passes, the more likely we are to forget it (unless it is presented to us again). This is why “cramming” for the test does not allow you to learn/remember everything for the test (and why you don’t remember any of it a week later). SIR software, notably first developed by Piotr A. Woźniak, is available to help you learn information like a superhero and even retain it for the rest of your life. The SIR technique can be applied to any kind of learning, but arguably works best when learning discrete pieces of information like dates, definitions, vocabulary, formulas, etc. Why does it work? Memory is a fickle creature. For most people, to really learn something, we need to rehearse it several times; this is how information gets from short-term memory to long-term memory. Research tells us that the best time to rehearse something (like those formulas for your statistics class) is right before you are about to forget it. Obviously, it is very difficult for us to know when we are about to forget an important piece of information.
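The idea in the excerpt above, reviewing an item just before it would be forgotten, can be illustrated with a small sketch. Everything specific here is an assumption made for illustration and does not come from the excerpt or from any SIR software: the exponential forgetting curve `exp(-t / stability)`, the 90% recall threshold, the starting stability of one day, and the rule that each successful review doubles stability.

```python
import math


def retention(days_since_review: float, stability: float) -> float:
    """Predicted recall probability under an assumed exponential forgetting curve."""
    return math.exp(-days_since_review / stability)


def next_interval(stability: float, threshold: float = 0.9) -> float:
    """Days until predicted recall falls to `threshold` (hypothetical 90% target)."""
    return -stability * math.log(threshold)


def schedule(reviews: int, stability: float = 1.0, growth: float = 2.0,
             threshold: float = 0.9) -> list[float]:
    """Gap in days before each of `reviews` successive reviews.

    Assumes every review succeeds and multiplies stability by `growth`
    (an illustrative rule, not a published algorithm).
    """
    gaps = []
    for _ in range(reviews):
        gaps.append(next_interval(stability, threshold))
        stability *= growth
    return gaps


if __name__ == "__main__":
    for i, gap in enumerate(schedule(5), start=1):
        print(f"review {i}: wait {gap:.2f} days")
```

Under these assumptions the gaps between reviews double each time, which is the expanding-interval pattern that spaced repetition schedules generally aim for; real schedulers estimate the forgetting rate per learner and per item rather than fixing it.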

Memorization Techniques

MEMORIZATION TECHNIQUES. Memorization Basics: Putting information in long-term memory takes TIME and ORGANIZATION. This helps us consolidate information so it remains connected in our brains. Information is best learned when it is meaningful, authentic, engaging, and humorous (we learn 30% more when we tie humor to memory!). Rehearsing or reciting information is the key to retaining it long-term. Types of Memory: There are three different types of memory: 1. Long-term: Permanent storehouse of information and can retain information indefinitely 2. Short-term: Working memory and can retain information for 20 to 30 seconds 3. Immediate: “In and out memory” which does not retain information. If you want to do well on exams, you need to focus on putting your information into long-term memory. Why is Memorization Necessary? Individuals who read a textbook chapter typically forget: 46% after one day; 79% after 14 days; 81% after 28 days. Sometimes, what we “remember” are invented memories. Events are reported that never took place; casual remarks are embellished, and major points are disregarded or downplayed. This is why it is important to take the time to improve your long-term memory. Memorization Tips: 1. Put in the effort and time to improve your memorizing techniques. 2. Pay attention and remain focused on the material. 3. Get it right the first time; otherwise, your brain has a hard time getting it right after you correct yourself. 4. Chunk or block information into smaller, more manageable parts. Read a couple of pages at a time; take notes on key ideas, and review and recite the information.

Learning Efficiency Correlates of Using Supermemo with Specially Crafted Flashcards in Medical Scholarship

Learning efficiency correlates of using SuperMemo with specially crafted Flashcards in medical scholarship. Authors: Jacopo Michettoni, Alexis Pujo, Daniel Nadolny, Raj Thimmiah. Abstract: Computer-assisted learning has been growing in popularity in higher education and in the research literature. A subset of these novel approaches to learning claim that predictive algorithms called Spaced Repetition can significantly improve retention rates of studied knowledge while minimizing the time investment required for learning. SuperMemo is a brand of commercial software and the editor of the SuperMemo spaced repetition algorithm. Medical scholarship is well known for requiring students to acquire large amounts of information in a short span of time. Anatomy, in particular, relies heavily on rote memorization. Using the SuperMemo web platform (https://www.supermemo.com/) we are creating a non-randomized trial, inviting medical students completing an anatomy course to take part. Usage of SuperMemo as well as a performance test will be measured and compared with a concurrent control group who will not be provided with the SuperMemo Software. Hypotheses: A) Increased average grade for memorization-intensive examinations. If spaced repetition positively affects average retrievability and stability of memory over the term of one to four months, then consistent users (defined in Criteria for inclusion: SuperMemo group) should obtain better grades than their peers on memorization-intensive examination material. B) Grades increase with consistency. There is a negative relationship between variability of daily usage of SRS and grades. C) Increased stability of memory in the long-term. If spaced repetition positively affects knowledge stability, consistent users should have more durable recall even after reviews of learned material have ceased.

Enhancing Exam Prep with Customized Digital Flashcards

Enhancing Exam Prep with Customized Digital Flashcards. Susan L. Murray, Julie Phelps, Hanan Altabbakh. Missouri University of Science and Technology; American University of Kuwait. Abstract: It is common for college classes to contain basic information that must be mastered before learning more difficult concepts or applications. Many students struggle with learning facts, terms, or fundamental concepts; in some situations, students are even unsure about what they should study. Flashcards are a well-established educational aid for learning basic information. Software programs are available that can generate flashcards electronically. This paper details an … Flashcards are traditionally constructed with paper index cards; questions are written or typed on one side of an index card, and the solution is on the other. Digital flashcards can simulate this design. They can be viewed at different speeds for better-spaced repetition application. Showing the next card at the proper timing ensures the appropriate lags between sessions or within the cards themselves (Pham, Chen, Nguyen, & Hwang, 2016). There are other advantages for students using digital flashcards. They are easy to share or post online. Wissman, Rawson, and Pyc (2012) showed that students have limited knowledge of the benefit of using flashcards in their learning process. The study revealed that 83% of students reported using flashcards to learn vocabulary, while 29% used them for key concepts. The study finds that students would significantly benefit from flashcards if they extend their application to a broader variety of learning objectives. The same study also revealed that few instructors recommend using flashcards as a learning strategy in their courses.

The Art of Loci: Immerse to Memorize

The Art of Loci: Immerse to Memorize. Anne Vetter, Daniel Götz, Sebastian von Mammen. Games Engineering, University of Würzburg, Germany. fanne.vetter,[email protected], [email protected] Abstract: There are uncountable things to be remembered, but most people were never shown how to memorize effectively. With this paper, the application The Art of Loci (TAoL) is presented, to provide the means for efficient and fun learning. In a virtual reality (VR) environment users can express their study material visually, either creating dimensional mind maps or experimenting with mnemonic strategies, such as mind palaces. We also present common memorization techniques in light of their underlying pedagogical foundations and discuss the respective features of TAoL in comparison with similar software applications. I. INTRODUCTION Before writing was common, information could only be preserved by memorization. Major ancient literature like The Iliad and The Odyssey were memorized, passed on through … assistance during comprehension (R6) of the information, e.g. by means of verbalization, should follow suit. Due to the heterogeneity of learners, the applied process and methods should be individualized (R7) and strike a productive balance between user’s choices and imposed learning activities (locus of control) (R8). For example, users should be able to control the pace of learning. Learning environments should arouse the intrinsic motivation of the user (R9). Fostering an awareness of the learner’s cognition and thus reflection on their learning process and strategies is also beneficial (metacognition) (R10). Further, a constructivist learning approach can be realized by the means to create artefacts such as representations, symbols or cues (R11). Finally, cooperative and collaborative learning environments can benefit the learning process (R12).

Memorization Strategies

Memorization Strategies. - Do you have a hard time memorizing and remembering information for tests? - Do you wish you had better strategies to help you memorize and remember information? - Do the things you’ve memorized seem to get mixed up in your head? - Do you sometimes feel the information you need is there, but that you just can’t get to it? Whether you want to remember facts for a test or the name of someone you just met, remembering information is a skill that can be developed! Strategies that Work. Use all of your senses: The more senses you involve in the learning process, the more likely you are to remember information. For example, to memorize a vocab word, formula, or equation, look at it, close your eyes, and try to see it in your mind. Then say it out loud and write it down. Look for logical connections: Examples: To remember that Homer wrote The Odyssey, just think, “Homer is an odd name.” To remember that all 3 angles of an acute triangle must be less than 90˚, think “When you’re over 90, you’re not cute anymore.” Create unforgettable images: Take the information you’re trying to remember and create a crazy, memorable picture in your mind. For example, to remember that the explorer Pizarro conquered the Inca empire, imagine a pizza covering up an ink spot. Create silly sentences: Use the 1st letter of the words you want to remember to make up a silly, ridiculous sentence. For example, to remember the names of the 8 planets in order (Mercury, Venus, Earth, Mars,

Osmosis Study Guide

How to Study in Medical School. Written by: Rishi Desai, MD, MPH • Brooke Miller, PhD • Shiv Gaglani, MBA • Ryan Haynes, PhD. Edited by: Andrea Day, MA • Fergus Baird, MA • Diana Stanley, MBA • Tanner Marshall, MS. Special Thanks to: Henry L. Roediger III, PhD • Robert A. Bjork, PhD • Matthew Lineberry, PhD. About Osmosis: Created by medical students at Johns Hopkins and the former Khan Academy Medicine team, Osmosis helps more than 250,000 current and future clinicians better retain and apply knowledge via a web- and mobile platform that takes advantage of cutting-edge cognitive techniques. © Osmosis, 2017. Much of the work you see us do is licensed under a Creative Commons license. We strongly believe educational materials should be made freely available to everyone and be as accessible as possible. We also want to thank the people who support us financially, so we’ve made this exclusive book for you as a token of our thanks. This book, unlike much of our work, is not under an open license and we reserve all our copyright rights on it. We ask that you not share this book liberally with your friends and colleagues. Any proceeds we generate from this book will be spent on creating more open content for everyone to use. Thank you for your continued support! You can also support us by: • Telling your classmates and friends about us • Donating to us on Patreon (www.patreon.com/osmosis) or YouTube (www.youtube.com/osmosis) • Subscribing to our educational platform (www.osmosis.org). Contents: Problem 1: Rapid Forgetting. Solution: Spaced Repetition and Interleaved Practice. Problem 2: Passive Studying. Solution: Testing Effect and "Memory Palace". Problem 3: Past Behaviors. Solution: Fogg Behavior Model and Growth Mindset. Introduction: Students don’t get into medical school by accident.

Neurocognitive Mechanisms of the “Testing Effect”: A Review

Trends in Neuroscience and Education 5 (2016) 52–66. Journal homepage: www.elsevier.com/locate/tine. Review article. Neurocognitive mechanisms of the “testing effect”: A review. Gesa van den Broek (a), Atsuko Takashima (a), Carola Wiklund-Hörnqvist (b, c), Linnea Karlsson Wirebring (b, c, d), Eliane Segers (a), Ludo Verhoeven (a), Lars Nyberg (b, d, e). (a) Radboud University, Behavioural Science Institute, 6500 HC Nijmegen, The Netherlands; (b) Umeå University, Umeå Center for Functional Brain Imaging (UFBI), 901 87 Umeå, Sweden; (c) Umeå University, Department of Psychology, 901 87 Umeå, Sweden; (d) Umeå University, Department of Integrative Medical Biology, 901 87 Umeå, Sweden; (e) Umeå University, Department of Radiation Sciences, 901 87 Umeå, Sweden. Article history: Received 16 October 2015; received in revised form 27 May 2016; accepted 30 May 2016; available online 2 June 2016. Keywords: Testing effect; Retrieval. Abstract: Memory retrieval is an active process that can alter the content and accessibility of stored memories. Of potential relevance for educational practice are findings that memory retrieval fosters better retention than mere studying. This so-called testing effect has been demonstrated for different materials and populations, but there is limited consensus on the neurocognitive mechanisms involved. In this review, we relate cognitive accounts of the testing effect to findings from recent brain-imaging studies to identify neurocognitive factors that could explain the testing effect. Results indicate that testing facilitates later performance through several processes, including effects on semantic memory representations, the selective strengthening of relevant associations and inhibition of irrelevant associations, as well as potentiation of subsequent learning.

Probabilistic Models of Student Learning and Forgetting

Probabilistic Models of Student Learning and Forgetting, by Robert Lindsey. B.S., Rensselaer Polytechnic Institute, 2008. A thesis submitted to the Faculty of the Graduate School of the University of Colorado in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Computer Science, 2014. This thesis, entitled Probabilistic Models of Student Learning and Forgetting and written by Robert Lindsey, has been approved for the Department of Computer Science. Michael Mozer, Aaron Clauset, Vanja Dukic, Matt Jones, Sriram Sankaranarayanan. The final copy of this thesis has been examined by the signatories, and we find that both the content and the form meet acceptable presentation standards of scholarly work in the above mentioned discipline. IRB protocol #0110.9, 11-0596, 12-0661. Lindsey, Robert (Ph.D., Computer Science). Thesis directed by Prof. Michael Mozer. This thesis uses statistical machine learning techniques to construct predictive models of human learning and to improve human learning by discovering optimal teaching methodologies. In Chapters 2 and 3, I present and evaluate models for predicting the changing memory strength of material being studied over time. The models combine a psychological theory of memory with Bayesian methods for inferring individual differences. In Chapter 4, I develop methods for delivering efficient, systematic, personalized review using the statistical models. Results are presented from three large semester-long experiments with middle school students which demonstrate how this “big data” approach to education yields substantial gains in the long-term retention of course material. In Chapter 5, I focus on optimizing various aspects of instruction for populations of students.

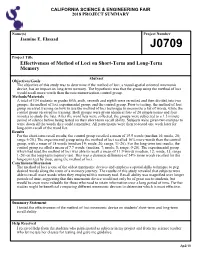

Effectiveness of Method of Loci on Short-Term and Long-Term Memory

CALIFORNIA SCIENCE & ENGINEERING FAIR 2018 PROJECT SUMMARY. Name: Jasmine E. Elasaad. Project Number: J0709. Project Title: Effectiveness of Method of Loci on Short-Term and Long-Term Memory. Abstract. Objectives/Goals: The objective of this study was to determine if the method of loci, a visual-spatial oriented mnemonic device, has an impact on long-term memory. The hypothesis was that the group using the method of loci would recall more words than the rote memorization control group. Methods/Materials: A total of 134 students in the fifth, sixth, seventh and eighth grades were recruited and then divided into two groups: the method of loci experimental group and the control group. Prior to testing, the method of loci group received training on how to use the method of loci technique to memorize a list of words, while the control group received no training. Both groups were given identical lists of 20 simple nouns and four minutes to study the lists. After the word lists were collected, the groups were subjected to a 1.5 minute period of silence before being tested on their short-term recall ability. Subjects were given two minutes to write down all the words they could remember. All participants were then re-tested one week later for long-term recall of the word list. Results: For the short-term recall results, the control group recalled a mean of 15.5 words (median, 16; mode, 20; range, 6-20). The experimental group using the method of loci recalled 16% more words than the control group, with a mean of 18 words (median, 19; mode, 20; range, 11-20).
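As a quick check of the arithmetic in the excerpt above, the "16% more words" figure follows from the two reported short-term means. The variable names are illustrative only; the two means are the only values taken from the excerpt.

```python
# Reported short-term recall means (words out of 20) from the project summary.
control_mean = 15.5
loci_mean = 18.0

# Relative gain of the method-of-loci group over the control group.
relative_gain = (loci_mean - control_mean) / control_mean

print(f"{relative_gain:.1%}")  # 16.1%
```

The gain is relative to the control mean; measured in absolute terms, the difference is 2.5 words on a 20-word list.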