IT8076 Software Testing Question Bank

Total pages: 16

File type: PDF, Size: 1020 Kb

Recommended publications

Learn Python the Hard Way

LEARN PYTHON THE HARD WAY, Third Edition. Zed Shaw’s Hard Way Series. Visit informit.com/hardway for a complete list of available publications. Zed Shaw’s Hard Way Series emphasizes instruction and making things as the best way to get started in many computer science topics. Each book in the series is designed around short, understandable exercises that take you through a course of instruction that creates working software. All exercises are thoroughly tested to verify they work with real students, thus increasing your chance of success. The accompanying video walks you through the code in each exercise. Zed adds a bit of humor and inside jokes to make you laugh while you’re learning. Make sure to connect with us! informit.com/socialconnect. LEARN PYTHON THE HARD WAY: A Very Simple Introduction to the Terrifyingly Beautiful World of Computers and Code. Third Edition. Zed A. Shaw. Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The author and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein.

A Combinatorial Approach to Detecting Buffer Overflow Vulnerabilities

A Combinatorial Approach to Detecting Buffer Overflow Vulnerabilities. Wenhua Wang, Yu Lei, Donggang Liu, David Kung, Christoph Csallner, Dazhi Zhang (Department of Computer Science and Engineering, The University of Texas at Arlington, Arlington, Texas 76019, USA, {wenhuawang,ylei,dliu,kung,csallner,dazhi}@uta.edu); Raghu Kacker, Rick Kuhn (Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, Maryland 20899, USA, {raghu.kacker,kuhn}@nist.gov). Abstract: Buffer overflow vulnerabilities are program defects that can cause a buffer to overflow at runtime. Many security attacks exploit buffer overflow vulnerabilities to compromise critical data structures. In this paper, we present a black-box testing approach to detecting buffer overflow vulnerabilities. Our approach is motivated by a reflection on how buffer overflow vulnerabilities are exploited in practice. In most cases the attacker can influence the behavior of a target system only by controlling its external parameters. Therefore, launching a successful attack often amounts to a clever way of tweaking the values of external parameters. We simulate the process performed by the attacker, but in a more systematic manner. Introduction (excerpt): Many security attacks exploit buffer overflow vulnerabilities to compromise critical data structures, so that they can influence or even take control over the behavior of a target system [27][32][33]. Our approach is a specification-based or black-box testing approach. That is, we generate test data based on a specification of the subject program, without analyzing the source code of the program. The specification required by our approach is lightweight and does not have to be formal. In contrast, white-box testing approaches [5][13] derive test

Types of Software Testing

Types of Software Testing. Software testing is the process of assessing a software product to identify differences between the actual result and the expected result, and to evaluate the characteristics of the product. The testing process evaluates the quality of the software. You know what testing does; there is no need to explain further. But are you aware of the types of testing? It is indeed a sea. Before we get to the types, let’s look at the standards that need to be maintained. Standards of Testing: Every test should meet the user requirements. Exhaustive testing isn’t possible, so we need the optimal amount of testing based on a risk assessment of the application. Every test to be conducted should be planned before it is executed. Testing follows the 80/20 rule, which states that 80% of defects originate from 20% of program components. Start testing with small parts and extend it to larger components. Software testers know about the different sorts of software testing. In this article, we cover most of the types of software testing that testers, developers, and QA teams commonly use in their everyday testing work. Let’s understand them! Black box Testing: Black box testing is a category of testing strategy that disregards the internal mechanism of the system and focuses on the output generated against any input and on the performance of the system.

Digital Rights Management and the Process of Fair Use

University of Cincinnati College of Law, University of Cincinnati College of Law Scholarship and Publications. Faculty Articles and Other Publications, Faculty Scholarship. 1-1-2006. Digital Rights Management and the Process of Fair Use. Timothy K. Armstrong, University of Cincinnati College of Law. Follow this and additional works at: http://scholarship.law.uc.edu/fac_pubs. Part of the Intellectual Property Commons. Recommended Citation: Armstrong, Timothy K., "Digital Rights Management and the Process of Fair Use" (2006). Faculty Articles and Other Publications. Paper 146. http://scholarship.law.uc.edu/fac_pubs/146. This Article is brought to you for free and open access by the Faculty Scholarship at University of Cincinnati College of Law Scholarship and Publications. It has been accepted for inclusion in Faculty Articles and Other Publications by an authorized administrator of University of Cincinnati College of Law Scholarship and Publications. For more information, please contact [email protected]. Harvard Journal of Law & Technology, Volume 20, Number 1, Fall 2006. DIGITAL RIGHTS MANAGEMENT AND THE PROCESS OF FAIR USE. Timothy K. Armstrong*. TABLE OF CONTENTS: I. INTRODUCTION: LEGAL AND TECHNOLOGICAL PROTECTIONS FOR FAIR USE OF COPYRIGHTED WORKS. II. COPYRIGHT LAW AND/OR DIGITAL RIGHTS MANAGEMENT. A. Traditional Copyright: The Normative Baseline. B. Contemporary Copyright: DRM as a "Speedbump" to Slow Mass Infringement.

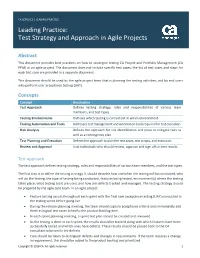

Leading Practice: Test Strategy and Approach in Agile Projects

CA SERVICES | LEADING PRACTICE. Leading Practice: Test Strategy and Approach in Agile Projects. Abstract: This document provides best practices on how to strategize testing CA Project and Portfolio Management (CA PPM) in an agile project. The document does not include specific test cases; the list of test cases and steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by end users who perform user acceptance testing (UAT). Concepts (concept: description). Test Approach: defines testing strategy, roles and responsibilities of various team members, and test types. Testing Environments: outlines which testing is carried out in which environment. Testing Automation and Tools: addresses test management and automation tools required for test execution. Risk Analysis: defines the approach for risk identification and plans to mitigate risks as well as a contingency plan. Test Planning and Execution: defines the approach to plan the test cases, test scripts, and execution. Review and Approval: lists individuals who should review, approve and sign off on test results. Test Approach: The test approach defines testing strategy, roles and responsibilities of various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the type of testing being conducted, features being tested, environment(s) where the testing takes place, what testing tools are used, and how defects are tracked and managed. The testing strategy should be prepared by the agile core team.

Software Testing: Essential Phase of SDLC and a Comparative Study of Software Testing Techniques

International Journal of System and Software Engineering, Volume 5, Issue 2, December 2017, ISSN: 2321-6107. Software Testing: Essential Phase of SDLC and a Comparative Study of Software Testing Techniques. Sushma Malik, Assistant Professor, Institute of Innovation in Technology and Management, Janak Puri, New Delhi, India. Email: [email protected]. Abstract: Software Development Life-Cycle (SDLC) follows the different activities that are used in the development of a software product. SDLC is also called the software process and it is the lifeline of any Software Development Model. Software processes decide the survival of a particular software development model in the market as well as in the software organization. Software testing is a process of finding software bugs while executing a program so that we get zero-defect software. The main objective of software testing is to evaluate the competence and usability of the software. Software testing is an important part of the SDLC because the quality of the software is obtained through testing. Many advancements have been made through various verification techniques, but software still needs to be fully tested before it is handed to the customer. Introduction (excerpt): In the software development process, the problem (software) can be divided into the following activities [3]: understanding the problem; deciding a plan for the solution; coding the designed solution; testing the definite program. These activities may be very complex for large systems, so each activity has to be broken into smaller sub-activities or steps. These steps are then handled effectively to produce a software project or system. The basic steps involved in software project development are: 1) Requirement Analysis and Specification: The goal of

Note 5. Testing

Computer Science and Software Engineering, University of Wisconsin - Platteville. Note 5. Testing. Yan Shi. Lecture Notes for SE 3730 / CS 5730. Outline: formal and informal reviews; levels of testing: unit (structural coverage analysis; input coverage testing, including equivalence class testing, boundary value analysis testing, CRUD testing and all pairs), integration, system, acceptance; regression testing. Static and Dynamic Testing. Static testing: the software is not actually executed; generally not detailed testing; includes reviews, inspections and walkthroughs. Dynamic testing: tests the dynamic behavior of the software; usually needs to run the software. Black, White and Grey Box Testing. Black box testing: assumes no knowledge about the code, structure or implementation. White box testing: fully based on knowledge of the code, structure or implementation. Grey box testing: tests with only partial knowledge of the implementation, e.g., algorithm review. Reviews. Static analysis and dynamic analysis. Black-box testing and white-box testing. Reviews are static white-box (?) testing. Formal design reviews: DR / FDR. Peer reviews: inspections and walkthroughs. Formal Design Review. The only reviews that are necessary for approval of the design product. The development team cannot continue to the next stage without this approval. May be conducted at any development milestone: requirement, system design, unit/detailed design, test plan, code, support manual, product release, installation plan, etc. FDR Procedure. Preparation: find review members (5-10); review in advance, possibly with the help of a checklist. A short presentation of the document. Comments by the review team. Verification and validation based on comments. Decision: full approval (immediate continuation to the next phase) or partial approval (immediate continuation for some part, major action items for the remainder).
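The equivalence class and boundary value analysis items listed in these notes can be illustrated with a small Python sketch. It is not taken from the lecture notes: the unit under test `is_valid_age`, the inclusive range 18 to 65, and the helper names are all hypothetical and chosen only for illustration.

```python
def is_valid_age(age):
    """Hypothetical unit under test: accepts ages in the inclusive range 18..65."""
    return 18 <= age <= 65


def boundary_values(low, high):
    """Boundary value analysis: values just outside, on, and just inside each bound."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_representatives(low, high):
    """Equivalence class testing: one representative per class of inputs."""
    return {
        "below_range": low - 1,         # invalid class: too small
        "in_range": (low + high) // 2,  # valid class
        "above_range": high + 1,        # invalid class: too large
    }


# Run the unit under test against both sets of inputs.
for value in boundary_values(18, 65):
    print("boundary", value, is_valid_age(value))

for name, value in equivalence_representatives(18, 65).items():
    print(name, value, is_valid_age(value))
```

The point of both techniques is to replace an exhaustive sweep over all integers with a handful of inputs that are most likely to expose off-by-one and range-handling defects.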

Efficient Black-Box JTAG Discovery

Ca’ Foscari University of Venice, Department of Environmental Sciences, Informatics and Statistics. Master’s Degree programme in Computer Science, Software Dependability and Cyber Security, Second Cycle (D.M. 270/2004). Master Thesis: Efficient Black-box JTAG Discovery. Supervisor: Prof. Riccardo Focardi. Assistant supervisor: Dott. Francesco Palmarini. Graduand: Riccardo Francescato, Matriculation Number 857609. Academic Year 2016 - 2017. Riccardo Francescato, Efficient Black-box JTAG Discovery, Master Thesis, © February 2018. Abstract: Embedded devices represent the most widespread form of computing device in the world. Almost every consumer product manufactured in the last decades contains an embedded system, e.g., refrigerators, smart bulbs, activity trackers, smart watches and washing machines. These computing devices are also used in safety and security-critical systems, e.g., autonomous driving cars, cryptographic tokens, avionics, alarm systems. Often, manufacturers do not take much into consideration the attack surface offered by low-level interfaces such as JTAG. In the last decade, the JTAG port has been used by the research community as an entry point for a number of attacks and reverse engineering techniques. Therefore, finding and identifying the JTAG port of a device or a de-soldered integrated circuit (IC) can be the first step required for performing a successful attack. In this work, we analyse the design of the JTAG port and develop methods and algorithms aimed at searching for the JTAG port. More specifically, we will provide an introduction to the problem and the related attacks already documented in the literature. Moreover, we will provide the reader with the basics necessary to understand this work (background on JTAG and basic electronic terminology).

Quality Assurance

QUALITY ASSURANCE Michael Weintraub Fall, 2015 Unit Objective • Understand what quality assurance means • Understand QA models and processes Definitions According to NASA • Software Assurance: The planned and systematic set of activities that ensures that software life cycle processes and products conform to requirements, standards, and procedures. • Software Quality: The discipline of software quality is a planned and systematic set of activities to ensure quality is built into the software. It consists of software quality assurance, software quality control, and software quality engineering. As an attribute, software quality is (1) the degree to which a system, component, or process meets specified requirements. (2) The degree to which a system, component, or process meets customer or user needs or expectations [IEEE 610.12 IEEE Standard Glossary of Software Engineering Terminology]. • Software Quality Assurance: The function of software quality that assures that the standards, processes, and procedures are appropriate for the project and are correctly implemented. • Software Quality Control: The function of software quality that checks that the project follows its standards, processes, and procedures, and that the project produces the required internal and external (deliverable) products. • Software Quality Engineering: The function of software quality that assures that quality is built into the software by performing analyses, trade studies, and investigations on the requirements, design, code and verification processes and results to assure that reliability, maintainability, and other quality factors are met. 
• Software Reliability: The discipline of software assurance that 1) defines the requirements for software controlled system fault/failure detection, isolation, and recovery; 2) reviews the software development processes and products for software error prevention and/or controlled change to reduced functionality states; and 3) defines the process for measuring and analyzing defects and defines/derives the reliability and maintainability factors.

A Confused Tester in Agile World … QA a Liability or an Asset

A CONFUSED TESTER IN AGILE WORLD … QA A LIABILITY OR AN ASSET. THIS IS A WORK OF FACTS & FINDINGS BASED ON TRUE STORIES OF ONE & MANY TESTERS!! Presented By Ashish Kumar. WHAT’S AHEAD • A STORY OF TESTING. • FROM THE MIND OF A CONFUSED TESTER. • FEW CASE STUDIES. • CHALLENGES IDENTIFIED. • SURVEY STUDIES. • GLOBAL RESPONSES. • SOLUTION APPROACH. • PRINCIPLES AND PRACTICES. • CONCLUSION & RECAP. • Q & A. A STORY OF TESTING IN AGILE… HAVE YOU HEARD ANY OF THESE?? • YOU DON’T NEED A DEDICATED SOFTWARE TESTING TEAM ON YOUR AGILE TEAMS • IF WE HAVE BDD, ATDD, TDD, UI AUTOMATION, UNIT TEST >> WHAT IS THE NEED OF MANUAL TESTING?? • WE WANT 100% AUTOMATION IN THIS PROJECT • TESTING IS BECOMING BOTTLENECK AND REASON OF SPRINT FAILURE • REPEATING REGRESSION IS A BIG TASK AND AN OVERHEAD. MICROSOFT HAS NO TESTERS NOT EVEN GOOGLE, FACEBOOK AND CISCO • 15K+ DEVELOPERS / 4K+ PROJECTS UNDER ACTIVE DEVELOPMENT / 50% CODE CHANGES PER MONTH. • 5500+ SUBMISSION PER DAY ON AVERAGE • 20+ SUSTAINED CODE CHANGES/MIN WITH 60+ PEAKS • 75+ MILLION TEST CASES RUN PER DAY. • DEVELOPERS OWN TESTING AND DEVELOPERS OWN QUALITY. • GOOGLE HAVE PEOPLE WHO COULD CODE AND WANTED TO APPLY THAT SKILL TO THE DEVELOPMENT OF TOOLS, INFRASTRUCTURE, AND TEST AUTOMATION. • IN A “MOBILE-FIRST AND CLOUD-FIRST WORLD.” • THE EFFORT, KNOWN AS AGILE SOFTWARE DEVELOPMENT, IS DESIGNED TO LOWER COSTS AND HONE OPERATIONS AS THE COMPANY FOCUSES ON BUILDING CLOUD AND MOBILE SOFTWARE, SAY ANALYSTS • MR. NADELLA TOLD BLOOMBERG THAT IT MAKES MORE SENSE TO HAVE DEVELOPERS TEST & FIX BUGS INSTEAD OF SEPARATE TEAM OF TESTERS TO BUILD CLOUD SOFTWARE. • SUCH AN APPROACH, A DEPARTURE FROM THE COMPANY’S TRADITIONAL PRACTICE OF DIVIDING

100% Pure Java Cookbook Use of Native Code

100% Pure Java Cookbook: Guidelines for achieving the 100% Pure Java Standard. Revision 4.0. Sun Microsystems, Inc., 901 San Antonio Road, Palo Alto, California 94303 USA. Copyrights: 2000 Sun Microsystems, Inc. All rights reserved. 901 San Antonio Road, Palo Alto, California 94043, U.S.A. This product and related documentation are protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or related documentation may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Restricted Rights Legend: Use, duplication, or disclosure by the United States Government is subject to the restrictions set forth in DFARS 252.227-7013 (c)(1)(ii) and FAR 52.227-19. The product described in this manual may be protected by one or more U.S. patents, foreign patents, or pending applications. Trademarks: Sun, the Sun logo, Sun Microsystems, Java, Java Compatible, 100% Pure Java, JavaStar, JavaPureCheck, JavaBeans, Java 2D, Solaris, Write Once, Run Anywhere, JDK, Java Development Kit Standard Edition, JDBC, JavaSpin, HotJava, The Network Is The Computer, and JavaStation are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and certain other countries. UNIX is a registered trademark in the United States and other countries, exclusively licensed through X/Open Company, Ltd. All other product names mentioned herein are the trademarks of their respective owners. Netscape and Netscape Navigator are trademarks of Netscape Communications Corporation in the United States and other countries. THIS PUBLICATION IS PROVIDED “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, OR NON-INFRINGEMENT.

Breeding Software Test Cases for Pairwise Testing Using GA

Global Journal of Computer Science and Technology, Vol. 10, Issue 4, Ver. 1.0, June 2010, Page 97. Breeding Software Test Cases for Pairwise Testing Using GA. Dr. Rakesh Kumar, Surjeet Singh. GJCST Classification: D.2.5, D.2.12. Abstract: All-pairs testing or pairwise testing is a specification-based combinatorial testing method, which requires that for each pair of input parameters to a system (typically, a software algorithm), each combination of valid values of these two parameters be covered by at least one test case [TAI02]. Pairwise testing has become an indispensable tool in a software tester’s toolbox. This paper pays special attention to usability of the pairwise testing technique. In this paper, we propose a new test generation strategy for pairwise testing using Genetic Algorithm (GA). We compare the result with the random testing and pairwise testing strategy and find that applying GA for pairwise testing performs better result. Information on at least 20 tools that can generate pairwise test cases, have so far ... Introduction (excerpt): ... has improved with testing, it is still not clear to the customers that they are receiving the return of the investment they demand. Software testing is an important activity of the software development process. It is a critical element of software quality assurance. A set of possible inputs for any software system can be too large to be tested exhaustively. Techniques like equivalence class partitioning and boundary-value analysis help convert even a large number of test levels into a much smaller set with comparable defect-detection capability. If software under test can be influenced by a number of such aspects, exhaustive testing again becomes impracticable.
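The all-pairs requirement stated in this abstract (every combination of values for every pair of parameters is covered by at least one test case) can be made concrete with a short Python sketch. Note that this is a simple greedy selection, not the genetic algorithm the paper proposes, and the parameter names and values (`browser`, `os`, `locale`) are hypothetical.

```python
from itertools import combinations, product


def required_pairs(params):
    """All (param_a, value_a, param_b, value_b) combinations that must be covered."""
    pairs = set()
    for (a, a_values), (b, b_values) in combinations(sorted(params.items()), 2):
        for va, vb in product(a_values, b_values):
            pairs.add((a, va, b, vb))
    return pairs


def pairs_of(test):
    """Pairs covered by one test case (a dict mapping parameter name to value)."""
    return {(a, test[a], b, test[b]) for a, b in combinations(sorted(test), 2)}


def greedy_pairwise(params):
    """Repeatedly pick the full combination that covers the most uncovered pairs."""
    names = sorted(params)
    candidates = [dict(zip(names, values))
                  for values in product(*(params[n] for n in names))]
    uncovered = required_pairs(params)
    suite = []
    while uncovered:
        best = max(candidates, key=lambda t: len(pairs_of(t) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best)
    return suite


# Hypothetical system with three parameters: 2 * 3 * 2 = 12 exhaustive combinations.
params = {
    "browser": ["chrome", "firefox"],
    "os": ["linux", "windows", "mac"],
    "locale": ["en", "de"],
}
suite = greedy_pairwise(params)
print(len(suite), "pairwise tests instead of 12 exhaustive ones")
for test in suite:
    print(test)
```

Because the greedy step enumerates every full combination, this sketch only scales to small parameter spaces; search-based generators such as the GA discussed in the paper are aimed at larger ones.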