Energy Efficiency Analysis and Optimization for Mobile Platforms. Grace Metri, Wayne State University

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Volume 2014, No. 1 Law Office Computing Page Puritas Springs Software Law Office Computing

Law Office Computing, Volume 2014, No. 1, $7.99, Puritas Springs Software. Best Home Pages: We think the importance of the home page has been greatly reduced due to the invention of tabbed browsers. Although conceived earlier in 1988, tabbed browsing didn’t go mainstream until the release of Microsoft’s Windows Internet Explorer 7 in 2006. Until then, your Home page was the sole portal through which you accessed the world wide web. Once tabbed browsers arrived on the scene it was possible to create a group of 4 home pages with each page being able to “specialize” in a specific area of your interest. Take the weather for example. Every good home page should have minimal weather information; (Continued on page 2). Inside this issue: Digital Inklings, Child Support, Spousal Support, Uniform DR Forms, Family Law Documents, Probate Forms, Ohio Estate Tax, U.S. Income Tax (1041), Ohio Fiduciary Tax, Ohio Adoption Forms, OH Guardianship Forms, OH Wrongful Death, Loan Amortizer, Advanced Techniques, Deed & Document Pro, Bankruptcy Forms, Law Office Management, OH Business Forms, Business Dissolutions. More Law Office Tech Tips: XX. Quick Launch. The patch of little icons to the right of the Start button is called the Quick Launch toolbar. Sure, you can put much-used shortcuts on ... the application that you’re working in is maximized. If you’re interested, take a look at the sidebar on page XX of this issue.

Surface Pro and Surface Pro 2 User Guide

Surface Pro and Surface Pro 2 User Guide. With Windows 8.1 Pro. Published: October 2013. Version 2.0. © 2013 Microsoft. All rights reserved. BlueTrack Technology, ClearType, Excel, Hotmail, Internet Explorer, Microsoft, OneNote, Outlook, PowerPoint, SkyDrive, Windows, Xbox and Xbox Live are registered trademarks of Microsoft Corporation. Surface, Skype and Wedge are trademarks of Microsoft Corporation. Bluetooth is a registered trademark of Bluetooth SIG, Inc. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. This document is provided "as is". Information in this document, including URLs and other references to Internet websites, may change without notice. Contents: Meet Surface Pro (About this guide; Surface Pro features); Set up your Surface Pro (Plug in and turn on).

Security Policy for FIPS 140-2 Validation

Cryptographic Primitives Library Security Policy for FIPS 140-2 Validation. Cryptographic Primitives Library (bcryptprimitives.dll and ncryptsslp.dll) in Microsoft Windows 8.1 Enterprise, Windows Server 2012 R2, Windows Storage Server 2012 R2, Surface Pro 3, Surface Pro 2, Surface Pro, Surface 2, Surface, Windows RT 8.1, Windows Phone 8.1, Windows Embedded 8.1 Industry Enterprise, StorSimple 8000 Series, Azure StorSimple Virtual Array Windows Server 2012 R2. DOCUMENT INFORMATION: Version Number 2.1, Updated On April 20, 2017. 30 March 2017. © 2017 Microsoft. All Rights Reserved. This Security Policy is non-proprietary and may be reproduced only in its original entirety (without revision). The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information presented after the date of publication. This document is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. This work is licensed under the Creative Commons Attribution-NoDerivs-NonCommercial License (which allows redistribution of the work). To view a copy of this license, visit http://creativecommons.org/licenses/by-nd-nc/1.0/ or send a letter to Creative Commons, 559 Nathan Abbott Way, Stanford, California 94305, USA. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document.

70-347.Microsoft

70-347.microsoft. Number: 70-347. Passing Score: 800. Time Limit: 120 min. https://www.gratisexam.com/ Sections: 1. Manage clients and end-user devices 2. Provision SharePoint Online site collections 3. Configure Exchange Online and Lync Online for end users 4. Plan for Exchange Online and Lync Online. Exam A. QUESTION 1 (DRAG DROP): You are the Office 365 administrator for a company. Employees are allowed to purchase a desktop computer of their choosing. The company’s owner has one desktop computer, two laptop computers, one Surface RT device, and two Surface Pro 2 devices. You plan to deploy Office ProPlus. You provide each user with an Office 365 ProPlus license. You need to deploy Office 365 ProPlus to the unlicensed devices using the fewest number of licenses possible. What should you do? To answer, move the appropriate license to the correct device. Each license may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. Select and Place: Correct Answer: Section: Manage clients and end-user devices. Explanation: Each Office 365 ProPlus license allows a user to install Office on up to five computers. If the user decides to install Office 365 ProPlus on a sixth computer, she will need to deactivate one of the first five. References: https://technet.microsoft.com/en-us/library/gg982959.aspx QUESTION 2 (HOTSPOT): Contoso, Ltd., has an Office 365 tenant.

2-In-1 Accessory Kit for Surface and Surface Pro

2-in-1 Accessory Kit for Surface and Surface Pro. Product ID: MSTS3MDPUGBK. www.startech.com, 1 800 265 1844. Get more from your Microsoft Surface™ Pro 4 or Surface Book (backward compatible with Surface Pro 3, Surface 3 and Surface Pro 2) by adding HDMI® and VGA video compatibility as well as wired network connectivity. Connect your Surface to virtually any display: You can maximize the versatility of your Microsoft Surface by ensuring that you can connect to virtually any monitor, projector or television. This accessory kit ensures that you're ready to connect to any HDMI or VGA display using the Mini DisplayPort output on your Surface Pro 4, Surface Pro 3 or Surface 3, making it the perfect solution when you're traveling between boardrooms, home and remote offices, trade-shows, hotels and conference centers. Hard-wire your network connection: Get the speed and reliability of a hardwired Ethernet connection with your Surface Pro 4. This Surface accessory kit includes a reliable USB 3.0 to Gigabit Ethernet adapter, which adds wired network support that's ideal for BYOD hot-desking, or use as an on-the-go dock solution to enhance your workstation. The adapter also includes an extra USB 3.0 port built-in, so you can add a USB peripheral device, such as a flash drive for added storage, or a mouse for increased control. Maximize portability: Your Microsoft Surface is one of the most versatile and portable computers in the world. Don't be tied down by accessories that require an external power adapter, or a bulky housing.

Surface Pro User Guide

Surface Pro and Surface Pro 2 User Guide. With Windows 8.1 Pro software. Published: January 2014. Version 2.0. © 2014 Microsoft. All rights reserved. BlueTrack Technology, ClearType, Excel, Hotmail, Internet Explorer, Microsoft, OneNote, Outlook, PowerPoint, SkyDrive, Windows, Xbox and Xbox Live are registered trademarks of Microsoft Corporation. Surface, Skype and Wedge are trademarks of Microsoft Corporation. Bluetooth is a registered trademark of Bluetooth SIG, Inc. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. This document is provided "as is". Information in this document, including URLs and other references to Internet websites, may change without notice. Contents: Introduction to Surface Pro (About this guide; Surface Pro features); Set up Surface Pro (Plug in and turn on).

Windows App Download Location Windows Location Service and Privacy

Windows location service and privacy. In the meantime, check out what's available for Windows 10 on the Windows 10 tab. Some apps need to know when you’re in a specific location to work correctly. For instance, if you’ve asked an app to remind you to buy bread when you’re in the grocery store, the app will need to know when you’re in the store. To give you maps to get you where you’re going, or to show you lists of shops or restaurants near you, many apps and services request location information from your device. That’s what the Windows 10 location service does: tells your apps and services where you are or where you’ve been, so that you can get info more relevant to you. When we collect data, we want to make sure it’s the right choice for you. That’s why we provide info about the location service and settings described here. To learn more about location and your privacy, see the location sections of the Microsoft Privacy Statement. How the location settings work. The device location setting enables certain Windows features such as auto-setting the time zone or Find my device to function properly. When the device location setting is enabled, the Microsoft location service will use a combination of global positioning service (GPS), nearby wireless access points, cell towers, and your IP address to determine your device’s location. Depending on the capabilities of your device, your device’s location can be determined with varying degrees of accuracy and may in some cases be determined precisely.

Windows Internals, Sixth Edition, Part 2

Windows® Internals, Sixth Edition, Part 2. The definitive guide, fully updated for Windows 7 and Windows Server 2008 R2. Delve inside Windows architecture and internals, and see how core components work behind the scenes. Led by a team of internationally renowned internals experts, this classic guide has been fully updated for Windows 7 and Windows Server® 2008 R2, and now presents its coverage in two volumes. As always, you get critical, insider perspectives on how Windows operates. And through hands-on experiments, you’ll experience its internal behavior firsthand: knowledge you can apply to improve application design, debugging, system performance, and support. In Part 2, you will: About the Authors. Mark Russinovich is a Technical Fellow in the Windows Azure™ group at Microsoft. He is coauthor of Windows Sysinternals Administrator’s Reference, co-creator of the Sysinternals tools available from Microsoft TechNet, and coauthor of the Windows Internals book series. David A. Solomon is coauthor of the Windows Internals book series and has taught his Windows internals class to thousands of Windows developers and IT professionals worldwide, including Microsoft staff. He is a regular speaker at Microsoft conferences, including TechNet and PDC. Alex Ionescu is a chief software architect and consultant expert in low-level system software, kernel development, security training, and reverse engineering. He teaches Windows internals courses with David Solomon, and is active in the security research community.

Surface Pro and Surface Pro 2 User Guide with Windows 8.1 Pro Software

Surface Pro and Surface Pro 2 User Guide With Windows 8.1 Pro Software. Published: March 2014. Version 2.0. © 2014 Microsoft. All rights reserved. BlueTrack Technology, ClearType, Excel, Hotmail, Internet Explorer, Microsoft, OneNote, Outlook, PowerPoint, OneDrive, Windows, Xbox, and Xbox Live are registered trademarks of Microsoft Corporation. Surface, Skype, and Wedge are trademarks of Microsoft Corporation. Bluetooth is a registered trademark of Bluetooth SIG, Inc. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. This document is provided “as-is.” Information in this document, including URL and other Internet Web site references, may change without notice. Contents: Meet Surface Pro (About this guide; Surface Pro features); Set up your Surface Pro (Plug in and turn on).

Creating Mobile Apps with Xamarin.Forms

PREVIEW EDITION. This excerpt provides early content from a book currently in development and is still in draft format. See additional notice below. PUBLISHED BY Microsoft Press, A Division of Microsoft Corporation, One Microsoft Way, Redmond, Washington 98052-6399. Copyright © 2014 Xamarin, Inc. All rights reserved. No part of the contents of this book may be reproduced or transmitted in any form or by any means without the written permission of the publisher. ISBN: 978-0-7356-9725-6. Microsoft Press books are available through booksellers and distributors worldwide. Please tell us what you think of this book at http://aka.ms/tellpress. This document is provided for informational purposes only and Microsoft makes no warranties, either express or implied, in this document. Information in this document, including URL and other Internet website references, is subject to change without notice. The entire risk of the use or the results from the use of this document remains with the user. This book expresses the author’s views and opinions. The information contained in this book is provided without any express, statutory, or implied warranties. Neither the authors, Microsoft Corporation, nor its resellers, or distributors will be held liable for any damages caused or alleged to be caused either directly or indirectly by this book. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation.

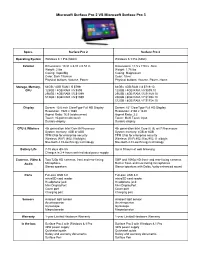

Microsoft Surface Pro 2 VS Microsoft Surface Pro 3

Microsoft Surface Pro 2 vs. Microsoft Surface Pro 3

| Specs | Surface Pro 2 | Surface Pro 3 |
| --- | --- | --- |
| Operating System | Windows 8.1 Pro (64-bit) | Windows 8.1 Pro (64-bit) |
| Exterior: dimensions | 10.81 x 6.81 x 0.53 in | 11.5 x 7.93 x 0.36 in |
| Exterior: weight | 2 lbs | 1.76 lbs |
| Exterior: casing | VaporMg | Magnesium |
| Exterior: color | Dark Titanium | Silver |
| Exterior: physical buttons | Volume, Power | Volume, Power, Home |
| Storage, Memory, CPU | 64GB / 4GB RAM / i5 $799; 128GB / 4GB RAM / i5 $899; 256GB / 8GB RAM / i5 $1099; 512GB / 8GB RAM / i5 $1599 | 64GB / 4GB RAM / i3 $719.10; 128GB / 4GB RAM / i5 $899.10; 256GB / 8GB RAM / i5 $1169.10; 256GB / 8GB RAM / i7 $1394.10; 512GB / 8GB RAM / i7 $1754.10 |
| Display: screen | 10.6 inch ClearType Full HD Display | 12 inch ClearType Full HD Display |
| Display: resolution | 1920 x 1080 | 2160 x 1440 |
| Display: aspect ratio | 16:9 (widescreen) | 3:2 |
| Display: touch | 10-point multi-touch | Multi Touch input |
| Display: durability | Durable display | Durable display |
| CPU & Wireless: processor | 4th generation Intel Core i5 Processor | 4th generation Intel Core i3, i5, or i7 Processors |
| CPU & Wireless: system memory | 4GB or 8GB | 4GB or 8GB |
| CPU & Wireless: security | TPM Chip for enterprise security | TPM Chip for enterprise security |
| CPU & Wireless: wireless | WiFi (802.11a/b/g/n) | WiFi 802.11ac/802.11 a/b/g/n |
| CPU & Wireless: Bluetooth | Bluetooth 4.0 Low Energy technology | Bluetooth 4.0 Low Energy technology |
| Battery Life | 7-15 days idle life; charges in 2-4 hours with included power supply | Up to 9 hours of web browsing |
| Cameras, Video & Audio | Two 720p HD cameras, front and rear-facing; Microphone | 5MP and 1080p HD front and rear-facing cameras; Built-in front |

Managing Windows 10 in the Enterprise

Managing Windows 10 in the enterprise. Lubomír Ošmera. A few tips to start: • Winver • Whoami • Shutdown -r -g -t 0 • Copying • The mighty right button • Mbrgpt.exe. MAP: • Hardware compatibility across the whole environment. Upgrade Analytics. How to get rid of Modern UI: • Get-AppxPackage | Remove-AppxPackage • Get-AppXProvisionedPackage -Online | Remove-AppxProvisionedPackage -Online • Get-appxpackage -allusers | Remove-AppxPackage. Only disable them? • Enterprise and Education only. Roaming profiles. Stored within profiles: – Control panel – Desktop – Background colors – Windows and application settings – Taskbar – What are the problems? Screenshot from the registry. Enabling the registry setting: • Key: HKEY_LOCAL_MACHINE\Software\Microsoft\Windows\CurrentVersion\Explorer • Value: SpecialRoamingOverrideAllowed • Type: REG_DWORD • Value: 1. Creators Update 1703: tilestartmenu will be replaced; it will no longer be saved to the SyncSettings folder; Modern UI does not transfer well yet. DEPLOYMENT WINDOWS 10. How to install unattended and carefree: 1) So that I do not have to click through every option 2) So that everything is already prepared in the image and superfluous items are removed 3) So that extra actions happen (the computer is already joined to the domain and has its wallpaper set) 4) So that I can run the installation on dozens of PCs at once. Windows System Image Manager: • Creating an unattended installation. Removing Modern UI. Adding drivers to the image. Updates: • Adding an update to an existing image • dism /image:"D:\Deployment\mount" /Add-Package /PackagePath:"D:\Deployment\updates\KB2751352\" Sysprep, capturing and applying an image: • C:\Windows\System32\sysprep • dism /Capture-Image