INSIGHT-SC-2014 Book of Abstracts

Recommended publications

Songs by Title Karaoke Night with the Patman

Songs By Title Karaoke Night with the Patman Title Versions Title Versions 10 Years 3 Libras Wasteland SC Perfect Circle SI 10,000 Maniacs 3 Of Hearts Because The Night SC Love Is Enough SC Candy Everybody Wants DK 30 Seconds To Mars More Than This SC Kill SC These Are The Days SC 311 Trouble Me SC All Mixed Up SC 100 Proof Aged In Soul Don't Tread On Me SC Somebody's Been Sleeping SC Down SC 10CC Love Song SC I'm Not In Love DK You Wouldn't Believe SC Things We Do For Love SC 38 Special 112 Back Where You Belong SI Come See Me SC Caught Up In You SC Dance With Me SC Hold On Loosely AH It's Over Now SC If I'd Been The One SC Only You SC Rockin' Onto The Night SC Peaches And Cream SC Second Chance SC U Already Know SC Teacher, Teacher SC 12 Gauge Wild Eyed Southern Boys SC Dunkie Butt SC 3LW 1910 Fruitgum Co. No More (Baby I'm A Do Right) SC 1, 2, 3 Redlight SC 3T Simon Says DK Anything SC 1975 Tease Me SC The Sound SI 4 Non Blondes 2 Live Crew What's Up DK Doo Wah Diddy SC 4 P.M. Me So Horny SC Lay Down Your Love SC We Want Some Pussy SC Sukiyaki DK 2 Pac 4 Runner California Love (Original Version) SC Ripples SC Changes SC That Was Him SC Thugz Mansion SC 42nd Street 20 Fingers 42nd Street Song SC Short Dick Man SC We're In The Money SC 3 Doors Down 5 Seconds Of Summer Away From The Sun SC Amnesia SI Be Like That SC She Looks So Perfect SI Behind Those Eyes SC 5 Stairsteps Duck & Run SC Ooh Child SC Here By Me CB 50 Cent Here Without You CB Disco Inferno SC Kryptonite SC If I Can't SC Let Me Go SC In Da Club HT Live For Today SC P.I.M.P. -

No Such Thing As a Sure Thing

My sermon last Sunday was my riff on Robert Hunter and Jerry Garcia’s poem and song Ripple. I’m playing off of yet another poem today, with the poet being a little closer to home this time. I speak of Ric Masten, who is now best known in UU circles as the writer and composer of the song Let It Be A Dance, as found in our gray hymnal. Above and beyond the gift of that one wonderful song, Ric had quite a remarkable troubadour ministry throughout a wide scope of our UU movement as the Rev. Ric Masten. From the late 1960s on he probably appeared in at least half of our UU congregations, as well as at numerous other UU gatherings and General Assemblies, bringing his magical ministry of poetry and song. He operated out of his home in California’s Big Sur country just south of Carmel, and his home congregation was the UU Church of Monterey and Carmel. I first met Ric very briefly at the Unitarian Church of All Souls in New York City in the early 1990s, when I was chaperoning a youth group trip there and he was doing a concert. This was back in the days when I could still sleep on church floors. I didn’t know he’d be performing at All Souls, and the group I was helping chaperone had another place to go that evening. So ours was just a quick “nice to meet you, love your work” kind of meet-up. -

Component 1 Appraising Music

MARK SCHEME – A-LEVEL MUSIC – 7272 – SPECIMEN 2018

A-Level Music 7272 Mark scheme, Specimen 2018, Version 1.0

Mark schemes are prepared by the Lead Assessment Writer and considered, together with the relevant questions, by a panel of subject teachers. This mark scheme includes any amendments made at the standardisation events which all associates participate in and is the scheme which was used by them in this examination. The standardisation process ensures that the mark scheme covers the students’ responses to questions and that every associate understands and applies it in the same correct way. As preparation for standardisation, each associate analyses a number of students’ scripts. Alternative answers not already covered by the mark scheme are discussed and legislated for. If, after the standardisation process, associates encounter unusual answers which have not been raised, they are required to refer these to the Lead Assessment Writer.

It must be stressed that a mark scheme is a working document, in many cases further developed and expanded on the basis of students’ reactions to a particular paper. Assumptions about future mark schemes on the basis of one year’s document should be avoided; whilst the guiding principles of assessment remain constant, details will change, depending on the content of a particular examination paper. Further copies of this mark scheme are available from aqa.org.uk.

Level of response marking instructions

Level of response mark schemes are broken down into levels, each of which has a descriptor. -

Karaoke Song Book Karaoke Nights Frankfurt’s #1 Karaoke

SONGS BY TITLE. THERE’S NO PARTY LIKE A WAXY’S PARTY! Want to sing? Simply find a song and give it to our DJ or host! If the song isn’t in the book, just ask; we may have it! We do get busy, so we may only be able to take one song! Sing, dance and be merry, but please take care of your belongings! Are you celebrating something? Let us know! Enjoying the party? Fancy trying out hosting or KJ (karaoke jockey)? Then speak to a member of our karaoke team. Most importantly, grab a drink, be yourself and have fun! Contact [email protected] for any other information. YOU ARE THE GIN TO MY TONIC. O'Reilly's Irish Pub.

English Songs: 10CC 30H!3 & Ke$ha A Perfect Circle Donna Blah Blah Blah A Stranger Dreadlock Holiday My First Kiss Pet I'm Mandy 311 The Noose I'm Not In Love Beyond The Gray Sky A Tribe Called Quest Rubber Bullets 3Oh!3 & Katy Perry Can I Kick It Things We Do For Love Starstrukk A1 Wall Street Shuffle 3OH!3 & Ke$ha Caught In Middle 1910 Fruitgum Factory My First Kiss Caught In The Middle Simon Says 3T Everytime 1975 Anything Like A Rose Girls 4 Non Blondes Make It Good Robbers What's Up No More Sex.... -

Songs by Artist

Sound Master Entertainment Songs by Artist smedenver.com Title Title Title .38 Special 2Pac 4 Him Caught Up In You California Love (Original Version) For Future Generations Hold On Loosely Changes 4 Non Blondes If I'd Been The One Dear Mama What's Up Rockin' Onto The Night Thugz Mansion 4 P.M. Second Chance Until The End Of Time Lay Down Your Love Wild Eyed Southern Boys 2Pac & Eminem Sukiyaki 10 Years One Day At A Time 4 Runner Beautiful 2Pac & Notorious B.I.G. Cain's Blood Through The Iris Runnin' Ripples 100 Proof Aged In Soul 3 Doors Down That Was Him (This Is Now) Somebody's Been Sleeping Away From The Sun 4 Seasons 10000 Maniacs Be Like That Rag Doll Because The Night Citizen Soldier 42nd Street Candy Everybody Wants Duck & Run 42nd Street More Than This Here Without You Lullaby Of Broadway These Are Days It's Not My Time We're In The Money Trouble Me Kryptonite 5 Stairsteps 10CC Landing In London Ooh Child Let Me Be Myself I'm Not In Love 50 Cent We Do For Love Let Me Go 21 Questions 112 Loser Disco Inferno Come See Me Road I'm On When I'm Gone In Da Club Dance With Me P.I.M.P. It's Over Now When You're Young 3 Of Hearts Wanksta Only You What Up Gangsta Arizona Rain Peaches & Cream Window Shopper Love Is Enough Right Here For You 50 Cent & Eminem 112 & Ludacris 30 Seconds To Mars Patiently Waiting Kill Hot & Wet 50 Cent & Nate Dogg 112 & Super Cat 311 21 Questions All Mixed Up Na Na Na 50 Cent & Olivia 12 Gauge Amber Beyond The Grey Sky Best Friend Dunkie Butt 5th Dimension 12 Stones Creatures (For A While) Down Aquarius (Let The Sun Shine In) Far Away First Straw Aquarius Let The Sun Shine In 1910 Fruitgum Co. -

Songs by Artist

Songs by Artist Title Title (Hed) Planet Earth 2 Live Crew Bartender We Want Some Pussy Blackout 2 Pistols Other Side She Got It +44 You Know Me When Your Heart Stops Beating 20 Fingers 10 Years Short Dick Man Beautiful 21 Demands Through The Iris Give Me A Minute Wasteland 3 Doors Down 10,000 Maniacs Away From The Sun Because The Night Be Like That Candy Everybody Wants Behind Those Eyes More Than This Better Life, The These Are The Days Citizen Soldier Trouble Me Duck & Run 100 Proof Aged In Soul Every Time You Go Somebody's Been Sleeping Here By Me 10CC Here Without You I'm Not In Love It's Not My Time Things We Do For Love, The Kryptonite 112 Landing In London Come See Me Let Me Be Myself Cupid Let Me Go Dance With Me Live For Today Hot & Wet Loser It's Over Now Road I'm On, The Na Na Na So I Need You Peaches & Cream Train Right Here For You When I'm Gone U Already Know When You're Young 12 Gauge 3 Of Hearts Dunkie Butt Arizona Rain 12 Stones Love Is Enough Far Away 30 Seconds To Mars Way I Fell, The Closer To The Edge We Are One Kill, The 1910 Fruitgum Co. 
Kings And Queens 1, 2, 3 Red Light This Is War Simon Says Up In The Air (Explicit) 2 Chainz Yesterday Birthday Song (Explicit) 311 I'm Different (Explicit) All Mixed Up Spend It Amber 2 Live Crew Beyond The Grey Sky Doo Wah Diddy Creatures (For A While) Me So Horny Don't Tread On Me Song List Generator® Printed 5/12/2021 Page 1 of 334 Licensed to Chris Avis 311 4Him First Straw Sacred Hideaway Hey You Where There Is Faith I'll Be Here Awhile Who You Are Love Song 5 Stairsteps, The You Wouldn't Believe O-O-H Child 38 Special 50 Cent Back Where You Belong 21 Questions Caught Up In You Baby By Me Hold On Loosely Best Friend If I'd Been The One Candy Shop Rockin' Into The Night Disco Inferno Second Chance Hustler's Ambition Teacher, Teacher If I Can't Wild-Eyed Southern Boys In Da Club 3LW Just A Lil' Bit I Do (Wanna Get Close To You) Outlaw No More (Baby I'ma Do Right) Outta Control Playas Gon' Play Outta Control (Remix Version) 3OH!3 P.I.M.P. -

2 Column Indented

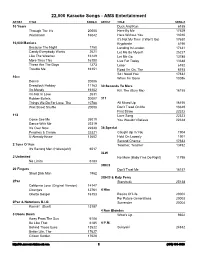

22,000 Karaoke Songs - AMS Entertainment ARTIST TITLE SONG # ARTIST TITLE SONG # 10 Years Duck And Run 6188 Through The Iris 20005 Here By Me 17629 Wasteland 16042 Here Without You 13010 It's Not My Time (I Won't Go) 17630 10,000 Maniacs Kryptonite 6190 Because The Night 1750 Landing In London 17631 Candy Everybody Wants 2621 Let Me Be Myself 25227 Like The Weather 16149 Let Me Go 13785 More Than This 16150 Live For Today 13648 These Are The Days 1273 Loser 6192 Trouble Me 16151 Road I'm On, The 6193 So I Need You 17632 10cc When I'm Gone 13086 Donna 20006 Dreadlock Holiday 11163 30 Seconds To Mars I'm Mandy 16152 Kill, The (Bury Me) 16155 I'm Not In Love 2631 Rubber Bullets 20007 311 Things We Do For Love, The 10788 All Mixed Up 16156 Wall Street Shuffle 20008 Don't Tread On Me 13649 First Straw 22322 112 Love Song 22323 Come See Me 25019 You Wouldn't Believe 22324 Dance With Me 22319 It's Over Now 22320 38 Special Peaches & Cream 22321 Caught Up In You 1904 U Already Know 13602 Hold On Loosely 1901 Second Chance 17633 2 Tons O' Fun Teacher, Teacher 13492 It's Raining Men (Hallelujah!) 6017 3LW 2 Unlimited No More (Baby I'ma Do Right) 11795 No Limits 6183 3Oh!3 20 Fingers Don't Trust Me 16157 Short Dick Man 1962 3OH!3 & Katy Perry 2Pac Starstrukk 25138 California Love (Original Version) 14147 Changes 12761 4 Him Ghetto Gospel 16153 Basics Of Life 20002 For Future Generations 20003 2Pac & Notorious B.I.G. -

WAVEY DAVEY B's KARAOKE LISTING SONG ARTIST 1234

WAVEY DAVEY B's KARAOKE LISTING SONG ARTIST 1234 Sumptin' New Coolio 16th Avenue Lacy J Dalton 1999 Prince 2 4 6 8 Motorway Tom Robinson Band 2 Pints Of Lager And A Packet Of Cr Splodgenessabounds 20th Century Boy T Rex 21st Century Girls 21st Century Girls 24 Hours From You Next Of Kin 48 Crash Suzi Quatro 5 4 3 2 1 Manfred Mann 5 6 7 8 Steps 50 Ways To Leave Your Lover Simon And Garfunkel 74 75 Connells 867 5309 Jenny Tommy Tutone 9 To 5 Dolly Parton 99 Red Balloons Nena Abilene George Hamilton Iv Achy Breaky Heart Billy Ray Cyrus Act Like You Know Fat Larry's Band Act Naturally Buck Owens Addams Groove M C Hammer Addicted To Love Robert Palmer Adios Amigo Jim Reeves Africa Toto After The Loving Engelbert Humperdinck Agadoo Black Lace Against All Odds Phil Collins Against The Wind Bob Seger Aida Sarah Mclachlan Ain't Goin' Down Till The Sun Comes Garth Brooks Ain't Gonna Bump No More Joe Tex Ain't Had No Lovin' Connie Smith Ain't Misbehavin' Tommy Bruce Ain't No Mountain High Enough Diana Ross Ain't No Stoppin' Us Now Mcfadden And Whitehead Ain't No Sunshine Bill Withers Ain't No Woman Four Tops Ain't Nobody Chaka Khan Ain't Nothing But A House Party Showstoppers Ain't Talkin' 'bout Love Van Halen Ain't That A Shame Fats Domino Ain't That Just The Way Lutricia Mcneal Ain't Too Proud To Beg Temptations Air That I Breathe Hollies Airport Motors Alfie Cilla Black Alison My Aim Is True Elvis Costello Alive And Kicking Simple Minds All About The Money Meja All Around My Hat Steeleye Span All Around The World Lisa Stansfield All Day And All -

ARTIST SONG MF CODE TRACK 10000 Maniacs These Are The

| Artist | Song | MF Code | Track |
|---|---|---|---|
| 10000 Maniacs | These Are The Days | PI035 | 2 |
| 10cc | Donna | SF090 | 15 |
| 10cc | Dreadlock Holiday | SF023 | 12 |
| 10cc | I'm Mandy | SF079 | 3 |
| 10cc | I'm Not In Love | SF001 | 9 |
| 10cc | Rubber Bullets | SF071 | 1 |
| 10cc | Things We Do For Love | SFMW832 | 11 |
| 10cc | Wall Street Shuffle | SFMW814 | 1 |
| 1910 Fruitgum Factory | Simon Says | SFGD047 | 10 |
| 1927 | Compulsory Hero | SFHH02-5 | 10 |
| 1975 | Chocolate | SF326 | 13 |
| 1975 | City | SF329 | 16 |
| 1975 | Love Me | SF358 | 13 |
| 1975 | Robbers | SF341 | 12 |
| 1975 | Ugh | SF360 | 9 |
| 2 Evisa | Oh La La La | SF114 | 10 |
| 2 Pac | California Love | SF049 | 4 |
| 2 Unlimited | No Limit | SFD901-3 | 11 |
| 2 Unlimited | No Limits | SF006 | 5 |
| 21st Century Girls | 21st Century Girls | SF140 | 10 |
| 2k | Fierce Sweet Love | SF159 | 4 |
| 3 S L | Take It Easy | SF191 | 9 |
| 30 Seconds To Mars | Kings And Queens | SFMW920 | 7 |
| 30h 3 Feat Katy Perry | Starstrukk | SF286 | 11 |
| 3oh3 | Don't Trust Me | SF281 | 15 |
| 3oh3 Feat Ke$ha | My First Kiss | EZH085 | 12 |
| 3t | Anything | SF049 | 2 |
| 4 Non Blondes | What's Up | SFHH02-9 | 15 |
| 411 | Dumb | SF221 | 12 |
| 411 | Teardrops | SF225 | 6 |
| 411 And Ghostface | On My Knees | SF219 | 4 |
| 5 Seconds Of Summer | Amnesia | SF342 | 12 |
| 5 Seconds Of Summer | Don't Stop | SF340 | 17 |
| 5 Seconds Of Summer | Good Girls | SF345 | 7 |
| 5 Seconds Of Summer | She Looks So Perfect | SF338 | 5 |
| 5 Seconds Of Summer | She's Kinda Hot | SF355 | 4 |
| 5 Star | Rain Or Shine | SFMW878 | 10 |
| 50 Cent | Candy Shop | SF230 | 10 |
| 50 Cent | In Da Club | SF206 | 9 |
| 50 Cent | Just A Lil' Bit | SF232 | 12 |
| 50 Cent And Justin Timberlake | Ayo Technology | EZH067 | 8 |
| 50 Cent And Nate Dogg | 21 Questions | SF207 | 4 |
| 50 Cent And Ne Yo | Baby By Me | EZH082 | 9 |
| 50 Cent Feat Eminem And Adam | My Life [clean] | SF324 | 6 |
| 50 Cent Feat Snoop Dogg And Y | Major Distribution (Clean) | SF325 | 13 |
| 5th Dimension | | | |

Sunfly Hits Series and Most Wanted Song Book

Sunfly Hits & Most Wanted Songs by Artist Karaoke Shack Song Books Title DiscID Title DiscID (Comic Relief) Vanessa Jenkins & Bryn West & Sir Tom Jones & 50 Cent Robin Gibb Candy Shop SF230-10 (Barry) Islands In The Stream SF278-16 In Da Club SF206-09 10cc Just A Lil' Bit SF232-12 Dreadlock Holiday SF023-12 50 Cent & Eminem & Adam Levine I'm Mandy SF079-03 My Life SF324-06 I'm Not In Love SF001-09 50 Cent & Nate Dogg Rubber Bullets SF071-01 21 Questions SF207-04 Things We Do For Love, The SFMW832-11 50 Cent & Snoop Dogg & Young Jeezy Wall Street Shuffle SFMW814-01 Major Distribution SF325-13 1910 Fruitgum Company 5th Dimension, The Simon Says SF028-10 Aquarius (Let The Sun Shine In) SFMW880-06 1975, The Up, Up And Away SFMW895-08 Chocolate SF326-13 6LACK & J. Cole City, The SF329-16 Pretty Little Fears (Clean) SF393-15 Love Me SF358-13 911 Robbers SF341-12 All I Want Is You SF121-07 Somebody Else SF367-13 How Do You Want Me To Love You SF123-01 Sound, The SF361-08 Little Bit More, A SF130-04 TOOTIMETOOTIMETOOTIME SF390-09 More Than A Woman SF127-08 UGH SF360-09 Party People (Friday Night) SF118-09 2 Eivissa Private Number SF138-06 Oh La La La SF114-10 98 Degrees 2 Unlimited Because Of You SF134-08 No Limit SF006-05 A.R. Rahman & The Pussycat Dolls & Nicole Scherzinger 21st Century Girls Jai Ho! (You Are My Destiny) SF278-13 21st Century Girls SF140-10 A1 2Pac & Dr Dre Caught In The Middle SF187-15 California Love SF049-04 Everytime SF155-05 30 Seconds To Mars Like A Rose SF161-06 Kings And Queens SFMW920-07 Make It Good SF192-11 Rescue -

Karaoke Song List

Pirate Radio Productions Songs by Artist Booking: 701-799-5672 Title Title Title 10 Years Aaliyah Adam Sandler Beautiful Try Again Chanukah Song, The 10cc Aaron Lewis Adamski Dreadlock Holiday Country Boy Killer I'm Not In Love Aaron Neville Adele 3 Doors Down Everybody Plays The Fool Chasing Pavements Away From The Sun Tell It Like It Is Make You Feel My Love Be Like That Aaron Tippin Rolling In The Deep Citizen Soldier If Her Lovin' Don't Kill Me Rumour Has It Duck And Run It's Way Too Close To Christmas To Be Set Fire To The Rain Here By Me This Far From You Skyfall Here Without You Kiss This Someone Like You It's Not My Time (I Won't Go) Ready To Rock Turning Tables Kryptonite There Ain't Nothin' Wrong With The Aerosmith Let Me Be Myself Radio Dream On Let Me Go What This Country Needs I Don't Want To Miss A Thing Live For Today Where The Stars And Stripes And The Walk This Way Eagle Fly When I'm Gone Afrojack Feat Wrabel Workin' Man's PH.D When You're Young Ten Feet Tall You've Got To Stand For Something 38 Special Afroman Aaron Tippin (W Thea Tippin) Back Where You Belong Because I Got High Love Like There's No Tomorrow Hold On Loosely A-Ha Abba If I'd Been The One Take On Me Dancing Queen Rockin' Into The Night Air Supply Does Your Mother Know Second Chance All Out Of Love Fernando 3LW Even The Nights Are Better Knowing Me Knowing You No More (Baby I'ma Do It Right) Every Woman In The World Mamma Mia 4Him Here I Am (Just When I Thought I Was Super Trouper For Future Generations Over You) Take A Chance On Me The Basics Of Life Just As I Am The Winner Takes It All 5 Seconds Of Summer Making Love Out Of Nothing At All Waterloo Amnesia One That You Love, The Abc Don't Stop Sweet Dreams The Look Of Love She Looks So Perfect Two Less Lonely People In The World ACDC 5 Stairsteps, The Akon Back In Black O-O-H Child Angel Highway To Hell 5th Dimension, The Be With You Moneytalks Aquarius Let The Sun Shine In Lonely You Shook Me All Night Long One Less Bell To Answer 
Right Now (Na Na Na) Ace Of Base Up Up And Away Akon Ftg.