New Second-Preimage Attacks on Hash Functions

Recommended publications

Second Preimage Attacks on Dithered Hash Functions

Elena Andreeva¹, Charles Bouillaguet², Pierre-Alain Fouque², Jonathan J. Hoch³, John Kelsey⁴, Adi Shamir²,³, and Sebastien Zimmer². ¹ SCD-COSIC, Dept. of Electrical Engineering, Katholieke Universiteit Leuven, [email protected] ² École normale supérieure (Département d’Informatique), CNRS, INRIA, {Charles.Bouillaguet,Pierre-Alain.Fouque,Sebastien.Zimmer}@ens.fr ³ Weizmann Institute of Science, {Adi.Shamir,Yaakov.Hoch}@weizmann.ac.il ⁴ National Institute of Standards and Technology, [email protected]

Abstract. We develop a new generic long-message second preimage attack, based on combining the techniques in the second preimage attacks of Dean [8] and Kelsey and Schneier [16] with the herding attack of Kelsey and Kohno [15]. We show that these generic attacks apply to hash functions using the Merkle-Damgård construction with only slightly more work than the previously known attack, but allow enormously more control of the contents of the second preimage found. Additionally, we show that our new attack applies to several hash function constructions which are not vulnerable to the previously known attack, including the dithered hash proposal of Rivest [25], Shoup’s UOWHF [26] and the ROX hash construction [2]. We analyze the properties of the dithering sequence used in [25], and develop a time-memory tradeoff which allows us to apply our second preimage attack to a wide range of dithering sequences, including sequences which are much stronger than those in Rivest’s proposals. Finally, we show that both the existing second preimage attacks [8, 16] and our new attack can be applied even more efficiently to multiple target messages; in general, given a set of many target messages with a total of $2^R$ message blocks, these second preimage attacks can find a second preimage for one of those target messages with no more work than would be necessary to find a second preimage for a single target message of $2^R$ message blocks.
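A rough back-of-the-envelope estimate may help explain the multi-target claim in this abstract. It is a sketch only, assuming the compression function behaves like a random function with $n$-bit chaining values (the symbol $n$ and this idealization are assumptions, not taken from the excerpt). The target messages together expose about $2^R$ intermediate chaining values, and the linking step of a long-message attack only needs to hit any one of them:

```latex
\Pr[\text{one trial block links}] \approx \frac{2^{R}}{2^{n}} = 2^{R-n},
\qquad
\mathbb{E}[\text{number of trials}] \approx \frac{1}{2^{R-n}} = 2^{\,n-R}.
```

Under this idealization the estimate depends only on the total number of exposed chaining values, not on whether they come from one long message or from many shorter ones.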

A Full Key Recovery Attack on HMAC-AURORA-512

Yu Sasaki, NTT Information Sharing Platform Laboratories, NTT Corporation, 3-9-11 Midori-cho, Musashino-shi, Tokyo, 180-8585 Japan, [email protected]

Abstract. In this note, we present a full key recovery attack on HMAC-AURORA-512 when 512-bit secret keys are used and the MAC is 512 bits long. Our attack requires $2^{257}$ queries and the off-line complexity is $2^{259}$ AURORA-512 operations, which is significantly less than the complexity of the exhaustive search for a 512-bit key. The attack can be carried out with a negligible amount of memory. Our attack can also recover the inner-key of HMAC-AURORA-384 with almost the same complexity as in HMAC-AURORA-512. This attack does not recover the outer-key of HMAC-AURORA-384, but universal forgery is possible by combining the inner-key recovery and 2nd-preimage attacks. Our attack exploits some weaknesses in the mode of operation. Keywords: AURORA, DMMD, HMAC, key recovery attack.

1 Description. 1.1 Mode of operation for AURORA-512. We briefly describe the specification of AURORA-512. Please refer to Ref. [2] for details. An input message is padded to be a multiple of 512 bits by the standard MD message padding; then the padded message is divided into 512-bit message blocks $(M_0, M_1, \ldots, M_{N-1})$. In AURORA-512, compression functions $F_k : \{0,1\}^{256} \times \{0,1\}^{512} \to \{0,1\}^{256}$ and $G_k : \{0,1\}^{256} \times \{0,1\}^{512} \to \{0,1\}^{256}$, two functions $MF : \{0,1\}^{512} \to \{0,1\}^{512}$ and $MFF : \{0,1\}^{512} \to \{0,1\}^{512}$, and two initial 256-bit chaining values $H_0^U$ and $H_0^D$ are defined.

Security of VSH in the Real World

Markku-Juhani O. Saarinen, Information Security Group, Royal Holloway, University of London, Egham, Surrey TW20 0EX, UK. [email protected]

Abstract. In Eurocrypt 2006, Contini, Lenstra, and Steinfeld proposed a new hash function primitive, VSH, very smooth hash. In this brief paper we offer commentary on the resistance of VSH against some standard cryptanalytic attacks, including preimage attacks and collision search for a truncated VSH. Although the authors of VSH claim only collision resistance, we show why one must be very careful when using VSH in cryptographic engineering, where additional security properties are often required.

1 Introduction. Many existing cryptographic hash functions were originally designed to be message digests for use in digital signature schemes. However, they are also often used as building blocks for other cryptographic primitives, such as pseudorandom number generators (PRNGs), message authentication codes, password security schemes, and for deriving keying material in cryptographic protocols such as SSL, TLS, and IPSec. These applications may use truncated versions of the hashes with an implicit assumption that the security of such a variant against attacks is directly proportional to the amount of entropy (bits) used from the hash result. An example of this is the HMAC-n construction in IPSec [1]. Some signature schemes also use truncated hashes. Hence we are driven to the following slightly nonstandard definition of security goals for a hash function usable in practice: 1. Preimage resistance. For essentially all pre-specified outputs $X$, it is difficult to find a message $Y$ such that $H(Y) = X$.

A (Second) Preimage Attack on the GOST Hash Function

Florian Mendel, Norbert Pramstaller, and Christian Rechberger, Institute for Applied Information Processing and Communications (IAIK), Graz University of Technology, Inffeldgasse 16a, A-8010 Graz, Austria. [email protected]

Abstract. In this article, we analyze the security of the GOST hash function with respect to (second) preimage resistance. The GOST hash function, defined in the Russian standard GOST-R 34.11-94, is an iterated hash function producing a 256-bit hash value. As opposed to most commonly used hash functions such as MD5 and SHA-1, the GOST hash function defines, in addition to the common iterated structure, a checksum computed over all input message blocks. This checksum is then part of the final hash value computation. For this hash function, we show how to construct second preimages and preimages with a complexity of about $2^{225}$ compression function evaluations and a memory requirement of about $2^{38}$ bytes. First, we show how to construct a pseudo-preimage for the compression function of GOST based on its structural properties. Second, this pseudo-preimage attack on the compression function is extended to a (second) preimage attack on the GOST hash function. The extension is possible by combining a multicollision attack and a meet-in-the-middle attack on the checksum. Keywords: cryptanalysis, hash functions, preimage attack.

1 Introduction. A cryptographic hash function $H$ maps a message $M$ of arbitrary length to a fixed-length hash value $h$. A cryptographic hash function has to fulfill the following security requirements: Collision resistance: it is practically infeasible to find two messages $M$ and $M^*$, with $M^* \neq M$, such that $H(M) = H(M^*)$.

The Hitchhiker's Guide to the SHA-3 Competition

Orr Dunkelman, Computer Science Department, 20 June, 2012

Outline
1 History of Hash Functions: A(n Extremely) Short History of Hash Functions; The Sad News about the MD/SHA Family
2 The First Phase of the SHA-3 Competition: Timeline; The SHA-3 First Round Candidates
3 The Second Round: The Second Round Candidates; The Second Round Process
4 The Third Round: The Finalists; Current Performance Estimates; The Outcome of SHA-3

A(n Extremely) Short History of Hash Functions
1976: Diffie and Hellman suggest to use hash functions to make digital signatures shorter.
1979: Salted passwords for UNIX (Morris and Thompson).
1983/4: Davies/Meyer introduce Davies-Meyer.
1986: Fiat and Shamir use random oracles.
1989: Merkle and Damgård present the Merkle-Damgård hash function.

NISTIR 7620 Status Report on the First Round of the SHA-3

NISTIR 7620: Status Report on the First Round of the SHA-3 Cryptographic Hash Algorithm Competition

Andrew Regenscheid, Ray Perlner, Shu-jen Chang, John Kelsey, Mridul Nandi, Souradyuti Paul. Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899-8930. September 2009. U.S. Department of Commerce, Gary Locke, Secretary. National Institute of Standards and Technology, Patrick D. Gallagher, Deputy Director.

Abstract. The National Institute of Standards and Technology is in the process of selecting a new cryptographic hash algorithm through a public competition. The new hash algorithm will be referred to as “SHA-3” and will complement the SHA-2 hash algorithms currently specified in FIPS 180-3, Secure Hash Standard. In October 2008, 64 candidate algorithms were submitted to NIST for consideration. Among these, 51 met the minimum acceptance criteria and were accepted as First-Round Candidates on Dec. 10, 2008, marking the beginning of the First Round of the SHA-3 cryptographic hash algorithm competition. This report describes the evaluation criteria and selection process, based on public feedback and internal review of the first-round candidates, and summarizes the 14 candidate algorithms announced on July 24, 2009 for moving forward to the second round of the competition. The 14 Second-Round Candidates are BLAKE, BLUE MIDNIGHT WISH, CubeHash, ECHO, Fugue, Grøstl, Hamsi, JH, Keccak, Luffa, Shabal, SHAvite-3, SIMD, and Skein.

MD4 Is Not One-Way

Gaëtan Leurent, École Normale Supérieure, Département d’Informatique, 45 rue d’Ulm, 75230 Paris Cedex 05, France. [email protected]

Abstract. MD4 is a hash function introduced by Rivest in 1990. It is still used in some contexts, and the most commonly used hash functions (MD5, SHA-1, SHA-2) are based on the design principles of MD4. MD4 has been extensively studied and very efficient collision attacks are known, but it is still believed to be a one-way function. In this paper we show a partial pseudo-preimage attack on the compression function of MD4, using some ideas from previous cryptanalysis of MD4. We can choose 64 bits of the output for the cost of $2^{32}$ compression function computations (the remaining bits are randomly chosen by the preimage algorithm). This gives a preimage attack on the compression function of MD4 with complexity $2^{96}$, and we extend it to an attack on the full MD4 with complexity $2^{102}$. As far as we know this is the first preimage attack on a member of the MD4 family. Keywords: MD4, hash function, cryptanalysis, preimage, one-way.

1 Introduction. Hash functions are fundamental cryptographic primitives used in many constructions and protocols. A hash function takes a bitstring of arbitrary length as input, and outputs a digest, a small bitstring of fixed length $n$: $F : \{0,1\}^* \to \{0,1\}^n$. When used in a cryptographic context, we expect a hash function to behave somewhat like a random oracle. The digest is used as a kind of fingerprint: it can be used to test whether two documents are equal, but should neither reveal any other information about the input nor be malleable.

A Second Preimage Attack on the Merkle-Damgard Scheme with a Permutation for Hash Functions

Shiwei Chen and Chenhui Jin, Institute of Information Science and Technology, Zhengzhou 450004, China

Keywords: Hash functions, MD construction, MDP, Multicollisions, Second preimage attack, Computational complexity.

Abstract: Using one kind of multicollisions of the Merkle-Damgard (MD) construction for hash functions proposed by Kelsey and Schneier, this paper presents a second preimage attack on the MDP construction, which is a simple variant of the MD scheme with a permutation for hash functions. Then we prove that the computational complexity of our second preimage attack is $k \times 2^{n/2+1} + 2^{n-k}$, which is less than $2^n$, where $n$ is the size of the hash value and $2^k + k + 1$ is the length of the target message.

1 INTRODUCTION. A cryptographic hash function $H$ maps a message $M$ with arbitrary length to a fixed-length hash value $h$. It has to satisfy the following three security requirements:
- Preimage resistance: For a given hash value $h$, it is computationally infeasible to find a message $M$ such that $h = H(M)$;
- Second preimage resistance: For a given message $M$, it is computationally infeasible to find a second message $M' \neq M$ such that $H(M') = H(M)$;
- Collision resistance: It is computationally infeasible to find two different messages $M$ and $M'$ such [...]

[...] into two kinds:
- Cryptanalytic attacks: Mainly apply to the compression functions of the hash functions. Using the internal properties of the compression functions, an adversary can attack the hash functions. For example, the collision attacks on MD-family proposed in (Xiaoyun and Hongbo, 2005);
- Generic attacks: Apply to the domain extension transforms directly with some assumptions on the compression functions. Examples are the long-message second preimage attack (Kelsey and Schneier, 2005), the herding attack (Kelsey and Kohno, 2006) and the attack on the MD with XOR-linear/additive checksum in (Gauravaram and Kelsey, 2007).
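The complexity expression quoted in this abstract, k × 2^(n/2+1) + 2^(n−k) for a target message of 2^k + k + 1 blocks, is easier to appreciate with concrete numbers. The following is a minimal sketch that only evaluates the stated formula; the function names are hypothetical, and restricting n to even values is an assumption made here to keep the arithmetic in exact integers.

```python
import math


def attack_cost(n: int, k: int) -> int:
    """Evaluate k * 2^(n/2 + 1) + 2^(n - k) exactly (n assumed even)."""
    if n % 2 != 0 or not 1 <= k <= n:
        raise ValueError("this sketch expects even n and 1 <= k <= n")
    return k * 2 ** (n // 2 + 1) + 2 ** (n - k)


def target_length_blocks(k: int) -> int:
    """Length of the target message in blocks: 2^k + k + 1."""
    return 2 ** k + k + 1


if __name__ == "__main__":
    n = 128
    for k in (8, 16, 32):
        cost = attack_cost(n, k)
        print(f"k={k:2d}  blocks={target_length_blocks(k)}  "
              f"log2(cost)={math.log2(cost):.2f}  (brute force: {n})")
```

For n = 128 and k = 32 the second term dominates, so the cost is roughly 2^96 compression function calls, compared with 2^128 for exhaustive search.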

Constructing Secure Hash Functions by Enhancing Merkle-Damgård

Praveen Gauravaram¹, William Millan¹, Ed Dawson¹, and Kapali Viswanathan². ¹ Information Security Institute (ISI), Queensland University of Technology (QUT), 2 George Street, GPO Box 2434, Brisbane QLD 4001, Australia, [email protected], {b.millan, e.dawson}@qut.edu.au ² Technology Development Department, ABB Corporate Research Centre, ABB Global Services Limited, 49, Race Course Road, Bangalore - 560 001, India, [email protected]

Abstract. Recently multi-block collision attacks (MBCA) were found on the Merkle-Damgård (MD)-structure based hash functions MD5, SHA-0 and SHA-1. In this paper, we introduce a new cryptographic construction called 3C devised by enhancing the MD construction. We show that the 3C construction is at least as secure as the MD construction against single-block and multi-block collision attacks. This is the first result of this kind showing a generic construction which is at least as resistant as MD against MBCA. To further improve the resistance of the design against MBCA, we propose the 3C+ design as an enhancement of 3C. Both these constructions are very simple adjustments to the MD construction and are immune to the straightforward extension attacks that apply to the MD hash function. We also show that 3C resists some known generic attacks that work on the MD construction. Finally, we compare the security and efficiency features of 3C with other MD based proposals. Keywords: Merkle-Damgård construction, MBCA, 3C, 3C+.

1 Introduction. In 1989, Damgård [2] and Merkle [13] independently proposed a similar iterative structure to construct a collision resistant cryptographic hash function $H : \{0,1\}^* \to \{0,1\}^t$ using a fixed length input collision resistant compression function $f : \{0,1\}^b \times \{0,1\}^t \to \{0,1\}^t$.
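The iterative structure described in this introduction, a hash H built by repeatedly applying a fixed-input-length compression function f to a chaining value and a message block, can be sketched in a few lines. This is an illustration under stated assumptions rather than any paper's construction: the block size b = 512 bits, state size t = 256 bits, the all-zero IV, the padding layout, and the use of SHA-256 as a stand-in for f are all choices made here, and the names `compress`, `md_pad`, and `md_hash` are hypothetical.

```python
import hashlib
import struct

BLOCK_BYTES = 64   # b = 512 bits per message block (assumed for illustration)
STATE_BYTES = 32   # t = 256 bits of chaining value (assumed for illustration)


def compress(chaining: bytes, block: bytes) -> bytes:
    """Stand-in compression function f: {0,1}^b x {0,1}^t -> {0,1}^t.

    SHA-256 over the concatenation is a placeholder, not a real design.
    """
    assert len(chaining) == STATE_BYTES and len(block) == BLOCK_BYTES
    return hashlib.sha256(chaining + block).digest()


def md_pad(message: bytes) -> bytes:
    """Append 0x80, zero bytes, and the 64-bit big-endian bit length."""
    bit_len = struct.pack(">Q", 8 * len(message))
    padded = message + b"\x80"
    padded += b"\x00" * ((-len(padded) - len(bit_len)) % BLOCK_BYTES)
    return padded + bit_len


def md_hash(message: bytes, iv: bytes = bytes(STATE_BYTES)) -> bytes:
    """Iterate the compression function over the padded message blocks."""
    state = iv
    padded = md_pad(message)
    for offset in range(0, len(padded), BLOCK_BYTES):
        state = compress(state, padded[offset:offset + BLOCK_BYTES])
    return state


if __name__ == "__main__":
    print(md_hash(b"abc").hex())
```

Every intermediate value of `state` is a chaining value; generic attacks on the construction, such as long-message second preimage attacks, work by finding a different message prefix that reaches one of them.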

CNIT 141 Cryptography for Computer Networks

6. Hash Functions. Updated 10-1-2020

Topics
• Secure Hash Functions
• Building Hash Functions
• The SHA Family
• BLAKE2
• How Things Can Go Wrong

Use Cases of Hash Functions
• Digital signatures
• Public-key encryption
• Message authentication
• Integrity verification
• Password storage

Fingerprinting
• Hash function creates a fixed-length "fingerprint"
• From input of any length
• Not encryption
• No key; cannot be reversed

Non-Cryptographic Hash Functions
• Provide no security; easily fooled by crafted input
• Used in hash tables
• To make table lookups faster
• To detect accidental errors
• Ex: CRC (Cyclic Redundancy Check)
• Used at layer 2 by Ethernet and Wi-Fi

Secure Hash Functions

Digital Signature
• Most common use case for hashing
• Message M hashed, then the hash is signed
• Hash is smaller so the signature computation is faster
• But the hash function must be secure

Unpredictability
• Two different inputs must always have different hashes
• Changing one bit in the input makes the hash totally different

Non-Uniqueness
• Consider an input message 1024 bits long
• And a hash 256 bits long
• There are $2^{1024}$ different messages
• But there are only $2^{256}$ different hashes
• So many different messages have the same hash

One-Wayness
• Hashing cannot be reversed
• An attacker cannot find M from H
• In principle, always true
• Many different messages have the same hash

Preimage Resistance
• An attacker given H cannot find any M with that hash
• This is preimage resistance
• Also called first preimage
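The non-uniqueness argument in these slides is a pigeonhole count: with 2^1024 possible inputs and only 2^256 outputs, each hash value has on average 2^768 preimages. The sketch below makes the same point at toy scale. It is an illustration only: `tiny_hash` is a hypothetical function that keeps just the top 16 bits of SHA-256, so hashing 2^16 + 1 distinct inputs is guaranteed to produce a repeat.

```python
import hashlib


def tiny_hash(data: bytes, bits: int = 16) -> int:
    """Toy hash: the top `bits` bits of SHA-256 (deliberately far too short)."""
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)


def find_collision(bits: int = 16):
    """Hash distinct counters until two of them share a toy hash value."""
    seen = {}
    # 2^bits + 1 distinct inputs cannot all map to distinct outputs.
    for counter in range(2 ** bits + 1):
        message = counter.to_bytes(4, "big")
        value = tiny_hash(message, bits)
        if value in seen:
            return seen[value], message, value
        seen[value] = message
    raise AssertionError("unreachable by the pigeonhole principle")


if __name__ == "__main__":
    first, second, value = find_collision()
    print(first.hex(), second.hex(), hex(value))
    # Average number of 1024-bit messages per 256-bit hash value.
    print(2 ** 1024 // 2 ** 256 == 2 ** 768)
```

In practice the loop stops long before the pigeonhole bound, after a few hundred inputs, because of the birthday effect.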

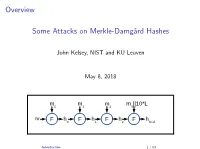

Some Attacks on Merkle-Damgård Hashes

John Kelsey, NIST and KU Leuven, May 8, 2018

[Figure: Merkle-Damgård chain. Message blocks m0, m1, m2, m3 (the last followed by 10* padding and the length L) are fed through F starting from iv, producing chaining values h0, h1, h2 and the final hash.]

Overview
• Cryptographic Hash Functions
• Thinking About Collisions
• Merkle-Damgård hashing
• Joux Multicollisions [2004]
• Long-Message Second Preimage Attacks [1999, 2004]
• Herding and the Nostradamus Attack [2005]

Why Talk About These Results?
• These are very visual results: looking at the diagram often explains the idea.
• The results are pretty accessible.
• Help you think about what's going on inside hashing constructions.

Part I: Preliminaries/Review
• Hash function basics
• Thinking about collisions
• Merkle-Damgård hash functions

Cryptographic Hash Functions
• Today, they're the workhorse of crypto.
• Originally: Needed for digital signatures
• You can't sign 100 MB message: need to sign something short.
• "Message fingerprint" or "message digest"
• Need a way to condense long message to short string.
• We need a stand-in for the original message.
• Take a long, variable-length message...
• ...and map it to a short string (say, 128, 256, or 512 bits).

Properties
What do we need from a hash function?
• Collision resistance
• Preimage resistance
• Second preimage resistance
• Many other properties may be important for other applications
Note: cryptographic hash functions are designed to behave randomly.

Collision Resistance
The core property we need.

New Preimage Attack on MDC-4

Deukjo Hong and Daesung Kwon

Abstract. In this paper, we provide some cryptanalytic results for double-block-length (DBL) hash modes of block ciphers, MDC-4. Our preimage attacks follow the framework of Knudsen et al.’s time/memory trade-off preimage attack on MDC-2. We find how to apply it to our objects. When the block length of the underlying block cipher is $n$ bits, the most efficient preimage attack on MDC-4 requires time and space about $2^{3n/2}$, which is to be compared to the previous best known preimage attack having time complexity of $2^{7n/4}$. Additionally, we propose an enhanced version of MDC-4, MDC-4*, based on a simple idea. It is secure against our preimage attack and previous attacks and has the same efficiency as MDC-4. Key Words. MDC4, Hash Function, Preimage.

1 Introduction. Block ciphers and hash functions are widely used popular cryptographic primitives. Many hash functions are often designed based on block-cipher-like components. Some of them can be regarded as hash modes of block ciphers. PGV modes [12] are representative single-block-length (SBL) hash modes, where the length of the chaining and hash values is the same as the block length of the underlying block cipher. There are several double-block-length (DBL) hash modes such as MDC-2, MDC-4 [5, 10], Hirose’s scheme [4], Abreast-DM, and Tandem-DM schemes [8], where the length of the chaining and hash value is twice as long as the block length of the underlying block cipher.