Live Coding Language Design

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

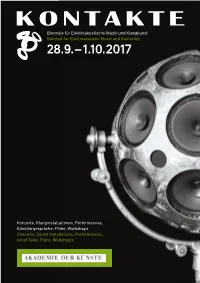

Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops

KONTAKTE '17: Biennial for Electroacoustic Music and Sound Art. Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops. 28 September to 1 October 2017, Akademie der Künste, Berlin.

A festival presented by the Studio for Electroacoustic Music of the Akademie der Künste, in collaboration with: Deutsche Gesellschaft für Elektroakustische Musik; Berliner Künstlerprogramm des DAAD; Universität der Künste Berlin; Hochschule für Musik Hanns Eisler Berlin; Technische Universität Berlin; Klangzeitort; Helmholtz Zentrum Berlin; Ensemble ascolta; Musik der Jahrhunderte, Stuttgart; Institut für Elektronische Musik und Akustik der Kunstuniversität Graz; Laboratorio Nacional de Música Electroacústica de Cuba; singuhr – projekte; Heroines of Sound; Lebenshilfe Berlin; Deutschlandfunk Kultur; France Culture.

Contents: Programme Overview; Concerts; Installations; Forum; Exhibition; Workshop; Biographies; Partners; Site Plan; Tickets and Information.

Studio für Elektroakustische Musik der Akademie der Künste, Hanseatenweg 10, 10557 Berlin. Phone: +49 (0) 30 200572236. www.adk.de/sem, www.adk.de/kontakte17, #kontakte17

The two years since the first edition of KONTAKTE in 2015 have been an eventful time for the Studio for Electroacoustic Music. In mid-2015 the studio received a generous in-kind donation of decommissioned studio equipment from Deutsche Telekom, which, after the necessary planning and maintenance work, has opened up new production possibilities since 2016.

An AI Collaborator for Gamified Live Coding Music Performances

Autopia: An AI Collaborator for Gamified Live Coding Music Performances. Norah Lorway, Matthew Jarvis, Arthur Wilson, Edward J. Powley, and John Speakman.

Abstract. Live coding is "the activity of writing (parts of) a program while it runs" [20]. One significant application of live coding is in algorithmic music, where the performer modifies the code generating the music in a live context. Utopia is a software tool for collaborative live coding performances, allowing several performers (each with their own laptop producing its own sound) to communicate and share code during a performance. We propose an AI bot, Autopia, which can participate in such performances, communicating with human performers through Utopia. This form of human-AI collaboration allows us to explore the implications of computational creativity from the perspective of live coding.

1 Background

[...] regards to this dynamic between the performer and audience, where the level of risk involved in the performance is made explicit. However, in the context of the system proposed in this paper, we are more concerned with the effect that this has on the performer themselves. Any performer at an algorave must be prepared to share their code publicly, which inherently encourages a mindset of collaboration and communal learning with live coders.

1.2 Collaborative live coding

Collaborative live coding takes its roots from laptop orchestra/ensemble such as the Princeton Laptop Orchestra (PLOrk), an ensemble of computer based instruments formed at Princeton University [19].

ChucK: A Strongly Timed Computer Music Language

ChucK: A Strongly Timed Computer Music Language. Ge Wang,∗ Perry R. Cook,† and Spencer Salazar∗. ∗Center for Computer Research in Music and Acoustics (CCRMA), Stanford University, 660 Lomita Drive, Stanford, California 94306, USA, {ge, spencer}@ccrma.stanford.edu. †Department of Computer Science, Princeton University, 35 Olden Street, Princeton, New Jersey 08540, USA.

Abstract: ChucK is a programming language designed for computer music. It aims to be expressive and straightforward to read and write with respect to time and concurrency, and to provide a platform for precise audio synthesis and analysis and for rapid experimentation in computer music. In particular, ChucK defines the notion of a strongly timed audio programming language, comprising a versatile time-based programming model that allows programmers to flexibly and precisely control the flow of time in code and use the keyword now as a time-aware control construct, and gives programmers the ability to use the timing mechanism to realize sample-accurate concurrent programming. Several case studies are presented that illustrate the workings, properties, and personality of the language. We also discuss applications of ChucK in laptop orchestras, computer music pedagogy, and mobile music instruments. Properties and affordances of the language and its future directions are outlined.

What Is ChucK?

ChucK (Wang 2008) is a computer music programming language. First released in 2003, it is designed to support a wide array of real-time and interactive tasks such as sound synthesis, physical modeling, gesture mapping, algorithmic composition, sonification, audio analysis, and live performance. [...] form the notion of a strongly timed computer music programming language.

Two Observations about Audio Programming

Time is intimately connected with sound and is [...]
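The time-based model described in the ChucK abstract above can be illustrated with a small sketch. This is neither ChucK code nor the authors' implementation: it is a hypothetical Python scheduler in which each concurrent routine (a "shred" in ChucK's vocabulary) is a generator, and yielding a duration stands in for advancing `now`. The names `run_shreds`, `emit`, `kick`, and `hat` are assumptions made for illustration only.

```python
import heapq


def run_shreds(shred_factories, until):
    """Run generator-based routines on one shared virtual clock.

    Each routine yields a duration in seconds, standing in for ChucK's
    `dur => now`. Virtual time advances only between resumptions, so
    events are ordered deterministically regardless of wall-clock speed.
    """
    now = 0.0
    log = []  # (virtual time, message) pairs in execution order

    def emit(message):
        # Record the message against the current virtual time.
        log.append((now, message))

    queue = []  # heap of (wake time, tie-breaker, generator)
    for order, factory in enumerate(shred_factories):
        heapq.heappush(queue, (0.0, order, factory(emit)))

    while queue and queue[0][0] < until:
        now, order, shred = heapq.heappop(queue)
        try:
            wait = next(shred)  # run until the routine advances time
        except StopIteration:
            continue  # routine finished; do not reschedule it
        heapq.heappush(queue, (now + wait, order, shred))
    return log


def kick(emit):
    while True:
        emit("kick")
        yield 0.5  # wait half a second of virtual time


def hat(emit):
    while True:
        emit("hat")
        yield 0.25  # wait a quarter second of virtual time


events = run_shreds([kick, hat], until=1.0)
for time, name in events:
    print(f"{time:.2f}s {name}")
```

The design choice worth noting is that the routines never consult the system clock: the scheduler alone decides what "now" is, which is one way to read the abstract's claim that timing is controlled precisely from within the code.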

Confessions of a Live Coder

Proceedings of the International Computer Music Conference 2011, University of Huddersfield, UK, 31 July - 5 August 2011. CONFESSIONS OF A LIVE CODER. Thor Magnusson, ixi audio & Faculty of Arts and Media, University of Brighton, Grand Parade, BN2 0JY, UK.

ABSTRACT

This paper describes the process involved when a live coder decides to learn a new musical programming language of another paradigm. The paper introduces the problems of running comparative experiments, or user studies, within the field of live coding. It suggests that an autoethnographic account of the process can be helpful for understanding the technological conditioning of contemporary musical tools. The author is conducting a larger research project on this theme: the part presented in this paper describes the adoption of a new musical programming environment, Impromptu [35], and how this affects the author's musical practice.

1. INTRODUCTION

[...] upon writing music with programming languages for very specific reasons and those are rarely comparable. At ICMC 2007, in Copenhagen, I met Andrew Sorensen, the author of Impromptu and member of the aa-cell ensemble that performed at the conference. We discussed how one would explore and analyse the process of learning a new programming environment for music. One of the prominent questions here is how a functional programming language like Impromptu would influence the thinking of a computer musician with background in an object orientated programming language, such as SuperCollider? Being an avid user of SuperCollider, I was intrigued by the perplexing code structure and work patterns demonstrated in the aa-cell performances using Impromptu [36]. I subsequently decided to embark upon studying this environment and perform a reflexive study of the process.

Spaces to Fail In: Negotiating Gender, Community and Technology in Algorave

Feature Article. Joanne Armitage, University of Leeds (UK).

Abstract: Algorave presents itself as a community that is open and accessible to all, yet historically, there has been a lack of diversity on both the stage and dance floor. Through women-only workshops, mentoring and other efforts at widening participation, the number of women performing at algorave events has increased. Grounded in existing research in feminist technology studies, computing education and gender and electronic music, this article unpacks how techno, social and cultural structures have gendered algorave. These ideas will be elucidated through a series of interviews with women participating in the algorave community, to centrally argue that gender significantly impacts an individual's ability to engage and interact within the algorave community. I will also consider how live coding, as an embodied techno-social form, is represented at events and hypothesise as to how it could grow further as an inclusive and feminist practice.

Keywords: gender; algorave; embodiment; performance; electronic music

Joanne Armitage lectures in Digital Media at the School of Media and Communication, University of Leeds. Her work covers areas such as physical computing, digital methods and critical computing. Currently, her research focuses on coding practices, gender and embodiment. In 2017 she was awarded the British Science Association's Daphne Oram award for digital innovation. She is a current recipient of Sound and Music's Composer-Curator fund. Outside of academia she regularly leads community workshops in physical computing, live coding and experimental music making. Joanne is an internationally recognised live coder and contributes to projects including laptop ensemble, OFFAL and algo-pop duo ALGOBABEZ.

Estuary: Browser-Based Collaborative Projectional Live Coding of Musical Patterns

David Ogborn, Jamie Beverley, and Luis Navarro del Angel (McMaster University); Eldad Tsabary (Concordia University); Alex McLean (Deutsches Museum).

ABSTRACT

This paper describes the initial design and development of Estuary, a browser-based collaborative projectional editing environment built on top of the popular TidalCycles language for the live coding of musical pattern. Key features of Estuary include a strict form of structure editing (making syntactical errors impossible), a click-only border-free approach to interface design, explicit notations to modulate the liveness of different parts of the code, and a server-based network collaboration system that can be used for many simultaneous collaborative live coding performances, as well as to present different views of the same live coding activity. Estuary has been developed using Reflex-DOM, a Haskell-based framework for web development whose strictness promises robustness and security advantages.

1 Goals

1.1 Projectional Editing and Live Coding

The interface of the text editor is focused on alphabetic characters rather than on larger tokens that directly represent structures specific to a certain way of notating algorithms. Programmers using text editors, including live coding artists using text-based programming languages as their media, then have to devote cognitive resources to dealing with various kinds of mistakes and delays, including but not limited to accidentally typing the wrong character, not recalling the name of an entity, attempting to use a construct from one language in another, and misrecognition of "where one is" in the hierarchy of structures, among many other possible challenges.

Live Coding Practice

Proceedings of the 2007 Conference on New Interfaces for Musical Expression (NIME07), New York, NY, USA. Live Coding Practice. Click Nilson, University of Sussex, Department of Informatics, Falmer, Brighton, BN1 9QH, +44 (0)1273 877837.

ABSTRACT

Live coding is almost the antithesis of immediate physical musicianship, and yet, has attracted the attentions of a number of computer-literate musicians, as well as the music-savvy programmers that might be more expected. It is within the context of live coding that I seek to explore the question of practising a contemporary digital musical instrument, which is often raised as an aside but more rarely carried out in research (though see [12]). At what stage of expertise are the members of the live coding movement, and what practice regimes might help them to find their true potential?

Keywords: Practice, practising, live coding

I wish to discuss the practice of live coding, not only as an area of investigation and activity, but also in the sense of actually practising to improve ability. I shall describe the results of a month long experiment where live coding was undertaken every day in an attempt to cast live coding as akin to more familiar forms of musical practice.

Exercises will be discussed that may be found efficacious to improve live coding abilities. In relation to these, literature from developmental and educational psychology (both of music and computing), and theories of expertise and skill acquisition, were consulted. The possibility of expert performance [7] in live coding is discussed.

I should state at the outset that I will be focusing primarily on one particular form of live coding in this paper, that of programming a computer on a concert platform as a performance act, often as an unaccompanied soloist.

Virtual Agents in Live Coding: a Short Review

FINAL DRAFT. Submitted to eContact! (https://econtact.ca), special issue 21.1: Take Back the Stage: Live coding, live audiovisual, laptop orchestra…

Virtual Agents in Live Coding: A Short Review. Contents: Introduction; Conceptual Foundations (Broader Context; Virtuality, Agency, and Virtual Agency; Machine Musicianship, Musical Metacreation, and Musical Cyborgs; Theoretical Frameworks); Examples in Practice (Betablocker; ...)

QuaverSeries: A Live Coding Environment for Music Performance Using Web Technologies

Qichao Lan and Alexander Refsum Jensenius, RITMO, Department of Musicology, University of Oslo.

ABSTRACT

QuaverSeries consists of a domain-specific language and a single-page web application for collaborative live coding in music performances. Its domain-specific language borrows principles from both programming and digital interface design in its syntax rules, and hence adopts the paradigm of functional programming. The collaborative environment features the concept of 'virtual rooms', in which performers can collaborate from different locations, and the audience can watch the collaboration at the same time. Not only is the code synchronised among all the performers and online audience connected to the server, but the code executing command is also broadcast. This communication strategy, achieved by the integration of the language design and the environment design, provides a new form of interaction for web-based live coding performances.

[...] live coding languages is mainly borrowed from other programming languages. For example, the use of parentheses is ubiquitous in programming languages, and it is adopted in almost every live coding language. That is the case even though live coding without the parentheses is (more) readable for humans [12].

Inheriting standard programming syntax may create difficulties for non-programmers who want to get started with live coding. We have therefore been exploring how it is possible to design a live coding syntax based on the design principles of digital musical interface design. This includes elements such as audio effect chains, sequencers, patches, and so on. The aim has been to only create a rule by 1) borrowing from digital musicians' familiarity with the digital interfaces mentioned above, or 2) reusing the syntax from [...]

International Computer Music Conference (ICMC/SMC)

Conference Program. 40th International Computer Music Conference joint with the 11th Sound and Music Computing conference. Music Technology Meets Philosophy: From digital echos to virtual ethos. ICMC|SMC|2014, 14-20 September 2014, Athens, Greece.

Editor: Kostas Moschos. Published by: The National and Kapodistrian University of Athens, Music Department and Department of Informatics & Telecommunications, Panepistimioupolis, Ilissia, GR-15784, Athens, Greece; The Institute for Research on Music & Acoustics, http://www.iema.gr/, Adrianou 105, GR-10558, Athens, Greece. IEMA ISBN: 978-960-7313-25-6. UOA ISBN: 978-960-466-133-6. © September 2014. All copyrights reserved.

Contents: Contents; Sponsors; Preface; Summer School; ...

Organised Sound: Live Coding in Laptop Performance

Organised Sound, http://journals.cambridge.org/OSO. Live coding in laptop performance. NICK COLLINS, ALEX McLEAN, JULIAN ROHRHUBER and ADRIAN WARD. Organised Sound / Volume 8 / Issue 03 / December 2003, pp. 321-330. DOI: 10.1017/S135577180300030X. Published online: 21 April 2004. Link to this article: http://journals.cambridge.org/abstract_S135577180300030X

Nick Collins: St. John's College, Cambridge, CB2 1TP. URLs: http://www.sicklincoln.org, http://www.slab.org, http://swiki.hfbk-hamburg.de:8888/MusicTechnology/6, http://www.slub.org

Seeking new forms of expression in computer music, a small number of laptop composers are braving the challenges of coding music on the fly. Not content to submit meekly to the rigid interfaces of performance software like Ableton Live or Reason, they work with programming languages, building their own custom software, tweaking or writing the programs themselves as they perform.

[...] code and run aesthetic. We do not formally set out in this article the choice between scripting languages like Perl, Ruby or SuperCollider, believing that decision to be a matter for the individual composer/programmer. Yet there is undoubtedly a sense in which the language can influence one's frame of mind, though we do not [...]

SuperCopair: Collaborative Live Coding on SuperCollider through the Cloud

Antonio Deusany de Carvalho Junior (Universidade de São Paulo), Sang Won Lee (University of Michigan), Georg Essl (University of Michigan).

ABSTRACT

In this work we present the SuperCopair package, which is a new way to integrate cloud computing into a collaborative live coding scenario with minimum efforts in the setup. This package, created in Coffee Script for Atom.io, is developed to interact with SuperCollider and provide opportunities for the crowd of online live coders to collaborate remotely on distributed performances. Additionally, the package provides the advantages of cloud services offered by Pusher. Users can share code and evaluate lines or selected portions of code on computers connected to the same session, either at the same place and/or remotely. The package can be used for remote performances or rehearsal purposes with just an Internet connection to share code and sounds. In addition, users can take advantage of code sharing to teach SuperCollider online or fix bugs in the algorithm.

1. Introduction

Playing in a live coding ensemble often invites the utilization of network capability. Exchanging data over the network facilitates collaboration by supporting communication, code sharing, and clock synchronization among musicians. These kinds of functions require live coding musicians to develop additional extensions to their live coding environments. Due to the diversity of the live coding environment and the collaboration strategies settled for performances, implementing such a function has been tailored to meet some ensemble's requirements. In addition, networking among machines often requires additional configuration and setup, for example, connecting to specific machines using an IP address.
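The session model in the SuperCopair abstract above (shared code plus remote evaluation of a selection on every connected machine) can be sketched roughly as follows. This is an in-memory illustration only, under loud assumptions: it does not use Atom, Pusher, or SuperCollider, and the `Session` and `Peer` classes, the `"edit"` and `"evaluate"` message kinds, and the recorded `evaluated` list are all hypothetical stand-ins for whatever the real package does.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Peer:
    """One connected editor. Evaluation is only recorded, not executed."""
    name: str
    buffer: str = ""
    evaluated: List[str] = field(default_factory=list)

    def on_message(self, kind: str, payload: str) -> None:
        if kind == "edit":
            # Replace the local copy of the shared document.
            self.buffer = payload
        elif kind == "evaluate":
            # A real client would hand this text to its local interpreter.
            self.evaluated.append(payload)


@dataclass
class Session:
    """Hypothetical stand-in for a cloud channel that fans messages out."""
    peers: List[Peer] = field(default_factory=list)

    def join(self, peer: Peer) -> None:
        self.peers.append(peer)

    def broadcast(self, kind: str, payload: str) -> None:
        # Every peer, including the sender, receives the same message.
        for peer in self.peers:
            peer.on_message(kind, payload)


session = Session()
alice, bob = Peer("alice"), Peer("bob")
session.join(alice)
session.join(bob)

line = "{ SinOsc.ar(440, 0, 0.1) }.play;"
session.broadcast("edit", line + "\n")   # share the code
session.broadcast("evaluate", line)      # ask every peer to run the line
print(bob.buffer == alice.buffer, bob.evaluated)
```

Sending the evaluation request as its own message, separate from the text edit, mirrors the abstract's distinction between sharing code and evaluating it: each peer produces its own sound from the same instruction rather than receiving audio.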