Classification of Root Systems

Recommended publications

Matroid Automorphisms of the F4 Root System

Matroid automorphisms of the F4 root system∗. Stephanie Fried (Dept. of Mathematics, Grinnell College, Grinnell, IA 50112), Aydin Gerek (Dept. of Mathematics, Lehigh University, Bethlehem, PA 18015), Gary Gordon (Dept. of Mathematics, Lafayette College, Easton, PA 18042), Andrija Peruničić (Dept. of Mathematics, Bard College, Annandale-on-Hudson, NY 12504). Submitted: Feb 11, 2007; Accepted: Oct 20, 2007; Published: Nov 12, 2007. The Electronic Journal of Combinatorics 14 (2007), #R78. Mathematics Subject Classification: 05B35. Dedicated to Thomas Brylawski. ∗Research supported by NSF grant DMS-055282.

Abstract. Let F4 be the root system associated with the 24-cell, and let M(F4) be the simple linear dependence matroid corresponding to this root system. We determine the automorphism group of this matroid and compare it to the Coxeter group W for the root system. We find non-geometric automorphisms that preserve the matroid but not the root system.

1 Introduction. Given a finite set of vectors in Euclidean space, we consider the linear dependence matroid of the set, where dependences are taken over the reals. When the original set of vectors has 'nice' symmetry, it makes sense to compare the geometric symmetry (the Coxeter or Weyl group) with the group that preserves sets of linearly independent vectors. This latter group is precisely the automorphism group of the linear matroid determined by the given vectors. For the root systems An and Bn, matroid automorphisms do not give any additional symmetry [4]. One can interpret these results in the following way: combinatorial symmetry (preserving dependence) and geometric symmetry (via isometries of the ambient Euclidean space) are essentially the same.
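
To make the object in this abstract concrete, here is a minimal sketch that builds the 48 roots of F4 and checks that they are closed under their own reflections. It assumes the standard coordinate model of F4 in R^4 (long roots ±e_i ± e_j, short roots ±e_i and (±1/2, ±1/2, ±1/2, ±1/2)), which the excerpt itself does not spell out. All coordinates are doubled so the arithmetic stays in integers, and the function names are illustrative only.

```python
from itertools import combinations, product

def f4_roots_doubled():
    """Roots of F4 in the standard model, scaled by 2 (assumed coordinates)."""
    roots = set()
    # Long roots +-e_i +- e_j with i < j: 24 vectors, vertices of a 24-cell.
    for i, j in combinations(range(4), 2):
        for si, sj in product((2, -2), repeat=2):
            v = [0, 0, 0, 0]
            v[i], v[j] = si, sj
            roots.add(tuple(v))
    # Short roots +-e_i: 8 vectors.
    for i in range(4):
        for s in (2, -2):
            v = [0, 0, 0, 0]
            v[i] = s
            roots.add(tuple(v))
    # Short roots (+-1/2, +-1/2, +-1/2, +-1/2): 16 vectors.
    roots.update(product((1, -1), repeat=4))
    return roots

def inner(u, v):
    return sum(x * y for x, y in zip(u, v))

def is_crystallographic_and_closed(roots):
    """Check 2(v,a)/(a,a) is an integer and s_a(v) is again a root."""
    for a in roots:
        for v in roots:
            num, den = 2 * inner(v, a), inner(a, a)
            if num % den != 0:
                return False
            c = num // den
            if tuple(x - c * y for x, y in zip(v, a)) not in roots:
                return False
    return True

roots = f4_roots_doubled()
long_roots = [r for r in roots if inner(r, r) == 8]  # squared length 2 before doubling
```

Calling `is_crystallographic_and_closed(roots)` checks only the geometric (reflection) symmetry; the matroid automorphisms studied in the paper are a separate, combinatorial notion that this sketch does not compute.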

Platonic Solids Generate Their Four-Dimensional Analogues

Platonic solids generate their four-dimensional analogues. PIERRE-PHILIPPE DECHANT a,b,c* aInstitute for Particle Physics Phenomenology, Ogden Centre for Fundamental Physics, Department of Physics, University of Durham, South Road, Durham, DH1 3LE, United Kingdom, bPhysics Department, Arizona State University, Tempe, AZ 85287-1604, United States, and cMathematics Department, University of York, Heslington, York, YO10 5GG, United Kingdom. E-mail: [email protected] arXiv:1307.6768v1 [math-ph] 25 Jul 2013. PREPRINT: Acta Crystallographica Section A, A Journal of the International Union of Crystallography.

Polytopes; Platonic Solids; 4-dimensional geometry; Clifford algebras; Spinors; Coxeter groups; Root systems; Quaternions; Representations; Symmetries; Trinities; McKay correspondence

Abstract. In this paper, we show how regular convex 4-polytopes – the analogues of the Platonic solids in four dimensions – can be constructed from three-dimensional considerations concerning the Platonic solids alone. Via the Cartan-Dieudonné theorem, the reflective symmetries of the Platonic solids generate rotations. In a Clifford algebra framework, the space of spinors generating such three-dimensional rotations has a natural four-dimensional Euclidean structure. The spinors arising from the Platonic Solids can thus in turn be interpreted as vertices in four-dimensional space, giving a simple construction of the 4D polytopes 16-cell, 24-cell, the F4 root system and the 600-cell. In particular, these polytopes have 'mysterious' symmetries, that are almost trivial when seen from the three-dimensional spinorial point of view. In fact, all these induced polytopes are also known to be root systems and thus generate rank-4 Coxeter groups, which can be shown to be a general property of the spinor construction.

Classifying the Representation Type of Infinitesimal Blocks of Category

Classifying the Representation Type of Infinitesimal Blocks of Category $\mathcal{O}_S$ by Kenyon J. Platt (Under the Direction of Brian D. Boe)

Abstract. Let g be a simple Lie algebra over the field C of complex numbers, with root system Φ relative to a fixed maximal toral subalgebra h. Let S be a subset of the simple roots ∆ of Φ, which determines a standard parabolic subalgebra of g. Fix an integral weight $\mu \in \mathfrak{h}^*$, with singular set J ⊆ ∆. We determine when an infinitesimal block $\mathcal{O}(\mathfrak{g}, S, J) := \mathcal{O}^{\mu}_S$ of parabolic category $\mathcal{O}_S$ is nonzero using the theory of nilpotent orbits. We extend work of Futorny-Nakano-Pollack, Brüstle-König-Mazorchuk, and Boe-Nakano toward classifying the representation type of the nonzero infinitesimal blocks of category $\mathcal{O}_S$ by considering arbitrary sets S and J, and observe a strong connection between the theory of nilpotent orbits and the representation type of the infinitesimal blocks. We classify certain infinitesimal blocks of category $\mathcal{O}_S$ including all the semisimple infinitesimal blocks in type An, and all of the infinitesimal blocks for F4 and G2.

Index words: Category O; Representation type; Verma modules

Classifying the Representation Type of Infinitesimal Blocks of Category $\mathcal{O}_S$ by Kenyon J. Platt. B.A., Utah State University, 1999; M.S., Utah State University, 2001; M.A., The University of Georgia, 2006. A Thesis Submitted to the Graduate Faculty of The University of Georgia in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy. Athens, Georgia, 2008. © 2008 Kenyon J. Platt. All Rights Reserved.

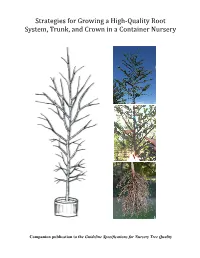

Strategies for Growing a High‐Quality Root System, Trunk, and Crown in a Container Nursery

Strategies for Growing a High‐Quality Root System, Trunk, and Crown in a Container Nursery. Companion publication to the Guideline Specifications for Nursery Tree Quality.

Table of Contents
Introduction
Section 1: Roots: Defects; Liner Development; Root Ball Management in Larger Containers; Root Distribution within Root Ball; Depth of Root Collar
Section 2: Trunk: Temporary Branches; Straight Trunk
Section 3: Crown: Central Leader; Heading and Re‐training

Introduction to Representation Theory of Lie Algebras

Introduction to Representation Theory of Lie Algebras. Tom Gannon, May 2020.

These are the notes for the summer 2020 mini course on the representation theory of Lie algebras. We'll first define Lie groups, and then discuss why the study of representations of simply connected Lie groups reduces to studying representations of their Lie algebras (obtained as the tangent spaces of the groups at the identity). We'll then discuss a very important class of Lie algebras, called semisimple Lie algebras, and we'll examine the representation theory of two of the most basic Lie algebras: sl2 and sl3. Using these examples, we will develop the vocabulary needed to classify representations of all semisimple Lie algebras! Please email me any typos you find in these notes! Thanks to Arun Debray, Joakim Færgeman, and Aaron Mazel-Gee for doing this. Also, thanks to Saad Slaoui and Max Riestenberg for agreeing to be teaching assistants for this course and for many, many helpful edits.

Contents
1 From Lie Groups to Lie Algebras: 1.1 Lie Groups and Their Representations; 1.2 Exercises; 1.3 Bonus Exercises
2 Examples and Semisimple Lie Algebras: 2.1 The Bracket Structure on Lie Algebras; 2.2 Ideals and Simplicity of Lie Algebras; 2.3 Exercises; 2.4 Bonus Exercises
3 Representation Theory of sl2: 3.1 Diagonalizability; 3.2 Classification of the Irreducible Representations of sl2; 3.3 Bonus Exercises
4 Representation Theory of sl3: 4.1 The Generalization of Eigenvalues

Platonic Solids Generate Their Four-Dimensional Analogues

This is a repository copy of Platonic solids generate their four-dimensional analogues. White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/85590/ Version: Accepted Version. Article: Dechant, Pierre-Philippe orcid.org/0000-0002-4694-4010 (2013) Platonic solids generate their four-dimensional analogues. Acta Crystallographica Section A: Foundations of Crystallography. pp. 592-602. ISSN 1600-5724 https://doi.org/10.1107/S0108767313021442

Reuse: Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item.

Takedown: If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request.

Platonic solids generate their four-dimensional analogues. PIERRE-PHILIPPE DECHANT a,b,c* aInstitute for Particle Physics Phenomenology, Ogden Centre for Fundamental Physics, Department of Physics, University of Durham, South Road, Durham, DH1 3LE, United Kingdom, bPhysics Department, Arizona State University, Tempe, AZ 85287-1604, United States, and cMathematics Department, University of York, Heslington, York, YO10 5GG, United Kingdom. E-mail: [email protected]

Polytopes; Platonic Solids; 4-dimensional geometry; Clifford algebras; Spinors; Coxeter groups; Root systems; Quaternions; Representations; Symmetries; Trinities; McKay correspondence

Abstract. In this paper, we show how regular convex 4-polytopes – the analogues of the Platonic solids in four dimensions – can be constructed from three-dimensional considerations concerning the Platonic solids alone.

Contents 1 Root Systems

Stefan Dawydiak, February 19, 2021. Marginalia about roots.

These notes are an attempt to maintain an overview collection of facts about and relationships between some situations in which root systems and root data appear. They also serve to track some common identifications and choices. The references include some helpful lecture notes with more examples. The author of these notes learned this material from courses taught by Zinovy Reichstein, Joel Kamnitzer, James Arthur, and Florian Herzig, as well as many student talks, and lecture notes by Ivan Loseu. These notes are simply collected marginalia for those references. Any errors introduced, especially of viewpoint, are the author's own. The author of these notes would be grateful for their communication to [email protected].

Contents
1 Root systems: 1.1 Root space decomposition; 1.2 Roots, coroots, and reflections (1.2.1 Abstract root systems; 1.2.2 Coroots, fundamental weights and Cartan matrices; 1.2.3 Roots vs weights; 1.2.4 Roots at the group level); 1.3 The Weyl group (1.3.1 Weyl Chambers; 1.3.2 The Weyl group as a subquotient for compact Lie groups; 1.3.3 The Weyl group as a subquotient for noncompact Lie groups)
2 Root data: 2.1 Root data; 2.2 The Langlands dual group; 2.3 The flag variety (2.3.1 Bruhat decomposition revisited; 2.3.2 Schubert cells)
3 Adelic groups: 3.1 Weyl sets
References

1 Root systems

The following examples are taken mostly from [8] where they are stated without most of the calculations.

Math 210C. Size of Fundamental Group 1. Introduction Let (V,Φ) Be a Nonzero Root System. Let G Be a Connected Compact Lie Group

Math 210C. Size of fundamental group

1. Introduction

Let $(V, \Phi)$ be a nonzero root system. Let $G$ be a connected compact Lie group that is semisimple (equivalently, $Z_G$ is finite, or $G = G'$; see Exercise 4(ii) in HW9) and for which the associated root system is isomorphic to $(V, \Phi)$ (so $G \neq 1$). More specifically, we pick a maximal torus $T$ and suppose an isomorphism $(V, \Phi) \simeq (X(T)_{\mathbf{Q}}, \Phi(G, T))$ is chosen. Where can $X(T)$ be located inside $V$? It certainly has to contain the root lattice $Q := \mathbf{Z}\Phi$ determined by the root system $(V, \Phi)$. Exercise 1(iii) in HW7 computes the index of this inclusion of lattices: $X(T)/Q$ is dual to the finite abelian group $Z_G$. The root lattice is a "lower bound" on where the lattice $X(T)$ can sit inside $V$. This handout concerns the deeper "upper bound" on $X(T)$ provided by the $\mathbf{Z}$-dual $P := (\mathbf{Z}\Phi^\vee)' \subset V^{**} = V$ of the coroot lattice $\mathbf{Z}\Phi^\vee$ (inside the dual root system $(V^*, \Phi^\vee)$). This lattice $P$ is called the weight lattice (the French word for "weight" is "poids") because of its relation with the representation theory of any possibility for $G$ (as will be seen in our discussion of the Weyl character formula). To explain the significance of $P$, recall from HW9 that semisimplicity of $G$ implies $\pi_1(G)$ is a finite abelian group, and more specifically if $f : \widetilde{G} \to G$ is the finite-degree universal cover and $\widetilde{T} := f^{-1}(T) \subset \widetilde{G}$ (a maximal torus of $\widetilde{G}$) then $\pi_1(G) = \ker f = \ker(f : \widetilde{T} \to T) = \ker(X_*(\widetilde{T}) \to X_*(T))$ is dual to the inclusion $X(T) \hookrightarrow X(\widetilde{T})$ inside $V$.
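
The squeeze $Q \subseteq X(T) \subseteq P$ described in this introduction can be seen in the smallest case. The worked example below is not part of the handout; it assumes the rank-1 root system $\Phi = \{\pm\alpha\}$ and the standard identification of $X(\widetilde{T})$ with $P$ for the simply connected cover, and it uses the two familiar groups $\mathrm{SU}(2)$ and $\mathrm{SO}(3)$ as illustrations.

```latex
\begin{align*}
\Phi &= \{\pm\alpha\}, \qquad \langle \alpha, \alpha^\vee \rangle = 2,\\
Q &= \mathbf{Z}\alpha, \qquad
P = \{\, v \in V : \langle v, \alpha^\vee \rangle \in \mathbf{Z} \,\} = \mathbf{Z}\,\tfrac{\alpha}{2}, \qquad
P/Q \cong \mathbf{Z}/2\mathbf{Z},\\
G = \mathrm{SU}(2):\quad X(T) &= P, \qquad X(T)/Q \cong \mathbf{Z}/2\mathbf{Z}, \qquad P/X(T) = 0,\\
G = \mathrm{SO}(3):\quad X(T) &= Q, \qquad X(T)/Q = 0, \qquad P/X(T) \cong \mathbf{Z}/2\mathbf{Z}.
\end{align*}
```

Read against the text: $X(T)/Q$ is dual to the center (order 2 for $\mathrm{SU}(2)$, trivial for $\mathrm{SO}(3)$), while $P/X(T)$ is dual to the fundamental group (trivial for $\mathrm{SU}(2)$, order 2 for $\mathrm{SO}(3)$).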

Linear Algebraic Groups

Clay Mathematics Proceedings, Volume 4, 2005. Linear Algebraic Groups. Fiona Murnaghan.

Abstract. We give a summary, without proofs, of basic properties of linear algebraic groups, with particular emphasis on reductive algebraic groups.

1. Algebraic groups

Let K be an algebraically closed field. An algebraic K-group G is an algebraic variety over K, and a group, such that the maps µ : G × G → G, µ(x, y) = xy, and ι : G → G, ι(x) = x^{-1}, are morphisms of algebraic varieties. For convenience, in these notes, we will fix K and refer to an algebraic K-group as an algebraic group. If the variety G is affine, that is, G is an algebraic set (a Zariski-closed set) in K^n for some natural number n, we say that G is a linear algebraic group. If G and G′ are algebraic groups, a map ϕ : G → G′ is a homomorphism of algebraic groups if ϕ is a morphism of varieties and a group homomorphism. Similarly, ϕ is an isomorphism of algebraic groups if ϕ is an isomorphism of varieties and a group isomorphism. A closed subgroup of an algebraic group is an algebraic group. If H is a closed subgroup of a linear algebraic group G, then G/H can be made into a quasi-projective variety (a variety which is a locally closed subset of some projective space). If H is normal in G, then G/H (with the usual group structure) is a linear algebraic group. Let ϕ : G → G′ be a homomorphism of algebraic groups. Then the kernel of ϕ is a closed subgroup of G and the image of ϕ is a closed subgroup of G′.

Root System - Wikipedia

Root system - Wikipedia, https://en.wikipedia.org/wiki/Root_system (retrieved 2/22/2018, 8:26 PM)

Root system

In mathematics, a root system is a configuration of vectors in a Euclidean space satisfying certain geometrical properties. The concept is fundamental in the theory of Lie groups and Lie algebras, especially the classification and representation theory of semisimple Lie algebras. Since Lie groups (and some analogues such as algebraic groups) and Lie algebras have become important in many parts of mathematics during the twentieth century, the apparently special nature of root systems belies the number of areas in which they are applied. Further, the classification scheme for root systems, by Dynkin diagrams, occurs in parts of mathematics with no overt connection to Lie theory (such as singularity theory). Finally, root systems are important for their own sake, as in spectral graph theory.[1]

Contents: Definitions and examples; Definition; Weyl group; Rank two examples; Root systems arising from semisimple Lie algebras; History; Elementary consequences of the root system axioms; Positive roots and simple roots; Dual root system and coroots; Classification of root systems by Dynkin diagrams; Constructing the Dynkin diagram; Classifying root systems; Weyl chambers and the Weyl group; Root systems and Lie theory; Properties of the irreducible root systems; Explicit construction of the irreducible root systems; An; Bn; Cn; Dn; E6, E7, E8; F4; G2; The root poset; See also; Notes; References; Further reading; External links

Definitions and examples

As a first example, consider the six vectors in 2-dimensional Euclidean space, $\mathbb{R}^2$, as shown in the image at the right; call them roots.
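
The six-vector example can be checked numerically. The sketch below assumes the six vectors are unit vectors at multiples of 60 degrees (the usual picture of the A2 root system; the image itself is not reproduced here) and tests three of the standard root system axioms: integrality of 2(a,b)/(a,a), closure under reflections, and that the only multiples of a root in the set are ± itself. The function names are illustrative, and the spanning condition is not checked.

```python
import math

def a2_roots():
    """Six unit vectors in R^2 at multiples of 60 degrees (assumed picture)."""
    return [(math.cos(k * math.pi / 3), math.sin(k * math.pi / 3)) for k in range(6)]

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def reflect(v, a):
    """Reflect v in the line perpendicular to a."""
    c = 2 * dot(v, a) / dot(a, a)
    return (v[0] - c * a[0], v[1] - c * a[1])

def close(u, v, tol=1e-9):
    return abs(u[0] - v[0]) < tol and abs(u[1] - v[1]) < tol

def satisfies_root_axioms(roots, tol=1e-9):
    for a in roots:
        for b in roots:
            # Integrality: 2(a,b)/(a,a) must be an integer.
            n = 2 * dot(a, b) / dot(a, a)
            if abs(n - round(n)) > tol:
                return False
            # Closure: the reflection of b in a must again be a root.
            if not any(close(reflect(b, a), c) for c in roots):
                return False
            # Reducedness: a root parallel to a must be a or -a.
            cross = a[0] * b[1] - a[1] * b[0]
            if abs(cross) < tol and not (close(a, b) or close(a, (-b[0], -b[1]))):
                return False
    return True
```

Dropping any one of the six vectors breaks closure under reflections, so `satisfies_root_axioms(a2_roots()[:5])` is false while the full set passes.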

Now We Will Work out the Roots of Orthogonal Groups. We First Find A

Now we will work out the roots of orthogonal groups. We first find a Cartan subgroup of $O_n(\mathbb{R})$. For example, we can take the group generated by rotations in $n/2$ or $(n-1)/2$ orthogonal planes. The problem is that this is rather a mess as it is not diagonal ("split"). So let's start again, this time using the quadratic form $x_1x_2 + x_3x_4 + \cdots + x_{2m-1}x_{2m}$. This time a Cartan subgroup is easier to describe: one consists of the diagonal matrices with entries $a_1, 1/a_1, a_2, 1/a_2, \ldots$. The corresponding Cartan subalgebra consists of diagonal matrices with entries $\alpha_1, -\alpha_1, \alpha_2, -\alpha_2, \ldots$. Now let's find the roots, in other words the eigenvalues of the adjoint representation. The Lie algebra consists of matrices $A$ with $AJ = -JA^t$, or in other words $AJ$ is skew symmetric, where $J$ is the matrix of the quadratic form, which in this case has blocks of $\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$ down the diagonal. For $m = 2$ the elements of the Lie algebra are

$$\begin{pmatrix} \alpha_1 & 0 & a & b \\ 0 & -\alpha_1 & c & d \\ -d & -b & \alpha_2 & 0 \\ -c & -a & 0 & -\alpha_2 \end{pmatrix}$$

so the roots corresponding to the entries $a, b, c, d$ are $\alpha_1 - \alpha_2$, $\alpha_1 + \alpha_2$, $-\alpha_1 - \alpha_2$, $-\alpha_1 + \alpha_2$, so are just $\pm\alpha_1 \pm \alpha_2$. (Notice that in this case the roots split into two orthogonal pairs, corresponding to the fact that $\mathfrak{so}_4(\mathbb{C})$ is a product of two copies of $\mathfrak{sl}_2(\mathbb{C})$.) Similarly for arbitrary $m$ the roots are $\pm\alpha_i \pm \alpha_j$. This root system is called $D_m$. Now we find the Weyl group.
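
The description of the roots as ±α_i ± α_j (i < j) is easy to enumerate. The sketch below is an illustration rather than part of the notes: it assumes each root is written as an integer coordinate vector with respect to α_1, ..., α_m, treated as an orthonormal basis, and the name `d_roots` is hypothetical.

```python
from itertools import combinations, product

def d_roots(m):
    """All vectors +-e_i +- e_j with i < j, i.e. the roots +-alpha_i +- alpha_j."""
    roots = []
    for i, j in combinations(range(m), 2):
        for si, sj in product((1, -1), repeat=2):
            v = [0] * m
            v[i], v[j] = si, sj
            roots.append(tuple(v))
    return roots

def inner(u, v):
    return sum(x * y for x, y in zip(u, v))

# For m = 2 the four roots fall into two mutually orthogonal pairs,
# {+-(1, 1)} and {+-(1, -1)}, matching the two copies of sl2 in so4.
pair_plus = [r for r in d_roots(2) if r[0] == r[1]]
pair_minus = [r for r in d_roots(2) if r[0] != r[1]]
```

Each pair (i, j) contributes four sign choices, so the count is 4 · m(m − 1)/2 = 2m(m − 1) roots in general.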

Crystallographic Root Systems

CRYSTALLOGRAPHIC ROOT SYSTEMS. MATH61202 MSc Single Project, Spring Semester 2014. Aram Dermenjian, Student ID: 9071063. Supervisor: Sasha Premet. School of Mathematics.

Contents
Abstract
1 Introduction - Reflection Groups: 1.1 Euclidean Space [6],[7]; 1.2 Reflection Groups [8],[9] (1.2.1 I2(m); 1.2.2 An; 1.2.3 Bn; 1.2.4 Dn)
2 Lie Algebras: 2.1 History [2],[11]; 2.2 Definitions [5],[8]; 2.3 Solvability and Nilpotency [8],[13]; 2.4 Representations [5],[8]; 2.5 Killing Form [5],[8]; 2.6 Cartan Decompositions [8]; 2.7 Weight Spaces [8]; 2.8 A new Euclidean space [8]
3 Root Systems: 3.1 Definitions [8],[9]; 3.2 Positive and Simple Roots [9],[15]; 3.3 Coxeter Groups [3],[9]; 3.4 Fundamental Domains [9] (3.4.1 Parabolic Subgroups; 3.4.2 Fundamental Domains); 3.5 Irreducible Root Systems [8]
4 Classification of Root Systems: 4.1 Coxeter Graphs [9]; 4.2 Crystallographic Root Systems [5],[8]; 4.3 Dynkin Diagrams [8]; 4.4 Classification (4.4.1 Classification of Dynkin Diagrams [4]; 4.4.2 Classification of Coxeter Graphs [9])
5 Simple Lie Algebras: 5.1 Connections with Crystallographic Root Systems [3],[9]; 5.2 Simple Lie Algebras Constructed [3],[8],[9] (5.2.1 An; 5.2.2 Bn; 5.2.3 Cn; ...)