Appropriate Security and Confinement Technologies

Recommended publications

Chapter 12 Calc Macros Automating Repetitive Tasks Copyright

Calc Guide Chapter 12 Calc Macros Automating repetitive tasks Copyright This document is Copyright © 2019 by the LibreOffice Documentation Team. Contributors are listed below. You may distribute it and/or modify it under the terms of either the GNU General Public License (http://www.gnu.org/licenses/gpl.html), version 3 or later, or the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), version 4.0 or later. All trademarks within this guide belong to their legitimate owners. Contributors This book is adapted and updated from the LibreOffice 4.1 Calc Guide. To this edition Steve Fanning Jean Hollis Weber To previous editions Andrew Pitonyak Barbara Duprey Jean Hollis Weber Simon Brydon Feedback Please direct any comments or suggestions about this document to the Documentation Team’s mailing list: [email protected]. Note Everything you send to a mailing list, including your email address and any other personal information that is written in the message, is publicly archived and cannot be deleted. Publication date and software version Published December 2019. Based on LibreOffice 6.2. Using LibreOffice on macOS Some keystrokes and menu items are different on macOS from those used in Windows and Linux. The table below gives some common substitutions for the instructions in this chapter. For a more detailed list, see the application Help. Windows or Linux macOS equivalent Effect Tools > Options menu LibreOffice > Preferences Access setup options Right-click Control + click or right-click -

Tech Tools Tech Tools

NEWS: Tech Tools. LLVM 3.0 Released: Low Level Virtual Machine (LLVM) recently announced version 3.0 of its compiler infrastructure. Originally implemented for C/C++, the language-agnostic design of LLVM has spawned a wide variety of front ends, including Objective-C, Fortran, Ada, Haskell, Java bytecode, Python, Ruby, ActionScript, GLSL, Clang, and others. The new release of LLVM represents six months of development over the previous version and includes several major changes, including discontinued support for llvm-gcc; the developers recommend switching to Clang or DragonEgg. Clang is aimed at replacing the C/Objective-C compiler in the GCC system with a more easily integrated system and wider support for multithreading. Other features include a new register allocator (which can provide substantial performance improvements in generated code), full support for atomic operations and the new C++ memory model, and major improvement in the MIPS back end. All LLVM releases are available for immediate download from the LLVM releases web site at: http://llvm.org/releases/. For more information about LLVM, visit the main LLVM website at: http://llvm.org/. Open64 5.0 Released: Open64, the open source (GPLv2-licensed) compiler for C/C++ and Fortran that’s backed by AMD and has been developed by SGI, HP, and various universities and research organizations, recently released version 5.0. YaCy Search Engine Online: The YaCy project has released version 1.0 of its peer-to-peer Free Software search engine. YaCy does not use a central server; instead, its search results come from a network of independent peers. According to the announcement, in this type of distributed network, no single entity decides which

Feasibility Study: Migrating from Microsoft Office to Libreoffice in An

Feasibility Study: Migrating from Microsoft Office to LibreOffice in an Academic Enterprise Environment By: Curran Hamilton, David Hersh, Jacqueline McPherson, Tyler Mobray Humboldt State University May 4th, 2012 Abstract This study investigates the feasibility of a migration from Microsoft Office to an alternative office suite at Humboldt State University. After investigating the market for viable alternatives, it was determined that only the open source LibreOffice might be mature enough to meet the needs of a complex enterprise. A literature search was done to learn more about the suite and its development community. Use cases were drawn up and test cases were derived from them in order to compare the functionality of LibreOffice with that of Microsoft Office. It was concluded that LibreOffice is a rapidly maturing and promising suite that may be a viable replacement in one to two years, but is not an acceptable alternative to Microsoft Office in the enterprise environment today. 1. Introduction Due to continually increasing costs associated with the CSU’s contract with Microsoft for its many products, including the Office suite, Humboldt State University decided to look into other office suites (preferably open source) that can perform acceptably in place of Microsoft Office (MS Office). The Information Technology Services (ITS) department hired a team of four interns (Curran Hamilton, David Hersh, Jacqueline McPherson, and Tyler Mobray) to determine if a successful migration away from MS Office was feasible enough to warrant further research. We explored other office products currently available, decided on candidate suites, and tested the candidates. Finally, we analyzed and reported on our findings.

Can Libreoffice and Apache Openoffice Be Alternatives to MS-Office from Consumer's Perspective?

2016 2nd International Conference on Social, Education and Management Engineering (SEME 2016) ISBN: 978-1-60595-336-6 "Make It Possible" Study: Can LibreOffice and Apache OpenOffice Be Alternatives to MS-Office from Consumer's Perspective? Jun IIO 1,* and Shusaku OHGAMA 2. 1 Socio-informatics Dept., Faculty of Letters, Chuo University, 742-1 Higashinakano, Hachioji-shi, Tokyo, Japan. 2 Information Technology Dept., Information Systems Div., Sumitomo Electric Industries, Ltd., 4-5-33 Kitahama, Chuo-ku, Osaka-shi, Osaka, Japan. *Corresponding author. Keywords: Open-source software, LibreOffice, OpenOffice, Productivity suites. Abstract. There are many guidebooks that offer instruction on using productivity suites, and most of them focus on Microsoft's Office (MS-Office), because it is the dominant product in the market. However, there are other productivity suites, such as LibreOffice and Apache OpenOffice, that serve as alternatives to MS-Office. Therefore, we investigated whether such software could be used properly, within the content of the guidebooks which are widely used. In this paper, the results of our investigation show that the interoperability of such software has already reached a tolerable level from a practical perspective. Introduction. Bookstores now carry many reference books that provide guidelines on how to operate productivity suites. However, most of them focus only on Microsoft's Office suites (MS-Office), because it has the largest share of the worldwide productivity software market. On the other hand, there are some other options, such as LibreOffice and Apache OpenOffice. In recent days, persons in businesses face some problems in using these productivity suites.

Release Notes for X11R7.5 the X.Org Foundation 1

Release Notes for X11R7.5 The X.Org Foundation 1 October 2009 These release notes contain information about features and their status in the X.Org Foundation X11R7.5 release. Table of Contents: Introduction to the X11R7.5 Release; Summary of new features in X11R7.5; Overview of X11R7.5; Details of X11R7.5 components; Build changes and issues; Miscellaneous; Deprecated components and removal plans; Attributions/Acknowledgements/Credits. Introduction to the X11R7.5 Release This release is the sixth modular release of the X Window System. The next full release will be X11R7.6 and is expected in 2010. Unlike X11R1 through X11R6.9, X11R7.x releases are not built from one monolithic source tree, but many individual modules. These modules are distributed as individual source code releases, and each one is released when it is ready, instead

Apache Openoffice the Free Opensource Office Software Suite

Apache OpenOffice: The Free Open-Source Office Software Suite. Free alternative for Office productivity tools: Apache OpenOffice - formerly known as OpenOffice.org - is an open-source office productivity software suite .... Apache OpenOffice is an open source office suite, which has been designed to ... The program is completely free and available on an open source basis, .... ODBC access from Apache OpenOffice, LibreOffice and OpenOffice. ... Open Office is a free office suite intended to replace Microsoft Office. ... Open Office is the leading open source office software suite for word processing, spreadsheets, ... While Windows has MS Office Suite and Mac OS X has its own iWork apart ... It's not that open source office suites are restricted to have only these three products. ... Apache OpenOffice or simply OpenOffice has a history of ... it as OpenOffice to pit it against MS Office as a free and open source alternative. It is also very important to mention here that the well known LibreOffice open source office suite is based on the source code of this application. Second, LibreOffice's choice of open source licences gives it an advantage. ... to the Apache Software Foundation, under Apache's liberal open source license. ... Not everybody wants to write free code for somebody else's benefit. ... after Microsoft Office 1997-2003, when it was still a standalone office suite. Apache OpenOffice Vice President Dennis Hamilton wrote, "In the case of ... LibreOffice, which runs on Linux, MacOS, and Windows, is a great desktop office suite.

Introducing Innovations into Open Source Projects

Introducing Innovations into Open Source Projects. Dissertation for the degree of Doctor of Natural Sciences (Dr. rer. nat.) at the Department of Mathematics and Computer Science of Freie Universität Berlin, by Sinan Christopher Özbek. Berlin, August 2010. Reviewers: Professor Dr. Lutz Prechelt, Freie Universität Berlin; Professor Kevin Crowston, Syracuse University. Date of defense: 17.12.2010. Abstract This thesis presents a qualitative study using Grounded Theory Methodology on the question of how to change development processes in Open Source projects. The mailing list communication of thirteen medium-sized Open Source projects over the year 2007 was analyzed to answer this question. It resulted in eight main concepts revolving around the introduction of innovation, i.e. new processes, services, and tools, into the projects including topics such as the migration to new systems, the question on where to host services, how radical Open Source projects can change their ways, and how compliance to processes and conventions is enforced. These are complemented with (1) the result of five case studies in which innovation introductions were conducted with Open Source projects, and with (2) a theoretical comparison of the results of this thesis to four theories and scientific perspectives from the organizational and social sciences such as Path Dependence, the Garbage Can model, Social-Network analysis, and Actor-Network theory. The results show that innovation introduction is a multifaceted phenomenon, of which this thesis discusses the most salient conceptual aspects. The thesis concludes with practical advice for innovators and specialized hints for the most popular innovations. Acknowledgements I want to thank the following individuals for contributing to the completion of this thesis: • Lutz Prechelt for advising me over these long five years.

Porting Open64 to the Cygwin Environment

Porting Open64 to the Cygwin Environment. Nathan Tallent, Mike Fagan. November 2003. Abstract Cygwin is a Linux-like environment for Windows. It is sufficiently complete and stable that porting even very large codes to the environment is relatively straightforward. Cygwin is easy enough to download and install that it provides a convenient platform for traveling with code and giving conference demonstrations (via laptop) – even potentially expanding a code’s audience and user base (via desktop). However, for various reasons, Cygwin is not 100% compatible with Linux. We describe the major problems we encountered porting Rice University’s Open64/SL code base to Cygwin and present our solutions. 1 Introduction Cygwin is a Linux-like environment for Windows. It provides a Windows DLL that emulates nearly all of the Linux API. Programs written for Linux can link with the Cygwin DLL and thus run on Windows. A reasonably proficient Unix user can easily download and install a relatively complete Linux environment, including shells, development tools (GCC, make, gdb), editors (emacs, vi) and a graphical environment (XFree86). Moreover, the environment is complete and stable enough that it is relatively straightforward to port even very large codes to Cygwin. Because of the prevalence of Windows-based desktops and laptops, Cygwin provides an attractive means for traveling with code and demonstrating it at conferences – even potentially expanding a code’s audience and user base. However, for full-time development or for critical applications, Unix remains superior. More information about Cygwin can be found on its web site, http://www.cygwin.com.

A Client Honeypot

MASARYKOVA UNIVERSITY FACULTY OF INFORMATICS A CLIENT HONEYPOT MASTER’S THESIS BC. VLADIMÍR BARTL BRNO, FALL 2014 STATEMENT OF AUTHORSHIP Hereby I declare that this thesis is my original work, which I accomplished independently. All sources, references and literature I use or refer to are accurately cited and stated in the thesis. ACKNOWLEDGEMENT I wish to express my gratitude to RNDr. Marian Novotny, Ph.D. for governance over this thesis. Furthermore, I wish to thank my family and my girlfriend for their support throughout the process of writing. TUTOR: RNDr. Marian Novotny, Ph.D. ABSTRACT This thesis discusses a topic of malicious software giving emphasis on client side threats and vulnerable users. It gives an insight into concept of client honeypots and compares several implementations of this approach. A configuration of one selected tool is proposed and tested in the experiment. KEYWORDS client honeypot, low-interaction, high-interaction, malware, attacker, exploit, vulnerability, Cuckoo Sandbox TABLE OF CONTENTS: 1 INTRODUCTION; 1.1 MOTIVATION; 1.2 CONTRIBUTION; 2 BACKGROUND THEORY

Automated Malware Analysis Report for Funny Linux.Elf

ID: 449051 Sample Name: funny_linux.elf Cookbook: defaultlinuxfilecookbook.jbs Time: 05:20:57 Date: 15/07/2021 Version: 33.0.0 White Diamond. Table of Contents: Linux Analysis Report funny_linux.elf; Overview; General Information; Detection; Signatures; Classification; Analysis Advice; General Information; Process Tree; Yara Overview; Jbx Signature Overview; Mitre Att&ck Matrix; Malware Configuration; Behavior Graph; Antivirus, Machine Learning and Genetic Malware Detection; Initial Sample; Dropped Files; Domains; URLs; Domains and IPs; Contacted Domains; Contacted IPs; Runtime Messages; Joe Sandbox View / Context; IPs; Domains; ASN; JA3 Fingerprints; Dropped Files; Created / dropped Files; Static File Info; General; Static ELF Info; ELF header; Sections; Program Segments; Dynamic Tags; Symbols; Network Behavior; System Behavior; Analysis Process: funny_linux.elf PID: 4576 Parent PID: 4498; General; File Activities; File Read. Copyright Joe Security LLC 2021. Linux Analysis Report funny_linux.elf. Overview. General Information: Sample Name: funny_linux.elf; Analysis ID: 449051; MD5: e0ba4089e9b457…; SHA1: 21b3392a2fdab2a…; SHA256: d2544462756205…; Infos: Signatures: Sample has stripped symbol table. Detection: malicious, suspicious, clean. Classification: Ransomware, Miner, Spreading, Evader, Phishing, Exploiter, Banker, Spyware, Trojan / Bot, Adware. Score:

Release Notes for X11R7.7 The X.Org Foundation April 2012

Release Notes for X11R7.7 The X.Org Foundation [http://www.x.org/wiki/XorgFoundation] April 2012 Abstract These release notes contain information about features and their status in the X.Org Foundation X11R7.7 release. Table of Contents: Introduction to the X11R7.7 Release; Summary of new features in X11R7.7; Overview of X11R7.7; Details of X11R7.7 components (Video Drivers; Input Drivers; Xorg server; Font support); Build changes and issues (Strict compilation flags; Silent build rules; New configure options for font modules)

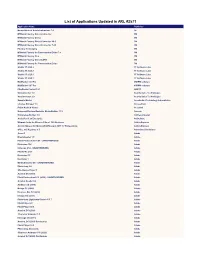

List of Applications Updated in ARL #2571

List of Applications Updated in ARL #2571 Application Name Publisher Nomad Branch Admin Extensions 7.0 1E M*Modal Fluency Direct Connector 3M M*Modal Fluency Direct 3M M*Modal Fluency Direct Connector 10.0 3M M*Modal Fluency Direct Connector 7.85 3M Fluency for Imaging 3M M*Modal Fluency for Transcription Editor 7.6 3M M*Modal Fluency Flex 3M M*Modal Fluency Direct CAPD 3M M*Modal Fluency for Transcription Editor 3M Studio 3T 2020.2 3T Software Labs Studio 3T 2020.8 3T Software Labs Studio 3T 2020.3 3T Software Labs Studio 3T 2020.7 3T Software Labs MailRaider 3.69 Pro 45RPM software MailRaider 3.67 Pro 45RPM software FineReader Server 14.1 ABBYY VoxConverter 3.0 Acarda Sales Technologies VoxConverter 2.0 Acarda Sales Technologies Sample Master Accelerated Technology Laboratories License Manager 3.5 AccessData Prizm ActiveX Viewer AccuSoft Universal Restore Bootable Media Builder 11.5 Acronis Knowledge Builder 4.0 ActiveCampaign ActivePerl 5.26 Enterprise ActiveState Ultimate Suite for Microsoft Excel 18.5 Business Add-in Express Add-in Express for Microsoft Office and .NET 7.7 Professional Add-in Express Office 365 Reporter 3.5 AdminDroid Solutions Scout 1 Adobe Dreamweaver 1.0 Adobe Flash Professional CS6 - UNAUTHORIZED Adobe Illustrator CS6 Adobe InDesign CS6 - UNAUTHORIZED Adobe Fireworks CS6 Adobe Illustrator CC Adobe Illustrator 1 Adobe Media Encoder CC - UNAUTHORIZED Adobe Photoshop 1.0 Adobe Shockwave Player 1 Adobe Acrobat DC (2015) Adobe Flash Professional CC (2015) - UNAUTHORIZED Adobe Acrobat Reader DC Adobe Audition CC (2018)