Title: Computational Complexity and Finance

Authors: Peter Bossaerts

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Upcoming Programs

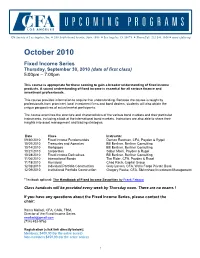

UPCOMING PROGRAMS

CFA Society of Los Angeles, Inc., 350 South Grand Avenue, Suite 1680, Los Angeles, CA 90071. Phone/Fax: 213.341.1164. www.cfala.org

October 2010

Fixed Income Series. Thursday, September 30, 2010 (date of first class), 5:00pm – 7:00pm

This course is appropriate for those seeking to gain a broader understanding of fixed income products. A sound understanding of fixed income is essential for all serious finance and investment professionals. This course provides information to acquire that understanding. Because the course is taught by professionals from prominent local investment firms and bond dealers, students will also obtain the unique perspectives of actual market participants. The course examines the structure and characteristics of the various bond markets and their particular instruments, including a look at the international bond markets. Instructors are also able to share their insights into asset management and trading strategies.

| Date | Class | Instructor |
| --- | --- | --- |
| 09/30/2010 | Fixed Income Fundamentals | Damon Eastman, CFA, Payden & Rygel |
| 10/07/2010 | Treasuries and Agencies | Bill Berliner, Berliner Consulting |
| 10/14/2010 | Mortgages | Bill Berliner, Berliner Consulting |
| 10/21/2010 | Corporates | Sabur Moini, Payden & Rygel |
| 10/28/2010 | Fixed Income Derivatives | Bill Berliner, Berliner Consulting |
| 11/04/2010 | International Bonds | Tim Rider, CFA, Payden & Rygel |
| 11/18/2010 | Municipal | Chad Rach, Capital Group |
| 12/02/2010 | Individual Portfolio Construction | Gary Larsen, CFA, Wells Fargo Private Bank |
| 12/09/2010 | Institutional Portfolio Construction | Gregory Peeke, CFA, Skrimshaw Investment Management |

*Textbook optional: The Handbook of Fixed Income Securities by Frank Fabozzi. Class handouts will be provided every week by Thursday noon.

Chapman's Economic Science Institute Presents Free Lecture Series Chapman University Media Relations

Chapman University Digital Commons, Chapman Press Releases 2003-2011, Chapman Press, 9-1-2008

Chapman's Economic Science Institute Presents Free Lecture Series

Chapman University Media Relations

Follow this and additional works at: http://digitalcommons.chapman.edu/press_releases

Part of the Higher Education Commons, and the Higher Education Administration Commons

Recommended Citation: Chapman University Media Relations, "Chapman's Economic Science Institute Presents Free Lecture Series" (2008). Chapman Press Releases 2003-2011. Paper 169. http://digitalcommons.chapman.edu/press_releases/169

This Article is brought to you for free and open access by the Chapman Press at Chapman University Digital Commons. It has been accepted for inclusion in Chapman Press Releases 2003-2011 by an authorized administrator of Chapman University Digital Commons. For more information, please contact [email protected].

ORANGE, Calif., Sept. 1, 2008 – Chapman University’s new Economic Science Institute will present a series of free lectures by visiting scholars on a wide range of developments in the field of economic science. The institute, under the direction of Stephen Rassenti, Ph.D. and including Nobel laureate Vernon L. Smith, was founded last year at Chapman when the university attracted the five-member group of economic scientists from George Mason University. The series is co-presented by the International Foundation for Research in Experimental Economics (IFREE), founded by Smith in 1997. All lectures will be held at the Institute’s headquarters on the first floor of historic Wilkinson Hall on the Chapman campus. Admission is free of charge and open to the public.

2017 Neuropsychoeconomics Conference Program

2017 NeuroPsychoEconomics Conference Program

Conference Theme: “Neuroeconomic foundations of bounded rationality and heuristic decision making”

CITY CAMPUS of the UNIVERSITY OF ANTWERP (The Grauwzusters Cloister, Lange Sint-Annastraat 7, 2000 Antwerpen, Belgium)

The conference language is English.

Thursday, June 8, 2017

- 1:30-2:00 PM: Registration & coffee. Location: Patio
- 2:00-2:15 PM: Welcome note. Carolyn Declerck, Christophe Boone, University of Antwerp. Location: Promotion Room
- 2:15-2:45 PM: Plenary session I. Wim Van Hecke, Icometrix Imaging Biomarker Experts: Advances in neuroimaging data-analysis. Location: Promotion Room
- 2:50-3:30 PM: Session I

Track: Heuristics and reinforcement learning. Track chair: Carlos Alós-Ferrer. Location: Promotion Room

- 2:50 PM: Carlos Alós-Ferrer, Alexander Ritschel: Reinforcement heuristic in normal form games
- 3:10 PM: Carlos Alós-Ferrer, Michele Garagnani: Who is a reinforcer, who is Bayesian? A comparison of behavioral rules in a new beliefs updating paradigm

Track: Price and payment. Track chair: Carolyn Declerck. Location: Chapel

- 2:50 PM: Robin Goldstein: Do premium and generic prices diverge over time?
- 3:10 PM: Luis-Alberto Casado-Aranda, Francisco José Liébana Cabanillas, Juan Sánchez Fernández: Neural correlates of online payment methods: an fMRI study

Track: Food consumption behavior. Track chair: Charlotte De Backer. Location: Room S004

- 2:50 PM: Eglė Vaičiukynaitė, Darius Černiauskas: Emotional food: the power of colour and taste
- 3:10 PM: Judit Simon, Ildikó Kemény, Ákos Varga, Erica van Herpen, Aikaterini

Equilibrium Asset Pricing and Portfolio Choice Under Asymmetric Information

09-018 Research Group: Finance in Toulouse, March 4, 2009

Equilibrium Asset Pricing and Portfolio Choice under Asymmetric Information

Bruno Biais, Toulouse University (Gremaq-CNRS, IDEI)
Peter Bossaerts, California Institute of Technology and Ecole Polytechnique Fédérale Lausanne
Chester Spatt, Carnegie Mellon University

Revised March 04, 2009

Acknowledgment: We gratefully acknowledge financial support from the Institute for Quantitative Research in Finance, a Moore grant at the California Institute of Technology, the Swiss Finance Institute and the Financial Markets and Investment Banking Value Chain Chair, sponsored by the Fédération Bancaire Française. This is a substantial revision of a previous paper entitled: Equilibrium Asset Pricing Under Heterogeneous Information. Many thanks to the editor, Matt Spiegel, and an anonymous referee for very helpful comments. Many thanks also to participants at seminars at American University, Arizona State University, Babson College, Carnegie Mellon University, Columbia University, CRSP (University of Chicago), ESSEC, Federal Reserve Bank of New York, George Washington University, Harvard Business School, INSEAD, the Interdisciplinary Center (IDC), London Business School, London School of Economics, Oxford University, Securities and Exchange Commission, Toulouse University, UC Davis, the University of Connecticut, the University of Maryland, University of Toronto, University

Annual Report 2012 Swiss Finance Institute @ EPFL Table of Contents

ANNUAL REPORT 2012, SWISS FINANCE INSTITUTE @ EPFL

TABLE OF CONTENTS

PART A: SELF-EVALUATION REPORT

1. Overview
2. Brief history
3. General structure & governance
4. Faculty
   - 4.1 Composition
   - 4.2 Mentoring and promotion of assistant professors
5. Areas of competence
6. Integration into the Swiss Finance Institute network
7. Major collaborations
   - 7.1 University Finance Centre of Lausanne (CULF)
   - 7.2 Collaboration in macroeconomics
   - 7.3 NCCR FINRISK
   - 7.4 Collaboration with Princeton University
   - 7.5 Swissquote
8. Organization and manpower
   - 8.1 Promotion of gender equity
9. Bibliometric analysis
10. Master program in financial engineering
    - 10.1 Study program
    - 10.2 Student admission procedures and statistics
    - 10.3 Program structure
    - 10.4 MFE versus the Master specialization “Statistics and financial mathematics” offered by the Mathematics Section of the EPFL School of Basic Sciences
    - 10.5 Initiatives to recruit more EPFL students for the MFE program
11. Doctoral program in finance
    - 11.1 First phase: foundation courses
    - 11.2 Second phase: PhD research
    - 11.3 Student support
    - 11.4 Student placement record
    - 11.5 Program structure
12. Strategic priorities
13. Outreach
14. Budget
15. Annex
    - Annex 1. Finance research seminars at SFI@EPFL, schedule for 2012 - 2013 (sponsored by Unigestion)
    - Annex 2.

SPRING 2010 Pamela Brooks Gann BOARD of ADVISORS James B

The Exchange: NEWS FROM THE FINANCIAL ECONOMICS INSTITUTE AT CLAREMONT MCKENNA COLLEGE

VOLUME 10, SPRING 2010

CMC PRESIDENT

Pamela Brooks Gann

BOARD OF ADVISORS

- James B. McElwee ’74 P’12 (Chair), Weston Presidio
- Kenneth D. Brody, Taconic Capital Advisors, LLC
- William S. Broeksmit ’77 P’12, Deutsche Morgan Grenfell
- Justin Hance ’06, Harris Associates, LP
- Brent R. Harris ’81, Pacific Investment Management Company
- Alan C. Heuberger ’96, Cascade Investment
- Kurt M. Hocker ’88, Union Bank
- Gregg E. Ireland ’72, Capital Research and Management Company
- Grant Kvalheim ’78 P’12, Barclays Capital (Retired)
- John R. Shrewsberry ’87, Wells Fargo Bank
- Cody J Smith ’79, Goldman Sachs & Company (Retired)
- Robert P. Thomas ’99, The George Kaiser Family Foundation

BOARD ASSOCIATES

Director’s Report, by Eric Hughson

THIS YEAR WAS the seventh year for the FEI. The FEI is the finance research arm of the Robert Day School of Economics and Finance, which provides data and research support for faculty, and more importantly, provides research experience for students. Last year, I reported that we had an article co-authored with a student accepted at the Journal of Financial Economics.

academic careers, and it is our job to help students get where they want to go. We think that in addition to the courses they take, the research that the students do that is associated with the FEI helps prepare them for success in their chosen careers. Some of our academic efforts are, however, more practical. This year, we are building a course around the CMC Student Investment Fund. The objective is twofold. First, we want to provide academic content in valuation and asset allocation to better

Discussion Paper Series

DISCUSSION PAPER SERIES No. 2578

BASIC PRINCIPLES OF ASSET PRICING THEORY: EVIDENCE FROM LARGE-SCALE EXPERIMENTAL FINANCIAL MARKETS

Peter Bossaerts, California Institute of Technology and CEPR
Charles Plott, California Institute of Technology

FINANCIAL ECONOMICS. www.cepr.org. ISSN 0265-8003

Discussion Paper No. 2578, October 2000

Centre for Economic Policy Research, 90–98 Goswell Rd, London EC1V 7RR, UK. Tel: (44 20) 7878 2900, Fax: (44 20) 7878 2999. Email: [email protected], Website: www.cepr.org

This Discussion Paper is issued under the auspices of the Centre’s research programme in Financial Economics. Any opinions expressed here are those of the author(s) and not those of the Centre for Economic Policy Research. Research disseminated by CEPR may include views on policy, but the Centre itself takes no institutional policy positions.

The Centre for Economic Policy Research was established in 1983 as a private educational charity, to promote independent analysis and public discussion of open economies and the relations among them. It is pluralist and non-partisan, bringing economic research to bear on the analysis of medium- and long-run policy questions. Institutional (core) finance for the Centre has been provided through major grants from the Economic and Social Research Council, under which an ESRC Resource Centre operates within CEPR; the Esmée Fairbairn Charitable Trust; and the Bank of England. These organizations do not give prior review to the Centre’s publications, nor do they necessarily endorse the views expressed therein.

These Discussion Papers often represent preliminary or incomplete work, circulated to encourage discussion and comment.

Asset Pricing Under Computational Complexity

Under Review

ASSET PRICING UNDER COMPUTATIONAL COMPLEXITY

Peter Bossaerts, Elizabeth Bowman, Shijie Huang, Carsten Murawski, Shireen Tang and Nitin Yadav

August 28, 2018

We study asset pricing in a setting where correct valuation of securities requires market participants to solve instances of the 0-1 knapsack problem, a computationally "hard" problem. We identify a tension between the need for absence of arbitrage at the market level and individuals' tendency to use methodical approaches to solve the knapsack problem that are effective but easily violate the sure-thing principle. We formulate hypotheses that resolve this tension. We demonstrate experimentally that: (i) Market prices only reveal inferior solutions; (ii) Valuations of the average trader are better than those reflected in market prices; (iii) Those with better valuations earn more through trading; (iv) Price quality is unaffected by the size of the search space, but decreases with instance complexity as measured in the theory of computation; (v) Participants learn from market prices, in interaction with trading volume.

Keywords: Efficient Markets Hypothesis, Computational Complexity, Financial Markets, Noisy Rational Expectations Equilibrium, Absence of Arbitrage.

All authors are of the Brain, Mind & Markets Laboratory, Department of Finance, Faculty of Business and Economics, The University of Melbourne, Victoria 3010, Australia.

Peter Bossaerts Utah Laboratory for Experimental Economics and Finance, 1655 East Campus Center Drive, Salt Lake City, Utah 84112

Peter Bossaerts

Utah Laboratory for Experimental Economics and Finance, 1655 East Campus Center Drive, Salt Lake City, Utah 84112

T: +1.626.200.9282 E: [email protected]

Honors

- Fellow of The Econometric Society (Elected 2010)
- Fellow of The Society for the Advancement of Economic Theory (Elected 2011)

Academic Appointments – Primary

- David Eccles Professor of Finance, University of Utah, 2013 – present
- William D. Hacker Professor of Economics and Management, Caltech, 2003 – 2013
- Swiss Finance Institute Professor, EPFL, 2007 – 2012
- Professor of Finance, Caltech, 1998 – 2013
- Associate Professor of Finance, Caltech, 1994 – 1998
- Professor of Investments Analysis, Tilburg University, 1994 – 1996
- Assistant Professor of Finance, Caltech, 1990 – 1994
- Assistant Professor of Finance, Carnegie Mellon University, 1987 – 1990

Academic Appointments – Secondary

- Professor, Experimental Finance & Decision Neuroscience (PT), University of Melbourne, 2015 – 2020
- Honorary Professorial Fellow, The Florey Institute of Neuroscience and Mental Health, 2014 – 2016
- Professor, Experimental Finance & Decision Neuroscience, University of Melbourne, 2014
- Visiting Associate in Finance, Caltech, 2013 – 2015
- Visiting Professor, Cambridge University, 2012 – 2014
- Honorary Professorial Fellow, University of Melbourne, 2012 – 2013
- Fellow, UTS Market Design Centre, 2014 – present
- Research Fellow, Centre for Economic Policy Research, 1995 – 2014
- Fellow, University of Zurich Center for Engineering Social and Economic Institutions, 2012 – 2014
- Affiliate, USC Theoretical Research in Neuroeconomic

'Lucas' in the Laboratory

NBER WORKING PAPER SERIES

'LUCAS' IN THE LABORATORY

Elena Asparouhova, Peter Bossaerts, Nilanjan Roy, William Zame

Working Paper 19068, http://www.nber.org/papers/w19068

NATIONAL BUREAU OF ECONOMIC RESEARCH, 1050 Massachusetts Avenue, Cambridge, MA 02138. May 2013

Financial support from Inquire Europe, the Hacker Chair at the California Institute of Technology (Caltech), and the Development Fund of the David Eccles School of Business at the University of Utah is gratefully acknowledged. Zame thanks EIEF for support and hospitality. The paper benefited from discussions during presentations at many academic institutions and conferences. Comments from Klaus Adam, Robert Bloomfield, Luis Braido, Darrell Duffie, John Duffy, Burton Hollifield, Hanno Lustig, Ramon Marimon, Richard Roll, Jose Sheinkman, Jason Shachat, Shyam Sunder, Stijn van Nieuwerburgh and Michael Woodford were particularly helpful. Elena Asparouhova acknowledges support from the National Science Foundation (grant SES-1061844). The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research.

NBER working papers are circulated for discussion and comment purposes. They have not been peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies official NBER publications.

© 2013 by Elena Asparouhova, Peter Bossaerts, Nilanjan Roy, and William Zame. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source.

NBER Working Paper No. 19068, May 2013. JEL No. C92, E21, E32, G12

ABSTRACT

This paper reports on experimental tests of an instantiation of the Lucas asset pricing model with heterogeneous agents and time-varying private income streams.

Download the 2016 Program Here

The George L. Argyros School of Business and Economics, Chapman University

Conference on the Experimental and Behavioral Aspects of Financial Markets

Program: Friday, January 8, 2016. Location: Argyros Forum, Room 201

| Time | Session |
| --- | --- |
| 9 a.m. | Continental Breakfast |
| 9:15 a.m. to 9:30 a.m. | Welcome |
| 9:30 a.m. to 10:10 a.m. | Noussair, “Futures markets, cognitive ability, and mispricing in experimental asset markets” |
| 10:10 a.m. to 10:50 a.m. | Corgnet, “What makes a good trader? On the role of quant skills, behavioral biases and intuition on trader performance” |
| 10:50 a.m. to 11:10 a.m. | Break (coffee and tea provided) |
| 11:10 a.m. to 11:50 a.m. | Biais, “Risk sharing and asset pricing in an experimental market” |
| 11:50 a.m. to 12:30 p.m. | Asparouhova, “Market bubbles and crashes as an expression of tension between social and individual rationality” |
| 12:30 p.m. to 2 p.m. | Lunch (Argyros Forum, Faculty Athenaeum) |
| 2 p.m. to 2:40 p.m. | Plott, “Field tests of parimutuel based information aggregation mechanisms: Box office revenues” |
| 2:40 p.m. to 3:20 p.m. | Bossaerts, “Perception of intentionality in investor attitudes towards financial risks” |
| 3:20 p.m. to 3:40 p.m. | Break (coffee and tea provided) |
| 3:40 p.m. to 4:40 p.m. | Roundtable discussion (Asparouhova, Biais, Bossaerts, Noussair, Odean, Plott, Smith) |
| 4:40 p.m. to 4:50 p.m. | Concluding remarks |

Experimental and Behavioral Aspects of Financial Markets: Speakers’ Bios

Elena Asparouhova: Elena is an Associate Professor in the Finance Department of the University of Utah.

J. Pablo Franco

June 23, 2021

J. Pablo Franco

[email protected], linkedin.com/in/jpablofranco/, ORCID: 0000-0003-2608-2035

EDUCATION

- 2017 - present: PhD in Decision, Risk and Financial Sciences, The University of Melbourne, Melbourne, Australia.
- 2014 - 2016: Research Master’s degree in Cognitive and Clinical Neuroscience - Neuroeconomics Track (Cum Laude), Maastricht University, Maastricht, The Netherlands.
- 2007 - 2012: Bachelor’s degree in Mathematics, Universidad de Los Andes, Bogotá, Colombia.
- 2006 - 2012: Bachelor’s degree in Economics, Universidad de Los Andes, Bogotá, Colombia.

PUBLICATIONS

PEER-REVIEWED

- 2017: Asset Price Bubbles: Existence, Persistence and Migration, Gomez-Gonzalez, J. E., Ojeda-Joya, J. N., Franco, J. P. and Torres, J. E., South African Journal of Economics, DOI: 10.1111/saje.12108.

PREPRINTS

- 2020: Task-independent metrics of computational hardness predict performance of human problem-solving, Franco, J. P., Doroc, K., Yadav, N., Bossaerts, P., Murawski C., BioRxiv, DOI: 2021.04.25.441300.
- 2018: Where the really hard choices are: A general framework to quantify decision difficulty, Franco, J. P., Yadav, N., Bossaerts, P., Murawski C., BioRxiv, DOI: 10.1101/405449.

BOOK CHAPTERS

- 2015: Burbujas en precios de activos financieros: existencia, persistencia y migración (Bubbles in prices of financial assets: existence, persistence and migration), Franco, J. P., Gomez-Gonzalez, J. E., Ojeda-Joya, J. N., Torres, J. E., In Política monetaria y estabilidad financiera en economías pequeñas y abiertas, DOI: 10.32468/ebook.664-314-6.

THESES

- 2021 (forthcoming): Computational complexity of decisions: Quantifying computational hardness and its effects on human computation, PhD Thesis, Decision Risk and Financial Sciences, The University of Melbourne.