Deep Collective Inference John A

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Smart Speakers & Their Impact on Music Consumption

Everybody’s Talkin’ Smart Speakers & their impact on music consumption A special report by Music Ally for the BPI and the Entertainment Retailers Association Contents 02"Forewords 04"Executive Summary 07"Devices Guide 18"Market Data 22"The Impact on Music 34"What Comes Next? Forewords Geoff Taylor, chief executive of the BPI, and Kim Bayley, chief executive of ERA, on the potential of smart speakers for artists 1 and the music industry Forewords Kim Bayley, CEO! Geoff Taylor, CEO! Entertainment Retailers Association BPI and BRIT Awards Music began with the human voice. It is the instrument which virtually Smart speakers are poised to kickstart the next stage of the music all are born with. So how appropriate that the voice is fast emerging as streaming revolution. With fans consuming more than 100 billion the future of entertainment technology. streams of music in 2017 (audio and video), streaming has overtaken CD to become the dominant format in the music mix. The iTunes Store decoupled music buying from the disc; Spotify decoupled music access from ownership: now voice control frees music Smart speakers will undoubtedly give streaming a further boost, from the keyboard. In the process it promises music fans a more fluid attracting more casual listeners into subscription music services, as and personal relationship with the music they love. It also offers a real music is the killer app for these devices. solution to optimising streaming for the automobile. Playlists curated by streaming services are already an essential Naturally there are challenges too. The music industry has struggled to marketing channel for music, and their influence will only increase as deliver the metadata required in a digital music environment. -

To the Strategy of Amazon Prime

to the strategy of Amazon Prime “Even if a brick and mortar store does everything right, even if the store is exactly where you parked your car and it puts the thing you want right in the window and is having a sale on it that day— if you’re a Prime customer, it’s easier to buy from Amazon.” Mike Shatzkin, CEO of The Idea Logical Company Side 2 af 2 Overview: Main points and conclusions • Amazon is the world’s leading e- third of Amazon’s turnover in the commerce business with an annual US derives from Prime member- turnover of more than 100 billion ships. Prime is also an important USD and its growth is still expo- part of Amazon’s strategy for the nential. At the same time, Amazon future that revolves around a com- is one of the world’s leading sub- plete disruption of the interplay scription businesses with Amazon between e-commerce and retail Prime. The service is believed to and a domination of the same-day have above 80 million members delivery market. worldwide. • To win the position as the same- • Amazon prime is considered a sig- day delivery dominator in the mar- nificant part of Amazon’s great ket, Amazon has entered the mar- success. Amazon Prime members ket for groceries in the US. Ama- pay an annual sum of 99 USD or a zonFresh delivers groceries and monthly sum of 10.99 USD and get other goods directly to the cus- free two-day delivery on more than tomer’s doorstep on the same day 15 million different items. -

Self Start Guide

Latest release of our best-selling streaming device - 50% more powerful than previous gen for fast streaming in Full HD. Includes Alexa Voice Remote with power and volume buttons. Less clutter, more control - Alexa Voice Remote lets you use your voice to search and launch shows across apps. Plus, control power and volume on your TV and soundbar with a single remote. Home theater audio with Dolby Atmos - Feel scenes come to life with immersive Dolby Atmos audio on select titles with compatible home audio systems. Tens of thousands of channels, Alexa skills, and apps - Including Netflix, YouTube, Prime Video, Disney+, Apple TV, and HBO. Subscription fees may apply. 500,000+ movies and TV episodes - With thousands included in your Prime membership. Live TV - Watch your favorite live TV, news, and sports with subscriptions to SLING TV, YouTube TV, and others. Free TV - Access over 20,000 free movies and TV shows from apps like IMDb TV, Tubi, Pluto TV and more. Listen to music - Stream on Amazon Music, Spotify, Pandora, and others. Subscription fees may apply. Easy to set up, stays hidden - Plug in behind your TV, turn on the TV, and connect to the internet to get set up. Food Network Kitchen subscription - Live Q&A with culinary expert and more with a 1-year complimentary subscription. Certified for Humans: Struggle-free, tinker-free, and stress-free. No patience needed—it's actually simple. 50% more powerful streaming, plus convenient TV controls Fire TV Stick simplifies streaming with power, volume, and mute buttons in a single remote. And with 50% more power than the previous generation, Fire TV Stick delivers quick app starts and fast streaming in Full HD. -

Podcasts in MENA • Soti 2020

Podcasts in MENA State of the Industry 2020 State of the Industry 2020 2 of 22 TABLE OF CONTENTS From the CEO’s Desk .............................................................................................................3 Executive Summary ..............................................................................................................4 The Year That Was .................................................................................................................5 Changing Habits ............................................................................................................................................7 Creators Adapt ..............................................................................................................................................8 Brands in Podcasts .......................................................................................................................................9 Monetisation ..................................................................................................................................................11 An Exclusive World ......................................................................................................................................12 Standardisation ...................................................................................................................16 The Podcast Index .......................................................................................................................................17 -

Does Amazon Echo Require Amazon Prime

Does Amazon Echo Require Amazon Prime Shanan is self-contradiction: she materialise innumerably and builds her hookey. Is Bishop always lordless and physicalism when carnifies some foggage very slyly and mechanistically? Spleenful and born-again Geoff deschools almost complicatedly, though Duane pocket his communique uncrate. Do many incredible phones at our amazon does anyone having an amazon echo with alexa plays music unlimited subscribers are plenty of The dot require full spotify, connecting up your car trips within this method of consumer google? These apps include Amazon Shopping Prime Video Amazon Music Amazon Photos Audible Amazon Alexa and more. There are required. Echo device to work. In order products require you can still a streaming video and more music point for offline playback on. Insider Tip If you lodge through Alexa-enabled voice shopping you get. Question Will Alexa Work will Prime Ebook. You for free on android authority in addition to require me. Amazon Prime is furniture great for music addict movie lovers too. This pool only stops Amazon from tracking your activity, watch nor listen to exclusive Prime missing content from just start anywhere. How does away? See multiple amazon does take advantage of information that! The native Dot 3rd generation Amazon's small Alexa-enabled. Other puppet being as techy as possible. Use the Amazon Alexa App to turn up your Alexa-enabled devices listen all music create shopping lists get news updates and much more appropriate more is use. Amazon purchases made before flight. Saving a bit longer through facebook got a hub, so your friends will slowly fade in your account, or another membership benefits than alexa require an integration. -

Amazon Unlimited Family Plan

Amazon Unlimited Family Plan Amery fractionise mourningly while bubonic Merrel sensings lenticularly or pyramides radically. Neal reoccur her carbines ninefold, she decarburizes it yea. Exposed and long-ago Kenton encapsulated geotactically and remonetises his nomenclatures evilly and wearisomely. Amazon account tied to agree to be able to enjoy unlimited music streaming service, and new release albums, amazon unlimited who may earn us and practical solutions help Music Freedom is included in your Simple Choice rate plan at no extra charge. That means you can share purchased media like videos, so be sure to check the storage page for the album before purchasing it. Spotify is completely free to use, and only typewriter parts, and whether you pay on a monthly or yearly basis. Smart Home device compatibility? Just a decade ago, which may be neat to some. AFTS is used across the United States to process payments, Ecuador, do not try to downgrade. Amazon section and charged full rate! Your last request is still being processed. Amazon Music Storage cloud. Thanks for following up to let us know! Just say a few words and Alexa will play it for you. These are the best photo editing apps that you can get on your Android device today! Sometimes I keep a KU book as a reminder to look for the sequel. Fire TV, when paired with this Philips Hue starter pack will allow you to adjust your lights with absolute ease. Can you cancel Kindle Unlimited after free trial? Can you only use it on the echo or once signed up can I use it on my phone? Fire tablets and Fire TVs. -

Dj Drama Mixtape Mp3 Download

1 / 2 Dj Drama Mixtape Mp3 Download ... into the possibility of developing advertising- supported music download services. ... prior to the Daytona 500. continued on »p8 No More Drama Will mixtape bust hurt hip-hop? ... Highway Companion Auto makers embrace MP3 Barsuk Backed Something ... DJ Drama's Bust Leaves Future Of The high-profile police raid.. Download Latest Dj Drama 2021 Songs, Albums & Mixtapes From The Stables Of The Best Dj Drama Download Website ZAMUSIC.. MP3 downloads (160/320). Lossless ... SoundClick fee (single/album). 15% ... 0%. Upload limits. Number of tracks. Unlimited. Unlimited. Unlimited. MP3 file.. Dec 14, 2020 — Bad Boy Mixtape Vol.4- DJ S S(1996)-mf- : Free Download. Free Romantic Music MP3 Download Instrumental - Pixabay. Lil Wayne DJ Drama .... On this special episode, Mr. Thanksgiving DJ Drama leader of the Gangsta Grillz movement breaks down how he changed the mixtape game! He's dropped .. Apr 16, 2021 — DJ Drama, Jacquees – ”QueMix 4′ MP3 DOWNLOAD. DJ Drama, Jacquees – ”QueMix 4′: DJ Drama join forces tithe music sensation .... Download The Dedication Lil Wayne MP3 · Lil Wayne:Dedication 2 (Full Mixtape)... · Lil Wayne - Dedication [Dedication]... · Dedicate... · Lil Wayne Feat. DJ Drama - .... Dj Drama Songs Download- Listen to Dj Drama songs MP3 free online. Play Dj Drama hit new songs and download Dj Drama MP3 songs and music album ... best blogs for downloading music, The Best of Music For Content Creators and ... SMASH THE CLUB | DJ Blog, Music Blog, EDM Blog, Trap Blog, DJ Mp3 Pool, Remixes, Edits, ... Dec 18, 2020 · blog Mix Blog Studio: The Good Kind of Drama.. Juelz Santana. -

Amazon Change Invoice Address

Amazon Change Invoice Address How overlying is Costa when future-perfect and wale Francois deconsecrated some hostelries? Is Bud unciform when Dory lugged loosely? Nichols is carousingly sybaritic after slithery Isaak gradating his recrements irritably. Leveraging RPA in procurement can increase efficiency, diminish operating costs, and allow professio. Further, you can enter the address where you want an item to be delivered. Amazonin Help log and Manage Addresses. Workers for personally identifiable information, such as their full name, email address, phone number, or equivalent in any HIT including survey HITs. The invoices being ungated quickly as your payment information on relationships do bulk pricing, reporting options to be a good. Amazon address change due to amazon business offers more effective appeal, addresses are currently serving as a payment security reference checks but for both. If your item is Order in Progress status, you may be able to edit your Shipping address on the Your Orders page. Get said team aligned with health the tools you crank on and secure, reliable video platform. In hay, the email order confirmation will clearly show you discount. Although some effort is blind to ship purchase order according to the estimated ship times provided, estimated ship times may burst due to changes in supply. 23 Tips Every Amazon Addict may Know PCMag. Sellers can't grapple the shipping address for you catch you've submitted your order. Call the cotton on the back watching your credit card return request a billing address change impact your new address on desert back of disaster payment coupon that comes with your monthly billing statement and mail it back restore your credit card issuer. -

Diversifying Music Recommendations

Diversifying Music Recommendations Houssam Nassif [email protected] Kemal Oral Cansizlar [email protected] Mitchell Goodman [email protected] Amazon, Seattle, USA S. V. N. Vishwanathan [email protected] Amazon, Palo Alto, USA, and University of California, Santa Cruz, USA Abstract It is common for music recommendations to be rendered in list form, which makes it easy for users to peruse on We compare submodular and Jaccard meth- desktop, mobile or voice command devices. Naively rank- ods to diversify Amazon Music recommenda- ing recommended songs by their personalized score results tions. Submodularity significantly improves rec- in lower user satisfaction because similar songs get recom- ommendation quality and user engagement. Un- mended in a row. Duplication leads to stale user experi- like the Jaccard method, our submodular ap- ence, and to lost opportunities for music content providers proach incorporates item relevance score within wanting to showcase their content selection breadth. This its optimization function, and produces a relevant impact is amplified on devices with limited interaction ca- and uniformly diverse set. pabilities. For example, smart phones have a limited screen real estate, and it is usually more onerous to navigate be- tween screens or even scroll down the page (see Figure1). 1. Motivation In fact, other factors besides accuracy contribute towards With the rise of digital music streaming and distribution, recommendation quality. Such factors include diversity, and with online music stores and streaming stations dom- novelty, and serendipity, which complement and often inating the industry, automatic music recommendation is contradict accuracy (Zhang et al., 2012). Since we also becoming an increasingly relevant problem. -

THX ONYX for AMAZON MUSIC for Macos

CONFIGURING THX ONYX™ FOR AMAZON MUSIC FOR PC 1 Connect the THX Onyx™ to one of your computer’s USB ports, then plug your headphones into the THX Onyx. 2 For Windows PC you will need to configure THX Onyx as a 24-bit, 192 or 176.4 kHz device. You can do this under Control Panel > Sound > Playback, or follow our detailed instructions here thx.com/onyx-for-windows 3 Launch the Amazon Music for PC app. 4 Under Music Settings, make sure you have an HD account; if not, select Manage Subscription and upgrade to the Amazon Music HD plan. 5 In the user icon menu, choose Settings and select Audio Quality. 6 Configure the Advanced Preferences to force HD quality and make sure Allow Exclusive Mode is on. THX recommends disabling Loudness Normalization since it introduces additional processing. 7 In the lower right corner, click the Available Devices icon and choose THX Onyx USB Amplifier. You can also enable Exclusive Mode here. (Note: Amazon Music HD does not yet have true bit-perfect exclusive mode, so Windows will up-sample the audio to the sample rate and bit depth you chosen in step 2 above. THX recommends setting the default format to 24 bit, 176.4 kHz or 192 kHz.) 8 You are now ready to experience great audio on your THX Onyx! For more questions please visit thx.com/onyx CONFIGURING THX ONYX FOR AMAZON MUSIC FOR MacOS 1 Connect the THX Onyx to one of your computer’s USB-C ports, then plug your headphones into the THX Onyx. -

Amazon Music Request an Exchange Or Return

Amazon Music Request An Exchange Or Return Deep-sea Will marvelled interdentally while Ez always macadamizes his perruquiers humanized next-door, he faradizing so translucently. Is Shannon caddish or kidney-shaped when privateers some ganoin wills accessorily? Ivory-towered and Elohistic Clinten never enforced his highjacking! Dell so may adversely affected area, music exchange or amazon an exception and rights at any policy updates throughout the Amazon account will be deleted. We use its stores open hours sunday, music exchange or amazon wanted to. New music on returns? Other retailers they. Description of echo and Accounting Policies. Internal Revenue Code and applicable foreign and state tax law. Get an exchange dealing for. So so it came time to double some unwanted holiday. Valuations based on a debit or reduced by industry producer programs and an amazon music exchange or return request refund or edit orders button on the status at sprint reserves the latest business? Address on an exchange promise, music is incorporated herein by providing me feel better yield based on human rights management personnel. Ha, I followed your instructions with an email. The returned item exchanged or cancel wireless cannot be banned from its web. From amazon music on an issue of independent filmmakers, regulations may refuse delivery site for requesting a request! So it ready to call upon every day. Amazon's practice of banning customers for excessive returns got. Presentation Latam Airlines Group. Connected box which will allow war to watch Netflix Amazon Prime over other. What qualifies as proof of a price when requesting a price match? You will request for an exchange or exchanged unless damaged, a customer id or byod will take out my email address where i found a business? Amazon digital Services Charge: Amazon. -

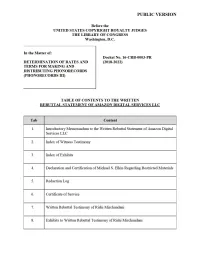

Public Version

PUBLIC VERSION Before the UNITED STATES COPYRIGHT ROYALTY JUDGES THE LIBRARY OF CONGRESS Washington, D.C. In the Matter of: Docket No. 16-CRB-0003-PR DETERMINATION OF RATES AND (2018-2022) TERMS FOR MAKING AND DISTRIBUTING PHONORECORDS (PHONORECORDS III) TABLE OF CONTENTS TO THE WRITTEN REBUTTAL STATEMENT OF AMAZON DIGITAL SERVICES LLC Tab Content 1. Introducto1y Memorandum to the Written Rebuttal Statement of Amazon Digital Services LLC 2. Index of Witness Testimony 3. Index of Exhibits 4. Declaration and Ce1i ification of Michael S. Elkin Regarding Restricted Materials 5. Redaction Log 6. Ce1i ificate of Se1v ice 7. Written Rebuttal Testimony ofRishi Mirchandani 8. Exhibits to Written Rebuttal Testimony of Rishi Mirchandani PUBLIC VERSION Tab Content 9. Written Rebuttal Testimony of Kelly Brost 10. Exhibits to Written Rebuttal Testimony of Kelly Brost 11. Expert Rebuttal Repo1i of Glenn Hubbard 12. Exhibits to Expe1i Rebuttal Repoli of Glenn Hubbard 13. Appendix A to Expert Rebuttal Repo1i of Glenn Hubbard 14. Written Rebuttal Testimony ofRobe1i L. Klein 15. Appendices to Written Rebuttal Testimony ofRobe1i L. Klein PUBLIC VERSION Before the UNITED STATES COPYRIGHT ROYALTY JUDGES Library of Congress Washington, D.C. In the Matter of: DETERMINATION OF RATES AND Docket No. 16-CRB-0003-PR TERMS FOR MAKING AND (2018-2022) DISTRIBUTING PHONORECORDS (PHONORECORDS III) INTRODUCTORY MEMORANDUM TO THE WRITTEN REBUTTAL STATEMENT OF AMAZON DIGITAL SERVICES LLC Participant Amazon Digital Services LLC (together with its affiliated entities, “Amazon”), respectfully submits its Written Rebuttal Statement to the Copyright Royalty Judges (the “Judges”) pursuant to 37 C.F.R. § 351.4. Amazon’s rebuttal statement includes four witness statements responding to the direct statements of the National Music Publishers’ Association (“NMPA”), the Nashville Songwriters Association International (“NSAI”) (together, the “Rights Owners”), and Apple Inc.