Empowering Radiologists and Accelerating AI Adoption Using Clinical Analytics for AI Model Validation, Evaluation, and Performance Monitoring

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Nuance Healthcare by the Numbers

Healthcare Solutions Fact Sheet: Nuance Healthcare by the numbers

Nuance’s healthcare solutions help care teams capture and communicate more than 300 million patient stories each year, freeing them to focus on caring for patients.

Nuance Healthcare at a glance
– Nuance has deployed healthcare solutions to 90% of all U.S. hospitals.
– Nuance clients and partners are located across North America, Europe, the Middle East, Africa and Asia Pacific.
– More than 500,000 clinicians and 10,000 healthcare facilities worldwide leverage Nuance’s award-winning clinical documentation solutions to support a natural clinical workflow on any device.
– Nuance technology powers 14+ billion cloud transactions annually.
– More than 200 healthcare IT and EHR vendors, including Allscripts, athenahealth, Cerner, eClinicalWorks, Epic, NextGen, and MEDITECH, partner with Nuance to ease the burden of clinician documentation.
– Nuance Healthcare transcribes more than 3 billion lines on an annual basis.
– 90% of Nuance clients are in the top 50% of US hospitals in CareChex overall mortality ratings, and see significant quality rating improvements after implementing Nuance’s healthcare solutions.
– Nuance ensures healthcare clients capture more than $1 billion of appropriate reimbursement a year.
– Over 5,000 healthcare facilities use Nuance cloud-based medical image sharing network.
– 1 million imaging studies per month are shared on the PowerShare Network.

Nuance Healthcare’s industry recognition
– 70% of radiology reports in U.S. are created with PowerScribe.
– 2 out of 3 radiologists rely on Nuance every day.
– #1: Nuance named KLAS Software & Services Category Leader for Quality Management four consecutive years.

Buffalo Flexible Income Fund COMMENTARY September 30, 2020

QUARTERLY COMMENTARY: Buffalo Flexible Income Fund, September 30, 2020

Fund Facts (Investor / Institutional): Ticker: BUFBX / BUIBX. Inception Date: 8/12/94 / 7/1/19. Expense Ratio: 1.02% / 0.87%. Fund Assets: $409.09 Million. Category: Large Cap Value. Benchmark: Morningstar Moderately Aggressive Target Risk Index.

Management Team: John Kornitzer, Manager since Inception, B.A. – St. Francis Univ. (PA).

Capital Market Overview: Equity markets sustained their momentum in the 3rd quarter, with the S&P 500 Index returning 8.93%. Macro data continued to improve, and companies broadly reported earnings that proved to be more resilient than expectations. While the U.S. experienced another spike in COVID-19 cases during the quarter and tragically surpassed 200,000 deaths, positive news on vaccines and therapy fronts continued to provide hope for investors. The Russell 3000 Index increased 9.21% in the quarter. Growth continued to outperform value, as the Russell 3000 Growth Index advanced 12.86%, compared to the Russell 3000 Value Index return of 5.41%. Relative performance was correlated with market cap size in the quarter, with the large cap Russell 1000 Index delivering a return of 9.47%, compared to the Russell Mid Cap Index return of 7.46%, the smaller cap Russell 2000 Index return of 4.93%, and the Russell Micro Cap Index return of 3.69%. More cyclically-exposed Consumer Discretionary, Materials, and Industrial sectors performed best in the quarter. Energy was the sole declining sector, hurt by lingering weakness in oil demand.

Performance Commentary: The Buffalo Flexible Income Fund (BUFBX) produced a return of 0.16% for the quarter compared to 6.06% for the Morningstar Moderately Aggressive Target Risk Index.

3D Systems 3M M*Modal 4DMedical Accumetra / QIBA Conformance

3D Systems 3M M*Modal 4DMedical Accumetra / QIBA Conformance Advanced Quality Systems Inc Agfa HealthCare AHRA: The Association For Medical Imaging Management AI Medic Inc. Aidoc Medical Ltd AlgoMedica Inc. Allm North America Alpha Source Group Altamont Software Ambra Health American Association of Physicists in Medicine American College of Radiology Amrad Medical/Summit Industries, LLC Apollo Enterprise Imaging Corp ARRT, The American Registry of Radiologic Technologists Aspect Imaging Association of Vascular and Interventional Radiographers AuntMinnie.com Balzano ScanDiags AI Engineers Barco Bayer Bayer AI BD Beacon Display Technology Co, Ltd Biogen BioMind® Blackford Analysis Bracco Candelis Inc Canon Electron Tubes & Devices Canon USA Inc Carestream Health CCD CEIA USA Ferromagnetic Division Change Healthcare Chison Medical Technologies Co Ltd Circle Cardiovascular Imaging CitiusTech Inc CIVCO Medical Solutions ClariPi Inc Clickview Corporation CMR Codonics Inc Combinostics ContextVision Control-X Medical Inc Coreline Soft CorTechs Labs CureMetrix Inc CurveBeam DeepHealth, Inc. DeepTek Inc Densitas Inc DiA Imaging Analysis Dicom Systems Inc Digitec Srl Double Black Imaging & Image Systems DRTECH North America Dunlee EBM Technologies Inc Echolight Medical E-COM Technology Limited ECORAY Co Ltd EDAN Instruments Inc EIZO Corporation Elsevier Emergent Connect Endra Life Sciences Enlitic Inc Envision Physician Services eRAD Esaote ETS-Lindgren European Society of Radiology (ESR) Flywheel Formlabs Fovia Ai Fraunhofer MEVIS FUJIFILM Medical -

Portfolio of Investments

PORTFOLIO OF INVESTMENTS
Columbia Seligman Premium Technology Growth Fund, Inc., March 31, 2021 (Unaudited)
(Percentages represent value of investments compared to net assets)

Investments in securities

Common Stocks 98.5%

| Issuer | Shares | Value ($) |
|---|---|---|
| Communication Services 9.7% | | |
| Entertainment 1.4% | | |
| Activision Blizzard, Inc. | 59,508 | 5,534,244 |
| Sciplay Corp., Class A(a) | 81,606 | 1,320,385 |
| Total | | 6,854,629 |
| Interactive Media & Services 6.3% | | |
| Alphabet, Inc., Class A(a) | 8,041 | 16,584,724 |
| Alphabet, Inc., Class C(a) | 6,629 | 13,712,948 |
| Match Group, Inc.(a) | 8,000 | 1,099,040 |
| Total | | 31,396,712 |
| Media 1.4% | | |
| Discovery, Inc., Class C(a) | 41,300 | 1,523,557 |
| Fox Corp., Class A | 142,700 | 5,152,897 |
| Total | | 6,676,454 |
| Wireless Telecommunication Services 0.6% | | |
| T-Mobile USA, Inc.(a) | 6,200 | 776,798 |
| Information Technology 84.0% | | |
| Communications Equipment 5.5% | | |
| Arista Networks, Inc.(a) | 13,996 | 4,225,252 |
| Cisco Systems, Inc. | 27,100 | 1,401,341 |
| CommScope Holding Co., Inc.(a) | 119,600 | 1,837,056 |
| F5 Networks, Inc.(a) | 28,700 | 5,987,394 |
| Lumentum Holdings, Inc.(a) | 59,900 | 5,471,865 |
| Plantronics, Inc.(a) | 174,938 | 6,806,838 |
| Telefonaktiebolaget LM Ericsson, ADR | 123,300 | 1,626,327 |
| Total | | 27,356,073 |
| IT Services 7.5% | | |
| DXC Technology Co.(a) | 53,000 | 1,656,780 |
| Fidelity National Information Services, Inc. | 34,400 | 4,836,984 |
| Fiserv, Inc.(a) | 31,600 | 3,761,664 |
| Genpact Ltd. | 72,670 | 3,111,730 |
| Global Payments, Inc. | | |

Eavesdropping Concerns in Samsung Smart TVs (Update) 10 February 2015, by Youkyung Lee

Eavesdropping concerns in Samsung smart TVs (Update) 10 February 2015, by Youkyung Lee

Watch what you say in your living room. Samsung's smart TV could be listening. And sharing. At least that's what you'd conclude in reading Samsung's privacy policy for smart TVs. Voice recognition technology in Samsung's Internet-connected TVs captures and transmits nearby conversations. The policy warns, "Please be aware that if your spoken words include personal or other ...

... the channel or the volume. That speech isn't stored or transmitted, according to Samsung. But the remote control also has a microphone that can not only respond to those commands but also search for content, such as requests to recommend a good movie. The speech is translated by third-party software into text and sent back to the TV as a command. Although Samsung initially declined to name the software company, the blog post identifies it as Nuance Communications Inc. The TV also transmits other information including its unique identifier, both to provide the service and to improve the feature.

Photo caption: In this Jan. 5, 2015 photo, models pose with a Samsung Electronics Co.'s SUHD 4K smart TV during a press conference in Seoul, South Korea. Samsung Electronics Co. on Tuesday, Feb. 10, 2015, said voice recognition technology in its Internet connected TVs can capture and transmit nearby conversations. (AP Photo/Ahn Young-joon)

Photo caption: In this Jan. 5, 2015 photo, a journalist passes by Samsung Electronics Co.'s SUHD 4K smart TVs on display outside the venue of a press conference in Seoul, South Korea. Samsung Electronics Co. ...

Dragon® NaturallySpeaking® for Education: Helping All Students Reach Their Full Potential

DRAGON® NATURALLYSPEAKING® FOR EDUCATION HELPING ALL STUDENTS REACH THEIR FULL POTENTIAL A REVOLUTIONARY EDUCATIONAL TOOL Dragon® NaturallySpeaking®, the world’s best-selling speech recognition software for the PC, enables students, teachers and school administrators to talk to their computers and watch their spoken words appear in documents, email, instant messages and more — three times faster than most people type — with up to 99% accuracy. It lets users dictate and edit in programs like Microsoft® Word, Excel® and Outlook® and OpenOffice Writer, surf the Web using Internet Explorer® and Mozilla® Firefox®, and command and control virtually any Windows application — just by speaking. Dragon NaturallySpeaking has gained broad acceptance as a cost-effective accessibility tool for a wide range of students. It not only provides an alternative input method for students with physical disabilities, but it has also been shown to help students with learning disabilities make significant advances in the areas of reading, writing and spelling. By eliminating the physical demands of typing and cognitive barriers to written communication, Dragon can help all students better realize their full potential. Speech recognition is gaining traction in the general education arena, too. Because people of all abilities are using speech to interact with mainstream technology — computers, handheld devices, navigation systems, gaming consoles, and more — students need to be trained in using voice interfaces. That’s why more middle and secondary schools are adopting Dragon as part of their business education curriculum. This training helps students boost productivity, prepare for tomorrow’s workforce, and prevent increasingly common repetitive stress injuries. REDUCE THE STRESS AND ANXIETY ASSOCIATED WITH HOMEWORK AND REPORTS Imagine the new levels of freedom and success students could experience by easily transferring their ideas into written text. -

Arun Sarin, Former CEO of Vodafone, to Be Appointed Chairman

Nuance Identifies Board of Directors for Cerence Inc., its Automotive Spin-Off; Arun Sarin, Former CEO of Vodafone, to be Appointed Chairman

August 7, 2019

BURLINGTON, Mass., Aug. 07, 2019 (GLOBE NEWSWIRE) -- Nuance Communications, Inc. (NASDAQ: NUAN), a leading provider of conversational AI, today identified the Board of Directors for its upcoming Automotive spin-off, Cerence Inc. Among the directors, the Company announced that Arun Sarin, former CEO of Vodafone, will be appointed Cerence Chairman of the Board when the spin-off is completed on October 1, 2019. In addition to Mr. Sarin, the board members will include Thomas Beaudoin, EVP, Business Transformation, at Nuance; Marianne Budnik, CMO, CyberArk Software; Sanjay Dhawan, future Cerence president and CEO, and a division president and CTO at Harman; Sanjay Jha, general partner at Eclipse Ventures and former CEO of Motorola Mobility, Inc.; Kristi Ann Matus, executive advisor at Thomas H. Lee Partners and former CFO at athenahealth; and Alfred Nietzel, executive consultant and former CFO at CDK Global. “For Cerence’s future Board of Directors, we have assembled a group of highly talented leaders who bring a variety of meaningful, deep talent and expertise,” said Mark Benjamin, CEO of Nuance. “As Nuance prepares to spin the automotive business into Cerence, the extensive knowledge, unique insights, and proven success of this board will serve Cerence and its shareholders well and will be integral to the company’s bright future.”

Industry Veteran and Former Vodafone CEO Arun Sarin to be Named Cerence Chairman of the Board

When the spin-off is completed, Arun Sarin will be named Chairman of the Board for Cerence Inc.

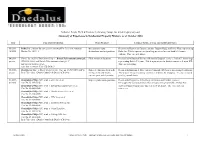

Summary of Experience in Intellectual Property Matters, As of October 2020

Nathaniel Polish, Ph.D. ♦ Daedalus Technology Group, Inc. ♦ [email protected]

Summary of Experience in Intellectual Property Matters, as of October 2020

| Date | Case and Jurisdiction | Work Product | Counsel, Nature of Case and Additional Notes |
|---|---|---|---|
| 06/2019-10/2020 | Roku, Inc. petition for inter partes review of US 7,821,505, Attorney Docket No. 48331.3 | Declarations, reply declarations, and a deposition. | Retained by Haynes and Boone (contacts: Raghav Bajaj and Philip Woo) representing Roku, Inc. This is a patent case involving user interfaces on hand held remote controls. This case is pending. |
| 04/2019-present | Vimeo, Inc and IAC/InterActiveCorp, v. British Telecommunications plc, IPR2019-00833 and British Telecommunications plc. V. IAC/InterActiveCorp et al., Case 1:18-vc-003366-VAC-CJB (D. Del.) | Filed several declarations. | Retained by McDonnell Boehnen Hulbert & Berghoff (contact: Jeffrey P. Armstrong) representing British Telecom. This is a patent case in district court as well as an IPR. This case is pending. |
| 05/2018-present | Retailmenot, Inc. v. Honey Science Corp. Case no. 18-937(CFC)(MPT), In the United States District Court for the District of Delaware | Expert declarations, help with infringement contentions, expert report, and deposition. | Retained by Kirkland & Ellis (contact: Christpher DeCoro) representing Retailmenot. This is a patent case involving ecommerce websites for shopping. The case is stayed pending consolidation. |
| 01/2017-01/2020 | Koninklijke Philips N.V. et al. v. Acer Inc. et al., Case No. 15-1170-GMS | Expert reports and depositions. | Retained by Fitzpatrick, Cella, Harper & Scinto and Venable (contact: Christopher Gerson or Jonathan Sharret) representing Koninklijke Philips N.V. |

Nuance Communications, Inc. Form DEFA14A Filed 2021-04-13

SECURITIES AND EXCHANGE COMMISSION FORM DEFA14A

Additional definitive proxy soliciting materials and Rule 14(a)(12) material

Filing Date: 2021-04-13
SEC Accession No. 0001193125-21-113805

FILER: Nuance Communications, Inc.
CIK: 1002517 | IRS No.: 943156479 | State of Incorp.: DE | Fiscal Year End: 0930
Type: DEFA14A | Act: 34 | File No.: 001-36056 | Film No.: 21821943
SIC: 7372 Prepackaged software
Mailing Address: 1 WAYSIDE ROAD, BURLINGTON MA 01803
Business Address: 1 WAYSIDE ROAD, BURLINGTON MA 01803, 781-565-5000

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, Washington, D.C. 20549

FORM 8-K CURRENT REPORT Pursuant to Section 13 or 15(d) of the Securities Exchange Act of 1934

Date of Report (Date of earliest event reported): April 9, 2021

NUANCE COMMUNICATIONS, INC. (Exact name of registrant as specified in its charter)

Delaware (State or Other Jurisdiction of Incorporation)
000-27038 (Commission File Number)
94-3156479 (I.R.S. Employer Identification No.)

1 Wayside Road, Burlington, Massachusetts 01803 (Address of Principal Executive Offices) (Zip Code)

Registrant's telephone number, including area code: (781) 565-5000

Not Applicable (Former Name or Former Address, if Changed Since Last Report)

Check the appropriate box below if the Form 8-K filing is intended to simultaneously satisfy the filing obligation of the registrant under any of the following provisions (see General Instruction

NUANCE COMMUNICATIONS, INC. (Exact Name of Registrant As Specified in Its Charter)

Table of Contents UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 Form 10-K (Mark One) ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended September 30, 2006 OR o TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from to Commission file number 0-27038 NUANCE COMMUNICATIONS, INC. (Exact name of Registrant as Specified in its Charter) Delaware 94-3156479 (State or Other Jurisdiction of (I.R.S. Employer Incorporation or Organization) Identification No.) 1 Wayside Road Burlington, Massachusetts 01803 (Address of Principal Executive Offices) (Zip Code) Registrant’s telephone number, including area code (781) 565-5000 SECURITIES REGISTERED PURSUANT TO SECTION 12(b) OF THE ACT: None SECURITIES REGISTERED PURSUANT TO SECTION 12(g) OF THE ACT: Common Stock, par value $0.001 per share Preferred Share Purchase Rights Indicate by check mark if the Registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes No o Indicate by check mark if the Registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Act. Yes o No Indicate by check mark whether the Registrant: (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the Registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. -

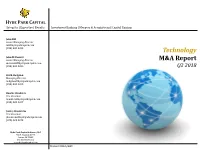

Technology M&A Report

HYDE PARK CAPITAL: Integrity | Expertise | Results
Investment Banking | Mergers & Acquisitions | Capital Raising

Technology M&A Report, Q2 2018

Contacts:
John Hill, Senior Managing Director, [email protected], (813) 383-0205
John McDonald, Senior Managing Director, [email protected], (813) 383-0206
Keith Hodgdon, Managing Director, [email protected], (813) 383-0208
Charlie Hendrick, Vice President, [email protected], (813) 383-0207
Jeffrey Hendricks, Vice President, [email protected], (678) 523-3073

Hyde Park Capital Advisors, LLC, 701 N. Franklin Street, Tampa, FL 33602. Tel: 813-383-0202. www.hydeparkcapital.com. Member FINRA/SIPC

Capital Markets Overview

Commentary: The S&P Global Information Technology Index increased 26.6% during the twelve month period ended June 30, 2018, while the Nasdaq and the S&P 500 increased 22.9% and 11.9%, respectively. IPO activity in the Technology industry increased during Q2 2018 after a slower Q1 2018. GreenSky, Inc. (Nasdaq:GSKY) raised $874 million in the largest IPO of the quarter, valuing the company at $1.6 billion. The Software-as-a-Service (SaaS) and Application Software (Horizontal and Vertical) sectors were the industry’s leaders with respect to valuation during Q2 2018, trading at revenue

Select Technology Public Offerings (Q2 2018)

| Offer Date | Issuer | Industry Sector | Transaction Type | Transaction Value ($mm) |
|---|---|---|---|---|
| 07/26/18 | Tenable Holdings, Inc. | Software | IPO | $251 |
| 06/28/18 | Domo, Inc. | Software | IPO | $193 |
| 06/20/18 | i3 Verticals, Inc. | Data & Analytics | IPO | $86 |
| 06/14/18 | Avalara, Inc. | Software | IPO | $180 |
| 05/23/18 | GreenSky, Inc. | Data & Analytics | IPO | $874 |
| 05/22/18 | EVO Payments, Inc. | Data & Analytics | IPO | $224 |
| 05/17/18 | Adaptive Insights, Inc. | Internet | IPO | $123 |
| 05/16/18 | Pluralsight, Inc. | | | |

Nuance PowerShare™ Network Specifications

Nuance® PowerShare™ Network VRN Specifications – Teleradiology Supplement

Specifications

The purpose of the following is to advise clients of the minimum and recommended specifications for the suite of teleradiology products and services provided by Nuance PowerShare. After reviewing this document, clients will be able to select appropriate hardware and platform software from which to run our software and services.

Contents
VRN Web Portal
Client Outlook – eUnity Viewer (Flash)
Accelerator Gateway (AGW)
VRN Gateway – TurboQA - Legacy
Firewall Considerations – All Products
PowerShare Projected Upload/Download Times

Nuance VRN Specifications, March 23, 2016 (2.2)

VRN Web Portal: PC or MAC Workstation
Processor: Intel® Core™ 2 Duo IV – 3.0+ GHz
RAM: 4 GB
Hard Disk: 10 GB
Video: 1536 x 2048, 16bit+ Colors
Monitor: (17+” LCD)
Other Hardware: Mouse (or trackball), Network Interface Card
Operating System: Windows 7+ 32/64bit* (*Windows 10 not currently supported)
Other