Legacy Processor and Device Support for Fully Virtualized Systems

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

VM Virtualization Tasks Problems of X86 Categories of X86 Virtualization

VM Starts from IBM VM/370 definition: "an efficient, isolated duplicate of a real machine" Old purpose: share the resource of the expensive mainframe machine New purpose: fault tolerance and security; run multiple different OSes on the same desktop; server consolidation and performance isolation, etc. type 1 vmm: vmm running on bare hardware type 2 vmm: vmm running on host os Virtualization tasks (isolate, secure, fast, transparent …) 1. cpu virtualization How to make sure privileged instructions (e.g., enable/disable interrupts; change critical registers) will not be executed directly 2. memory virtualization OS thought they can control the whole physical memory. No! 3. I/O virtualization OS thought I/O devices are all under its own control. No! Problems of X86 1. Some privileged instructions do not trap; they just fail/change-semantic silently. 2. TLB miss will be handled by hardware instead of software Categories of X86 virtualization Full-virtualization (VMWare) No change to guest OS Use binary translation to change some instructions (privilege instructions or all OS code) on the fly Para-virtualization (Xen) Change guest OS to improve performance Rewrite OS; replace privileged instructions with hypercalls Many other changes Disco On MIPS DISCO directly runs on multi-processor machine; Oses run upon DISCO Goal: use commodity OSes to leverage many-core cluster VMM runs in kernel mode VM OS runs in supervisor mode VM user runs in user mode Privileged instructions will trap to VMM and be emulated CPU virtualization No special challenge (seemed that all privilege instructions will trap) VMM maintains a process-table-entry-like data structure for each VM (virtual PC) (including registers, states, and TLB content (used for emulation …) ). -

Rochyderabad 27072017.Pdf

List of Companies under Strike Off Sl.No CIN Number Name of the Company 1 U93000TG1947PLC000008 RAJAHMUNDRY CHAMBER OF COMMERCE LIMITED 2 U80301TG1939GAP000595 HYDERABAD EDUCATIONAL CONFERENCE 3 U52300TG1957PTC000772 GUNTI AND CO PVT LTD 4 U99999TG1964PTC001025 HILITE PRODUCTS PVT LTD 5 U74999AP1965PTC001083 BALAJI MERCHANTS ASSOCIATION PRIVATE LIMITED 6 U92111TG1951PTC001102 PRASAD ART PICTURES PVT LTD 7 U26994AP1970PTC001343 PADMA GRAPHITE INDUSTRIES PRIVATE LIMITED 8 U16001AP1971PTC001384 ALLIED TOBBACCO PACKERS PVT LTD 9 U63011AP1972PTC001475 BOBBILI TRANSPORTS PRIVATE LIMITED 10 U65993TG1972PTC001558 RAJASHRI INVESTMENTS PRIVATE LIMITED 11 U85110AP1974PTC001729 DR RANGARAO NURSING HOME PRIVATE LIMITED 12 U74999AP1974PTC001764 CAPSEAL PVT LTD 13 U21012AP1975PLC001875 JAYALAKSHMI PAPER AND GENERAL MILLS LIMITED 14 U74999TG1975PTC001931 FRUTOP PRIVATE LIMITED 15 U05005TG1977PTC002166 INTERNATIONAL SEA FOOD PVT LTD 16 U65992TG1977PTC002200 VAMSI CHIT FUNDS PVT LTD 17 U74210TG1977PTC002206 HIMALAYA ENGINEERING WORKS PVT LTD 18 U52520TG1978PTC002306 BLUEFIN AGENCIES AND EXPORTS PVT LTD 19 U52110TG1979PTC002524 G S B TRADING PRIVATE LIMITED 20 U18100AP1979PTC002526 KAKINADA SATSANG SAREES PRINTING AND DYEING CO PVT LTD 21 U26942TG1980PLC002774 SHRI BHOGESWARA CEMENT AND MINERAL INDUSTRIES LIMITED 22 U74140TG1980PTC002827 VERNY ENGINEERS PRIVATE LIMITED 23 U27109TG1980PTC002874 A P PRECISION LIGHT ENGINEERING PVT LTD 24 U65992AP1981PTC003086 CHAITANYA CHIT FUNDS PVT LTD 25 U15310AP1981PTC003087 R K FLOUR MILLS PVT LTD 26 U05005AP1981PTC003127 -

Security Analysis of Encrypted Virtual Machines

Security Analysis of Encrypted Virtual Machines Felicitas Hetzelt Robert Buhren Technical University of Berlin Technical University of Berlin Berlin, Germany Berlin, Germany fi[email protected] [email protected] Abstract 1. Introduction Cloud computing has become indispensable in today’s com- Cloud computing has been one of the most prevalent trends puter landscape. The flexibility it offers for customers as in the computer industry in the last decade. It offers clear ad- well as for providers has become a crucial factor for large vantages for both customers and providers. Customers can parts of the computer industry. Virtualization is the key tech- easily deploy multiple servers and dynamically allocate re- nology that allows for sharing of hardware resources among sources according to their immediate needs. Providers can different customers. The controlling software component, ver-commit their hardware and thus increase the overall uti- called hypervisor, provides a virtualized view of the com- lization of their systems. The key technology that made this puter resources and ensures separation of different guest vir- possible is virtualization, it allows multiple operating sys- tual machines. However, this important cornerstone of cloud tems to share hardware resources. The hypervisor is respon- computing is not necessarily trustworthy or bug-free. To mit- sible for providing temporal and spatial separation of the igate this threat AMD introduced Secure Encrypted Virtu- virtual machines (VMs). However, besides these advantages alization, short SEV, which transparently encrypts a virtual virtualization also introduced new risks. machines memory. Customers who want to utilize the infrastructure of a In this paper we analyse to what extend the proposed fea- cloud provider must fully trust the cloud provider. -

Nessus 8.3 User Guide

Nessus 8.3.x User Guide Last Updated: September 24, 2021 Table of Contents Welcome to Nessus 8.3.x 12 Get Started with Nessus 15 Navigate Nessus 16 System Requirements 17 Hardware Requirements 18 Software Requirements 22 Customize SELinux Enforcing Mode Policies 25 Licensing Requirements 26 Deployment Considerations 27 Host-Based Firewalls 28 IPv6 Support 29 Virtual Machines 30 Antivirus Software 31 Security Warnings 32 Certificates and Certificate Authorities 33 Custom SSL Server Certificates 35 Create a New Server Certificate and CA Certificate 37 Upload a Custom Server Certificate and CA Certificate 39 Trust a Custom CA 41 Create SSL Client Certificates for Login 43 Nessus Manager Certificates and Nessus Agent 46 Install Nessus 48 Copyright © 2021 Tenable, Inc. All rights reserved. Tenable, Tenable.io, Tenable Network Security, Nessus, SecurityCenter, SecurityCenter Continuous View and Log Correlation Engine are registered trade- marks of Tenable,Inc. Tenable.sc, Tenable.ot, Lumin, Indegy, Assure, and The Cyber Exposure Company are trademarks of Tenable, Inc. All other products or services are trademarks of their respective Download Nessus 49 Install Nessus 51 Install Nessus on Linux 52 Install Nessus on Windows 54 Install Nessus on Mac OS X 56 Install Nessus Agents 58 Retrieve the Linking Key 59 Install a Nessus Agent on Linux 60 Install a Nessus Agent on Windows 64 Install a Nessus Agent on Mac OS X 70 Upgrade Nessus and Nessus Agents 74 Upgrade Nessus 75 Upgrade from Evaluation 76 Upgrade Nessus on Linux 77 Upgrade Nessus on Windows 78 Upgrade Nessus on Mac OS X 79 Upgrade a Nessus Agent 80 Configure Nessus 86 Install Nessus Home, Professional, or Manager 87 Link to Tenable.io 88 Link to Industrial Security 89 Link to Nessus Manager 90 Managed by Tenable.sc 92 Manage Activation Code 93 Copyright © 2021 Tenable, Inc. -

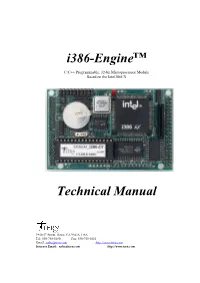

I386-Engine™ Technical Manual

i386-Engine™ C/C++ Programmable, 32-bit Microprocessor Module Based on the Intel386EX Technical Manual 1950 5 th Street, Davis, CA 95616, USA Tel: 530-758-0180 Fax: 530-758-0181 Email: [email protected] http://www.tern.com Internet Email: [email protected] http://www.tern.com COPYRIGHT i386-Engine, VE232, A-Engine, A-Core, C-Engine, V25-Engine, MotionC, BirdBox, PowerDrive, SensorWatch, Pc-Co, LittleDrive, MemCard, ACTF, and NT-Kit are trademarks of TERN, Inc. Intel386EX and Intel386SX are trademarks of Intel Coporation. Borland C/C++ are trademarks of Borland International. Microsoft, MS-DOS, Windows, and Windows 95 are trademarks of Microsoft Corporation. IBM is a trademark of International Business Machines Corporation. Version 2.00 October 28, 2010 No part of this document may be copied or reproduced in any form or by any means without the prior written consent of TERN, Inc. © 1998-2010 1950 5 th Street, Davis, CA 95616, USA Tel: 530-758-0180 Fax: 530-758-0181 Email: [email protected] http://www.tern.com Important Notice TERN is developing complex, high technology integration systems. These systems are integrated with software and hardware that are not 100% defect free. TERN products are not designed, intended, authorized, or warranted to be suitable for use in life-support applications, devices, or systems, or in other critical applications. TERN and the Buyer agree that TERN will not be liable for incidental or consequential damages arising from the use of TERN products. It is the Buyer's responsibility to protect life and property against incidental failure. TERN reserves the right to make changes and improvements to its products without providing notice. -

Hardware Virtualization

Hardware Virtualization E-516 Cloud Computing 1 / 33 Virtualization Virtualization is a vital technique employed throughout the OS Given a physical resource, expose a virtual resource through layering and enforced modularity Users of the virtual resource (usually) cannot tell the difference Different forms: Multiplexing: Expose many virtual resources Aggregation: Combine many physical resources [RAID, Memory] Emulation: Provide a different virtual resource 2 / 33 Virtualization in Operating Systems Virtualizing CPU enables us to run multiple concurrent processes Mechanism: Time-division multiplexing and context switching Provides multiplexing and isolation Similarly, virtualizing memory provides each process the illusion/abstraction of a large, contiguous, and isolated “virtual” memory Virtualizing a resource enables safe multiplexing 3 / 33 Virtual Machines: Virtualizing the hardware Software abstraction Behaves like hardware Encapsulates all OS and application state Virtualization layer (aka Hypervisor) Extra level of indirection Decouples hardware and the OS Enforces isolation Multiplexes physical hardware across VMs 4 / 33 Hardware Virtualization History 1967: IBM System 360/ VM/370 fully virtualizable 1980s–1990s: “Forgotten”. x86 had no support 1999: VMWare. First x86 virtualization. 2003: Xen. Paravirtualization for Linux. Used by Amazon EC2 2006: Intel and AMD develop CPU extensions 2007: Linux Kernel Virtual Machines (KVM). Used by Google Cloud (and others). 5 / 33 Guest Operating Systems VMs run their own operating system (called “guest OS”) Full Virtualization: run unmodified guest OS. But, operating systems assume they have full control of actual hardware. With virtualization, they only have control over “virtual” hardware. Para Virtualization: Run virtualization-aware guest OS that participates and helps in the virtualization. Full machine hardware virtualization is challenging What happens when an instruction is executed? Memory accesses? 
Control I/O devices? Handle interrupts? File read/write? 6 / 33 Full Virtualization Requirements Isolation. -

Protection Strategies for Direct Access to Virtualized I/O Devices

Protection Strategies for Direct Access to Virtualized I/O Devices Paul Willmann, Scott Rixner, and Alan L. Cox Rice University {willmann, rixner, alc}@rice.edu Abstract chine virtualization [1, 2, 5, 8, 9, 10, 14, 19, 22]. How- Commodity virtual machine monitors forbid direct ac- ever, virtualization can impose performance penalties up cess to I/O devices by untrusted guest operating systems to a factor of 5 on I/O-intensive workloads [16, 19]. in order to provide protection and sharing. However, These penalties stem from the overhead of providing both I/O memory management units (IOMMUs) and re- shared and protected access to I/O devices by untrusted cently proposed software-based methods can be used to guest operating systems. Commodity virtualization ar- reduce the overhead of I/O virtualization by providing chitectures provide protection in software by forbidding untrusted guest operating systems with safe, direct ac- direct access to I/O hardware by untrusted guests— cess to I/O devices. This paper explores the performance instead, all I/O accesses, both commands and data, are and safety tradeoffs of strategies for using these mecha- routed through a single software entity that provides both nisms. protection and sharing. The protection strategies presented in this paper pro- Preferably, guest operating systems would be able to vide equivalent inter-guest protection among operating directly access I/O devices without the need for the data system instances. However, they provide varying levels to traverse an intermediate software layer within the vir- of intra-guest protection from driver software and incur tual machine monitor [17, 23]. -

Hardware and Software Companies During the Microcomputer Revolution

Technology Companies Hardware and software houses of the microcomputer age James Tam Recall: Computers Before The Microprocessor James Tam Image: “A History of Computing Technology” (Williams) CPSC 409: The Microcomputer era The Microprocessor1, 2 • Intel was commissioned to design a special purpose system for a client. – Busicom (client): A Japanese hand-held calculator manufacturer – Prior to this the core money making business of Intel was manufacturing computer memory. • “Intel designed a set of four chips known as the MCS-4.”1 – The CPU for the chip was the 4004 (1971) – Also it came with ROM, RAM and a chip for I/O – It was found that by designing a general purpose computer and customizing it through software that this system could meet the client’s needs but reach a larger market. – Clock: 108 kHz3 1 http://www.intel.com/content/www/us/en/history/museum-story-of-intel-4004.html 2 https://spectrum.ieee.org/tech-history/silicon-revolution/chip-hall-of-fame-intel-4004-microprocessor James Tam 3 http://www.intel.com/pressroom/kits/quickreffam.htm The Microprocessor1,2 (2) • Intel negotiated an arrangement with Busicom so it could freely sell these chips to others. – Busicom eventually went bankrupt! – Intel purchased the rights to the chip and marketed it on their own. James Tam CPSC 409: The Microcomputer era The Microprocessor (3) • 8080 processor: second 8 bit (data) microprocessor (first was 8008). – Clock speed: 2 MHz – Used to power the Altair computer – Many, many other processors came after this: • 80286, 80386, 80486, Pentium Series I – IV, Celeron, Core • The microprocessors development revolutionized computers by allowing computers to be more widely used. -

A Viga T Ing R T Ificia L N Te Ll Igence

July 24, 2018 Semiconductor Get real with artificial intelligence (AI) "Seriously, do you think you could actually purchase one of my kind in Walmart, say in the next 10 years?" NTELLIGENCE I "You do?! You'd better read this report from RTIFICIAL RTIFICIAL cover to cover, and I assure you Peter is not being funny at all this time." A ■ Fantasies remain in Star Trek. Let’s talk about practical AI technologies. ■ There are practical limitations in using today’s technology to realise AI elegantly. ■ AI is to be enabled by a collaborative ecosystem, likely dominated by “gorillas”. ■ An explosion of innovations in AI is happening to enhance user experience. ■ Rewards will go to the problem solvers that have invested in R&D ahead of others. Analyst(s) AVIGATING AVIGATING Peter CHAN T (82) 2 6730 6128 E [email protected] N IMPORTANT DISCLOSURES, INCLUDING ANY REQUIRED RESEARCH CERTIFICATIONS, ARE PROVIDED AT THE Powered by END OF THIS REPORT. IF THIS REPORT IS DISTRIBUTED IN THE UNITED STATES IT IS DISTRIBUTED BY CIMB the EFA SECURITIES (USA), INC. AND IS CONSIDERED THIRD-PARTY AFFILIATED RESEARCH. Platform Navigating Artificial Intelligence Technology - Semiconductor│July 24, 2018 TABLE OF CONTENTS KEY CHARTS .......................................................................................................................... 4 Executive Summary .................................................................................................................. 5 I. From human to machine .......................................................................................................10 -

DOS Virtualized in the Linux Kernel

DOS Virtualized in the Linux Kernel Robert T. Johnson, III Abstract Due to the heavy dominance of Microsoft Windows® in the desktop market, some members of the software industry believe that new operating systems must be able to run Windows® applications to compete in the marketplace. However, running applications not native to the operating system generally causes their performance to degrade significantly. When the application and the operating system were written to run on the same machine architecture, all of the instructions in the application can still be directly executed under the new operating system. Some will only need to be interpreted differently to provide the required functionality. This paper investigates the feasibility and potential to speed up the performance of such applications by including the support needed to run them directly in the kernel. In order to avoid the impact to the kernel when these applications are not running, the needed support was built as a loadable kernel module. 1 Introduction New operating systems face significant challenges in gaining consumer acceptance in the desktop marketplace. One of the first realizations that must be made is that the majority of this market consists of non-technical users who are unlikely to either understand or have the desire to understand various technical details about why the new operating system is “better” than a competitor’s. This means that such details are extremely unlikely to sway a large amount of users towards the operating system by themselves. The incentive for a consumer to continue using their existing operating system or only upgrade to one that is backwards compatible is also very strong due to the importance of application software. -

CP/M-80 Kaypro

$3.00 June-July 1985 . No. 24 TABLE OF CONTENTS C'ing Into Turbo Pascal ....................................... 4 Soldering: The First Steps. .. 36 Eight Inch Drives On The Kaypro .............................. 38 Kaypro BIOS Patch. .. 40 Alternative Power Supply For The Kaypro . .. 42 48 Lines On A BBI ........ .. 44 Adding An 8" SSSD Drive To A Morrow MD-2 ................... 50 Review: The Ztime-I .......................................... 55 BDOS Vectors (Mucking Around Inside CP1M) ................. 62 The Pascal Runoff 77 Regular Features The S-100 Bus 9 Technical Tips ........... 70 In The Public Domain... .. 13 Culture Corner. .. 76 C'ing Clearly ............ 16 The Xerox 820 Column ... 19 The Slicer Column ........ 24 Future Tense The KayproColumn ..... 33 Tidbits. .. .. 79 Pascal Procedures ........ 57 68000 Vrs. 80X86 .. ... 83 FORTH words 61 MSX In The USA . .. 84 On Your Own ........... 68 The Last Page ............ 88 NEW LOWER PRICES! NOW IN "UNKIT"* FORM TOO! "BIG BOARD II" 4 MHz Z80·A SINGLE BOARD COMPUTER WITH "SASI" HARD·DISK INTERFACE $795 ASSEMBLED & TESTED $545 "UNKIT"* $245 PC BOARD WITH 16 PARTS Jim Ferguson, the designer of the "Big Board" distributed by Digital SIZE: 8.75" X 15.5" Research Computers, has produced a stunning new computer that POWER: +5V @ 3A, +-12V @ 0.1A Cal-Tex Computers has been shipping for a year. Called "Big Board II", it has the following features: • "SASI" Interface for Winchester Disks Our "Big Board II" implements the Host portion of the "Shugart Associates Systems • 4 MHz Z80-A CPU and Peripheral Chips Interface." Adding a Winchester disk drive is no harder than attaching a floppy-disk The new Ferguson computer runs at 4 MHz. -

Intel Embedded Graphics Drivers, EFI Video Driver, and Video BIOS V10.4

Intel® Embedded Graphics Drivers, EFI Video Driver, and Video BIOS v10.4 User’s Guide April 2011 Document Number: 274041-032US INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The information here is subject to change without notice. Do not finalize a design with this information. The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.