C Compiler Aided Design of Application-Specific Instruction-Set Processors Using the Machine Description Language LISA

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Glibc and System Calls Documentation Release 1.0

Glibc and System Calls Documentation, Release 1.0. Rishi Agrawal, Dec 28, 2017. Contents: 1 Introduction (1.1 Acknowledgements). 2 Basics of a Linux System (2.1 Introduction; 2.2 Programs and Compilation; 2.3 Libraries; 2.4 System Calls; 2.5 Kernel; 2.6 Conclusion; 2.7 References). 3 Working with glibc (3.1 Introduction; 3.2 Why this chapter; 3.3 What is glibc; 3.4 Download and extract glibc; 3.5 Walkthrough glibc; 3.6 Reading some functions of glibc; 3.7 Compiling and installing glibc; 3.8 Using new glibc; 3.9 Conclusion). 4 System Calls On x86_64 from User Space (4.1 Setting Up Arguments; 4.2 Calling the System Call; 4.3 Retrieving the Return Value ...)

Preview Objective-C Tutorial (PDF Version)

Objective-C: About the Tutorial. Objective-C is a general-purpose, object-oriented programming language that adds Smalltalk-style messaging to the C programming language. It is the main programming language used by Apple for the OS X and iOS operating systems and their respective APIs, Cocoa and Cocoa Touch. This reference takes a simple and practical approach to learning the Objective-C programming language. Audience: This reference has been prepared for beginners, to help them understand basic to advanced concepts of the Objective-C programming language. Prerequisites: Before you start practicing with the examples given in this reference, it is assumed that you already know what a computer program is and what a computer programming language is. Copyright & Disclaimer: © Copyright 2015 by Tutorials Point (I) Pvt. Ltd. All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book can retain a copy for future reference, but commercial use of this data is not allowed. Distribution or republishing of any content or part of the content of this e-book in any manner is also not allowed without the written consent of the publisher. We strive to update the contents of our website and tutorials as promptly and precisely as possible; however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness or completeness of our website or its contents, including this tutorial. If you discover any errors on our website or in this tutorial, please notify us at [email protected]

Resourceable, Retargetable, Modular Instruction Selection Using a Machine-Independent, Type-Based Tiling of Low-Level Intermediate Code

Reprinted from Proceedings of the 2011 ACM Symposium on Principles of Programming Languages (POPL’11). Resourceable, Retargetable, Modular Instruction Selection Using a Machine-Independent, Type-Based Tiling of Low-Level Intermediate Code. Norman Ramsey and João Dias, Department of Computer Science, Tufts University. Abstract: We present a novel variation on the standard technique of selecting instructions by tiling an intermediate-code tree. Typical compilers use a different set of tiles for every target machine. By analyzing a formal model of machine-level computation, we have developed a single set of tiles that is machine-independent while retaining the expressive power of machine code. Using this tileset, we reduce the number of tilers required from one per machine to one per architectural family (e.g., register architecture or stack architecture). Because the tiler is the part of the instruction selector that is most difficult to reason about, our technique makes it possible to retarget an instruction selector with significantly less effort than standard techniques. Main text: Our compiler infrastructure is built on C--, an abstraction that encapsulates an optimizing code generator so it can be reused with multiple source languages and multiple target machines (Peyton Jones, Ramsey, and Reig 1999; Ramsey and Peyton Jones 2000). C-- accommodates multiple source languages by providing two main interfaces: the C-- language is a machine-independent, language-independent target language for front ends; the C-- run-time interface is an API which gives the run-time system access to the states of suspended computations. C-- is not a universal intermediate language (Conway 1958) or a “write-once, run-anywhere” intermediate language encapsulating a rigidly defined compiler and run-time system (Lindholm and ...

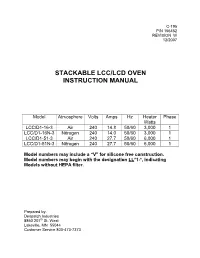

Stackable LCC/LCD Oven Instruction Manual

C-195, P/N 156452, Revision W, 12/2007. STACKABLE LCC/LCD OVEN INSTRUCTION MANUAL

| Model | Atmosphere | Volts | Amps | Hz | Heater Watts | Phase |
|---|---|---|---|---|---|---|
| LCC/D1-16-3 | Air | 240 | 14.8 | 50/60 | 3,000 | 1 |
| LCC/D1-16N-3 | Nitrogen | 240 | 14.0 | 50/60 | 3,000 | 1 |
| LCC/D1-51-3 | Air | 240 | 27.7 | 50/60 | 6,000 | 1 |
| LCC/D1-51N-3 | Nitrogen | 240 | 27.7 | 50/60 | 6,000 | 1 |

Model numbers may include a “V” for silicone free construction. Model numbers may begin with the designation LL *1-*, indicating Models without HEPA filter. Prepared by: Despatch Industries, 8860 207th St. West, Lakeville, MN 55044. Customer Service 800-473-7373. NOTICE: Users of this equipment must comply with operating procedures and training of operation personnel as required by the Occupational Safety and Health Act (OSHA) of 1970, Section 6 and relevant safety standards, as well as other safety rules and regulations of state and local governments. Refer to the relevant safety standards in OSHA and National Fire Protection Association (NFPA), section 86 of 1990. CAUTION: Setup and maintenance of the equipment should be performed by qualified personnel who are experienced in handling all facets of this type of system. Improper setup and operation of this equipment could cause an explosion that may result in equipment damage, personal injury or possible death. Dear Customer, Thank you for choosing Despatch Industries. We appreciate the opportunity to work with you and to meet your heat processing needs. We believe that you have selected the finest equipment available in the heat processing industry.

The LLVM Instruction Set and Compilation Strategy

The LLVM Instruction Set and Compilation Strategy. Chris Lattner and Vikram Adve, University of Illinois at Urbana-Champaign, {lattner,vadve}@cs.uiuc.edu. Abstract: This document introduces the LLVM compiler infrastructure and instruction set, a simple approach that enables sophisticated code transformations at link time, runtime, and in the field. It is a pragmatic approach to compilation, interfering with programmers and tools as little as possible, while still retaining extensive high-level information from source-level compilers for later stages of an application’s lifetime. We describe the LLVM instruction set, the design of the LLVM system, and some of its key components. 1 Introduction: Modern programming languages and software practices aim to support more reliable, flexible, and powerful software applications, increase programmer productivity, and provide higher level semantic information to the compiler. Unfortunately, traditional approaches to compilation either fail to extract sufficient performance from the program (by not using interprocedural analysis or profile information) or interfere with the build process substantially (by requiring build scripts to be modified for either profiling or interprocedural optimization). Furthermore, they do not support optimization either at runtime or after an application has been installed at an end-user’s site, when the most relevant information about actual usage patterns would be available. The LLVM Compilation Strategy is designed to enable effective multi-stage optimization (at compile-time, link-time, runtime, and offline) and more effective profile-driven optimization, and to do so without changes to the traditional build process or programmer intervention. LLVM (Low Level Virtual Machine) is a compilation strategy that uses a low-level virtual instruction set with rich type information as a common code representation for all phases of compilation.

Software Orchestration of Instruction Level Parallelism on Tiled Processor Architectures

Software Orchestration of Instruction Level Parallelism on Tiled Processor Architectures, by Walter Lee. B.S., Computer Science, Massachusetts Institute of Technology, 1995; M.Eng., Electrical Engineering and Computer Science, Massachusetts Institute of Technology, 1995. Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the Massachusetts Institute of Technology, May 2005. © 2005 Massachusetts Institute of Technology. All rights reserved. Signature of Author: Department of Electrical Engineering and Computer Science, May 16, 2005. Certified by: Anant Agarwal, Professor of Computer Science and Engineering, Thesis Supervisor. Certified by: Saman Amarasinghe, Associate Professor of Computer Science and Engineering, Thesis Supervisor. Accepted by: Arthur C. Smith, Chairman, Departmental Graduate Committee. ABSTRACT: Projection from silicon technology is that while transistor budget will continue to blossom according to Moore's law, latency from global wires will severely limit the ability to scale centralized structures at high frequencies. A tiled processor architecture (TPA) eliminates long wires from its design by distributing its resources over a pipelined interconnect. By exposing the spatial distribution of these resources to the compiler, a TPA allows the compiler to optimize for locality, thus minimizing the distance that data needs to travel to reach the consuming computation.

The lcc 4.x Code-Generation Interface

The lcc 4.x Code-Generation Interface. Christopher W. Fraser and David R. Hanson, Microsoft Research. July 2001. Technical Report MSR-TR-2001-64. Abstract: Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler: Design and Implementation. This report details the lcc 4.x code-generation interface, which defines the interaction between the target-independent front end and the target-dependent back ends. This interface differs from the interface described in Chap. 5 of A Retargetable C Compiler. Additional information about lcc is available at http://www.cs.princeton.edu/software/lcc/. Microsoft Research, Microsoft Corporation, One Microsoft Way, Redmond, WA 98052, http://www.research.microsoft.com/. 1. Introduction: Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler [1]. Version 4.x is the current release of lcc, and it uses a different code-generation interface than the interface described in Chap. 5 of Reference 1. This report details the 4.x interface. Lcc distributions are available at http://www.cs.princeton.edu/software/lcc/ along with installation instructions [2]. The code generation interface specifies the interaction between lcc’s target-independent front end and target-dependent back ends. The interface consists of a few shared data structures; a 33-operator language, which encodes the executable code from a source program in directed acyclic graphs, or dags; and 18 functions that manipulate or modify dags and other shared data structures. On most targets, implementations of many of these functions are very simple.

Emerging Technologies Multi/Parallel Processing

Emerging Technologies Multi/Parallel Processing. Mary C. Kulas, New Computing Structures, Strategic Relations Group, December 1987. For Internal Use Only. Copyright © 1987 by Digital Equipment Corporation. Printed in U.S.A. The information contained herein is confidential and proprietary. It is the property of Digital Equipment Corporation and shall not be reproduced or copied in whole or in part without written permission. This is an unpublished work protected under the Federal copyright laws. The following are trademarks of Digital Equipment Corporation, Maynard, MA 01754: DECpage, LN03. This report was produced by Educational Services with DECpage and the LN03 laser printer. Contents: Acknowledgments; Abstract; Executive Summary. I. Analysis: A. The Players (1. Number and Status; 2. Funding; 3. Strategic Alliances; 4. Sales: a. Revenue/Units Installed, b. European Sales); B. The Product (1. CPUs; 2. Chip; 3. Bus; 4. Vector Processing; 5. Operating System; 6. Languages; 7. Third-Party Applications; 8. Pricing); C. IBM and Other Major Computer Companies; D. Why Success? Why Failure?; E. Future Directions. II. Company/Product Profiles: A. Multi/Parallel Processors (1. Alliant; 2. Astronautics; 3. Concurrent; 4. Cydrome; 5. Eastman Kodak; 6. Elxsi; 7. Encore; 8. Flexible; 9. Floating Point Systems - M64line; 10. International Parallel; 11. Loral; 12. Masscomp; 13. Meiko; 14. Multiflow; 15. Sequent); B. Massively Parallel (1. Ametek; 2. Bolt Beranek & Newman Advanced Computers ...)

The GLib/GTK+ Development Platform

The GLib/GTK+ Development Platform: A Getting Started Guide. Version 0.8. Sébastien Wilmet, March 29, 2019. Contents: 1 Introduction: 1.1 License; 1.2 Financial Support; 1.3 Todo List for this Book and a Quick 2019 Update; 1.4 What is GLib and GTK+?; 1.5 The GNOME Desktop; 1.6 Prerequisites; 1.7 Why and When Using the C Language? (1.7.1 Separate the Backend from the Frontend; 1.7.2 Other Aspects to Keep in Mind); 1.8 Learning Path; 1.9 The Development Environment; 1.10 Acknowledgments. Part I, GLib, the Core Library. 2 GLib, the Core Library: 2.1 Basics (2.1.1 Type Definitions; 2.1.2 Frequently Used Macros; 2.1.3 Debugging Macros; 2.1.4 Memory; 2.1.5 String Handling); 2.2 Data Structures (2.2.1 Lists; 2.2.2 Trees; 2.2.3 Hash Tables); 2.3 The Main Event Loop; 2.4 Other Features. Part II, Object-Oriented Programming in C. 3 Semi-Object-Oriented Programming in C: 3.1 Header Example (3.1.1 Project Namespace; 3.1.2 Class Namespace; 3.1.3 Lowercase, Uppercase or CamelCase?; 3.1.4 Include Guard; 3.1.5 C++ Support; 3.1.6 #include; 3.1.7 Type Definition; 3.1.8 Object Constructor; 3.1.9 Object Destructor ...)

Automatic Isolation of Compiler Errors

Automatic Isolation of Compiler Errors. DAVID B. WHALLEY, Florida State University. This paper describes a tool called vpoiso that was developed to automatically isolate errors in the vpo compiler system. The two general types of compiler errors isolated by this tool are optimization and nonoptimization errors. When isolating optimization errors, vpoiso relies on the vpo optimizer to identify sequences of changes, referred to as transformations, that result in semantically equivalent code and to provide the ability to stop performing improving (or unnecessary) transformations after a specified number have been performed. A compilation of a typical program by vpo often results in thousands of improving transformations being performed. The vpoiso tool can automatically isolate the first improving transformation that causes incorrect output of the execution of the compiled program by using a binary search that varies the number of improving transformations performed. Not only is the illegal transformation automatically isolated, but vpoiso also identifies the location and instant the transformation is performed in vpo. Nonoptimization errors occur from problems in the front end, code generator, and necessary transformations in the optimizer. If another compiler is available that can produce correct (but perhaps more inefficient) code, then vpoiso can isolate nonoptimization errors to a single function. Automatic isolation of compiler errors facilitates retargeting a compiler to a new machine, maintenance of the compiler, and supporting experimentation with new optimizations. General Terms: Compilers, Testing. Additional Key Words and Phrases: Diagnosis procedures, nonoptimization errors, optimization errors. 1. INTRODUCTION: To increase portability compilers are often split into two parts, a front end and a back end.

Exploiting Choice: Instruction Fetch and Issue on an Implementable Simultaneous Multithreading Processor

Exploiting Choice: Instruction Fetch and Issue on an Implementable Simultaneous Multithreading Processor. Dean M. Tullsen, Susan J. Eggers, Joel S. Emer, Henry M. Levy, Jack L. Lo, and Rebecca L. Stamm. Dept of Computer Science and Engineering, University of Washington, Box 352350, Seattle, WA 98195-2350; Digital Equipment Corporation, HLO2-3/J3, 77 Reed Road, Hudson, MA 01749. Abstract: Simultaneous multithreading is a technique that permits multiple independent threads to issue multiple instructions each cycle. In previous work we demonstrated the performance potential of simultaneous multithreading, based on a somewhat idealized model. In this paper we show that the throughput gains from simultaneous multithreading can be achieved without extensive changes to a conventional wide-issue superscalar, either in hardware structures or sizes. We present an architecture for simultaneous multithreading that achieves three goals: (1) it minimizes the architectural impact on the conventional superscalar design, (2) it has minimal perfor... Main text: ... an SMT processor to achieve significantly higher throughput than either a wide superscalar or a multithreaded processor. That paper also demonstrated the advantages of simultaneous multithreading over multiple processors on a single chip, due to SMT's ability to dynamically assign execution resources where needed each cycle. Those results showed SMT's potential based on a somewhat idealized model. This paper extends that work in four significant ways. First, we demonstrate that the throughput gains of simultaneous multithreading are possible without extensive changes to a conventional, wide-issue superscalar processor. We propose an architecture that is more comprehensive, realistic, and heavily leveraged off existing superscalar technology.

Automatic Derivation of Compiler Machine Descriptions

Automatic Derivation of Compiler Machine Descriptions. CHRISTIAN S. COLLBERG, University of Arizona. We describe a method designed to significantly reduce the effort required to retarget a compiler to a new architecture, while at the same time producing fast and effective compilers. The basic idea is to use the native C compiler at compiler construction time to discover architectural features of the new architecture. From this information a formal machine description is produced. Given this machine description, a native code-generator can be generated by a back-end generator such as BEG or burg. A prototype automatic Architecture Discovery Tool (called ADT) has been implemented. This tool is completely automatic and requires minimal input from the user. Given the Internet address of the target machine and the command-lines by which the native C compiler, assembler, and linker are invoked, ADT will generate a BEG machine specification containing the register set, addressing modes, instruction set, and instruction timings for the architecture. The current version of ADT is general enough to produce machine descriptions for the integer instruction sets of common RISC and CISC architectures such as the Sun SPARC, Digital Alpha, MIPS, DEC VAX, and Intel x86. Categories and Subject Descriptors: D.2.7 [Software Engineering]: Distribution, Maintenance, and Enhancement (Portability); D.3.2 [Programming Languages]: Language Classifications (Macro and assembly languages); D.3.4 [Programming Languages]: Processors (Translator writing systems and compiler generators). General Terms: Languages. Additional Key Words and Phrases: Back-end generators, compiler configuration scripts, retargeting. 1. INTRODUCTION: An important aspect of a compiler implementation is its retargetability. For example, a new programming language whose compiler can be quickly retargeted to a new hardware platform or a new operating system is more likely to gain widespread acceptance than a language whose compiler requires extensive retargeting effort.