II-20: Intelligent and Pragmatic Analytic Categorization of Image Collections

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

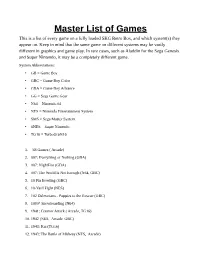

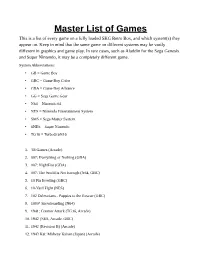

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800) 27. -

Video Game Archive: Nintendo 64

Video Game Archive: Nintendo 64 An Interactive Qualifying Project submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfilment of the requirements for the degree of Bachelor of Science by James R. McAleese Janelle Knight Edward Matava Matthew Hurlbut-Coke Date: 22nd March 2021 Report Submitted to: Professor Dean O’Donnell Worcester Polytechnic Institute This report represents work of one or more WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. Abstract This project was an attempt to expand and document the Gordon Library’s Video Game Archive, more specifically the Nintendo 64 (N64) collection. We made the N64 and related accessories and games more accessible to the WPI community and created an exhibition on The History of 3D Games and Twitch Plays Paper Mario, featuring the N64. Table of Contents: Abstract; Table of Contents; Table of Figures; Acknowledgements; Executive Summary; 1 - Introduction; 2 - Background; 2.1 - A Brief History of Nintendo Co., Ltd. Prior to the Release of the N64 in 1996; 2.2 - The Console and its Competitors; Development of the Console -

![[Japan] SALA GIOCHI ARCADE 1000 Miglia](https://docslib.b-cdn.net/cover/3367/japan-sala-giochi-arcade-1000-miglia-393367.webp)

[Japan] SALA GIOCHI ARCADE 1000 Miglia

NEW PLATINUM PI4 EDITION BOARD The following list covers most of the titles emulated by the NEW PLATINUM Pi4 board (20,000). - Computer games (Amiga, Commodore, PC, etc.) require a computer keyboard and sometimes a USB mouse connected to the console (since those systems were operated with mouse and keyboard). - Games that require spinners (e.g. Arkanoid), steering wheels (racing games), or light guns (e.g. Duck Hunt) may not be controllable with a joystick; they require dedicated peripherals, which cannot currently be configured. - Games that require analog controllers (PlayStation, Nintendo 64, etc.) may not be controllable with single-stick control panels; they require a joypad with analog sticks (sold separately). - This list applies to the NEW PLATINUM EDITION board based on the Raspberry Pi 4. - Emulators for 3D systems (PlayStation, Nintendo 64, Dreamcast) and computers (Amiga, Commodore) are included ONLY in the NEW PLATINUM Pi4, not in the Pi3 Plus and Gold versions. - The Atomiswave and Sega Naomi emulators (Virtua Tennis, Virtua Striker, etc.) are included ONLY on Pi4 boards. - The PLUS Pi3B+ version emulates only 550 ARCADE titles, randomly generated at the time of purchase and not modifiable. Last updated 2 September 2020 GAME NAME EMULATOR 005 SALA GIOCHI ARCADE 1 On 1 Government [Japan] SALA GIOCHI ARCADE 1000 Miglia: Great 1000 Miles Rally SALA GIOCHI ARCADE 10-Yard Fight SALA GIOCHI ARCADE 18 Holes Pro Golf SALA GIOCHI ARCADE 1941: Counter Attack SALA GIOCHI ARCADE 1942 SALA GIOCHI ARCADE 1943 Kai: Midway Kaisen SALA GIOCHI ARCADE 1943: The Battle of Midway [Europe] SALA GIOCHI ARCADE 1944 : The Loop Master [USA] SALA GIOCHI ARCADE 1945k III SALA GIOCHI ARCADE 19XX : The War Against Destiny [USA] SALA GIOCHI ARCADE 2 On 2 Open Ice Challenge SALA GIOCHI ARCADE 4-D Warriors SALA GIOCHI ARCADE 64th. -

THE ART of Puzzle Game Design

THE ART OF Puzzle Design Scott Kim & Alexey Pajitnov with Bob Bates, Gary Rosenzweig, Michael Wyman March 8, 2000 Game Developers Conference These are presentation slides from an all-day tutorial given at the 2000 Game Developers Conference in San Jose, California (www.gdconf.com). After the conference, the slides will be available at www.scottkim.com. Puzzles Part of many games. Adventure, education, action, web But how do you create them? Puzzles are an important part of many computer games. Cartridge-based action puzzle games, CD-ROM puzzle anthologies, adventure games, and educational games all need good puzzles. Good News / Bad News Mental challenge Marketable? Nonviolent Dramatic? Easy to program Hard to invent? Growing market Small market? The good news is that puzzles appeal widely to both males and females of all ages. Although the market is small, it is rapidly expanding, as computers become a mass market commodity and the internet shifts computer games toward familiar, quick, easy-to-learn games. Outline MORNING AFTERNOON What is a puzzle? Guest Speakers Examples Exercise Case studies Question & Design process Answer We’ll start by discussing genres of puzzle games. We’ll study some classic puzzle games, and current projects. We’ll cover the eight steps of the puzzle design process. We’ll hear from guest speakers. Finally we’ll do hands-on projects, with time for question and answer. What is a Puzzle? Five ways of defining puzzle games First, let’s map out the basic genres of puzzle games. Scott Kim 1. Definition of “Puzzle” A puzzle is fun and has a right answer. -

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

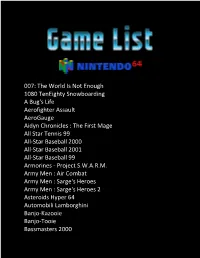

007: the World Is Not Enough 1080 Teneighty Snowboarding a Bug's

007: The World Is Not Enough 1080 TenEighty Snowboarding A Bug's Life Aerofighter Assault AeroGauge Aidyn Chronicles : The First Mage All Star Tennis 99 All-Star Baseball 2000 All-Star Baseball 2001 All-Star Baseball 99 Armorines - Project S.W.A.R.M. Army Men : Air Combat Army Men : Sarge's Heroes Army Men : Sarge's Heroes 2 Asteroids Hyper 64 Automobili Lamborghini Banjo-Kazooie Banjo-Tooie Bassmasters 2000 Batman Beyond : Return of the Joker BattleTanx BattleTanx - Global Assault Battlezone : Rise of the Black Dogs Beetle Adventure Racing! Big Mountain 2000 Bio F.R.E.A.K.S. Blast Corps Blues Brothers 2000 Body Harvest Bomberman 64 Bomberman 64 : The Second Attack! Bomberman Hero Bottom of the 9th Brunswick Circuit Pro Bowling Buck Bumble Bust-A-Move '99 Bust-A-Move 2: Arcade Edition California Speed Carmageddon 64 Castlevania Castlevania : Legacy of Darkness Chameleon Twist Chameleon Twist 2 Charlie Blast's Territory Chopper Attack Clay Fighter : Sculptor's Cut Clay Fighter 63 1-3 Command & Conquer Conker's Bad Fur Day Cruis'n Exotica Cruis'n USA Cruis'n World CyberTiger Daikatana Dark Rift Deadly Arts Destruction Derby 64 Diddy Kong Racing Donald Duck : Goin' Qu@ckers*! Donkey Kong 64 Doom 64 Dr. Mario 64 Dual Heroes Duck Dodgers Starring Daffy Duck Duke Nukem : Zero Hour Duke Nukem 64 Earthworm Jim 3D ECW Hardcore Revolution Elmo's Letter Adventure Elmo's Number Journey Excitebike 64 Extreme-G Extreme-G 2 F-1 World Grand Prix F-Zero X F1 Pole Position 64 FIFA 99 FIFA Soccer 64 FIFA: Road to World Cup 98 Fighter Destiny 2 Fighters -

Putting Tactility Back Into Touch Controls Zach Gage

Controls You Can Feel Putting Tactility Back Into Touch Controls Zach Gage - @helvetica - STFJ.net Tuesday, March 6, 12 so this is a talk on why tactility matters and how to bring that to touch games I am not a scientist I need to say off the bat that I'm not a scientist. I am an artist I'm an artist who has spent a lot of time thinking and working with interaction. From large scale interactive artworks to small scale critically acclaimed iPhone and iPad games to weird art games, to chat software to interactive sculpture to aggressive books, I’ve designed or thought about interaction in dozens of different digital and non-digital contexts. In my experience, if you know what to think about, designing quality interaction is easy, and on touch systems, quality interaction often means designing for tactility. Tactility When I talk about tactility, I’m not just referring to what you feel with your finger tips, I’m talking about the entire experience of physically interacting with a game. This presentation is an attempt to break my process down into rules that you can use to design your own games either for touch screens or ideally, any unfamiliar system of interaction. Interaction possibility dictates game possibility. As far as I can tell, the root of many unsuccessful iPhone control schemes comes from designers not realizing that when you make a game for a touch screen you have to design controls. -

Nintedo 64 Diddy Kong Racing Mario Party 3 Pokémon

Nintedo 64 Diddy Kong Racing Mario Party 3 Pokémon Snap Banjo-Tooie A Bug's Life Pokémon Stadium Super Mario 64 Castlevania : Legacy of Darkness Gex 3 : Deep Cover Gecko Dr. Mario 64 Mario Party Donkey Kong 64 Bomberman 64 : The Second Attack! Mario Party 2 Mario Tennis 1080 TenEighty Snowboarding Pokémon Stadium 2 Donald Duck: Cou@k Att@ck ?*! The Legend of Zelda : Ocarina of Time Mega Man 64 Legend of Zelda : Majora's Mask, The F-Zero X Mario Golf Mario Kart 64 Jeopardy! NBA Hangtime Turok 3 - Shadow of Oblivion Mischief Makers Virtual Chess 64 Lylat Wars San Francisco Rush 2049 BattleTanx Rugrats : Treasure Hunt Transformers : Beast Wars Transmetals Buck Bumble Chopper Attack Star Wars - Episode I - Racer Mystical Ninja Starring Goemon GoldenEye 007 Turok : Dinosaur Hunter Daikatana Earthworm Jim 3D Cruis'n USA NBA In The Zone '98 Armorines - Project S.W.A.R.M. NFL Blitz Conker's Bad Fur Day NBA Live 99 Road Rash 64 South Park Excitebike 64 Doom 64 Resident Evil 2 Wave Race 64 CyberTiger Ms. Pac-Man : Maze Madness Nagano Winter Olympics '98 Rakuga Kids NFL Blitz : Special Edition Bomberman Hero NBA Jam 2000 RR64 : Ridge Racer 64 Stunt Racer 64 Rocket : Robot On Wheels A Bug's Life Batman Beyond : Return of the Joker Elmo's Number Journey Monaco Grand Prix BattleTanx - Global Assault FIFA Soccer 64 Midway's Greatest Arcade Hits : Volume 1 NASCAR 99 Micro Machines 64 Turbo Bust-A-Move '99 Command & Conquer Lode Runner 3-D Star Wars : Rogue Squadron Top Gear Overdrive The New Tetris Top Gear Rally Super Smash Bros. -

Premiere Issue Monkeying Around Game Reviews: Special Report

Atari Coleco Intellivision Computers Vectrex Arcade Classic Gamer Magazine Premiere Issue Fall 1999 www.classicgamer.com U.S. “Because Newer Isn’t Necessarily Better!” Special Report: Classic Videogames at E3 Monkeying Around Revisiting Donkey Kong Game Reviews: Atari, Intellivision, etc... Lost Arcade Classic: Warp Warp Deep Thaw Chris Lion Rediscovers His Atari Plus! · Latest News · Guide to Halloween Games · Win the book, “Phoenix” “As long as you enjoy the system you own and the software made for it, there’s no reason to mothball your equipment just because its manufacturer’s stock dropped.” - Arnie Katz, Editor of Electronic Games Magazine, 1984 Classic Gamer Magazine Fall 1999 3 Volume 1, Version 1.2 Fall 1999 PUBLISHER/EDITOR-IN-CHIEF Chris Cavanaugh - [email protected] ASSOCIATE EDITOR Sarah Thomas - [email protected] STAFF WRITERS Kyle Snyder- [email protected] Reset! 5 Chris Lion - [email protected] Patrick Wong - [email protected] Raves ‘N Rants — Letters from our readers 6 Darryl Guenther - [email protected] Mike Genova - [email protected] Classic Gamer Newswire — All the latest news 8 Damien Quicksilver [email protected] Frank Traut - [email protected] Lee Seitz - [email protected] Book Bytes - Joystick Nation 12 LAYOUT/DESIGN Classic Advertisement — Arcadia Supercharger 14 Chris Cavanaugh PHOTO CREDITS Atari 5200 15 Sarah Thomas - Staff Photographer Pong Machine scan (page 3) courtesy The “New” Classic Gamer — Opinion Column 16 Sean Kelly - Digital Press CD-ROM BIRA BIRA Photos courtesy Robert Batina Lost Arcade Classics — ”Warp Warp” 17 CONTACT INFORMATION Classic Gamer Magazine Focus on Intellivision Cartridge Reviews 18 7770 Regents Road #113-293 San Diego, Ca 92122 Doin’ The Donkey Kong — A closer look at our 20 e-mail: [email protected] on the web: favorite monkey http://www.classicgamer.com Atari 2600 Cartridge Reviews 23 SPECIAL THANKS To Sarah. -

Study of Multimedia Technology in Posture Training for the Elderly

Engineering, 2013, 5, 47-52 http://dx.doi.org/10.4236/eng.2013.510B010 Published Online October 2013 (http://www.scirp.org/journal/eng) Study of Multimedia Technology in Posture Training for the Elderly Chih-Chen Chen1, De-Jou Hong2, Shih-Ching Chen3, Ying-Ying Shih4, Yu-Luen Chen2,5 1Department of Management Information System, Hwa Hsia Institute of Technology, Taipei, Chinese Taipei 2Department of Computer Science, National Taipei University of Education, Taipei, Chinese Taipei 3Department of Physical Medicine & Rehabilitation, Taipei Medical University Hospital, Taipei, Chinese Taipei 4Department Physical & Rehabilitation Medicine, Chang Gung Memorial Hospital, Tao-Yuan, Chinese Taipei 5Department of Information Management, St. Mary’s Medicine, Nursing and Management College, Yilan, Chinese Taipei Email: [email protected], [email protected], [email protected] Received October 2012 ABSTRACT Wrist diseases, also known as “mama hand”, “mouse hand” or “keyboard hand”, are commonly seen and easily overlooked symptoms in daily life. The diseases are mostly related to incorrect exercise or excessive force imposed on hands, leading to tenosynovitis or carpal tunnel syndrome. To alleviate the symptoms or even to recover, besides drug treatment, supplementary rehabilitation training is necessary. The rehabilitation process forces the affected wrist area to continually exercise in order to stimulate the self-repair signals sent to the affected area for partial restoration, if not all, of the supposed functions. In the modern world, using information technology to improve the rehabilitation environment with better approaches has become a trend. In this study, software programs coded in C# and Flash are developed to work on the Bluetooth ball hardware to facilitate the rehabilitation. -

EA Launches New Tetris® Game on the App Store Today

EA Launches New Tetris® Game on the App Store Today Tetris®, Re-imagined! Discover the All-New One Touch Revolution and Experience Visionary New Ways to Play! REDWOOD CITY, Calif.--(BUSINESS WIRE)-- EA Mobile™, a division of Electronic Arts Inc., (NASDAQ: ERTS) and the world's leading mobile games publisher, along with The Tetris Company, LLC, today announced the launch of a re-imagined version of the globally popular puzzle game on the App StoreSM. The new Tetris® App gives fans access to new features that give them revolutionary ways to play on their iPad®, iPhone®, and iPod touch®, including: ● The fan-favorite Marathon Mode. ● A brand new level-based Galaxy Mode, where players can use game-changing power ups to dig down and shatter their scores. ● Innovative Marathon One Touch Mode, a simple and easy-to-use control scheme for Marathon mode that is specially designed for touch screen gameplay. ● New gameplay challenges delivered via the never-before-seen Tetris Log system. ● Tetris Rank functionality that tracks players' lifetime "lines cleared" to assign an overall rank. ● Ability to subscribe to the T-Club, the only Tetris fan club to give members exclusive access to new mobile challenges and content. "The Tetris App for iPad, iPhone and iPod touch was specifically designed to let players experience a re-imagined Tetris universe including the traditional Tetris gameplay they know and love," said Bernard Kim, Senior Vice President of Sales and Marketing for EA™ Interactive. "The innovative new features are sure to bring hours of frenzied fun for both newcomers and seasoned Tetris fans."
In addition to the inclusion of Marathon One Touch and Galaxy modes, as well as the fan-favorite Marathon mode, EA's newest version of the Tetris game also delivers new in-game content for fans, including Tetris Log, an Origin-powered function that delivers fresh challenges and tracks lifetime accumulated "lines cleared" across multiple mobile devices to assign an official Tetris Rank. -

Differential Effects of a Cognitive Interference Task After Encoding

Journal of Experimental Psychology: General © 2019 The Author(s) 2019, Vol. 148, No. 12, 2154–2180 0096-3445/19/$12.00 http://dx.doi.org/10.1037/xge0000598 Intrusive Memories and Voluntary Memory of a Trauma Film: Differential Effects of a Cognitive Interference Task After Encoding Alex Lau-Zhu, Richard N. Henson, and Emily A. Holmes University of Cambridge, United Kingdom Methods to reduce intrusive memories (e.g., of traumatic events) should ideally spare voluntary memory for the same event (e.g., to report on the event in court). Single-trace memory accounts assume that interfering with a trace should impact both its involuntary and voluntary expressions, whereas separate-trace accounts assume these two can dissociate, allowing for selective interference. This possibility was investigated in 3 experiments. Nonclinical participants viewed a trauma film followed by an interference task (Tetris game-play after reminder cues). Next, memory for the film was assessed with various measures. The interference task reduced the number of intrusive memories (diary-based, Experiments 1 and 2), but spared performance on well-matched measures of voluntary retrieval—free recall (Experiment 1) and recognition (Experiments 1 and 2)—challenging single-trace accounts. The interference task did not affect other measures of involuntary retrieval—perceptual priming (Experiment 1) or attentional bias (Experiment 2). However, the interference task did reduce the number of intrusive memories in a laboratory-based vigilance-intrusion task (Experiments 2 and 3), irrespective of concurrent working memory load during intrusion retrieval (Experiment 3). Collectively, results reveal a robust dissociation between intrusive and voluntary memories, having ruled out key methodological differences between how these two memory expressions are assessed, namely cue overlap (Experiment 1), attentional capture (Experiment 2), and retrieval load (Experiment 3).