Apache Tez: a Unifying Framework for Modeling and Building Data Processing Applications

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Digitalised Payment the Era of Rising Fintech

INFOKARA RESEARCH ISSN NO: 1021-9056 DIGITALISED PAYMENT THE ERA OF RISING FINTECH Ms. R.Hishitaa, I MBA, SRMIST, Vadapalani e mail id: [email protected] Dr.S.Gayathry Professor, Department of Management Studies, SRMIST, Vadapalani e mail id: [email protected] ABSTRACT For a long time, Indian citizens have remained skeptical about evolving along with the pace that technology has set.But in recent years, the country has embraced change, and it has brought so much advancement in terms of how payments are simplified. An Electronic payment protocol is a series of transactions,at the end of which a payment has been made, using a token issued by a third party.Consumers expect a safe, convenient and affordable globalised payment platform. The security features for such systems are privacy, authenticity and no repudiation. India being a developing country,encourages innovation. A factor behind the rise of digital payments is the Indian Reserve Bank’s recent policy of Demonetization.One of the fintech innovations that have completely revolutionized how payments work in India is the UPI – an instant, real-time payment system developed by the National Payments Corporation of India (NPCI).Tez, built over the UPI interface, was launched in India in September 2017. Google has rebranded its payment app Google Tez to Google Pay to tap into the growing Indian digital payment space.Google Pay has now become the king of UPI apps.The purpose of this paper is to provide a brief background about the most downloaded finance app, Google Pay andits impacton the Indian Economy. KEYWORDS-e payment, payment protocol, digital payment space Volume 8 Issue 11 2019 888 http://infokara.com/ INFOKARA RESEARCH ISSN NO: 1021-9056 DIGITALISED PAYMENT – THE ERA OF RISING FINTECH 1.1. -

Towards the Performance Analysis of Apache Tez Applications

QUDOS Workshop ICPE’18 Companion, April 9̶–13, 2018, Berlin, Germany Towards the Performance Analysis of Apache Tez Applications José Ignacio Requeno, Iñigo Gascón, José Merseguer Dpto. de Informática e Ingeniería de Sistemas Universidad de Zaragoza, Spain {nrequeno,685215,jmerse}@unizar.es ABSTRACT For leveraging the performance of the framework, Apache Tez Apache Tez is an application framework for large data processing developers customize their applications by several parameters, e.g., using interactive queries. When a Tez developer faces the fulfillment parallelism or scheduling. Certainly, this is not an easy task, so of performance requirements s/he needs to configure and optimize we argue that they need aids to prevent incorrect configurations the Tez application to specific execution contexts. However, these leading to losings, monetary or coding. In our view, these aids are not easy tasks, though the Apache Tez configuration will im- should come early during application design and in the form of pact in the performance of the application significantly. Therefore, predicting the behavior of the Tez application for future demands we propose some steps, towards the modeling and simulation of (e.g., the impact of the stress situations) in performance parameters, Apache Tez applications, that can help in the performance assess- such as response time, throughput or utilization of the devices. ment of Tez designs. For the modeling, we propose a UML profile Aligned with our work in the DICE project [1, 5], this paper for Apache Tez. For the simulation, we propose to transform the presents the first steps towards the modeling and simulation of stereotypes of the profile into stochastic Petri nets, which canbe Apache Tez applications with high performance behavior. -

A Study on Customer Satisfaction of Bharat Interface for Money (BHIM)

International Journal of Innovative Technology and Exploring Engineering (IJITEE) ISSN: 2278-3075, Volume-8 Issue-6, April 2019 A Study on Customer Satisfaction of Bharat Interface for Money (BHIM) Anjali R, Suresh A Abstract: After demonetization on November 8th, 2016, India saw an increased use of different internet payment systems for Mobile banking saw its growth during the period of 2009- money transfer through various devices. NPCI (National 2010 with improvement in mobile internet services across Payments Corporation India) launched Bharat interface for India. SMS based applications along with mobile Money (BHIM) an application run on UPI (Unified Payment application compatible with smartphones offered improved Interface) in December 2016 to cater the growing online payment banking services to the customers. Apart from the bank’s needs. The different modes of digital payments saw a drastic mobile applications other applications like BHIM, Paytm, change in usage in the last 2 years. Though technological Tez etc. offered provided enhanced features that lead to easy innovations brought in efficiency and security in transactions, access to banking services. In addition to this, The Reserve many are still unwilling to adopt and use it. Earlier studies Bank of India has given approval to 80 Banks to start mobile related to adoption, importance of internet banking and payment systems attributed it to some factors which are linked to security, banking services including applications. Bharat Interface for ease of use and satisfaction level of customers. The purpose of money (BHIM) was launched after demonetization by this study is to unfold some factors which have an influence on National Payments Corporation (NPCI) by Prime Minister the customer satisfaction of BHIM application. -

The Five-Step Guide on Capitalizing on the Evolution of Real-Time

THE FIVE-STEP GUIDE TO CAPITALIZING ON THE EVOLUTION OF REAL-TIME PAYMENTS IN INDIA HOW CAN YOU ENSURE YOUR SYSTEMS CAN COPE WITH GROWING DEMAND? THE PAST FEW YEARS HAVE SEEN HUGE ADVANCEMENTS DOING NOTHING IS NOT AN IN HOW PAYMENTS AND BANKING ARE CONDUCTED IN INDIA. OPTION, AND WILL INEVITABLY MEAN FALLING BEHIND, AS Driving this is consumer appetite, with 88% (Unified Payments Interface) by the National of people preferring digital*, cashless Payments Corporation of India (NPCI), OTHER BANKS CAPITALIZE payments over notes, cards and coins; and fundamentally changing the RTP landscape UPON THE SIGNIFICANT a widespread move to online banking. Yet in India. OPPORTUNITY THAT THE the government has also been instrumental in accelerating the growth of financial In the simplest terms, India is signed up to GROWTH AND EVOLUTION innovation, including real-time payments innovative ways of managing money. So, OF RTP PRESENTS. (RTP). Initiatives such as Digital India (due for clearly, this is an opportunity for Indian banks completion in 2019), and the 2016 launch of to prioritize modern, technology-led ways the DigiShala television channel, are promoting of working. digital payments and educating citizens. The Reserve Bank of India’s (RBI) Vision However, many instead face a huge risk. HERE ARE FIVE WAYS THAT BANKS 2018 program worked to strengthen existing The growth of RTP in India is so profound payment systems, and to introduce newer that current solutions designed to facilitate CAN ENSURE THEIR TECHNOLOGY innovative payment products, to help banks it will soon be unable to cope with demand. REMAINS READY FOR REAL- and financiers meet the expectations of the This could cause system failure, increased government’s demonetization plan. -

![25222 SIPA White Paper [Digital Revolution]-PPG.Indd](https://docslib.b-cdn.net/cover/1880/25222-sipa-white-paper-digital-revolution-ppg-indd-1141880.webp)

25222 SIPA White Paper [Digital Revolution]-PPG.Indd

In 2016, the Nasdaq Educational Foundation awarded the Columbia University School of International and Public Affairs (SIPA) a multi-year grant to support initiatives at the intersection of digital entrepreneurship and public policy. Over the past three years, SIPA has undertaken new research, introduced new pedagogy, launched student venture competitions, and convened policy forums that have engaged scholars across Columbia University as well as entrepreneurs and leaders from both the public and private sectors. New research has covered three broad areas: Cities & Innovation; Digital Innovation & Entrepreneurial Solutions; and Emerging Global Digital Policy. Specific topics have included global education technology; cryptocurrencies and the new technologies of money; the urban innovation environment, with a focus on New York City; government measures to support the digital economy in Brazil, Shenzhen, China, and India; and entrepreneurship focused on addressing misinformation. With special thanks to the Nasdaq Educational Foundation for its support of SIPA’s Entrepreneurship and Policy Initiative. SIPA’s Entrepreneurship & Policy Initiative Working Paper Series Digital Revolution, Financial Infrastructure and Entrepreneurship: The Case of India Arvind Panagariya1 Digital Revolution has been sweeping across the world I divide the paper into three parts. Part 1 focuses on over the last three decades. This revolution has spread the spread of financial technologies in India. Part 2 far more rapidly, especially in developing countries, considers how the digital revolution has helped spawn than was the case with either the Industrial or Agricul- and transform the nature of entrepreneurship in the tural Revolution. Indeed, the spread has been so rapid country. Part 3 offers some concluding remarks that China has become its major driver with India emerging as one as well. -

OCCASION This Publication Has Been Made Available to the Public on the Occasion of the 50 Anniversary of the United Nations Indu

OCCASION This publication has been made available to the public on the occasion of the 50th anniversary of the United Nations Industrial Development Organisation. DISCLAIMER This document has been produced without formal United Nations editing. The designations employed and the presentation of the material in this document do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations Industrial Development Organization (UNIDO) concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries, or its economic system or degree of development. Designations such as “developed”, “industrialized” and “developing” are intended for statistical convenience and do not necessarily express a judgment about the stage reached by a particular country or area in the development process. Mention of firm names or commercial products does not constitute an endorsement by UNIDO. FAIR USE POLICY Any part of this publication may be quoted and referenced for educational and research purposes without additional permission from UNIDO. However, those who make use of quoting and referencing this publication are requested to follow the Fair Use Policy of giving due credit to UNIDO. CONTACT Please contact [email protected] for further information concerning UNIDO publications. For more information about UNIDO, please visit us at www.unido.org UNITED NATIONS INDUSTRIAL DEVELOPMENT ORGANIZATION Vienna International Centre, P.O. Box 300, 1400 Vienna, Austria Tel: (+43-1) 26026-0 · www.unido.org · [email protected] 2 8 !'!' 2 5 1.0 1:1 c 3 ,' 111111/ 0 1\llll _ 111111.1 111111. -

How Will Traditional Credit-Card Networks Fare in an Era of Alipay, Google Tez, PSD2 and W3C Payments?

How will traditional credit-card networks fare in an era of Alipay, Google Tez, PSD2 and W3C payments? Eric Grover April 20, 2018 988 Bella Rosa Drive Minden, NV 89423 * Views expressed are strictly the author’s. USA +1 775-392-0559 +1 775-552-9802 (fax) [email protected] Discussion topics • Retail-payment systems and credit cards state of play • Growth drivers • Tectonic shifts and attendant risks and opportunities • US • Europe • China • India • Closing thoughts Retail-payment systems • General-purpose retail-payment networks were the greatest payments and retail-banking innovation in the 20th century. • >300 retail-payment schemes worldwide • Global traditional payment networks • Mastercard • Visa • Tier-two global networks • American Express, • China UnionPay • Discover/Diners Club • JCB Retail-payment systems • Alternative networks building claims to critical mass • Alipay • Rolling up payments assets in Asia • Partnering with acquirers to build global acceptance • M-Pesa • PayPal • Trading margin for volume, modus vivendi with Mastercard, Visa and large credit-card issuers • Opening up, partnering with African MNOs • Paytm • WeChat Pay • Partnering with acquirers to build overseas acceptance • National systems – Axept, Pago Bancomat, BCC, Cartes Bancaires, Dankort, Elo, iDeal, Interac, Mir, Rupay, Star, Troy, Euro6000, Redsys, Sistema 4b, et al The global payments land grab • There have been campaigns and retreats by credit-card issuers building multinational businesses, e.g. Citi, Banco Santander, Discover, GE, HSBC, and Capital One. • Discover’s attempts overseas thus far have been unsuccessful • UK • Diners Club • Network reciprocity • Under Jeff Immelt GE was the worst-performer on the Dow –a) and Synchrony unwound its global franchise • Amex remains US-centric • Merchant acquiring and processing imperative to expand internationally. -

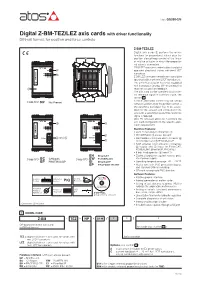

Digital Z-BM-TEZ/LEZ Axis Cards with Driver Functionality DIN-Rail Format, for Position and Force Controls

Table GS330-2/E Digital Z-BM-TEZ/LEZ axis cards with driver functionality DIN-rail format, for position and force controls Z-BM-TEZ/LEZ Digital axis cards ቢ perform the driver functions for proportional valves plus the ባ position closed loop control of the linear or rotative actuator to which the proportio- nal valve is connected. Z-BM-TEZ execution controls direct and pilot USB ቤ T-000 operated directional valves with one LVDT F E D 0123456789 1 2 3 4 1 2 3 4 PW ቩ 1 2 3 4 transducer. -15V -15V +15V ST +15V AGND AGND LVDT_L LVDT_T SOL_S1- SOL_S2- SOL_S1+ SOL_S2+ L1 L2 L3 Z-BM-LEZ execution controls directional pilot www.atos.com made in Italy Z-BM-TEZ-NP operated valves with two LVDT transducers. The controlled actuator has to be equipped 0123456789 P_MONITOR ENABLE F_MONITOR FAULT P_INPUT+ INPUT- F_INPUT+ EARTH V+ V0 VL+ VL0 with transducer (analog, SSI or Encoder) to 1 2 3 4 1 2 3 4 1 2 3 4 A B ቢ C Z-BM-LEZ-NP-05H 10 read the axis position feedback. The axis card can be operated via an exter- nal reference signal or automatic cycle, see section 4 . ባ A force alternated control may be set by Z-BM-TEZ- Not Present NP software additionally to the position control: a pressure/force transducer has to be assem- bled into the actuator and connected to the axis card; a second pressure/force reference signal is required. Atos PC software allows to customize the axis card configuration to the specific appli- USB ቤ USB ቤ cation requirements. -

City of London Corporation Pwc Fintech Series : India-UK Payments Landscape

www.cityoflondon.gov.uk/indiapublications City of London Corporation PwC Fintech series : India-UK Payments Landscape www.pwc.in 1 Executive summary 3 • Objective of our research work • Opportunities in the Payments space • Potential challenges • Our perspectives • Conclusion 2 The Fintech story in India so far 11 • Introduction • Evolution of Fintech in India • Strong Governmental Support for Fintech in India • What makes Fintech unique in India • The digital payments journey in India • Opportunities and Challenges faced by Fintech Payment Firms in India UK’s Fintech growth and regulatory 30 3 landscape on digital payments • The UK’s Fintech growth so far • Regulatory landscape • Payment Services Regulation (PSR) • Open banking under PSD2 Contents • Opportunities and Challenges faced by Fintech Payment Firms in UK India Case studies – Google Tez 46 4 and Paytm 5 Way Forward 51 2 PwC City of London Corporation PwC Fintech series : India-UK Payments Landscape 1 Executive summary 3 PwC City of London Corporation PwC Fintech series : India-UK Payments Landscape Objective of our research work The UK witnessed a major Fintech revolution post City of London Corporation – PwC the global financial crisis of 2008 and the City of London Corporation in particular evolved rapidly in Report Series: embracing innovative technologies and establishing Based on the Fintech round-table events organized a mature Fintech eco-system. When it comes to in India over the past 12-18 months by the City of Financial Services, London has always been the London Corporation representative office in Mumbai, global financial hub and the Fintech growth in there is an increasing appetite from both the UK London is a natural extension of London’s competitive and Indian Fintech players to consider in-bound edge. -

Real-Time Payments Systems & Third Party Access

Real-Time Payments Systems & Third Party Access A perspective from Google Payments November 2019 Contents 3 Executive summary 4 Terms (Glossary) 5 Foreword: Real-time payments in action 10 Case Study: India transforms its banking system by rolling out the Unified Payments Interface 11 Overview 12 The RTP journey for India 16 Learnings 17 Google Pay 19 Real stories 20 Technical recommendations for RTPs 21 Technical Recommendations for the Building Blocks Of An RTP System 21 Transactions: Push or request 21 Mandates 22 The Importance of refunds 22 Clear & traceable merchant settlement 23 Conveyance mechanisms 23 QR codes 24 Tiered KYC 25 Deterministic status of transactions 26 Idempotency 26 Financial institution uptime & health 27 Recommendations for a National Addressing Database (NAD) 28 Recommendations for including Third Parties 30 Direct access to the RTP system with a standardized API 32 The right approach to authentication 34 Trust delegation 35 Matching digital identities to real-world identities 37 Federated identity 37 The importance of privacy 37 Conclusion 38 Annex 39 What are QR codes? 40 Strong customer authentication RTP Systems & Third Party Access 2 Executive summary Just as digital technologies have transformed so much of our lives, from access to information to communicating, the adoption of digital payments is fundamentally transforming banking systems, commerce, and societies around the world. Digital payments are full of promise. They can bring new levels of convenience and efficiencies, security and transparency, access and growth. Countries that have rolled out payment systems are already reaping the benefits. However, due to the inherent complexities of financial systems and of deploying digital technology, there is no one-size-fits-all model. -

COMPETITION COMMISSION of INDIA Case No. 07 of 2020 in Re

COMPETITION COMMISSION OF INDIA Case No. 07 of 2020 In Re: XYZ Informant And 1. Alphabet Inc. 1600 Amphitheatre Parkway Mountain View, CA 94043 United States of America Opposite Party No. 1 2. Google LLC c/o Custodian of Records 1600 Amphitheatre Parkway Mountain View, CA 94043 United States of America Opposite Party No. 2 3. Google Ireland Limited Google Building Gordon House 4 Barrow St, Dublin, D04 E5W5, Ireland Opposite Party No. 3 4. Google India Private Limited No. 3, RMZ Infinity – Tower E, Old Madras Road, 3rd, 4th, and 5th Floors, Bangalore, 560016 Opposite Party No. 4 5. Google India Digital Services Private Limited Unit 207, 2nd Floor Signature Tower-II Tower A, Sector 15 Part II Silokhera, Gurgaon 122001, Haryana, India. Opposite Party No. 5 Case No. 07 of 2020 1 CORAM: Mr. Ashok Kumar Gupta Chairperson Ms. Sangeeta Verma Member Mr. Bhagwant Singh Bishnoi Member Order under Section 26(1) of the Competition Act, 2002 1. The present Information has been filed, on 21.02.2020, under Section 19(1)(a) of the Competition Act, 2002 (the ‘Act’) by XYZ (the ‘Informant’) against Alphabet Inc. (‘OP-1’), Google LLC (‘OP-2’), Google Ireland Limited (‘Google Ireland/ OP-3’), Google India Private Limited (‘Google India/ OP-4’) and Google India Digital Services Private Limited (‘Google Digital Services/ OP-5’) alleging contravention of various provisions of Section 4 of the Act. The opposite parties are hereinafter collectively referred to as ‘Google/ Opposite Parties’. Facts as stated in the Information 2. Google LLC (OP-2) is stated to be a multi-national conglomerate specialising in internet related products and services. -

Fintrack Tracking Innovation in Financial Services About This Product

September 2017 FinTrack Tracking innovation in financial services About This Product Introducing FinTrack, GlobalData’s financial innovations tracker. Every month, FinTrack will showcase the latest innovations from financial providers around the world. Each innovation is assessed and rated on key criteria, providing you with valuable insight. FinTrack will help you to: • Keep up-to-date with the latest innovations from your competitors. • Develop cutting-edge product and channel strategies. • Identify the latest trends in the delivery of financial services. FinTrack: the inside track on the latest financial innovations. September 2017 Table of Contents Consumer Payments Google unveils Tez mobile wallet in India 5 HSBC introduces selfie payments in China 6 Mastercard launches emergency cash service at ATMs 7 General Insurance Alan launches mobile app for health and life insurance 9 Chubb-led partnership launches flight delay cover 10 InMyBag’s claim to replace gadgets within hours 11 Retail Banking Douugh: an AI-based app offering personalized banking 13 Monzo introduces in-app energy supplier switching service 14 Wealth Management Bambu provides white-label robo-advice 17 Citigold Private Client starts Young Successor Program 18 Goldman Sachs launches securities-based lending service 19 September 2017 Consumer Payments Google unveils Tez mobile wallet in India Google has launched a new mobile wallet called Tez in India. The wallet allows users to link up their bank account to the Tez app and make online and in-store payments, as well as P2P payments, using the linked account. A key point about Tez is that it uses audio QR (which is inaudible to human ears) to send payment data and identification between the merchant and the payer’s phone, rather than printed codes, Bluetooth, NFC, or other communication technologies.