On Hash Functions Using Checksums

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Hash Functions

Hash Functions. A hash function is a function that maps data of arbitrary size to an integer of some fixed size. Example: Java's class Object declares function ob.hashCode() for ob an object. It's a hash function written in OO style, as are the next two examples. Java version 7 says that its value is its address in memory turned into an int. Example: For in an object of type Integer, in.hashCode() yields the int value that is wrapped in in. Example: Suppose we define a class Point with two fields x and y. For an object pt of type Point, we could define pt.hashCode() to yield the value of pt.x + pt.y. Hash functions are definitive indicators of inequality but only probabilistic indicators of equality: their values typically have smaller sizes than their inputs, so two different inputs may hash to the same number. If two different inputs should be considered “equal” (e.g. two different objects with the same field values), a hash function must respect that. Therefore, in Java, always override method hashCode() when overriding equals() (and vice versa). Why do we need hash functions? Well, they are critical in (at least) three areas: (1) hashing, (2) computing checksums of files, and (3) areas requiring a high degree of information security, such as saving passwords. Below, we investigate the use of hash functions in these areas and discuss important properties hash functions should have. Hash functions in hash tables: In the tutorial on hashing using chaining, we introduced a hash table b to implement a set of some kind.
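The Point example and the equals()/hashCode() rule above can be sketched in Python, whose `__eq__` and `__hash__` methods play the analogous roles. This is a minimal illustration, not the tutorial's own code: the class below is hypothetical, and hashing on `x + y` is taken from the excerpt only to show that equal objects must hash equally while unequal objects may still collide.

```python
class Point:
    """Hypothetical Point class with the x + y hash from the excerpt."""

    def __init__(self, x, y):
        self.x = x
        self.y = y

    def __eq__(self, other):
        # Two points are "equal" when their field values match.
        if not isinstance(other, Point):
            return NotImplemented
        return self.x == other.x and self.y == other.y

    def __hash__(self):
        # Equal points always produce equal hashes, as the rule requires.
        return hash(self.x + self.y)


a = Point(1, 2)
b = Point(1, 2)   # different object, same field values
c = Point(2, 1)   # different field values, same sum

print(a == b, hash(a) == hash(b))   # equal objects, equal hashes
print(a == c, hash(a) == hash(c))   # unequal objects that collide
print(len({a, b, c}))               # a set keeps two distinct points
```

A differing hash proves inequality, but a matching hash only suggests equality, which is why the set still has to compare `a` and `c` with `__eq__`.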

Selection of Cyclic Redundancy Code and Checksum Algorithms to Ensure Critical Data Integrity

DOT/FAA/TC-14/49. Federal Aviation Administration, William J. Hughes Technical Center, Aviation Research Division, Atlantic City International Airport, New Jersey 08405. Selection of Cyclic Redundancy Code and Checksum Algorithms to Ensure Critical Data Integrity. March 2015. Final Report. This document is available to the U.S. public through the National Technical Information Services (NTIS), Springfield, Virginia 22161. U.S. Department of Transportation, Federal Aviation Administration.

NOTICE: This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of information exchange. The U.S. Government assumes no liability for the contents or use thereof. The U.S. Government does not endorse products or manufacturers. Trade or manufacturers’ names appear herein solely because they are considered essential to the objective of this report. The findings and conclusions in this report are those of the author(s) and do not necessarily represent the views of the funding agency. This document does not constitute FAA policy. Consult the FAA sponsoring organization listed on the Technical Documentation page as to its use. This report is available at the Federal Aviation Administration William J. Hughes Technical Center’s Full-Text Technical Reports page: actlibrary.tc.faa.gov in Adobe Acrobat portable document format (PDF).

Technical Report Documentation Page. 1. Report No.: DOT/FAA/TC-14/49. 2. Government Accession No. 3. Recipient's Catalog No. 4. Title and Subtitle: Selection of Cyclic Redundancy Code and Checksum Algorithms to Ensure Critical Data Integrity. 5. Report Date: March 2015. 6. Performing Organization Code 220410 7. Author(s) 8. Performing Organization Report No.

The Missing Difference Problem, and Its Applications to Counter Mode

The Missing Difference Problem, and its Applications to Counter Mode Encryption. Gaëtan Leurent and Ferdinand Sibleyras, Inria, France. fgaetan.leurent,[email protected] Abstract. The counter mode (CTR) is a simple, efficient and widely used encryption mode using a block cipher. It comes with a security proof that guarantees no attacks up to the birthday bound (i.e. as long as the number of encrypted blocks $\sigma$ satisfies $\sigma \ll 2^{n/2}$), and a matching attack that can distinguish plaintext/ciphertext pairs from random using about $2^{n/2}$ blocks of data. The main goal of this paper is to study attacks against the counter mode beyond this simple distinguisher. We focus on message recovery attacks, with realistic assumptions about the capabilities of an adversary, and evaluate the full time complexity of the attacks rather than just the query complexity. Our main result is an attack to recover a block of message with complexity $\tilde{O}(2^{n/2})$. This shows that the actual security of CTR is similar to that of CBC, where collision attacks are well known to reveal information about the message. To achieve this result, we study a simple algorithmic problem related to the security of the CTR mode: the missing difference problem. We give efficient algorithms for this problem in two practically relevant cases: where the missing difference is known to be in some linear subspace, and when the amount of data is higher than strictly required. As a further application, we show that the second algorithm can also be used to break some polynomial MACs such as GMAC and Poly1305, with a universal forgery attack with complexity $\tilde{O}(2^{2n/3})$.

Linear-XOR and Additive Checksums Don't Protect Damgård-Merkle

Linear-XOR and Additive Checksums Don’t Protect Damgård-Merkle Hashes from Generic Attacks. Praveen Gauravaram1 and John Kelsey2. 1 Technical University of Denmark (DTU), Denmark; Queensland University of Technology (QUT), Australia. [email protected] 2 National Institute of Standards and Technology (NIST), USA. [email protected] Abstract. We consider the security of Damgård-Merkle variants which compute linear-XOR or additive checksums over message blocks, intermediate hash values, or both, and process these checksums in computing the final hash value. We show that these Damgård-Merkle variants gain almost no security against generic attacks such as the long-message second preimage attacks of [10,21] and the herding attack of [9]. 1 Introduction. The Damgård-Merkle construction [3, 14] (DM construction in the rest of this article) provides a blueprint for building a cryptographic hash function, given a fixed-length input compression function; this blueprint is followed for nearly all widely-used hash functions. However, the past few years have seen two kinds of surprising results on hash functions, that have led to a flurry of research: 1. Generic attacks apply to the DM construction directly, and make few or no assumptions about the compression function. These attacks involve attacking a t-bit hash function with more than $2^{t/2}$ work, in order to violate some property other than collision resistance. Examples of generic attacks are Joux multicollision [8], long-message second preimage attacks [10,21] and herding attack [9]. 2. Cryptanalytic attacks apply to the compression function of the hash function.
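The kind of construction the abstract describes can be sketched as follows. This is a toy illustration only, under loud assumptions: the compression function is improvised from truncated SHA-256, the block size, padding layout, and function names are hypothetical, and none of it is the construction analysed in the paper. It shows an iterated hash that also keeps a linear-XOR checksum of the message blocks and processes that checksum as a final block.

```python
import hashlib

BLOCK = 16  # toy block and chaining-value size in bytes (an assumption)


def compress(chaining_value: bytes, block: bytes) -> bytes:
    # Stand-in compression function: truncated SHA-256 of (h || block).
    return hashlib.sha256(chaining_value + block).digest()[:BLOCK]


def pad(message: bytes) -> bytes:
    # Append 0x80, zero bytes, then the 8-byte message length.
    padded = message + b"\x80"
    padded += b"\x00" * (-(len(padded) + 8) % BLOCK)
    return padded + len(message).to_bytes(8, "big")


def xor_checksum(blocks: bytes) -> bytes:
    # Linear-XOR checksum: XOR of all BLOCK-sized blocks.
    checksum = bytes(BLOCK)
    for i in range(0, len(blocks), BLOCK):
        checksum = bytes(a ^ b for a, b in zip(checksum, blocks[i:i + BLOCK]))
    return checksum


def iterated_hash_with_checksum(message: bytes) -> bytes:
    padded = pad(message)
    h = bytes(BLOCK)  # all-zero initial value (an assumption)
    for i in range(0, len(padded), BLOCK):
        h = compress(h, padded[i:i + BLOCK])
    # The checksum is processed as one extra block at the end.
    return compress(h, xor_checksum(padded))


block_a = b"A" * BLOCK
block_b = b"B" * BLOCK
# The XOR checksum ignores block order, which hints at why it is linear
# structure an attacker can control.
print(xor_checksum(block_a + block_b) == xor_checksum(block_b + block_a))
print(iterated_hash_with_checksum(b"hello").hex())
```

Because XOR is commutative, reordering blocks never changes the checksum; the final digest still changes here only because the chaining value depends on block order.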

Hash (Checksum)

Hash Functions. CSC 482/582: Computer Security. Topics: 1. Hash Functions 2. Applications of Hash Functions 3. Secure Hash Functions 4. Collision Attacks 5. Pre-Image Attacks 6. Current Hash Security 7. SHA-3 Competition 8. HMAC: Keyed Hash Functions.

Hash (Checksum) Functions. Hash function h: M → MD. Input M: variable-length message. Output MD: fixed-length “Message Digest” of the input. Many inputs produce the same output: there is a limited number of outputs but an infinite number of inputs. Avalanche effect: a small input change produces a big output change. Example hash function: sum the 32-bit words of the message mod $2^{32}$, so that MD = h(M).

Hash Function: ASCII Parity. The ASCII parity bit is a 1-bit hash function. ASCII has 7 bits; the 8th bit is for “parity”. Even parity: even number of 1 bits. Odd parity: odd number of 1 bits. Bob receives “10111101” as bits. If the sender is using even parity: there are six 1 bits, so the character was received correctly. Note: it could have been garbled, but 2 bits would need to have been changed to preserve parity. If the sender is using odd parity: there is an even number of 1 bits, so the character was not received correctly.

Applications of Hash Functions. Verifying file integrity: How do you know that a file you downloaded was not corrupted during download? Storing passwords: To avoid compromise of all passwords by an attacker who has gained admin access, store hashes of passwords. Digital signatures: Cryptographic verification that data was downloaded from the intended source and not modified. Used for operating system patches and packages.
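The two small hash functions from these slides, the parity bit and the sum of 32-bit words mod $2^{32}$, can be sketched as below. The function names are hypothetical, and the big-endian word order and zero padding of a short final word are assumptions, since the slides do not specify them.

```python
def even_parity_ok(bits: str) -> bool:
    # Under even parity, a valid 8-bit character has an even number of 1 bits.
    return bits.count("1") % 2 == 0


def odd_parity_ok(bits: str) -> bool:
    # Under odd parity, a valid 8-bit character has an odd number of 1 bits.
    return bits.count("1") % 2 == 1


def sum32(message: bytes) -> int:
    # Sum the 32-bit words of the message mod 2**32.
    # Assumptions: big-endian words, final word zero-padded.
    padded = message + b"\x00" * (-len(message) % 4)
    total = 0
    for i in range(0, len(padded), 4):
        total = (total + int.from_bytes(padded[i:i + 4], "big")) % 2**32
    return total


received = "10111101"           # the character Bob receives in the slides
print(received.count("1"))      # six 1 bits
print(even_parity_ok(received))  # accepted under even parity
print(odd_parity_ok(received))   # rejected under odd parity

# Flipping two bits preserves parity, so the error goes undetected.
print(even_parity_ok("01111101"))

# Swapping two words leaves the additive checksum unchanged.
print(sum32(b"\x00\x00\x00\x01\x00\x00\x00\x02") ==
      sum32(b"\x00\x00\x00\x02\x00\x00\x00\x01"))
```

The last two lines illustrate the slide's point that many inputs produce the same output: neither function has the avalanche property expected of a secure hash.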

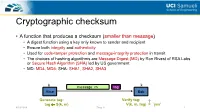

Cryptographic Checksum

Cryptographic checksum • A function that produces a checksum (smaller than the message) • A digest function using a key known only to sender and recipient • Ensures both integrity and authenticity • Used for code-tamper protection and message-integrity protection in transit • The choices of hashing algorithms are Message Digest (MD) by Ron Rivest of RSA Labs or Secure Hash Algorithm (SHA) led by the US government • MD: MD4, MD5; SHA: SHA1, SHA2, SHA3 • Alice and Bob share a key k. Alice generates a tag for message m, tag ← S(k, m), and sends m with the tag; Bob verifies the tag by checking whether V(k, m, tag) = ‘yes’. (4/19/2019, Zhou Li)

Constructing cryptographic checksum • HMAC (Hash Message Authentication Code) • Problem to solve: how to merge the key with the hash. H: hash function; k: key; m: message; example of H: SHA-2-256; output is 256 bits • Building a MAC out of a hash function: paddings make a fixed-size block. https://en.wikipedia.org/wiki/HMAC

Cryptanalysis on crypto checksums • MD5 broken by Xiaoyun Wang et al. in 2004 • Collisions of full MD5 in less than 1 hour on an IBM p690 cluster • SHA-1 theoretically broken by Xiaoyun Wang et al. in 2005 • $2^{69}$ steps ($\ll 2^{80}$ rounds) to find a collision • SHA-1 practically broken by Google in 2017 • First collision found with 6500 CPU years and 100 GPU years. https://shattered.io/static/shattered.pdf

Elliptic Curve Cryptography • RSA algorithm is patented • Alternative asymmetric cryptography: Elliptic Curve Cryptography (ECC) • The general algorithm is in the public domain • ECC can provide similar
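The tag generation S(k, m) and verification V(k, m, tag) on these slides can be sketched with Python's standard `hmac` and `hashlib` modules, using SHA-256 as the hash H. The function names `generate_tag`, `verify_tag`, and `hmac_sha256_manual` are hypothetical; the manual version follows the standard HMAC definition H((k ⊕ opad) || H((k ⊕ ipad) || m)) and is included only to show how the key is merged with the hash.

```python
import hashlib
import hmac


def generate_tag(key: bytes, message: bytes) -> bytes:
    # S(k, m): HMAC-SHA-256 tag computed by the standard library.
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    # V(k, m, tag): recompute the tag and compare in constant time.
    return hmac.compare_digest(generate_tag(key, message), tag)


def hmac_sha256_manual(key: bytes, message: bytes) -> bytes:
    # HMAC spelled out: pad the key to the 64-byte SHA-256 block size,
    # then hash twice with the inner (0x36) and outer (0x5c) pads.
    block_size = 64
    if len(key) > block_size:
        key = hashlib.sha256(key).digest()
    key = key.ljust(block_size, b"\x00")
    inner_key = bytes(b ^ 0x36 for b in key)
    outer_key = bytes(b ^ 0x5C for b in key)
    inner = hashlib.sha256(inner_key + message).digest()
    return hashlib.sha256(outer_key + inner).digest()


key = b"shared secret between Alice and Bob"
message = b"transfer 100 to Bob"
tag = generate_tag(key, message)

print(len(tag) * 8)                                     # tag size in bits
print(verify_tag(key, message, tag))                    # genuine message
print(verify_tag(key, b"transfer 900 to Bob", tag))     # tampered message
print(hmac_sha256_manual(key, message) == tag)          # matches the library
```

Comparing with `hmac.compare_digest` rather than `==` avoids leaking, through timing, how many leading bytes of a forged tag were correct.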

On Hash Functions Using Checksums

Downloaded from orbit.dtu.dk on: Sep 27, 2021. On hash functions using checksums. Gauravaram, Praveen; Kelsey, John; Knudsen, Lars Ramkilde; Thomsen, Søren Steffen. Publication date: 2008. Document Version: Early version, also known as pre-print. Citation (APA): Gauravaram, P., Kelsey, J., Knudsen, L. R., & Thomsen, S. S. (2008). On hash functions using checksums. MAT report No. 2008-06.

General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. Users may download and print one copy of any publication from the public portal for the purpose of private study or research. You may not further distribute the material or use it for any profit-making activity or commercial gain. You may freely distribute the URL identifying the publication in the public portal. If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim.

On hash functions using checksums. Praveen Gauravaram1, John Kelsey2, Lars Knudsen1, and Søren Thomsen1. 1 DTU Mathematics, Technical University of Denmark, Denmark. [email protected],[email protected],[email protected] 2 National Institute of Standards and Technology (NIST), USA. [email protected] Abstract. We analyse the security of iterated hash functions that compute an input dependent checksum which is processed as part of the hash computation.

FIPS 198, the Keyed-Hash Message Authentication Code (HMAC)

ARCHIVED PUBLICATION The attached publication, FIPS Publication 198 (dated March 6, 2002), was superseded on July 29, 2008 and is provided here only for historical purposes. For the most current revision of this publication, see: http://csrc.nist.gov/publications/PubsFIPS.html#198-1. FIPS PUB 198 FEDERAL INFORMATION PROCESSING STANDARDS PUBLICATION The Keyed-Hash Message Authentication Code (HMAC) CATEGORY: COMPUTER SECURITY SUBCATEGORY: CRYPTOGRAPHY Information Technology Laboratory National Institute of Standards and Technology Gaithersburg, MD 20899-8900 Issued March 6, 2002 U.S. Department of Commerce Donald L. Evans, Secretary Technology Administration Philip J. Bond, Under Secretary National Institute of Standards and Technology Arden L. Bement, Jr., Director Foreword The Federal Information Processing Standards Publication Series of the National Institute of Standards and Technology (NIST) is the official series of publications relating to standards and guidelines adopted and promulgated under the provisions of Section 5131 of the Information Technology Management Reform Act of 1996 (Public Law 104-106) and the Computer Security Act of 1987 (Public Law 100-235). These mandates have given the Secretary of Commerce and NIST important responsibilities for improving the utilization and management of computer and related telecommunications systems in the Federal government. The NIST, through its Information Technology Laboratory, provides leadership, technical guidance, and coordination of government efforts in the development of standards and guidelines in these areas. Comments concerning Federal Information Processing Standards Publications are welcomed and should be addressed to the Director, Information Technology Laboratory, National Institute of Standards and Technology, 100 Bureau Drive, Stop 8900, Gaithersburg, MD 20899-8900. 
William Mehuron, Director, Information Technology Laboratory. Abstract: This standard describes a keyed-hash message authentication code (HMAC), a mechanism for message authentication using cryptographic hash functions.

Table of Contents Local Transfers

Table of Contents. Local Transfers: Checking File Integrity; Local File Transfer Commands; Shift Transfer Tool Overview.

Local Transfers: Checking File Integrity. It is a good practice to confirm whether your files are complete and accurate before you transfer the files to or from NAS, and again after the transfer is complete. The easiest way to verify the integrity of file transfers is to use the NAS-developed Shift tool for the transfer, with the --verify option enabled. As part of the transfer, Shift will automatically checksum the data at both the source and destination to detect corruption. If corruption is detected, partial file transfers/checksums will be performed until the corruption is rectified. For example:

pfe21% shiftc --verify $HOME/filename /nobackuppX/username
lou% shiftc --verify /nobackuppX/username/filename $HOME
your_localhost% sup shiftc --verify filename pfe:

In addition to Shift, there are several algorithms and programs you can use to compute a checksum. If the results of the pre-transfer checksum match the results obtained after the transfer, you can be reasonably certain that the data in the transferred files is not corrupted. If
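The pre-transfer and post-transfer comparison described above can be sketched in a few lines. This is a generic illustration, not how Shift works internally: SHA-256 is an assumed choice of algorithm, and `file_sha256` and `transfer_intact` are hypothetical helper names.

```python
import hashlib


def file_sha256(path: str, chunk_size: int = 1 << 20) -> str:
    # Hash the file in chunks so large files need not fit in memory.
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def transfer_intact(source_path: str, destination_path: str) -> bool:
    # Matching checksums make it reasonably certain the copy is not corrupted.
    return file_sha256(source_path) == file_sha256(destination_path)
```

In practice the source checksum would be computed before the transfer and the destination checksum on the receiving system afterwards, for example `transfer_intact("filename", "/path/to/copy/filename")` when both paths are visible from one machine.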

The Effectiveness of Checksums for Embedded Control Networks

IEEE Transactions on Dependable and Secure Computing, vol. 6, no. 1, January-March 2009, p. 59. The Effectiveness of Checksums for Embedded Control Networks. Theresa C. Maxino, Member, IEEE, and Philip J. Koopman, Senior Member, IEEE. Abstract: Embedded control networks commonly use checksums to detect data transmission errors. However, design decisions about which checksum to use are difficult because of a lack of information about the relative effectiveness of available options. We study the error detection effectiveness of the following commonly used checksum computations: exclusive or (XOR), two’s complement addition, one’s complement addition, Fletcher checksum, Adler checksum, and cyclic redundancy codes (CRCs). A study of error detection capabilities for random independent bit errors and burst errors reveals that the XOR, two’s complement addition, and Adler checksums are suboptimal for typical network use. Instead, one’s complement addition should be used for networks willing to sacrifice error detection effectiveness to reduce computational cost, the Fletcher checksum should be used for networks looking for a balance between error detection and computational cost, and CRCs should be used for networks willing to pay a higher computational cost for significantly improved error detection. Index Terms: Real-time communication, networking, embedded systems, checksums, error detection codes.

1 Introduction. A common way to improve network message data integrity is appending a checksum. Although it is well known that cyclic redundancy codes (CRCs) are effective at error detection, many embedded networks employ less [...] However, the use of less capable checksum calculations abounds. One widely used alternative is the exclusive or (XOR) checksum, used by HART [4], Magellan [5], and many special-purpose embedded networks (for example, [6], [7], and [8]).
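Four of the checksums named in the abstract can be sketched on 8-bit data words as below. These are minimal textbook versions written for illustration, not the implementations evaluated in the paper; the 8-bit word size and the function names are assumptions.

```python
def xor_checksum(data: bytes) -> int:
    # XOR of all bytes.
    check = 0
    for byte in data:
        check ^= byte
    return check


def twos_complement_sum(data: bytes) -> int:
    # Plain addition, discarding carries out of the low 8 bits.
    return sum(data) % 256


def ones_complement_sum(data: bytes) -> int:
    # Addition with end-around carry: carries are added back in.
    total = 0
    for byte in data:
        total += byte
        total = (total & 0xFF) + (total >> 8)
    return total


def fletcher16(data: bytes) -> int:
    # Two running sums mod 255; the second makes the result order-sensitive.
    sum1 = sum2 = 0
    for byte in data:
        sum1 = (sum1 + byte) % 255
        sum2 = (sum2 + sum1) % 255
    return (sum2 << 8) | sum1


original = bytes([0x01, 0x02, 0x03])
same_bit_flipped = bytes([0x11, 0x12, 0x03])  # bit 4 flipped in two bytes
reordered = bytes([0x02, 0x01, 0x03])         # first two bytes swapped

# XOR misses two errors in the same bit position; addition catches this one.
print(xor_checksum(original) == xor_checksum(same_bit_flipped))
print(twos_complement_sum(original) == twos_complement_sum(same_bit_flipped))

# Neither XOR nor addition notices reordering; Fletcher does.
print(twos_complement_sum(original) == twos_complement_sum(reordered))
print(fletcher16(original) == fletcher16(reordered))
```

The blind spots shown here are specific error patterns chosen for the demonstration; they illustrate, rather than measure, the differences in effectiveness the paper studies.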

Algebraic Cryptanalysis of GOST Encryption Algorithm

Journal of Computer and Communications, 2014, 2, 10-17. Published Online March 2014 in SciRes. http://www.scirp.org/journal/jcc http://dx.doi.org/10.4236/jcc.2014.24002 Algebraic Cryptanalysis of GOST Encryption Algorithm. Ludmila Babenko, Ekaterina Maro. Department of Information Security, Southern Federal University, Taganrog, Russia. Email: [email protected] Received October 2013. Abstract: This paper observes approaches to algebraic analysis of GOST 28147-89 encryption algorithm (also known as simply GOST), which is the basis of most secure information systems in Russia. The general idea of algebraic analysis is based on the representation of initial encryption algorithm as a system of multivariate quadratic equations, which define relations between a secret key and a cipher text. Extended linearization method is evaluated as a method for solving the nonlinear system of equations. Keywords: Encryption Algorithm GOST; GOST⊕; S-Box; Systems of Multivariate Quadratic Equations; Algebraic Cryptanalysis; Extended Linearization Method; Gaussian Elimination. 1. Introduction. The general idea of algebraic cryptanalysis is finding equations that describe nonlinear transformations of S-boxes followed by finding solution of these equations and obtaining the secret key. This method of cryptanalysis belongs to the class of attacks with known plaintext. It is enough to have a single plaintext/ciphertext pair for the success. Algebraic methods of cryptanalysis contain the following stages: • Creation of the system of equations that describe transformations in non-linear cryptographic primitives of the analyzed cipher (i.e., S-boxes for most symmetric ciphers); • Finding solution of this system. The idea to describe an encryption algorithm as system of linear equations originated quite long time ago.

GOST R 34.12-2015: Block Cipher "Magma"

Stream: Independent Submission. RFC: 8891. Updates: 5830. Category: Informational. Published: September 2020. ISSN: 2070-1721. Authors: V. Dolmatov, Ed. (JSC "NPK Kryptonite"); D. Baryshkov (Auriga, Inc.). RFC 8891, GOST R 34.12-2015: Block Cipher "Magma". Abstract: In addition to a new cipher with a block length of n=128 bits (referred to as "Kuznyechik" and described in RFC 7801), Russian Federal standard GOST R 34.12-2015 includes an updated version of the block cipher with a block length of n=64 bits and key length of k=256 bits, which is also referred to as "Magma". The algorithm is an updated version of an older block cipher with a block length of n=64 bits described in GOST 28147-89 (RFC 5830). This document is intended to be a source of information about the updated version of the 64-bit cipher. It may facilitate the use of the block cipher in Internet applications by providing information for developers and users of the GOST 64-bit cipher with the revised version of the cipher for encryption and decryption. Status of This Memo: This document is not an Internet Standards Track specification; it is published for informational purposes. This is a contribution to the RFC Series, independently of any other RFC stream. The RFC Editor has chosen to publish this document at its discretion and makes no statement about its value for implementation or deployment. Documents approved for publication by the RFC Editor are not candidates for any level of Internet Standard; see Section 2 of RFC 7841. Information about the current status of this document, any errata, and how to provide feedback on it may be obtained at https://www.rfc-editor.org/info/rfc8891.