New Frameworks for Studying and Developing Computational Thinking



Recommended publications

-

SOAP Plug-In

User Guide SOAP Plug-in Release 5.0 © 2010 Avaya Inc. All Rights Reserved. Notice While reasonable efforts were made to ensure that the information in this document was complete and accurate at the time of printing, Avaya Inc. can assume no liability for any errors. Changes and corrections to the information in this document may be incorporated in future releases. Documentation disclaimer Avaya Inc. is not responsible for any modifications, additions, or deletions to the original published version of this documentation unless such modifications, additions, or deletions were performed by Avaya. Link disclaimer Avaya Inc. is not responsible for the contents or reliability of any linked Web sites referenced elsewhere within this Documentation, and Avaya does not necessarily endorse the products, services, or information described or offered within them. We cannot guarantee that these links will work all of the time and we have no control over the availability of the linked pages. License USE OR INSTALLATION OF THE PRODUCT INDICATES THE END USER'S ACCEPTANCE OF THE TERMS SET FORTH HEREIN AND THE GENERAL LICENSE TERMS AVAILABLE ON THE AVAYA WEBSITE AT http://support.avaya.com/LicenseInfo/ ("GENERAL LICENSE TERMS"). IF YOU DO NOT WISH TO BE BOUND BY THESE TERMS, YOU MUST RETURN THE PRODUCT(S) TO THE POINT OF PURCHASE WITHIN TEN (10) DAYS OF DELIVERY FOR A REFUND OR CREDIT. Avaya grants End User a license within the scope of the license types described below. The applicable number of licenses and units of capacity for which the license is granted will be one (1), unless a different number of licenses or units of capacity is specified in the Documentation or other materials available to End User. -

A Comparative Study on SOAP and Restful Web Services Sirsha Chatterjee1, Mamatha T2

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 07 Issue: 05 | May 2020 www.irjet.net p-ISSN: 2395-0072 A comparative study on SOAP and RESTful web services Sirsha Chatterjee1, Mamatha T2 1Under Graduate Student, Dept. of Computer Science and Engineering, RV College of Engineering, Bengaluru, Karnataka 2Assistant Professor, Dept. of Computer Science and Engineering, RV College of Engineering, Bengaluru, Karnataka ---------------------------------------------------------------------***---------------------------------------------------------------------- Abstract - Modern enterprise applications nowadays need request is sent by the client to the server, where the message to build web-based applications using a wide variety of is processed and appropriate response os sent back to the programming platforms, for different requirements. While client. REST was developed in 2000 by Roy Fielding. [3] backend service applications are developed using Java, .Net, or states that REST services is not only limited to XML but can Node JS, front-end application are developed using ReactJS, also support JSON (JavaScript Object Notation), as well as AngularJS, etc. These heterogeneity in use of types of plain text, unlike SOAP which only supports documents in application platforms require a common communication XML format. service to transfer information and data. Web services provide this service which enables multiple applications to In this paper, the two services are analyzed and compared communicate with each other. Web services perform functions based on their underlying architecture, differences, ranging from simple requests to complicate business advantages and disadvantages. processes. SOAP (Simple Object Access Protocol) and REST (Representational State Transfer) are the most common and 2. SOAP Web Service popular types of web service protocols in use. -

United Concordia (UCD) Real Time Claim Submission & Adjudication

United Concordia (UCD) Real Time Claim Submission & Adjudication Connectivity Specifications May 15, 2015 Contents 1. Real Time Overview 2. Requirements 3. SOAP Messages 4. SOAP Faults 5. CORE-Compliant Error Responses 6. UCD EDI WebServices Certificate 1 1. Overview Real Time transactions utilize Simple Object Access Protocol (SOAP). SOAP is a simple XML based protocol to let applications exchange information over HTTP. Since the Internet is being utilized to transport the data, encryption will be utilized to secure messages in the same way financial transactions are secured over the Internet. Access to UCD’s networks will follow the same security model in place today, which requires a Login/Password. In order to understand the lifecycle of the transaction, processes have been outlined below: (1) Transaction Initiation UCD Trading Partner’s Transaction Management System will initiate a Real Time X12 HIPAA transaction. (2) Establish Connection The Trading Partner’s Transaction Management System will establish a secure Internet connection (HTTPS) to UCD and send an encrypted SOAP message that contains a HIPAA X12 transaction payload, along with the Trading Partner logon id, and password assigned by UCD. (3) Receive Transaction UCD receives the Real Time request on its Web Server. 2 (4) Authentication/Authorization When the SOAP message is received by UCD’s WebSphere application, the SOAP message is validated and the Trading Partner’s logon id, password and defined role are authenticated using LDAP (Lightweight Directory Access Protocol). Only Trading Partners that have signed a UCD Trading Partner Agreement are granted logon id’s, passwords and defined roles. To obtain a copy of the Trading Partner Agreement and the Trading Partner Application, please visit: https://secure.ucci.com/ducdws/dentist.xhtml?content=dentist-trading-partners . -

CSS-1H, CSS-1Hl, CSS-1Hm SDS Number: AMI-403 Revision Date: 2/1/2019 Page 1 of 5

Asphalt Materials, Inc. CSS-1h, CSS-1hL, CSS-1hM SDS Number: AMI-403 Revision Date: 2/1/2019 Page 1 of 5 1 PRODUCT AND COMPANY IDENTIFICATION Manufacturer Vendor Asphalt Materials, Inc. Asphalt Materials, Inc. 5400 W. 86th Street 5400 W. 86th Street Indianapolis, Indiana 46268 Indianapolis, Indiana 46268 Emergency: CHEMTREC: 800-424-9300 Emergency: CHEMTREC: 800-424-9300 Contact: Keith Toombs Contact: Keith Toombs Phone: 317-872-6010 Phone: 317-872-6010 Fax: 317-874-4900 Fax: 317-874-4900 Email: [email protected] Email: [email protected] Web: www.asphalt-materials.com Web: www.asphalt-materials.com Product Name: CSS-1h, CSS-1hL, CSS-1hM Revision Date: 2/1/2019 SDS Number: AMI-403 Common Name: Asphalt Emulsion Cationic CAS Number: Mixture Chemical Family: Emulsified complex petroleum hydrocarbon and water Synonyms: Cationic Asphalt Emulsion, Emulsified Asphalt, Microsurfacing Asphalt Emulsion Product Use: Highway Paving Applications and Mixtures 2 HAZARDS IDENTIFICATION Classification of the substance or mixture GHS Classification in accordance with 29 CFR 1910 (OSHA HCS): Health, Acute toxicity, 5 Dermal Health, Serious Eye Damage/Eye Irritation, 2 B GHS Label elements, including precautionary statements GHS Signal Word: WARNING GHS Hazard Pictograms: no GHS pictograms indicated for this product GHS Hazard Statements: H313 - May be harmful in contact with skin H320 - Causes eye irritation GHS Precautionary Statements: P202 - Do not handle until all safety precautions have been read and understood. P280 - Wear protective gloves/protective clothing/eye protection/face protection. Hazards not otherwise classified (HNOC) or not covered by GHS Inhalation: Breathing vapors, fumes, or mists may cause irritation to nasal and respiratory tract and central nervous system effects. -

Columns in FOP

Columns in FOP Block 0: The Extensible Markup Language associated with it a great number of other (XML) is a subset of SGML that is standards, most of them under W3C (World- completely described in this document. Its Wide Web Consortium) auspices. Among goal is to enable generic SGML to be these are XML Namespaces, XML Pointer, served, received, and processed on the XPath, XSLT, XHTML, SVG, RELAX, SOAP, Web in the way that is now possible with and any number of others. This file has been HTML. XML has been designed for ease of prepared using formatting objects, an XML implementation and for interoperability with vocabulary described in the XSL both SGML and HTML. For further specification of October 18, 2000. information read normal.pdf. XML has Formatting objects are used to specify associated with it a great number of other pagination and composition, and are standards, most of them under W3C (World- intended for high-quality, precision layout- Wide Web Consortium) auspices. Among driven formatting. these are XML Namespaces, XML Pointer, Block 2: The Extensible Markup Language XPath, XSLT, XHTML, SVG, RELAX, SOAP, (XML) is a subset of SGML that is and any number of others. This file has been completely described in this document. Its prepared using formatting objects, an XML goal is to enable generic SGML to be vocabulary described in the XSL served, received, and processed on the specification of October 18, 2000. Web in the way that is now possible with Formatting objects are used to specify HTML. XML has been designed for ease of pagination and composition, and are implementation and for interoperability with intended for high-quality, precision layout- both SGML and HTML. -

No Slide Title

Introduction to SOAP Henrik Frystyk Nielsen <[email protected]> 1 SOAP/1.1 Spec Status SOAP/1.1 was submitted to W3C and became a W3C Note on May 8, 2000 Intent was to start standards process W3C was the natural place because SOAP is intended as general infrastructure Discussion happens on public lists [email protected] [email protected] 2 SOAP Development Developed in traditional Web style: Very distributed community with broad support Several Implementations MSDN Toolkit, MS .Net Framework, Apache SOAP, Developmentor SOAP toolkits in Perl and Java, SOAP::lite (Perl), libwww based SOAP, IONA, and many more New spec for SOAP binding to MIME Multipart John Barton, HP Labs, Satish Thatte, MS, and myself SOAP/1.1 issues list 3 SOAP/1.1 Authors Don Box, DevelopMentor David Ehnebuske, IBM Gopal Kakivaya, Microsoft Andrew Layman, Microsoft Noah Mendelsohn, Lotus Henrik Frystyk Nielsen, Microsoft Satish Thatte, Microsoft Dave Winer,UserLandSoftware 4 W3C Submitters Ariba, Inc. Commerce One, Inc. Compaq Computer Corporation DevelopMentor, Inc. Hewlett Packard Company International Business Machines Corporation IONA Technologies Lotus Development Corporation Microsoft Corporation SAP AG UserLand Software Inc. 5 The W3C XML Protocol Activity In Sep 2000, W3C started XML Protocol Activity Contains the XP Working Group Chair is David Fallside, IBM Very large (70+ members) Public charter and mailing list [email protected] SOAP/1.1 is the starting point for this work Will be evaluated against requirements -

Crude Tall Oil Low ILUC Risk Assessment Comparing Global Supply and Demand

Crude tall oil low ILUC risk assessment Comparing global supply and demand Crude tall oil low ILUC risk assessment Comparing global supply and demand By: Daan Peters, Viktorija Stojcheva Date: 24 April 2017 Project number: SISNL17494 © Ecofys 2017 by order of: UPM ECOFYS Netherlands B.V. | Kanaalweg 15G | 3526 KL Utrecht| T +31 (0)30 662-3300 | F +31 (0)30 662-3301 | E [email protected] | I www.ecofys.com Chamber of Commerce 30161191 Executive Summary UPM produces renewable diesel and naphtha from crude tall oil (CTO) in its biorefinery in Lappeenranta, Finland from early 2015 onwards. Because the company wanted clarity on the status and sustainability of its feedstock, UPM asked Ecofys in 2013 to assess whether CTO can be regarded as a residue and whether the feedstock would be low ILUC risk, meaning its use for biofuels would not lead to displace- ment effects of existing other uses. The current report is an updated version of the 2013 report. An important change since the previous report is that biofuel production at UPM has started, so any effects of CTO usage for biofuels has on the CTO market would be visible. Ecofys studies the following questions in this report: 1. Can CTO be defined as a residue based on biofuel legislation 2. Does the feedstock create an additional demand for land (is it a low ILUC risk feed stock?) 3. Does the use of the feedstock for biofuel production cause significant distortive effects on markets? The second and third question are closely interlinked. CTO in itself is a non-land using feedstock. -

SOAP API Developer's Guide

PureConnect® 2019 R4 Generated: 11-November-2019 SOAP API Content last updated: 06-November-2019 See Change Log for summary of Developer's Guide changes. Abstract This document contains Application Programming Interface (API) reference information for the Simple Object Access Protocol (SOAP) with PureConnect. For the latest version of this document, see the PureConnect Documentation Library at: http://help.genesys.com/cic. For copyright and trademark information, see https://help.genesys.com/cic/desktop/copyright_and_trademark_information.htm. 1 Table of Contents Table of Contents 2 CIC and SOAP API Developer's Guide overview 5 Audience 5 Organization of Material 5 Related Documentation 5 Recommended Web Links 5 Introduction to SOAP in the CIC Environment 7 What is SOAP? 7 Who uses CIC's SOAP functionality? 8 SOAP's Request/Response Model 9 Web Services 9 Requests and Responses are XML Documents 9 What is XML? 10 What is the relationship between XML and markup languages, such as HTML or SGML? 10 XML Parsers 11 Viewing XML in Internet Explorer or Edge 11 Comparing XML to HTML 12 Other features of XML 12 Structure of an XML file 12 Listing 1: Sample XML File 12 XML Declaration 12 Rules that govern tags 13 The Root Element 13 Child Elements 13 Structure of SOAP Messages 13 Envelope Section 15 Header Section 15 Body Section 16 Request Messages 16 Response Messages 16 Fault Messages 17 CIC's SOAP Components 17 SOAP Tools in Interaction Designer 17 The SOAP Tracer Utility 20 SOAP ISAPI Listener Task for IIS 25 SOAP Notifier COM Objects 25 Install -

SAP Fieldglass Web Service Technical Specification Configuration Guide Company

PUBLIC SAP Fieldglass 2021-07-13 SAP Fieldglass Web Service Technical Specification Configuration Guide company. All rights reserved. All rights company. affiliate THE BEST RUN 2021 SAP SE or an SAP SE or an SAP SAP 2021 © Content 1 Introduction...................................................................3 2 About This Guide...............................................................5 3 Request Types and Data Transfers ..................................................6 4 Request File Formats and Structures................................................8 4.1 dataTransfer................................................................... 9 4.2 dataTransferStatus..............................................................11 4.3 getDataByTransactionId.......................................................... 12 5 Activation and Setup........................................................... 13 5.1 Download the WSDL Document.....................................................14 5.2 Download the WSDL Schema File....................................................15 Schema Definition File Descriptions................................................15 5.3 Examples: Data Transfer Requests and Responses........................................19 Upload Request..............................................................20 cXML Invoice Download Request with Data Marker.....................................20 Asynchronous cXML Invoice Download Response......................................21 cXML Invoice Download Request with System -

CSS-1 Material Safety Data Sheet

CSS-1 Material Safety Data Sheet Manufacturer’s Name: Martin Asphalt Company Date: January 15, 2014 Telephone Number: 800-662-0987 Address: 300 Christy Place South Houston, TX 77587 For Emergency Assistance Call: (713) 941-4410 NFPA HAZARD DEGREE OF HAZARD HAZARD RATINGS IDENTIFICATION HEALTH: 1 0= LEAST FIRE: 0 1= SLIGHT REACTIVITY: 0 2= MODERATE 3= HIGH 4= EXTREME SECTION 1 - COMPONENT DATA PRODUCT NAME: CSS-1 EMULSIFIED ASPHALT COMMON NAME ASPHALT EMULSION, BITUMEN CHEMICAL NAME PETROLEUM HYDROCARBON SECTION 2 - PHYSICAL DATA C.A.S. NUMBER: 8052-42-4 BOILING POINT (°F): 212 SPECIFIC GRAVITY (H20=1): 1.01 MELTING POINT (°F): NA VAPOR PRESSURE (mmHg@20ºC): NA PERCENT VOLATILE: 0 VAPOR DENSITY (AIR=1): NA EVAPORATIVE RATE (EE=1): NA SOLUBILITY IN WATER: SOLUBLE APPEARANCE AND ODOR: BROWN LIQUID, FAINT PETROLEUM ODOR SECTION 3 - FIRE & EXPLOSION DATA FLASH POINT (OF) & METHOD: N/A FLAMMABILITY LIMITS: LOWER: NA UPPER: NA AUTO IGNITION TEMPERATURE UNKNOWN (°F): EXTINGUISHING MEDIA USE DRY CHEMICAL, FOAM OR CARBON DIOXIDE. SPECIAL FIRE FIGHTING WATER MAY BE INEFFECTIVE BUT CAN BE USED TO COOL CONTAINERS PROCEDURES EXPOSED TO HEAT OR FLAME. CAUTION SHOULD BE EXERCISED WHEN USING WATER OR FOAM AS FROTHING MAY OCCUR, ESPECIALLY IF SPRAYED INTO CONTAINERS OF HOT, BURNING LIQUID. UNUSUAL FIRE AND EXPLOSIVE DENSE SMOKE MAY BE GENERATED WHILE BURNING. CARBON MONOXIDE, CONDITIONS CARBON DIOXIDE AND OTHER OXIDES MAY BE GENERATED AS PRODUCTS OF COMBUSTION. *NA=NOT APPLICABLE **ND=NOT DETERMINED CSS-1 - 01-15-14-MSDS Page 1 of 3 www.MartinAsphalt.com SECTION 4 - REACTIVITY DATA STABILITY: STABLE STABLE CONDITIONS TO AVOID: NONE HAZARDOUS POLYMERIZATION: WILL NOT OCCUR INCOMPATIBILITY MATERIALS TO DO NOT ALLOW HOT MOLTEN PRODUCT TO CONTACT WATER OR LIQUIDS AS AVOID: THIS CAN CAUSE VIOLENT ERUPTIONS. -

Give Your SOA a REST with Apis: the Cornerstone of a Modern Digital Business Foundation 02 SOA: a Revolutionary

WHITE PAPER Give your SOA a REST with APIs: The cornerstone of a modern digital business foundation 02 SOA: A revolutionary 01 idea that stalled INTRODUCTION 03 In the early 2000s, the Services-oriented Architecture (SOA) paradigm Digital transformation = was heralded for its agility potential. However, its loose coupling and customer experience use of standards did not widely translate into the kind of business transformation transformation that adopters envisioned. The technology proved to be more complex and challenging to manage than experts had predicted. 04 In contrast, the RESTful API, an evolutionary step up from SOAP, has The differences between proved to be adept at delivering flexible application integration at a SOA and APIs skill level applicable to widespread adoption. It enables the true digital transformation of customer experiences and business itself. Moving to API-driven customer experiences Now, organizations that embraced SOA 05 can transition to an API-based architecture Overview of the process and benefit from its improvements in connectivity and manageability. 06 5 API best practices While the distance from SOA (and legacy applications) to APIs may seem like a chasm, with the right practices and platform, the path from 07 SOA to API is a reasonable undertaking. This paper reviews how to make The role of the API the move and convert SOAP web services into a productive part of a management platform broader API ecosystem. 08 Conclusion axway.com 1 SOA: A revolutionary idea that stalled The SOA concept was viable. With all the big players As the Internet grew in popularity in the 1990s, on board, many enterprises demonstrating the unprecedented market power embarked on ambitious programs to of open standards like HTML and HTTP, people build Services-oriented Architectures. -

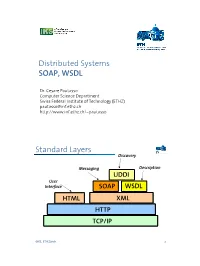

Distributed Systems SOAP, WSDL Standard Layers

Distributed Systems SOAP, WSDL Dr. Cesare Pautasso Computer Science Department Swiss Federal Institute of Technology (ETHZ) [email protected] http://www.inf.ethz.ch/~pautasso Standard Layers Discovery Messaging Description UDDI User Interface SOAPSOAP WSDL HTML XML HTTP TCP/IP ©IKS, ETH Zürich. 2 The Web services stack WSDL-based Semantic Web ebXML Messaging SOAP ebXML MSS Description WSDL RDF ebXML CPP Nonfunctional description WSEL Conversations WSCL ebXML WSCI WS- DAML-S BPSS Choreography Coordination BPEL4WS Business BPML BPML processes WSFL/XLANG Contracts ebXML CPA ebXML Discovery UDDI registries WS- Transactions Transactions BTP BTP SAML Security WS-Security S/MIME ©IKS, ETH Zürich. 3 HTTP, HTML and XML WWW basics The earliest implementations BROWSER were very simple and built directly upon the existing systems URL (client/server based on RPC, TP- INTERNET Monitors, or any other form of response middleware which allowed page interaction through a programmable client) the CGI script (or program) acted WEB SERVER as client in the traditional sense map URL to CGI script (for instance using RPC) execute CGI script The user clicked in a given URL get results back (stdout of CGI script) and the server invoked the script prepare response page corresponding to that URL send page to browser the script executed, produced the results and passed them back to the server (usually as the address Implemented of a web page) as a normal client CGI the server retrieved the page and script send it to the browser Existing Middleware Infrastructure ©IKS, ETH Zürich. WWW 5 HTTP as a communication protocol HTTP was designed for exchanging documents.