Tips: Making Volatile Index Structures Persistent with DRAM-NVMM Tiering R

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Hillview: a Trillion-Cell Spreadsheet for Big Data

Hillview: A trillion-cell spreadsheet for big data

Mihai Budiu (VMware Research), Parikshit Gopalan (VMware Research), Lalith Suresh (VMware Research), Udi Wieder (VMware Research), Han Kruiger (University of Utrecht), Marcos K. Aguilera (VMware Research)

ABSTRACT: Hillview is a distributed spreadsheet for browsing very large datasets that cannot be handled by a single machine. As a spreadsheet, Hillview provides a high degree of interactivity that permits data analysts to explore information quickly along many dimensions while switching visualizations on a whim. To provide the required responsiveness, Hillview introduces visualization sketches, or vizketches, as a simple idea to produce compact data visualizations. Vizketches combine algorithmic techniques for data summarization with computer graphics principles for efficient rendering. While simple, vizketches are effective at scaling the spreadsheet by parallelizing computation, reducing communication, providing

Unfortunately, enterprise data is growing dramatically, and current spreadsheets do not work with big data, because they are limited in capacity or interactivity. Centralized spreadsheets such as Excel can handle only millions of rows. More advanced tools such as Tableau can scale to larger data sets by connecting a visualization front-end to a general-purpose analytics engine in the back-end. Because the engine is general-purpose, this approach is either slow for a big data spreadsheet or complex to use as it requires users to carefully choose queries that the system is able to execute quickly. For example, Tableau can use Amazon Redshift as the analytics back-end but users must understand lengthy documentation to navigate around bad query types that are too slow to execute [17].

Member Renewal Guide

MEMBERSHIP DUES (All prices indicated are in U.S. dollars)

Professional Member: $99

Professional Member PLUS Digital Library ($99 + $99): $198

Lifetime Membership: Prices vary. Available to ACM Professional Members in three age tiers. Pricing is determined as a multiple of current professional rates. The pricing structure is listed at www.acm.org/membership/life.

Student to Professional Transition: $49

PLUS Digital Library ($49 + $50): $99

Student Membership: $19. Access to online books and courses; print subscription to XRDS; online subscription to Communications of the ACM; and more.

Student Membership PLUS Digital Library: $42

PAYMENT

Payment accepted by Visa, MasterCard, American Express, Discover, check, or money order in U.S. dollars. For residents outside the US our preferred method of payment is credit card, but we do accept checks drawn in foreign currency at the current monetary exchange rate.

CHANGING YOUR MEMBERSHIP STATUS...

From Student to Professional (Special Student Transition Dues of $49): Cross out “Student” in the Member Class section, and write “Student Transition

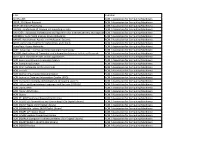

Central Library: IIT GUWAHATI

Central Library, IIT GUWAHATI

BACK VOLUME LIST DEPARTMENTWISE (as on 20/04/2012)

COMPUTER SCIENCE & ENGINEERING

| S.N. | Jl. Title | Vol.(Year) |
|---|---|---|
| 1. | ACM Jl.: Computer Documentation | 20(1996)-26(2002) |
| 2. | ACM Jl.: Computing Surveys | 30(1998)-35(2003) |
| 3. | ACM Jl.: Jl. of ACM | 8(1961)-34(1987); 43(1996); 45(1998)-50(2003) |
| 4. | ACM Magazine: Communications of ACM | 39(1996)-46#3-12(2003) |
| 5. | ACM Magazine: Intelligence | 10(1999)-11(2000) |
| 6. | ACM Magazine: netWorker | 2(1998)-6(2002) |
| 7. | ACM Magazine: Standard View | 6(1998) |
| 8. | ACM Newsletters: SIGACT News | 27(1996); 29(1998)-31(2000) |
| 9. | ACM Newsletters: SIGAda Ada Letters | 16(1996); 18(1998)-21(2001) |
| 10. | ACM Newsletters: SIGAPL APL Quote Quad | 28(1998)-31(2000) |
| 11. | ACM Newsletters: SIGAPP Applied Computing Review | 4(1996); 6(1998)-8(2000) |
| 12. | ACM Newsletters: SIGARCH Computer Architecture News | 24(1996); 26(1998)-28(2000) |
| 13. | ACM Newsletters: SIGART Bulletin | 7(1996); 9(1998) |
| 14. | ACM Newsletters: SIGBIO Newsletters | 18(1998)-20(2000) |
| 15. | ACM Newsletters: SIGCAS Computers & Society | 26(1996); 28(1998)-30(2000) |
| 16. | ACM Newsletters: SIGCHI Bulletin | 28(1996); 30(1998)-32(2000) |
| 17. | ACM Newsletters: SIGCOMM Computer Communication Review | 26(1996); 28(1998)-30(2000) |
| 18. | ACM Newsletters: SIGCPR Computer Personnel | 17(1996); 19(1998)-20(1999) |
| 19. | ACM Newsletters: SIGCSE Bulletin | 28(1996); 30(1998)-32(2000) |
| 20. | ACM Newsletters: SIGCUE Outlook | 26(1998)-27(2001) |
| 21. | | |

Candidate for President (1 July 2018 – 30 June 2020) Jack Davidson

Candidate for President (1 July 2018 – 30 June 2020)

Jack Davidson, Professor of Computer Science, University of Virginia, Charlottesville, VA, U.S.A.

BIOGRAPHY

Education and Employment
• B.A.S. (Computer Science) Southern Methodist University, 1975; M.S. (Computer Science) Southern Methodist University, 1977; Ph.D. (Computer Science) University of Arizona, 1981.
• University of Virginia, Professor, 1982–present.
• President, Zephyr Software, 2001–present.
• Princeton University, Visiting Professor, 1992–1993.
• Microsoft Research, Visiting Researcher, 2000–2001.
• Programmer Analyst III, University of Texas Health Science Center at Dallas, 1993–1997.

ACM and SIG Activities
• ACM member since 1975.
• ACM Publications Board Co-Chair, 2010–present; ACM Publications Board member, 2007–2010.
• ACM Student Chapter Excellence Award Judge, 2010–2017.
• ACM Student Research Competition Grand Finals Judge, 2011–2017.
• Associate Editor, ACM TOPLAS, 1994–2000. Associate Editor, ACM TACO, 2005–2016. Member of SIGARCH, SIGBED, SIGCAS, SIGCSE, and SIGPLAN.
• SIGPLAN Chair, 2005–2007.
• SIGPLAN Executive Committee, 1999–2001, 2003–2005.
• SGB Representative to ACM Council, 2008–2010.
• SGB Executive Committee, 2006–2008.

Awards and Honors
• DARPA Cyber Grand Challenge Competition, 2nd Place, $1M prize (2016).
• ACM Fellow (2008).
• IEEE Computer Society Taylor L. Booth Education Award (2008).
• UVA ACM Student Chapter Undergraduate Teaching Award (2000).
• NCR Faculty Innovation Award (1994).

Jack Davidson’s research interests include compilers, computer architecture, system software, embedded systems, computer security, and computer science education. He is co-author of two introductory textbooks: C++ Program Design: An Introduction to Object Oriented Programming and Java 5.0 Program Design: An Introduction to Programming and Object-oriented Design. Professionally, he has helped organize many conferences across several fields.

ACM's FY13 Annual Report

ACM’s FY13 Annual Report (DOI:10.1145/2541883.2541887)

FY ’13 was an exceptional year for ACM. Membership reached a record high for the 11th consecutive year, solidifying ACM’s lead as the world’s largest educational and scientific society in computing. Recognizing the expressed desires of our membership and the research community at large, ACM took a bold new approach to, and stand on, open access publishing, one that will no doubt set the tone for other professional organizations. We witnessed the steadfast commitment and global impact of our hubs in Europe, India, and China. And we continue to enrich ACM’s pledge to educate future generations about the wonders of computer

able feat for an organization less than four years old. With this status, ACM Europe can participate in discussions with the European Union on such topics as computing research, technology policies, and education priorities. Education is the heartbeat of ACM: be it steering the computing curriculum for students and educators; taking the lead in equipping K–12 teachers with the tools and talent to teach next generations; providing publications of the highest quality

of other prestigious honors to share information and insights with 200 young researchers from around the world. Imagine the young student being able to sit down with the very role models that sparked their computing passions. It was an extraordinary event, a natural for ACM, and I look forward to next year’s forum. The following report lists just some of the many activities and accomplishments of the Association over the fiscal year.

ACM Annual Report for FY07

ACM Annual Report for FY07

In recent months we have celebrated ACM’s 60th Anniversary and the 50th Anniversary of its flagship publication, Communications of the ACM. Both events signify the enduring role ACM has played as the conduit for the world’s educators, researchers, and professionals to share their common computing interests, inspire new innovation, and reveal their latest research. Indeed, ACM’s steadfast commitment to advancing computing as a science and profession worldwide can be traced from the first days of the ENIAC, to improving today’s quality of life, to encouraging and educating future generations to join a field of countless opportunities. In the past year, major corporations have endorsed ACM’s global influence in recognizing technical excellence by sponsoring or increasing the cash value of a number of the Association’s prestigious

younger scientists whose innovations are having a dramatic impact on the computing field. The award will carry a prize of $150,000, and was endowed by the Infosys Foundation. ACM’s global outreach has never been stronger, as witnessed by the growth in the number of technical conferences, professional chapters, and Special Interest Group initiatives taking root overseas this year. The Association’s devotion to forging professional relationships worldwide was solidified by meetings to establish an ACM presence in the major technology hubs of China and India. ACM’s China Task Force held its first two meetings in FY07. An agreement was reached with Tsinghua University to establish an ACM office in Beijing as a focal point for the Association’s presence in China. ACM’s India Task Force met in Bangalore recently to explore how best to serve this burgeoning audience.

Title Publisher 3C ON-LINE ACM / Association for Computing Machinery 3DOR

| Title | Publisher |
|---|---|
| 3C ON-LINE | ACM / Association for Computing Machinery |
| 3DOR: 3D Object Retrieval | ACM / Association for Computing Machinery |
| 3DVP: 3D Video Processing | ACM / Association for Computing Machinery |
| A2CWiC: Conference of Women in Computing in India | ACM / Association for Computing Machinery |
| AAA-IDEA: Advanced Architectures and Algorithms for Internet Delivery and Applications | ACM / Association for Computing Machinery |
| AADEBUG: Automated analysis-driven debugging | ACM / Association for Computing Machinery |
| AAMAS: Autonomous Agents and Multiagent Systems | ACM / Association for Computing Machinery |
| ACDC: Automated Control for Datacenters and Clouds | ACM / Association for Computing Machinery |
| AcessNets: Access Networks | ACM / Association for Computing Machinery |
| ACET: Advances in Computer Entertainment Technology | ACM / Association for Computing Machinery |
| ACISNR: Applications of Computer and Information Sciences to Nature Research | ACM / Association for Computing Machinery |
| ACL2: ACL2 Theorem Prover and its Applications | ACM / Association for Computing Machinery |
| ACM Communications in Computer Algebra | ACM / Association for Computing Machinery |
| ACM Computing Surveys | ACM / Association for Computing Machinery |
| ACM DEV: Computing for Development | ACM / Association for Computing Machinery |
| ACM Inroads | ACM / Association for Computing Machinery |
| ACM Journal of Computer Documentation | ACM / Association for Computing Machinery |
| ACM Journal of Data and Information Quality (JDIQ) | ACM / Association for Computing Machinery |
| ACM journal on emerging technologies in computing | |

STUDENT CHAPTER-IN-A-BOX: A Practical Guide to Starting, Running and Marketing Your Student Chapter

STUDENT CHAPTER-IN-A-BOX: A practical guide to starting, running and marketing your Student Chapter

TABLE OF CONTENTS

- Bylaws
- Responsibilities of Chapter Officers
- ACM Chapter and Chapter Member Benefits
- ACM Headquarters Support
- Chapter Web Tools
- Recruiting Members
- ACM Content for Chapter Activities
- Chapter Activity Ideas
- Chapter Meetings
- Certificates of Insurance
- Chapter Outreach and Communication
- Financial Responsibilities and Requirements
- Appendix A: Bylaws
- Appendix B: Responsibilities of Chapter Officers
- Appendix C: Ideas for Chapter Activities
- Appendix D: Chapter Meetings
- Appendix E: Chapter Newsletter
- Appendix F: Acknowledgement of Support Letter Example

Chapter-in-a-Box contains the resources required for organizing and maintaining an ACM chapter. This compilation of materials and practices includes advice about recruiting members, ideas for activities, how-tos for running meetings and conferences and much more. If you do not have a chapter and are interested in creating one, please visit: http://www.acm.org/chapters/start-chapter.

BYLAWS

All student chapters must adhere to the ACM Chapter bylaws. To view the ACM Student Chapter bylaws please see Appendix A.

RESPONSIBILITIES OF CHAPTER OFFICERS

ACM student chapters are required to have a Chair, Vice Chair, and Treasurer who are ACM student members, as well as a Faculty Sponsor who is an ACM professional member. ACM chapters are charged with meeting the needs of their members, members of the Association, and members of the larger community in which they operate.

Electronic Journals & Periodicals

Electronic Journals & Periodicals

Dates of full-text availability range from title to title. Most titles listed have the current issues as well as several years back-file available.

3G technology, Markets and Business Strategies; Access control & security systems integration; ACM computing surveys; ACM guide to computing literature; ACM journal of computer documentation; ACM journal of experimental algorithmics; ACM journal on emerging technologies in computing systems; ACM queue; ACM transactions on algorithms; ACM transactions on applied perception; ACM transactions on architecture and code optimization; ACM transactions on Asian language information processing; ACM transactions on computational logic; ACM transactions on computer systems; ACM transactions on computer-human interaction; ACM transactions on database systems; ACM transactions on design automation of electronic systems; ACM transactions on embedded computing systems; ACM

AS/400 systems management; Ascent technology magazine : ATM; Asia computer weekly; Asia-Pacific Mobile Communications Report; AsiaPacific telecom; Asia-Pacific telecommunications; AT&T technology; Automation systems Integrator Yearbook; Bank network news; Bank network news : Special issue; Bank systems + technology; Bank technology news; Behavior research methods, instruments, & computers; Behaviour & information technology; Bell Labs technical journal; Berkeley technology law journal; Bestwire; Boston University journal of science & technology law; British journal of educational technology; Broad Band Access 2002; Broadband Business Forecast

COMP 522 Multicore Computing: an Introduction

COMP 522 Multicore Computing: An Introduction

John Mellor-Crummey, Department of Computer Science, Rice University

COMP 522 Lecture 1, 8 January 2019

Logistics
• Instructor: John Mellor-Crummey (phone: x5179; office: DH 3082; office hours: by appointment)
• Teaching Assistant: Keren Zhou (office: DH 2069; office hours: by appointment)
• Meeting time: scheduled T/Th 1:00 - 2:15
• Class Location: DH 1075
• Web site: http://www.cs.rice.edu/~johnmc/comp522

Multicore Computing
• How are modern microprocessors organized and why?
• How do threads share cores for efficient execution?
• How do threads share data?
• What are memory models and why do you need them?
• How does one synchronize data accesses and work?
• How does one write highly concurrent data structures?
• How do parallel programming models work?
• How can a runtime schedule work efficiently on multiple cores?
• How can you automatically detect errors in parallel programs?
• How can you tell if you are using microprocessors efficiently?

The Shift to Multicore Microprocessors

Advance of Semiconductors: “Moore’s Law”

Gordon Moore, Founder of Intel
• 1965: since the integrated circuit was invented, the number of transistors in these circuits roughly doubled every year; this trend would continue for the foreseeable future
• 1975: revised - circuit complexity doubles every two years

Increasing problems with power consumption and heat as clock speeds increase

(Image credit: By shigeru23, CC BY-SA 3.0, via Wikimedia Commons)

25 Years of Microprocessors
• Performance

Network I/O Fairness in Virtual Machines

Network I/O Fairness in Virtual Machines

Muhammad Bilal Anwer, Ankur Nayak, Nick Feamster, Ling Liu. School of Computer Science, Georgia Tech

ABSTRACT: We present a mechanism for achieving network I/O fairness in virtual machines, by applying flexible rate limiting mechanisms directly to virtual network interfaces. Conventional approaches achieve this fairness by implementing rate limiting either in the virtual machine monitor or hypervisor, which generates considerable CPU interrupt and instruction overhead for forwarding packets. In contrast, our design pushes per-VM rate limiting as close as possible to the physical hardware itself, effectively implementing per-virtual interface rate limiting in hardware. We show that this design reduces CPU overhead (both interrupts and instructions) by an order of magnitude. Our design can be applied either to virtual servers for cloud-based services, or to virtual routers.

tive, they fall into two categories: the driver-domain model and those that provide direct I/O access for high speed network I/O. A key problem in virtual machines is network I/O fairness, which guarantees that no virtual machine can have disproportionate use of the physical network interface. This paper presents a design that achieves network I/O fairness across virtual machines by applying rate limiting in hardware on virtual interfaces. We show that applying rate limiting in hardware can reduce the CPU cycles required to implement per-virtual machine rate limiting for network I/O. Our design applies generally to network I/O fairness for network interfaces in general, making our contributions applicable to any setting where virtual machines need network I/O fairness

ACM's Annual Report

ACM’s Annual Report for FY12 (DOI:10.1145/2398356.2398362)

Alain Chesnais

FY12 was an outstanding year for ACM. Membership reached an all-time high for the 10th consecutive year. We witnessed our global hubs in Europe, India, and China take root and flourish. And we elevated ACM’s overriding commitment to educating future generations about the wonders of computer science to a new level by joining forces with other associations, corporations, and scientific societies dedicated to the same cause. The fiscal year culminated in a landmark event honoring the life and legacy of Alan Turing on what would have been his 100th birthday. ACM’s Turing Centenary Celebration drew more than 1,000 participants, including over 30 ACM A.M. Turing Award laureates and a host of other world-renowned computer scientists and technology pioneers for a historic

thrilled to see membership exceed the 100,000 mark this year. Even in these economically tenuous times, ACM realized a 7.7% increase in membership over last year. Much of this growth is attributable to our international initiatives, principally China. I am particularly proud of ACM’s leadership role in Computing in the Core, a nonpartisan advocacy coalition striving to promote computer science education as a core academic subject in K–12 education. The success of this initiative is key to giving young people the college- and career-readiness, knowledge, and skills necessary in a technology-focused society.

Pull quote: During my two years of service, I was honored to be part of some key initiatives that will hopefully continue to propel ACM as the world’s largest and most prestigious scientific and computing society.