Tristan Courtney

Recommended publications

Weaving Genres, and Roughing up Beats: New Album Review

Music | Bittles’ Magazine: The music column from the end of the world. Is it just me, or was Record Store Day 2017 a tiny bit crap? The last time I was surrounded by that amount of middle-aged, balding men, reeking of sweat, I had walked into a strip-club by mistake. So, rather than relive the horrors of that day, I will dispense with the waffle and dive straight into the reviews. By JOHN BITTLES. The electronic musings of Darren Jordan Cunningham’s Actress project have long been a favourite of those who enjoy a bit of cerebral resonance with their beats. While 2014’s Ghettoville disappointed, there was more than enough goodwill left over from the dusty grooves of his R.I.P. album and his excellent DJ Kicks mix to ensure that expectations for a new LP were suitably high. Thankfully, the futuristic funk and controlled passion of AZD don’t let us down! Out now on the always reliable Ninja Tune label, the album is a dense listening experience which makes the ideal soundtrack for lonely dance floors and fevered heads. After the short intro of Nimbus, Untitled 7 gets us off to a stunning start with its deep, steady groove. From here, Fantasynth’s shuffling beats and solitary melody line recall the twisted genius of Howie B, and Dancing In The Smoke deconstructs the ghost of Chicago house and takes it to a gloriously darkened place. Meanwhile, Faure In Chrome and There’s An Angel In The Shower’s disquieting ambiance are so good you could wallow in them for days.

Visual Metaphors on Album Covers: an Analysis Into Graphic Design's

Visual Metaphors on Album Covers: An Analysis into Graphic Design’s Effectiveness at Conveying Music Genres, by Vivian Le. A thesis submitted to Oregon State University Honors College in partial fulfillment of the requirements for the degree of Honors Baccalaureate of Science in Accounting and Business Information Systems (Honors Scholar). Presented May 29, 2020. Commencement June 2020. Abstract approved: Ryann Reynolds-McIlnay. The rise of digital streaming has had a large impact on the way the average listener consumes music. Consequently, while the role of album art has evolved to meet the changes in music technology, it is hard to measure the effect of digital streaming on modern album art. This research seeks to determine whether or not graphic design still plays a role in marketing information about the music, such as its genre, to the consumer. It does so through two studies: (1) a computer visual analysis that measures color dominance of an image, and (2) a mixed-design lab experiment with volunteer participants who attempt to assess the genre of a given album. Findings from the first study show that color scheme models created from album samples cannot be used to predict the genre of an album. Further findings from the second study show that consumers pay a significant amount of attention to album covers, enough to be able to correctly assess the genre of an album most of the time.

The Definitive Guide to Evolver by Anu Kirk

The Definitive Guide to Evolver, by Anu Kirk. Table of Contents: Introduction; Before We Start; A Brief Overview; The Basic Patch; The Oscillators; Analog Oscillators; Frequency; Fine

Documentary in the Steps of Trisha Brown, the Touching Before We Go And, in Honour of Festival Artist Rocio Molina, Flamenco, Flamenco

Barbican October highlights. Transcender returns with its trademark mix of transcendental and hypnotic music from across the globe, including shows by Midori Takada, Kayhan Kalhor with Rembrandt Trio, Susheela Raman with guitarist Sam Mills, and a response to the Indian declaration of Independence 70 years ago featuring Actress and Jack Barnett with Indian music producer Sandunes. Acclaimed American pianist Jeremy Denk starts his Milton Court Artist-in-Residence with a recital of Mozart’s late piano music and a three-part day of music celebrating the infinite variety of the variation form. Other concerts include Academy of Ancient Music performing Purcell’s King Arthur, Kid Creole & The Coconuts and Arto Lindsay, Wolfgang Voigt’s ambient project GAS, Gilberto Gil with Cortejo Afro and a screening of Shiraz: A Romance of India with live musical accompaniment by Anoushka Shankar. The Barbican presents Basquiat: Boom for Real, the first large-scale exhibition in the UK of the work of American artist Jean-Michel Basquiat (1960-1988). The exhibition brings together an outstanding selection of more than 100 works, many never seen before in the UK. The Grime and the Glamour: NYC 1976-90, a major season at Barbican Cinema, complements the exhibition, and Too Young for What?, a day celebrating the spirit, energy and creativity of Basquiat, showcases a range of new work by young people from across east London and beyond. Barbican Art Gallery also presents Purple, a new immersive, six-channel video installation by British artist and filmmaker John Akomfrah for the Curve, charting incremental shifts in climate change across the planet.

Poly Evolver Keyboard: Synthesizer Performance Wonder (Text: Björn Bojahr, Photo: Dieter Stork)

Keyboards test (CD track 02 and data section). Dave Smith Instruments Poly Evolver Keyboard: Synthesizer Performance Wonder. Text: Björn Bojahr. Photo: Dieter Stork. The instrument's front panel uses its full width and, through the arrangement of the parameters and small yellow lines, clearly shows the structure of the sound engine. As soon as I press a key, the step sequencer starts running, each step with its own LED. Four voice LEDs show which voice is producing the current note. But it is not only the looks that convince: the Poly Evolver Keyboard (PEK) also sounds sensational. Four complete Evolvers hide under the hood, including the step sequencer and the distinctive interconnection of analog and digital elements. You do not have to address these four modules separately; the synthesizer can also couple them into one polyphonic sound engine. Broad pads or a stereo lead sound are often only really fun when played polyphonically. The PEK is ideally equipped for this use, since the sound engine is built entirely in stereo. The step sequencer is an often unjustly neglected feature of the Evolver sound engine, given that it can control up to four parameters independently of one another. Programming your own Evolver sequences has never been as comfortable as with the keyboard version, and believe me: when you have the knobs right in front of you, you use them. During this test I spent rather little time on the keys; I was far more fascinated by the direct access to the step sequencers. KEYBOARDS 10.2005. Profile: Manufacturer / distributor: Dave Smith Instruments. Internet: www.davesmithinstruments.com. Connections: ... side are a visual delight, but when playing I had some problems with the pitch-bend controller: the wheel's spring and center detent require too much force.

DAS WAR (Der Geschichtldrucker) / THIS WAS (The Tattletale Printer)

In the spring of 2016, Thomas Baldischwyler got a call from the office of the Federal Government Commissioner for Culture. The caller underscored the need for absolute discretion until the winner of the Rome Prize would be officially announced. But Baldischwyler couldn’t resist the temptation to post a coded statement on Facebook, and so he chose a line from Thomas Bernhard’s “Extinction”: “Standing at the open window, I said to myself, I’m here to stay, nothing will make me leave.” Rome, the exile of Bernhard’s protagonist Franz-Josef Murau, was also the writer’s own exile. And he wouldn’t have traded it for any place in the world. Arriving in Rome in September 2017, Baldischwyler soon doubted that he would stay for long. His original project (the idea was to draw a connection between the story of how the Italian entrepreneur Silvio Berlusconi went into politics in the early 1990s and two dystopian techno records that the Roman producers Leo Anibaldi and Lory D released in 1993, examining the economic foundations of creative practices in light of their substantive concerns and vice versa) collided with the unfolding sociocultural crisis in Italy. The country seemed utterly paralyzed before the upcoming elections. There was nothing people were less keen on than talking about their fairly recent past. One bright spot: the freelance curator Pier Paolo Pancotto was interested in Baldischwyler’s record label Travel By Goods and invited him to perform a sort of avant-garde DJ set together with a couple of Italian artists at the Villa Medici.

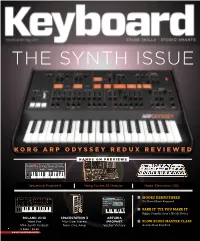

The Synth Issue

THE SYNTH ISSUE. KORG ARP ODYSSEY REDUX REVIEWED. HANDS ON PREVIEWS: Sequential Prophet-6, Moog System 55 Modular, Modal Electronics 002. MODES DEMYSTIFIED: No Sheet Music Required. FAKE IT ’TIL YOU MAKE IT: Bigger Samples Aren’t Always Better. ROLAND JD-Xi: Mini-Synth to Beat. SPACESTATION 3: Meet the Mid-Side Stereo from One Amp. ARTURIA iPROPHET: Vector Victory. SLOW BLUES MASTER CLASS: Get the Real-Deal Feel. 5.2015 | $5.99. A MUSIC PLAYER PUBLICATION. 40 YEARS OF GROUNDBREAKING SYNTHS: Grammy® winner and MIDI co-creator Dave Smith has designed more groundbreaking synths than anyone. Ever. Whatever your musical need or budget, Dave’s award-winning line of analog and analog/digital hybrid instruments has the right tool for you. Pro 2 · Prophet 12 · Prophet ’08 · Mopho · Mopho x4 · Mopho SE · Tetra · Tempest · Evolver. THE PROPHET-6 IS COMING SOON! www.davesmithinstruments.com. Designed and built in California. CONTENTS, MAY 2015. TALK: Voices, tips, and breaking news from the Keyboard community. NEW GEAR: SYNTH EDITION. In our special synthesizer-focused issue, we bring you first-look coverage of the Dave Smith Instruments Prophet 6, Modal Electronics 002, and Moog’s Modular systems, plus ten more new synth releases. HEAR: ROAD WARRIORS. In NRBQ’s 50th anniversary year, keyboardist and founding member Terry Adams discusses his touring gear, and the Monk tribute he’s always dreamed of making. KNOW: SYNTH SOLOING. We’ve explored his sound; now dive into the playing style of Jan Hammer (notation example: D minor pentatonic). BEYOND THE MANUAL: Learn tweaks to get more soft synth mileage from your computer. DANCE: Making classic sounds with the ARP. REVIEW: ANALOG SYNTH. Korg ARP Odyssey.

Warmth: Parallel

spectrumculture.com/2018/05/14/warmth-parallel-review. Daniel Bromfield, May 14, 2018. As Warmth, Spanish producer Agustín Mena makes ambient music of a fearsome purity. This is not music that seeks to elicit an emotional reaction or stake out a psychedelic space for the listener to explore. This is ambient in the strictest, most workmanlike sense, and though Mena comes from club music (he was a dub techno producer before shedding all aspects of his sound save distant chords), his music is closer to what Brian Eno envisioned when he stipulated a new form of music that could act as “wallpaper.” Eno recently released a box set of music intended to accompany art installations. It sounds like free jazz compared to Warmth. But over Mena’s impressive recent run of albums (2016’s Essay, last year’s Home, and the brand-new Parallel), little wrinkles have appeared in the project that reveal more going on than meets the eye. Though the three records have almost exactly the same sound palette, the mood of each is distinct and obvious. Essay was positive, an album for meditation and deep thought, a happy place; it accomplished this through an expansive presence in the stereo field and subtle frills like electric piano. Home was colder and more insular, occupying the dead center of the stereo field and focusing on heavy bass tones rather than comfortable midrange. Parallel finds a neat middle ground between the two, the midrange allowing the music to maintain the illusion of wide-open space while the bass injects a subterranean sense of foreboding.

Public Voices

Public Voices. Government at the Margins: Locating/Deconstructing Ways of Performing Government in the Age of Excessive Force, Hyper-Surveillance, Civil Disobedience, and Political Self-interest. Lessons Learned in “Post-Racial” America Symposium. Symposium Editor: Valerie L. Patterson, School of International and Public Affairs, Florida International University. Editor-in-Chief: Marc Holzer, Institute for Public Service, Suffolk University. Managing Editor: Iryna Illiash. Volume XV, Number 2. Publisher: Public Voices is published by the Institute for Public Service, Suffolk University. Copyright © 2018. Subscriptions: Subscriptions are available on a per volume basis (two issues) at the following rates: $36 per year for institutions, agencies and libraries; $24 per year for individuals; $12 for single copies and back orders. Noninstitutional subscribers can make payments by personal check or money order to: Public Voices, Suffolk University, Institute for Public Service, 120 Tremont Street, Boston, MA 02108, Attn: Dr. Marc Holzer. All members of SHARE receive an annual subscription to Public Voices. Members of ASPA may add SHARE membership on their annual renewal form, or may send the $20 annual dues at any time to: ASPA, C/o SunTrust Bank, Department 41, Washington, DC 20042-0041. Public Voices (Online) ISSN 2573-1440. Public Voices (Print) ISSN 1072-5660. Editor-in-Chief: Marc Holzer, Suffolk University, Institute for Public Service. Managing Editor: Iryna Illiash, CCC Creative Center. Editorial Assistant: Jonathan Wexler, Rutgers University - Campus at Newark. Editorial Assistant: Mallory Sullivan, Suffolk University, Institute for Public Service. Editorial Board: Robert Agranoff, Indiana University; Robert A.

DJ Andy Stott Explores Silence and Noise with Beautiful Soundscapes at VEGA

2016-09-09 11:00 CEST. VEGA presents: DJ Andy Stott explores silence and noise with beautiful soundscapes at VEGA. On Thursday, December 8, the critically acclaimed and innovative Andy Stott takes over Lille VEGA and brings dreamlike and trance-like states to his audience with beautiful soundscapes. The Manchester-based producer Andy Stott refuses to let his electronic talent be limited by predefined genres. He ranges across everything from deep techno, garage, quiet house, footwork and grime to undulating pop mutations. There are nevertheless constants in his music: Stott's unique alternation between gloomy and dreamy textures, his play with the rhythm of the songs, and an airy, vulnerable vocal, which together create depth and complexity in a captivatingly beautiful musical universe. Intensity and intelligence in admirable uncompromisingness. Most recently, Stott released the album Too Many Voices in spring 2016; its title can be read as a fresh reference to his diversity and constant development in music. His old piano teacher, Alison Skidmore, contributes her extremely delicate vocals to several of the productions. Too Many Voices is an album that, with extreme intelligence and undefined rhythms, evokes both melancholy and dreamy feelings in the listener. Have a listen to the track “Butterflies” from the album. Stott had his real breakthrough with Luxury Problems in 2012, a melodic techno and house album. The following album, which Resident Advisor named album of the year in 2014, came out at the end of 2014 under the title Faith In Strangers. Soundvenue wrote of the album at the time: “One is left with the sense of the album as a small melodic paradox that explores the relationship between a flowing, otherworldly silence and an industrial, earthly noise, and that is quite interesting.” Facts about the concert: Andy Stott (UK), Thursday the 8th

Moogfest Cover Flat.Indd

MOOGFEST: Your weekend-long dance party starts here. This year's Moogfest (the third for the locally held immersion course in electronic music) brings some changes: a smaller roster, for one. And no over-the-top headliner. No Flaming Lips. No Massive Attack. Think of it as the perfect opportunity to get to know the 2012 performers better. It's also a prime chance to check out some really experimental sounds, from ... IN PLAIN SIGHT: Events at Moog Factory. Moog Music makes Moog instruments, including the Little Phatty and Voyager. The factory is in downtown Asheville at 160 Broadway St., where the analog parts are manufactured by hand. Check out these events happening there: Even without a Moogfest ticket, you can go to MinimoogFest. It’s free and open to the public. Check out DJ sets by locals Marley Carroll and In Plain Sight (winners of the Remix Orbital for Moogfest contest), along with MSSL CMMND (featuring Chad Hugo from N.E.R.D. and Daniel ... JUSTICE: Remix Masters. French electro-pop duo Justice is the unofficial start to Moogfest: the band’s American tour brings them to the U.S. Cellular Center on Thursday, Oct. 25. And while the show isn’t part of the Moogfest lineup, festival attendees did have first dibs on pre-sale tickets. Gaspard Augé and Xavier de Rosnay gained recognition back in ‘03 when they entered their remix of “Never Be Alone” by Simian in a college radio contest. Even before Justice’s ‘07 debut, †, was released, they’d won a best video accolade at the ‘06 MTV Europe Music Awards.

Techno's Journey from Detroit to Berlin

The Day We Lost the Beat: Techno’s Journey From Detroit to Berlin. Advisor: Professor Bryan McCann. Honors Program Chair: Professor Amy Leonard. James Constant. Honors Thesis submitted to the Department of History, Georgetown University, 9 May 2016. Table of Contents: Acknowledgements; Introduction; Glossary of terms and individuals; The techno sound; Listening suggestions for each chapter; Chapter One: Proto-Techno in Detroit: They Heard Europe on the Radio (The Electrifying Mojo; Cultural and economic environment of middle-class young black Detroit; Influences on early techno and differences between house and techno; The Belleville Three and proto-techno; Kraftwerk’s influence); Chapter Two: Frankfurt, Berlin, and Rave in the late 1980s (Frankfurt; Acid House and Rave in Chicago and Europe; Berlin, Ufo and the Love Parade); Chapter Three: Tresor, Underground Resistance, and the Berlin sound (Techno’s departure from the UK; A trip to Chicago; Underground Resistance; The New Geography of Berlin; Tresor Club; Hard Wax and Basic Channel); Chapter Four: Conclusion and techno today (Hip-hop and techno; Techno today); Bibliography. Acknowledgements: Thank you, Mom, Dad, and Mary, for putting up with my incessant music (and me ruining last Christmas with this thesis), and to Professors Leonard and McCann, along with all of those in my thesis cohort. I would have never started this thesis if not for the transformative experiences I had at clubs and afterhours in New York and Washington, so to those at Good Room, Flash, U Street Music Hall, and Midnight Project, keep doing what you’re doing.