An Introduction and Evaluation of a Lossless Fuzzy Binary AND/OR Compressor

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Modification of Adaptive Huffman Coding for Use in Encoding Large Alphabets

ITM Web of Conferences 15, 01004 (2017) DOI: 10.1051/itmconf/20171501004 CMES’17 Modification of Adaptive Huffman Coding for use in encoding large alphabets. Mikhail Tokovarov, Lublin University of Technology, Electrical Engineering and Computer Science Faculty, Institute of Computer Science, Nadbystrzycka 36B, 20-618 Lublin, Poland. Abstract. The paper presents the modification of Adaptive Huffman Coding method, a lossless data compression technique used in data transmission. The modification was related to the process of adding a new character to the coding tree, namely, the author proposes to introduce two special nodes instead of single NYT (not yet transmitted) node as in the classic method. One of the nodes is responsible for indicating the place in the tree a new node is attached to. The other node is used for sending the signal indicating the appearance of a character which is not presented in the tree. The modified method was compared with existing methods of coding in terms of overall data compression ratio and performance. The proposed method may be used for large alphabets i.e. for encoding the whole words instead of separate characters, when new elements are added to the tree comparatively frequently. 1 Introduction. Efficiency and speed: the two issues that the current world of technology is centred at. Information technology (IT) is no exception in this matter. Such an area of IT as social media has become extremely popular and widely used, so that high transmission speed has gained a great importance. [...] Huffman coding is frequently chosen for implementing open source projects [3]. The present paper contains the description of the modification that may help to improve the algorithm of adaptive Huffman coding in terms of data savings. 2 Study of related works

Data Compression in Solid State Storage

Data Compression in Solid State Storage. John Fryar [email protected] Santa Clara, CA, August 2013

Acknowledgements: This presentation would not have been possible without the counsel, hard work and graciousness of the following individuals and/or organizations: Raymond Savarda Sandgate Technologies

Disclaimers: The opinions expressed herein are those of the author and do not necessarily represent those of any other organization or individual unless specifically cited. A thorough attempt to acknowledge all sources has been made. That said, we’re all human…

Learning Objectives: At the conclusion of this tutorial the audience will have been exposed to:
• The different types of Data Compression
• Common Data Compression Algorithms
• The Deflate/Inflate (GZIP/GUNZIP) algorithms in detail
• Implementation Options (Software/Hardware)
• Impacts of design parameters in Performance
• SSD benefits and challenges
• Resources for Further Study

Agenda:
• Background, Definitions, & Context
• Data Compression Overview
• Data Compression Algorithm Survey
• Deflate/Inflate (GZIP/GUNZIP) in depth
• Software Implementations
• HW Implementations
• Tradeoffs & Advanced Topics
• SSD Benefits and Challenges
• Conclusions

Definitions:

| Item | Description | Comments |
| --- | --- | --- |
| Open System | A system which will compress data for use by other entities, i.e. the compressed data will exit the system | Must strictly adhere to standards on compress / decompress algorithms. Interoperability among vendors mandated for Open Systems |
| Closed System | A system which utilizes compressed data internally but does not expose compressed data to the outside world | Can support a limited, optimized subset of standard. Also allows custom algorithms. No interoperability req’d. |

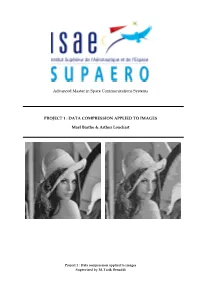

Advanced Master in Space Communications Systems PROJECT 1 : DATA COMPRESSION APPLIED to IMAGES Maël Barthe & Arthur Louchar

Advanced Master in Space Communications Systems PROJECT 1: DATA COMPRESSION APPLIED TO IMAGES. Maël Barthe & Arthur Louchart. Supervised by M. Tarik Benaddi. Special thanks to: Lenna Sjööblom. In the early seventies, an unknown researcher at the University of Southern California working on compression technologies scanned in the image of Lenna Sjööblom centerfold from Playboy magazine (playmate of the month November 1972). Since that time, images of the Playmate have been used as the industry standard for testing ways in which pictures can be manipulated and transmitted electronically. Over the past 25 years, no image has been more important in the history of imaging and electronic communications. Because of the ubiquity of her Playboy photo scan, she has been called the "first lady of the internet". The title was given to her by Jeff Seideman in a press release he issued announcing her appearance at the 50th annual IS&T Conference (Imaging Science & Technology). This project is dedicated to Lenna. Table of contents: Introduction; 1. What is data compression?; 1.1. Definition

The Lempel Ziv Algorithm

The Lempel Ziv Algorithm. Christina Zeeh, Seminar "Famous Algorithms", January 16, 2003. The Lempel Ziv Algorithm is an algorithm for lossless data compression. It is not a single algorithm, but a whole family of algorithms, stemming from the two algorithms proposed by Jacob Ziv and Abraham Lempel in their landmark papers in 1977 and 1978. Lempel Ziv algorithms are widely used in compression utilities such as gzip, GIF image compression and the V.42 modem standard. [Figure 1: The Lempel Ziv Algorithm Family] This report shows how the two original Lempel Ziv algorithms, LZ77 and LZ78, work and also presents and compares several of the algorithms that have been derived from the original Lempel Ziv algorithms. Contents: 1 Introduction to Data Compression; 2 Dictionary Coding (2.1 Static Dictionary, 2.2 Semi-Adaptive Dictionary, 2.3 Adaptive Dictionary); 3 LZ77 (3.1 Principle, 3.2 The Algorithm, 3.3 Example, 3.4 Improvements: 3.4.1 LZR, 3.4.2 LZSS, 3.4.3 LZB, 3.4.4 LZH; 3.5 Comparison); 4 LZ78 (4.1 Principle, 4.2 Algorithm, 4.3 Example, 4.4 Improvements: 4.4.1 LZW, 4.4.2 LZC, 4.4.3 LZT, 4.4.4 LZMS, 4.4.5 LZJ, 4.4.6 LZFG; 4.5 Comparison, 4.6 Comparison LZ77 and LZ78). 1 Introduction to Data Compression: Most data shows patterns and is subject to certain constraints.

A Comparative Study of Text Compression Algorithms

International Journal of Wisdom Based Computing, Vol. 1 (3), December 2011, p. 68. A Comparative Study Of Text Compression Algorithms. Robert Lourdusamy, Computer Science & Info. System Department, Community College in Al-Qwaiya, Shaqra University, KSA (Government Arts College, Coimbatore-641 018), E-mail: [email protected]; Senthil Shanmugasundaram, Department of Computer Science, Vidyasagar College of Arts and Science, Udumalpet, Tamilnadu, India, E-mail: [email protected]. Abstract: Data Compression is the science and art of representing information in a compact form. For decades, Data compression has been one of the critical enabling technologies for the ongoing digital multimedia revolution. There are lot of data compression algorithms which are available to compress files of different formats. This paper provides a survey of different basic lossless data compression algorithms. Experimental results and comparisons of the lossless compression algorithms using Statistical compression techniques and Dictionary based compression techniques were performed on text data. Among the statistical coding techniques the algorithms such as Shannon-Fano Coding, Huffman coding, Adaptive Huffman coding, Run Length Encoding and Arithmetic [...] techniques because of the way human visual and hearing systems work. Lossy algorithms achieve better compression effectiveness than lossless algorithms, but lossy compression is limited to audio, images, and video, where some loss is acceptable. The question of the better technique of the two, “lossless” or “lossy” is pointless as each has its own uses with lossless techniques better in some cases and lossy technique better in others. There are quite a few lossless compression techniques nowadays, and most of them are based on dictionary or probability and entropy.

Connectivity and Attribute Compression of Triangle Meshes Von Buelow, Max (2020)

Connectivity and Attribute Compression of Triangle Meshes. von Buelow, Max (2020). DOI (TUprints): https://doi.org/10.25534/tuprints-00014189 License: CC-BY 4.0 International - Creative Commons, Attribution. Publication type: Bachelor's thesis. Department: 20 Department of Computer Science. Source of the original: https://tuprints.ulb.tu-darmstadt.de/14189 Bachelor's Thesis: Connectivity and Attribute Compression of Triangle Meshes. Maximilian Alexander von Bülow, March 2017. Technische Universität Darmstadt, Department of Computer Science, Graphics, Capture and Massively Parallel Computing (GCC). Supervisors: Dr. rer. nat. Stefan Guthe, Dr.-Ing. Michael Goesele. Declaration of Authorship: I certify that the work presented here is, to the best of my knowledge and belief, original and the result of my own investigations, except as acknowledged, and has not been submitted, either in part or whole, for a degree at this or any other University. Darmstadt, 13.03.2017, Maximilian Alexander von Bülow. Abstract: Triangle meshes are used in various fields of applications and are able to consume a voluminous amount of space due to their sheer size and redundancies caused by common formats. Compressing connectivity and attributes of these triangle meshes decreases the storage consumption, thus making transmissions more efficient. I present in this thesis a compression approach using Arithmetic Coding that predicts attributes and only stores differences to the predictions, together with minimal connectivity information. It is applicable for arbitrary triangle meshes and compresses to use both of their connectivity and attributes with no loss of information outside of re-ordering the triangles. My approach achieves a compression rate of approximately 3.50:1, compared to the original representations and compresses in the majority of cases with rates between 1.20:1 and 1.80:1, compared to GZIP.

Guaranteed Compression of Short Unicode Strings Using Entropy, Dictionary and Delta Encoding Techniques

Unishox - Guaranteed Compression of Short Unicode Strings using Entropy, Dictionary and Delta encoding techniques. Arundale Ramanathan, August 15, 2019. Abstract: A new hybrid encoding method is proposed, with which short unicode strings could be compressed using context aware pre-mapped codes and delta coding resulting in surprisingly good ratios. Also shown is how this technique can guarantee compression for any language sentence of minimum 3 words. 1 Summary: Compression of Short Unicode Strings of arbitrary lengths has not been addressed sufficiently by lossless entropy encoding methods so far. Although it appears inconsequential, space occupied by such strings becomes significant in memory constrained environments such as Arduino Uno and when attempting storage of such independent strings in a database. While block compression is available for databases, retrieval efficiency could be improved if the strings are individually compressed. 2 Basic Definitions: In information theory, entropy encoding is a lossless data compression scheme that is independent of the specific characteristics of the medium [1]. One of the main types of entropy coding is about creating and assigning a unique prefix-free code to each unique symbol that occurs in the input. These entropy encoders then compress data by replacing each fixed-length input symbol with the corresponding variable-length prefix-free output code word. According to Shannon's source coding theorem, the optimal code length for a symbol is $-\log_b P$, where $b$ is the number of symbols used to make output codes and $P$ is the probability of the input symbol [2]. Therefore, the most common symbols use the shortest codes.
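The code-length formula quoted above can be checked numerically. The following is a minimal Python sketch, not part of the Unishox paper: the function name `optimal_code_lengths` and the sample string are illustrative assumptions, and symbol probabilities are simply estimated from character counts.

```python
import math
from collections import Counter

def optimal_code_lengths(text, b=2):
    """Return the ideal code length -log_b(P) for each symbol in text.

    P is estimated as the relative frequency of the symbol (an assumption
    made for this sketch); b is the size of the output alphabet.
    """
    counts = Counter(text)
    total = len(text)
    return {sym: -math.log(n / total, b) for sym, n in counts.items()}

# Hypothetical sample: 'a' has P = 1/2, 'b' has P = 1/4, 'c' and 'd' have P = 1/8.
sample = "aaaabbcd"
lengths = optimal_code_lengths(sample)

# Average ideal length per symbol (the entropy of the estimated distribution).
average_bits = sum(lengths[ch] for ch in sample) / len(sample)

for sym in sorted(lengths):
    print(sym, round(lengths[sym], 3))
print("average bits per symbol:", round(average_bits, 3))
```

With binary output codes (b = 2) the most frequent symbol gets the shortest ideal length, which matches the statement that the most common symbols use the shortest codes.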

LAURI HAHNE CHOOSING COMPRESSOR for COMPUTING NORMALIZED COMPRESSION DISTANCE Master of Science Thesis

LAURI HAHNE: CHOOSING COMPRESSOR FOR COMPUTING NORMALIZED COMPRESSION DISTANCE. Master of Science Thesis. Examiners: Prof. Olli Yli-Harja, Prof. Matti Nykter. Examiners and topic approved by the Faculty of Computer and Electrical Engineering council meeting on 4th May, 2011. ABSTRACT. Tampere University of Technology (Tampereen teknillinen yliopisto), Degree Programme in Signal Processing and Communications Engineering. HAHNE, LAURI: Choosing a compressor for computing normalized compression distance. Master of Science thesis, 43 pages, December 2013. Major: Signal Processing. Examiners: Prof. Olli Yli-Harja, Prof. Matti Nykter. Keywords: normalized compression distance, algorithmic information, compression programs. Modern measurement methods, for example in biology, produce very large amounts of data to be processed. Large amounts of measurement data, however, cause problems for data processing, because it should be possible to use all of this data as an aid in classification. Traditional classification methods are based on feature extraction, which can be difficult or impossible if the features of interest are not known or there are too many of them. Normalized information distance is a measure that can in theory be used to classify data of any type on the basis of its algorithmic features. Unfortunately, using the normalized information distance requires knowing the algorithmic Kolmogorov complexity, which is not possible, because the fully theoretical Kolmogorov complexity cannot be computed. Normalized compression distance
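The excerpt breaks off just as it introduces the normalized compression distance (NCD). As a rough illustration only, the sketch below uses the commonly cited definition NCD(x, y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y)), where C is the compressed size in bytes. The formula is not taken from the excerpt, and the choice of Python's `zlib` as the compressor, the helper names, and the test inputs are all assumptions made for this example; the thesis itself is about how the choice of compressor affects the result.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Size in bytes of data after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))

def ncd(x: bytes, y: bytes) -> float:
    """Normalized compression distance with zlib standing in for C."""
    cx, cy = compressed_size(x), compressed_size(y)
    cxy = compressed_size(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)

# Hypothetical inputs: a repetitive text and deterministic pseudo-random noise.
text = b"the quick brown fox jumps over the lazy dog " * 20
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(1000))

print("NCD(text, text): ", round(ncd(text, text), 3))
print("NCD(text, noise):", round(ncd(text, noise), 3))
```

A value near 0 suggests the two inputs share most of their information, while a value near 1 suggests they share little. Because a real compressor only approximates Kolmogorov complexity, the values depend on the compressor used.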

Study of LZ77 and LZ78 Data Compression Techniques Suman M

ISSN: 2319-5967, ISO 9001:2008 Certified. International Journal of Engineering Science and Innovative Technology (IJESIT), Volume 4, Issue 3, May 2015. Study of LZ77 and LZ78 Data Compression Techniques. Suman M. Choudhary, Anjali S. Patel, Sonal J. Parmar. Abstract: Data compression is defined as the science and art of representing information in a crisply condensed form. For decades, data compression has been considered a critical technology for the ongoing digital multimedia revolution. There is a variety of data compression algorithms available to compress files of different formats. This paper provides a survey of basic lossless data compression algorithms such as LZ77 and LZ78. Index Terms: Data Compression, LZ77, LZ78. I. INTRODUCTION Data compression reduces the amount of space needed to store data or the amount of time needed to transmit it. The size of the data is reduced by removing excessive or repeated information. The goal of data compression is to represent a source in digital form with as few bits as possible while meeting the minimum requirement for reconstruction of the original. There are two kinds of compression techniques in terms of reconstructing the original source: lossless and lossy compression. With a lossless technique, decompression returns the original data exactly; with a lossy technique, it does not. The Lempel Ziv algorithm is an algorithm for lossless data compression. It is an offshoot of the two algorithms proposed by Jacob Ziv and Abraham Lempel in their landmark papers of 1977 and 1978, LZ77 and LZ78 [1].
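To make the idea of removing repeated information while keeping the original recoverable more concrete, here is a small textbook-style LZ78 sketch in Python. It is not the implementation from the paper above: the function names, the (index, character) pair representation, and the sample string are assumptions chosen for illustration.

```python
def lz78_encode(text):
    """Encode text as (dictionary index, next character) pairs."""
    dictionary = {"": 0}          # phrase -> index; index 0 is the empty phrase
    phrase = ""
    output = []
    for ch in text:
        if phrase + ch in dictionary:
            phrase += ch          # keep extending the longest known phrase
        else:
            output.append((dictionary[phrase], ch))
            dictionary[phrase + ch] = len(dictionary)
            phrase = ""
    if phrase:
        # Input ended inside a known phrase: emit it as prefix index + last char.
        output.append((dictionary[phrase[:-1]], phrase[-1]))
    return output

def lz78_decode(pairs):
    """Rebuild the text by replaying the dictionary construction."""
    phrases = [""]
    pieces = []
    for index, ch in pairs:
        phrase = phrases[index] + ch
        phrases.append(phrase)
        pieces.append(phrase)
    return "".join(pieces)

pairs = lz78_encode("abababab")
print(pairs)
print(lz78_decode(pairs))
```

Decoding the pairs reproduces the input exactly, which is the defining property of a lossless technique; repeated substrings are replaced by short references to earlier dictionary entries.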

Intra Line Copy for HEVC Screen Content Coding

Intra Line Copy for HEVC Screen Content Coding. Tsui-Shan Chang, Chun-Chi Chen, Ru-Ling Liao, Che-Wei Kuo, Wen-Hsiao Peng. Department of Computer Science, National Chiao Tung University, 1001 Ta-Hsueh Rd., Hsinchu 30010, Taiwan. Email: [email protected], [email protected] Abstract: This paper presents an intra line copy mode, which introduces a finer-granularity block partitioning structure for the intra copying technique, to further improve the coding efficiency for screen content. This mode first divides a prediction block horizontally or vertically into 1-pixel-wide lines and then operates intra copying line-by-line from the reconstructed pixels in the current frame. It shares some parallels with the notion of string matching widely used for lossless data compression. It, however, is more compliant to the current HEVC design and is parallel-friendly. This paper shall detail its various design issues, including the design of a fast algorithm based on hashing techniques and the impact of search window size on its performance and complexity trade-off. [...] content but not for screen content. Accordingly, two alternative approaches turn to make the best of the signal characteristics of typical screen contents, that is, the frequent occurrence of periodical patterns containing a few colors. The palette coding [4] bypasses the intra prediction in addition to skipping the transform, and redesigns the quantization and entropy coder. The mapping from quantization indices to sample values is explicitly signaled, and a method similar to the sliding window Lempel-Ziv coding is used as instead for coding of quantization indices. On the contrary, the string matching approaches [5]-[6] tend

Comparative Study of Dictionary Based Compression Algorithms on Text Data

IJCSNS International Journal of Computer Science and Network Security, VOL.16 No.2, February 2016, p. 88. Comparative Study of Dictionary based Compression Algorithms on Text Data. Amit Jain, Kamaljit I. Lakhtaria, Sir Padampat Singhania University, Udaipur (Raj.) 323601 India. Abstract: With increasing amount of text data being stored rapidly, efficient information retrieval and Storage in the compressed domain has become a major concern. Compression is the process of coding that will effectively reduce the total number of bits needed to represent certain information. Data compression has been one of the critical enabling technologies for the ongoing digital multimedia revolution. There are lots of data compression algorithms which are available to compress files of different formats. This paper presents survey on several dictionary based lossless data compression algorithms and compares their performance based on compression ratio and time ratio on Encoding and decoding. A set of selected algorithms are examined and implemented to evaluate the performance in compressing benchmark text files. [...] transmitting data, for general purpose use we need to decrypt the file. Lossless compression technique can be broadly categorized in to two classes: i) Entropy Based Encoding: In this compression process the algorithm first counts the frequency of occurrence of each unique symbol in the document. Then the compression technique replaces the symbols with the algorithm generated symbol. These generated symbols are fixed for a certain symbol of the original document; and doesn’t depend on the content of the document. The length of the generated symbols is variable and it varies on the frequency of the certain symbol in the original document. ii) Dictionary Based Encoding:
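The entropy-based encoding steps described in this excerpt (count symbol frequencies, then replace each symbol with a fixed, variable-length generated code) can be sketched with static Huffman coding. Huffman coding is only one possible instance and is an assumption here, since the passage does not name a specific algorithm; the function name `huffman_codes` and the sample text are likewise illustrative.

```python
import heapq
from collections import Counter

def huffman_codes(text):
    """Build a prefix-free code table {symbol: bit string} from symbol counts."""
    counts = Counter(text)
    if not counts:
        return {}
    # Heap entries: (frequency, unique tie-breaker, partial code table).
    heap = [(n, i, {sym: ""}) for i, (sym, n) in enumerate(sorted(counts.items()))]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A single distinct symbol still needs a one-bit code.
        return {sym: "0" for sym in heap[0][2]}
    next_id = len(heap)
    while len(heap) > 1:
        n1, _, table1 = heapq.heappop(heap)   # two least frequent subtrees
        n2, _, table2 = heapq.heappop(heap)
        merged = {sym: "0" + code for sym, code in table1.items()}
        merged.update({sym: "1" + code for sym, code in table2.items()})
        heapq.heappush(heap, (n1 + n2, next_id, merged))
        next_id += 1
    return heap[0][2]

sample = "aaaabbcd"
codes = huffman_codes(sample)
encoded = "".join(codes[ch] for ch in sample)

print(codes)
print(encoded, len(encoded), "bits instead of", 8 * len(sample))
```

Frequent symbols receive shorter codes, so the encoded length depends on the symbol frequencies, as the passage states. A complete compressor would also have to store or transmit the code table so the decoder can reverse the mapping.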

Image Compression

Image Compression

Why? Standard definition (SD) television movie (raw data):

$$30\ \frac{\text{frames}}{\text{sec}} \times (720 \times 480)\ \frac{\text{pixels}}{\text{frame}} \times 3\ \frac{\text{bytes}}{\text{pixel}} = 31{,}104{,}000\ \text{bytes/sec}$$

A two-hour movie:

$$31{,}104{,}000\ \frac{\text{bytes}}{\text{sec}} \times 60^2\ \frac{\text{sec}}{\text{hour}} \times 2\ \text{hours} \approx 224\ \text{GB}$$

Need 27 8.5GB dual-layer DVDs! High-definition (HD) television: 1920x1080x24 bits/image!

Image Compression (Cont’d). Standard definition (SD) television movie (raw data):

$$30\ \frac{\text{frames}}{\text{sec}} \times (720 \times 480)\ \frac{\text{pixels}}{\text{frame}} \times 24\ \frac{\text{bits}}{\text{pixel}} = 248{,}832{,}000\ \text{bit/sec} > 200\ \text{Mbit/sec}$$

http://en.wikipedia.org/wiki/Bit_rate 3.5Mbits/sec with MPEG-2 compression.

Fundamentals. Data represent information, in different ways. Representations that contain irrelevant or repeated information contain redundant data. Given two representations of the same information with $b$ and $b'$ bits, the relative data redundancy is

$$R = 1 - \frac{1}{C}, \quad \text{where } C = \frac{b}{b'} \text{ is called the compression ratio.}$$

In digital image processing, $b$ is the # bits for the 2D array representation and $b'$ is the compressed representation.

Three Types of Data Redundancies.
Coding redundancy:
• Code/code book is a system to represent information
• Code length is the number of symbols in each code word
• Do we really need 8 bits to represent a gray-level pixel?
Spatial and temporal redundancy:
• Neighboring (spatially or temporally) pixels usually have similar intensities!
• Do we need to represent every pixel?
Irrelevant information:
• Some image information can be ignored.

Coding Redundancy. Histogram:

$$p_r(r_k) = \frac{n_k}{MN}, \quad k = 0, 1, 2, \ldots, L-1$$

Average # bits required to represent each pixel, where $l(r_k)$ is the number of bits representing each intensity level:

$$L_{avg} = \sum_{k=0}^{L-1} l(r_k)\, p_r(r_k)$$

Total bits: $MN L_{avg}$. Fixed length vs. variable length: $C$ and $R$ with variable length coding?

Spatial and Temporal Redundancy. Same intensity along the horizontal line. The histogram is uniform.
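The arithmetic on these slides can be reproduced in a few lines of Python. This is a sketch under stated assumptions: the function names are invented for the example, the 8.5 GB disc is taken as 8.5 × 10^9 bytes, and the four-level histogram at the end is a hypothetical illustration rather than data from the slides.

```python
import math

def raw_rate_bytes_per_sec(fps, width, height, bytes_per_pixel):
    """Uncompressed video data rate in bytes per second."""
    return fps * width * height * bytes_per_pixel

def compression_ratio(b, b_compressed):
    """C = b / b' for two representations of the same information."""
    return b / b_compressed

def relative_redundancy(c):
    """R = 1 - 1/C."""
    return 1 - 1 / c

def average_code_length(probabilities, code_lengths):
    """L_avg = sum over k of l(r_k) * p_r(r_k)."""
    return sum(p * l for p, l in zip(probabilities, code_lengths))

# SD television figures from the slides.
sd_rate = raw_rate_bytes_per_sec(30, 720, 480, 3)
movie_bytes = sd_rate * 60**2 * 2
dvds_needed = math.ceil(movie_bytes / 8.5e9)

# Raw bit rate versus the quoted 3.5 Mbit/s MPEG-2 rate.
sd_bits = sd_rate * 8
c_mpeg2 = compression_ratio(sd_bits, 3.5e6)
r_mpeg2 = relative_redundancy(c_mpeg2)

# Hypothetical 4-level image: fixed 2-bit code versus a variable-length code.
l_avg = average_code_length([0.5, 0.25, 0.125, 0.125], [1, 2, 3, 3])
c_variable = compression_ratio(2, l_avg)

print(sd_rate, movie_bytes, dvds_needed)
print(sd_bits, round(c_mpeg2, 2), round(r_mpeg2, 4))
print(l_avg, round(c_variable, 3), round(relative_redundancy(c_variable), 3))
```

The last line addresses the slide's closing question for the hypothetical histogram: a variable-length code averaging 1.75 bits per pixel, compared with a fixed 2-bit code, gives a compression ratio of about 1.14 and a relative redundancy of 0.125.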