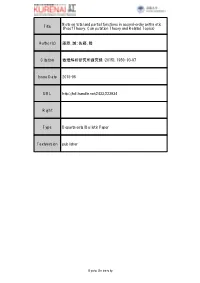

The Complexity of Partial Function Extension for Coverage Functions

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Partial Function Computed by a TM M(W)

CS601, Lecture 2: The Partial Function Computed by a TM

$$M(w) \equiv \begin{cases} y & \text{if } M \text{ on input ``}\triangleright w\text{'' eventually halts with output ``}\triangleright y\text{''} \\ \nearrow & \text{otherwise} \end{cases}$$

$\Sigma_0 \equiv \Sigma - \{\triangleright, \sqcup\}$; usually, $\Sigma_0 = \{0, 1\}$; $w, y \in \Sigma_0^\star$.

Definition 2.1 Let $f : \Sigma_0^\star \to \Sigma_0^\star$ be a total or partial function. We say that $f$ is a partial, recursive function iff $\exists\, \text{TM } M\ (f = M(\cdot))$, i.e., $\forall w \in \Sigma_0^\star\ (f(w) = M(w))$.

Remark 2.2 There is an easy to compute 1:1 and onto map between $\{0, 1\}^\star$ and $\mathbf{N}$ [Exercise]. Thus we can think of the contents of a TM tape as a natural number and talk about $f : \mathbf{N} \to \mathbf{N}$ being a recursive function. If the partial, recursive function $f$ is total, i.e., $f : \mathbf{N} \to \mathbf{N}$, then we say that $f$ is a total, recursive function. A partial function that is not total is called strictly partial.

Some Recursive Functions

Proposition 2.3 The following functions are recursive. They are all total except for $p_{\mathrm{even}}$.

$$\begin{aligned}
\mathrm{copy}(w) &= ww \\
\sigma(n) &= n + 1 \\
\mathrm{plus}(n, m) &= n + m \\
\mathrm{mult}(n, m) &= n \times m \\
\exp(n, m) &= n^m \quad (\text{we let } \exp(0, 0) = 1) \\
\chi_{\mathrm{even}}(n) &= \begin{cases} 1 & \text{if } n \text{ is even} \\ 0 & \text{otherwise} \end{cases} \\
p_{\mathrm{even}}(n) &= \begin{cases} 1 & \text{if } n \text{ is even} \\ \nearrow & \text{otherwise} \end{cases}
\end{aligned}$$

Proof: Exercise: please convince yourself that you can build TMs to compute all of these functions!

Recursive Sets = Decidable Sets = Computable Sets

Definition 2.4 Let $S \subseteq \Sigma^\star$ or $S \subseteq \mathbf{N}$. …
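The difference between the total function χ_even and the strictly partial function p_even in Proposition 2.3 can be illustrated with a minimal Python sketch. This is only an illustration under stated assumptions: "undefined" (a non-halting computation) is modelled by returning `None` instead of actually looping forever, and the helper name `is_extension` is hypothetical, not taken from the lecture notes.

```python
from typing import Callable, Iterable, Optional

# Assumption: an undefined value (divergence) is modelled as None.
PartialFn = Callable[[int], Optional[int]]


def chi_even(n: int) -> int:
    """Total characteristic function of the even numbers."""
    return 1 if n % 2 == 0 else 0


def p_even(n: int) -> Optional[int]:
    """Strictly partial: 1 on even n, undefined (None) on odd n."""
    return 1 if n % 2 == 0 else None


def is_extension(g: PartialFn, f: PartialFn, domain: Iterable[int]) -> bool:
    """Hypothetical helper: g extends f if they agree wherever f is defined."""
    return all(f(n) is None or g(n) == f(n) for n in domain)


if __name__ == "__main__":
    print([chi_even(n) for n in range(4)])
    print([p_even(n) for n in range(4)])
    print(is_extension(chi_even, p_even, range(100)))
```

On the sampled inputs, `chi_even` is a total extension of `p_even`: it agrees with `p_even` on every even number and additionally assigns a value on the odd numbers.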

Iris: Monoids and Invariants As an Orthogonal Basis for Concurrent Reasoning

Iris: Monoids and Invariants as an Orthogonal Basis for Concurrent Reasoning

Ralf Jung (MPI-SWS & Saarland University), David Swasey (MPI-SWS), Filip Sieczkowski (Aarhus University), Kasper Svendsen (Aarhus University), Aaron Turon (Mozilla Research), Lars Birkedal (Aarhus University), Derek Dreyer (MPI-SWS)

Abstract

We present Iris, a concurrent separation logic with a simple premise: monoids and invariants are all you need. Partial commutative monoids enable us to express, and invariants enable us to enforce, user-defined protocols on shared state, which are at the conceptual core of most recent program logics for concurrency. Furthermore, through a novel extension of the concept of a view shift, Iris supports …

… TaDA [8], and others. In this paper, we present a logic called Iris that explains some of the complexities of these prior separation logics in terms of a simpler unifying foundation, while also supporting some new and powerful reasoning principles for concurrency.

Before we get to Iris, however, let us begin with a brief overview of some key problems that arise in reasoning compositionally about shared state, and how prior approaches have dealt with them. …

Enumerations of the Kolmogorov Function

Enumerations of the Kolmogorov Function

Richard Beigel (a), Harry Buhrman (b), Peter Fejer (c), Lance Fortnow (d), Piotr Grabowski (e), Luc Longpré (f), Andrej Muchnik (g), Frank Stephan (h), Leen Torenvliet (i)

Abstract

A recursive enumerator for a function h is an algorithm f which enumerates for an input x finitely many elements including h(x). f is a …

(a) Department of Computer and Information Sciences, Temple University, 1805 North Broad Street, Philadelphia PA 19122, USA. Research performed in part at NEC and the Institute for Advanced Study. Supported in part by a State of New Jersey grant and by the National Science Foundation under grants CCR-0049019 and CCR-9877150.
(b) CWI, Kruislaan 413, 1098SJ Amsterdam, The Netherlands. Partially supported by the EU through the 5th framework program FET.
(c) Department of Computer Science, University of Massachusetts Boston, Boston, MA 02125, USA.
(d) Department of Computer Science, University of Chicago, 1100 East 58th Street, Chicago, IL 60637, USA. Research performed in part at NEC Research Institute.
(e) Institut für Informatik, Im Neuenheimer Feld 294, 69120 Heidelberg, Germany.
(f) Computer Science Department, UTEP, El Paso, TX 79968, USA.
(g) Institute of New Technologies, Nizhnyaya Radishevskaya, 10, Moscow, 109004, Russia. The work was partially supported by Russian Foundation for Basic Research (grants N 04-01-00427, N 02-01-22001) and Council on Grants for Scientific Schools.
(h) School of Computing and Department of Mathematics, National University of Singapore, 3 Science Drive 2, Singapore 117543, Republic of Singapore. …

Division by Zero in Logic and Computing Jan Bergstra

Division by Zero in Logic and Computing

Jan A. Bergstra

To cite this version: Jan Bergstra. Division by Zero in Logic and Computing. 2021. hal-03184956v2
HAL Id: hal-03184956v2, https://hal.archives-ouvertes.fr/hal-03184956v2
Preprint submitted on 19 Apr 2021

Abstract. The phenomenon of division by zero is considered from the perspectives of logic and informatics respectively. Division rather than multiplicative inverse is taken as the point of departure. A classification of views on division by zero is proposed: principled, physics based principled, quasi-principled, curiosity driven, pragmatic, and ad hoc. A survey is provided of different perspectives on the value of 1/0, with for each view an assessment from the perspectives of logic and computing. No attempt is made to survey the long and diverse history of the subject.

1. Introduction

In the context of rational numbers the constants 0 and 1 and the operations of addition ( + ) and subtraction ( − ) as well as multiplication ( · ) and division ( / ) play a key role. When starting with a binary primitive for subtraction, unary opposite is an abbreviation as follows: $-x = 0 - x$, and given a two-place division function, unary inverse is an abbreviation as follows: $x^{-1} = 1/x$. …

Division by Zero: A Survey of Options

Division by Zero: A Survey of Options

Jan A. Bergstra
Informatics Institute, University of Amsterdam, Science Park, 904, 1098 XH, Amsterdam, The Netherlands

Submitted: 12 May 2019. Revised: 24 June 2019.

Abstract

The idea that, as opposed to the conventional viewpoint, division by zero may produce a meaningful result, is long standing and has attracted interest from many sides. We provide a survey of some options for defining an outcome for the application of division in case the second argument equals zero. The survey is limited by a combination of simplifying assumptions which are grouped together in the idea of a premeadow, which generalises the notion of an associative transfield.

1 Introduction

The number of options available for assigning a meaning to the expression 1/0 is remarkably large. In order to provide an informative survey of such options some conditions may be imposed, thereby reducing the number of options. I will understand an option for division by zero as an arithmetical datatype, i.e. an algebra, with the following signature:

• a single sort with name $V$,
• constants 0 (zero) and 1 (one) for sort $V$,
• 2-place functions · (multiplication) and + (addition),
• unary functions − (additive inverse, also called opposite) and $^{-1}$ (multiplicative inverse),
• 2-place functions − (subtraction) and / (division).

Decimal notations like 2, 17, −8 are used as abbreviations, e.g. 2 = 1 + 1, and −3 = −((1 + 1) + 1). With inverse the multiplicative inverse is meant, while the additive inverse is referred to as opposite.

This signature is referred to as the signature of meadows $\Sigma_{Md}$ in [6], with the understanding that both inverse and division (and both opposite and subtraction) are present. …

On Extensions of Partial Functions

Expo. Math. 25 (2007) 345–353, www.elsevier.de/exmath

On extensions of partial functions

A.B. Kharazishvili (a,∗), A.P. Kirtadze (b)
(a) A. Razmadze Mathematical Institute, M. Alexidze Street, 1, Tbilisi 0193, Georgia
(b) I. Vekua Institute of Applied Mathematics of Tbilisi State University, University Street, 2, Tbilisi 0143, Georgia

Received 2 October 2006; received in revised form 15 January 2007

Abstract

The problem of extending partial functions is considered from the general viewpoint. Some aspects of this problem are illustrated by examples, which are concerned with typical real-valued partial functions (e.g. semicontinuous, monotone, additive, measurable, possessing the Baire property). © 2007 Elsevier GmbH. All rights reserved.

MSC 2000: primary: 26A15; 26A21; 26A30; secondary: 28B20

Keywords: Partial function; Extension of a partial function; Semicontinuous function; Monotone function; Measurable function; Sierpiński–Zygmund function; Absolutely nonmeasurable function; Extension of a measure

In this note we would like to consider several facts from mathematical analysis concerning extensions of real-valued partial functions. Some of those facts are rather easy and are accessible to average-level students. But some of them are much deeper, important and have applications in various branches of mathematics. The best known example of this type is the famous Tietze–Urysohn theorem, which states that every real-valued continuous function defined on a closed subset of a normal topological space can be extended to a real-valued continuous function defined on the whole space (see, for instance, [8], Chapter 1, Section 14). …

Partial Monoids: Associativity and Confluence

Journal of Pure and Applied Mathematics: Advances and Applications, Volume 3, Number 2, 2010, Pages 265-285

PARTIAL MONOIDS: ASSOCIATIVITY AND CONFLUENCE

LAURENT POINSOT, GÉRARD H. E. DUCHAMP and CHRISTOPHE TOLLU
Institut Galilée, Laboratoire d’Informatique de Paris-Nord, Université Paris-Nord, UMR CNRS 7030, F-93430 Villetaneuse, France

Abstract

A partial monoid $P$ is a set with a partial multiplication × (and total identity $1_P$), which satisfies some associativity axiom. The partial monoid $P$ may be embedded in the free monoid $P^*$ and the product × is simulated by a string rewriting system on $P^*$ that consists in evaluating the concatenation of two letters as a product in $P$, when it is defined, and a letter $1_P$ as the empty word $\epsilon$. In this paper, we study the profound relations between confluence for such a system and associativity of the multiplication. Moreover, we develop a reduction strategy to ensure confluence, and which allows us to define a multiplication on normal forms associative up to a given congruence of $P^*$. Finally, we show that this operation is associative, if and only if, the rewriting system under consideration is confluent.

2010 Mathematics Subject Classification: 16S15, 20M05, 68Q42.

Keywords and phrases: partial monoid, string rewriting system, normal form, associativity and confluence.

Received May 28, 2010

1. Introduction

A partial monoid is a set equipped with a partially-defined multiplication, say ×, which is associative in the sense that $(x \times y) \times z = x \times (y \times z)$ means that the left-hand side is defined, if and only if the right-hand side is defined, and in this situation, they are equal. …
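The notion of associativity for a partial multiplication described above (both sides defined together, and equal when defined) can be checked by brute force on a small finite example. The following Python sketch is an illustration only: the two operations `bounded_add` and `add_unless_equal` are hypothetical examples chosen here, not taken from the paper, and an undefined product is modelled as `None`.

```python
from itertools import product
from typing import Callable, Iterable, Optional

# Assumption: an undefined product is modelled as None.
PartialOp = Callable[[int, int], Optional[int]]


def bounded_add(bound: int) -> PartialOp:
    """Addition on {0, ..., bound}, defined only when the sum stays in range."""
    def op(x: int, y: int) -> Optional[int]:
        s = x + y
        return s if s <= bound else None
    return op


def add_unless_equal(bound: int) -> PartialOp:
    """Like bounded_add, but additionally undefined when x == y."""
    def op(x: int, y: int) -> Optional[int]:
        if x == y:
            return None
        s = x + y
        return s if s <= bound else None
    return op


def lift(op: PartialOp, x: Optional[int], y: Optional[int]) -> Optional[int]:
    """Apply op, propagating undefinedness of either argument."""
    if x is None or y is None:
        return None
    return op(x, y)


def is_associative(op: PartialOp, elems: Iterable[int]) -> bool:
    """(x*y)*z and x*(y*z) must be defined together and equal when defined."""
    elems = list(elems)
    for x, y, z in product(elems, repeat=3):
        left = lift(op, op(x, y), z)
        right = lift(op, x, op(y, z))
        if left != right:  # also catches "one side defined, the other not"
            return False
    return True


if __name__ == "__main__":
    print(is_associative(bounded_add(5), range(6)))
    print(is_associative(add_unless_equal(5), range(6)))
```

Bounded addition passes the check, while `add_unless_equal` fails it: for x = 1, y = 1, z = 2 the left-hand side is undefined but the right-hand side evaluates to 4.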

Wheels – On Division by Zero Jesper Carlström

ISSN: 1401-5617

Wheels – On Division by Zero

Jesper Carlström

Research Reports in Mathematics, Number 11, 2001
Department of Mathematics, Stockholm University

Electronic versions of this document are available at http://www.matematik.su.se/reports/2001/11

Date of publication: September 10, 2001
2000 Mathematics Subject Classification: Primary 16Y99, Secondary 13B30, 13P99, 03F65, 08A70.
Keywords: fractions, quotients, localization.
Postal address: Department of Mathematics, Stockholm University, S-106 91 Stockholm, Sweden
Electronic addresses: http://www.matematik.su.se, http://www.matematik.su.se/~jesper/

Licentiate thesis in philosophy (Filosofie licentiatavhandling)

Abstract

We show how to extend any commutative ring (or semiring) so that division by any element, including 0, is in a sense possible. The resulting structure is what is called a wheel. Wheels are similar to rings, but $0x = 0$ does not hold in general; the subset $\{x \mid 0x = 0\}$ of any wheel is a commutative ring (or semiring) and any commutative ring (or semiring) with identity can be described as such a subset of a wheel. The main goal of this paper is to show that the given axioms for wheels are natural and to clarify how valid identities for wheels relate to valid identities for commutative rings and semirings.

Contents

1 Introduction
1.1 Why invent the wheel?
1.2 A sketch
2 Involution-monoids
2.1 Definitions and examples
2.2 The construction of involution-monoids from commutative monoids
2.3 Insertion of the parent monoid
…

Dividing by Zero – How Bad Is It, Really?

Dividing by Zero – How Bad Is It, Really?

Takayuki Kihara (1) and Arno Pauly (2)
(1) Department of Mathematics, University of California, Berkeley, USA
(2) Département d’Informatique, Université Libre de Bruxelles, Belgium

Abstract

In computable analysis testing a real number for being zero is a fundamental example of a non-computable task. This causes problems for division: We cannot ensure that the number we want to divide by is not zero. In many cases, any real number would be an acceptable outcome if the divisor is zero, but even this cannot be done in a computable way. In this note we investigate the strength of the computational problem Robust division: Given a pair of real numbers, the first not greater than the other, output their quotient if well-defined and any real number else. The formal framework is provided by Weihrauch reducibility. One particular result is that having later calls to the problem depending on the outcomes of earlier ones is strictly more powerful than performing all calls concurrently. However, having a nesting depth of two already provides the full power. This solves an open problem raised at a recent Dagstuhl meeting on Weihrauch reducibility. As an application for Robust division, we show that it suffices to execute Gaussian elimination.

1998 ACM Subject Classification: F.2.1 Numerical Algorithms and Problems

Keywords and phrases: computable analysis, Weihrauch reducibility, recursion theory, linear algebra

Digital Object Identifier: 10.4230/LIPIcs.MFCS.2016.58

1 Introduction

"We cannot divide by zero!" is probably the first mathematical impossibility statement everyone encounters. …

Additive Decomposition Schemes for Polynomial Functions Over Fields Miguel Couceiro, Erkko Lehtonen, Tamas Waldhauser

Additive decomposition schemes for polynomial functions over fields

Miguel Couceiro, Erkko Lehtonen, Tamás Waldhauser

To cite this version: Miguel Couceiro, Erkko Lehtonen, Tamas Waldhauser. Additive decomposition schemes for polynomial functions over fields. Novi Sad Journal of Mathematics, Institut za matematiku, 2014, 44(2), pp.89-105. hal-01090554
HAL Id: hal-01090554, https://hal.archives-ouvertes.fr/hal-01090554
Submitted on 18 Feb 2017

This paper appeared in Novi Sad J. Math. 44 (2014), 89–105.

Abstract. The authors' previous results on the arity gap of functions of several variables are refined by considering polynomial functions over arbitrary fields. We explicitly describe the polynomial functions with arity gap at least 3, as well as the polynomial functions with arity gap equal to 2 for fields of characteristic 0 or 2. These descriptions are given in the form of decomposition schemes of polynomial functions. Similar descriptions are given for arbitrary finite fields. However, we show that these descriptions do not extend to infinite fields of odd characteristic. …

Note on Total and Partial Functions in Second-Order Arithmetic

Title: Note on total and partial functions in second-order arithmetic (Proof Theory, Computation Theory and Related Topics)
Author(s): Makoto Fujiwara (藤原 誠); Takashi Sato (佐藤 隆)
Citation: RIMS Kôkyûroku (数理解析研究所講究録) (2015), 1950: 93-97
Issue Date: 2015-06
URL: http://hdl.handle.net/2433/223934
Type: Departmental Bulletin Paper
Textversion: publisher
Kyoto University

Note on total and partial functions in second-order arithmetic

Makoto Fujiwara and Takashi Sato
Mathematical Institute, Tohoku University, 6-3, Aramaki Aoba, Aoba-ku, Sendai, Miyagi, Japan

Abstract

We analyze the total and partial extendability for partial functions in second-order arithmetic. In particular, we show that the partial extendability for consistent set of finite partial functions and that for consistent sequence of partial functions are equivalent to WKL (weak König's lemma) over $\mathrm{RCA}_0$, despite the fact that their total extendability derives ACA (arithmetical comprehension).

1 Introduction

The mainstream of reverse mathematics has been developed in second-order arithmetic, whose language is set-based and functions are represented as their graphs [4]. For this reason, some results on partial functions in reverse mathematics do not correspond to those in computability theory while many results in computability theory can be transformed into the corresponding results in reverse mathematics straightforwardly. For example, it is known in computability theory that every computable partial $\{0, 1\}$-valued function has a total extension with low degree, and also that there exists a computable partial $\{0, 1\}$-valued function which has no computable total extension (cf. …

The Möbius Inverse Monoid

JOURNAL OF ALGEBRA 200, 428–438 (1998), ARTICLE NO. JA977242

The Möbius Inverse Monoid

Mark V. Lawson
School of Mathematics, University of Wales, Bangor, Gwynedd LL57 1UT, United Kingdom

Communicated by Peter M. Neumann. Received March 20, 1997.

We show that the Möbius transformations generate an F-inverse monoid whose maximum group image is the Möbius group. We describe the monoid in terms of McAlister triples. © 1998 Academic Press

1. INTRODUCTION

Möbius transformations can be handled in two ways. The naive way views them as partial functions defined on the complex plane, whereas the sophisticated way views them as functions defined on the extended complex plane. In the first part of this paper, I shall view Möbius transformations from the naive standpoint. Formally, a Möbius transformation is a partial function $\alpha$ of the complex plane, $\mathbb{C}$, having the following form: $\alpha(z) = (az + b)/(cz + d)$, where $a, b, c, d$ are complex numbers and $ad - bc \neq 0$. Of course, there are many ways to write the function $\alpha$ because multiplying $a$, $b$, $c$, and $d$ by a non-zero complex number results in a different form but the same function. This must always be borne in mind in the sequel. Möbius transformations are partial functions rather than functions because of their denominators; if the coefficient $c$ is non-zero then there is one value of $z$ satisfying $cz + d = 0$. Thus the transformations are of two types: the functions in which $c = 0$, and the partial functions in which $c \neq 0$.
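The "naive" view of a Möbius transformation as a partial function on the complex plane can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the factory name `mobius` is hypothetical, the undefined value at the pole is modelled as `None`, and the coefficients are chosen so that the arithmetic is exact.

```python
from typing import Callable, Optional


def mobius(a: complex, b: complex, c: complex, d: complex
           ) -> Callable[[complex], Optional[complex]]:
    """Return z -> (az + b)/(cz + d) as a partial function on the complex plane.

    Assumption: the result is None where cz + d = 0, i.e. where the
    transformation is undefined on the (non-extended) complex plane.
    """
    if a * d - b * c == 0:
        raise ValueError("ad - bc must be non-zero")

    def alpha(z: complex) -> Optional[complex]:
        denom = c * z + d
        if denom == 0:
            return None  # z is the single point outside the domain
        return (a * z + b) / denom

    return alpha


if __name__ == "__main__":
    f = mobius(1, 0, 1, -1)   # z / (z - 1): strictly partial, since c != 0
    g = mobius(2, 0, 2, -2)   # same function, coefficients scaled by 2
    h = mobius(2, 1, 0, 1)    # 2z + 1: total, since c == 0
    print(f(2), g(2), f(1), h(0))
```

Here `f` and `g` are written with different coefficients but agree as functions, and both are undefined at z = 1, whereas `h` has c = 0 and is defined everywhere.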