Frangipani: a Scalable Distributed File System

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

AdvFS Command Line and Application Programming Interface

AdvFS Command Line and Application Programming Interface External Reference Specification Version 1.13 JA CASL Not Inspected/Date Inspected Building ZK3 110 Spit Brook Road Nashua, NH 03062 Copyright (C) 2008 Hewlett-Packard Development Company, L.P. Preface Version 1.4 of the AdvFS Command Line and API External Reference Specification is being made available to all partners in order to allow them to design and implement code meeting the specifications contained herein. 1 INTRODUCTION 1.1 ABSTRACT 1.2 AUDIENCE 1.3 TERMS AND DEFINITIONS 1.4 RELATED DOCUMENTS 1.5 ACKNOWLEDGEMENTS

Petascale Data Management: Guided by Measurement

Petascale Data Management: Guided by Measurement. Petascale Data Storage Institute, www.pdsi-scidac.org/

MEMBER ORGANIZATIONS • Los Alamos National Laboratory (institute.lanl.gov/pdsi/) • Parallel Data Lab, Carnegie Mellon University (www.pdl.cmu.edu/) • Oak Ridge National Laboratory (www.csm.ornl.gov/) • Sandia National Laboratories (www.sandia.gov/) • National Energy Research Scientific Computing Center (pdsi.nersc.gov/) • Center for Information Technology Integration, U. of Michigan (www.citi.umich.edu/projects/pdsi/) • Pacific Northwest National Laboratory (www.pnl.gov/) • University of California at Santa Cruz (www.pdsi.ucsc.edu/)

The Computer Failure Data Repository • Goal: to collect and make available failure data from a large variety of sites • Better understanding of the characteristics of failures in the real world • Now maintained by USENIX at cfdr.usenix.org/

Filesystems Statistics Survey GOALS • Gather & build large DB of static filetree summary • Build small, non-invasive, anonymizing stats gather tool • Distribute fsstats tool via easily used web site • Encourage contributions (output of tool) from many FSs • Offer uploaded statistics & summaries to public

[Table: failure data sets; columns: name, system type, system size, time period, type of data]

[Table: fsstats survey results; columns: label, date, type, file system, total size (TB), total space (TB), # files (M), # dirs (K), max size (GB), max space (GB), max dir ents, max name bytes, avg file (MB), avg dir ents]

Oracle Database Administrator's Reference for UNIX-Based Operating Systems

Oracle® Database Administrator’s Reference 10g Release 2 (10.2) for UNIX-Based Operating Systems B15658-06 March 2009 Oracle Database Administrator's Reference, 10g Release 2 (10.2) for UNIX-Based Operating Systems B15658-06 Copyright © 2006, 2009, Oracle and/or its affiliates. All rights reserved. Primary Author: Brintha Bennet Contributing Authors: Kevin Flood, Pat Huey, Clara Jaeckel, Emily Murphy, Terri Winters, Ashmita Bose Contributors: David Austin, Subhranshu Banerjee, Mark Bauer, Robert Chang, Jonathan Creighton, Sudip Datta, Padmanabhan Ganapathy, Thirumaleshwara Hasandka, Joel Kallman, George Kotsovolos, Richard Long, Rolly Lv, Padmanabhan Manavazhi, Matthew Mckerley, Sreejith Minnanghat, Krishna Mohan, Rajendra Pingte, Hanlin Qian, Janelle Simmons, Roy Swonger, Lyju Vadassery, Douglas Williams This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this software or related documentation is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S.

Performance and Scalability of a Sensor Data Storage Framework

Aalto University School of Science, Degree Programme in Computer Science and Engineering. Paul Tötterman: Performance and Scalability of a Sensor Data Storage Framework. Master’s Thesis, Helsinki, March 10, 2015. Supervisor: Associate Professor Keijo Heljanko. Advisor: Lasse Rasinen M.Sc. (Tech.)

ABSTRACT OF MASTER’S THESIS. Author: Paul Tötterman. Title: Performance and Scalability of a Sensor Data Storage Framework. Date: March 10, 2015. Pages: 67. Major: Software Technology. Code: T-110.

Modern artificial intelligence and machine learning applications build on analysis and training using large datasets. New research and development does not always start with existing big datasets, but accumulates data over time. The same storage solution does not necessarily cover the scale during the lifetime of the research, especially if scaling up from using common workgroup storage technologies. The storage infrastructure at ZenRobotics has grown using standard workgroup technologies. The current approach is starting to show its limits, while the storage growth is predicted to continue and accelerate. Successful capacity planning and expansion requires a better understanding of the patterns of the use of storage and its growth. We have examined the current storage architecture and stored data from different perspectives in order to gain a better understanding of the situation. By performing a number of experiments we determine key properties of the employed technologies. The combination of these factors allows us to make informed decisions about future storage solutions.

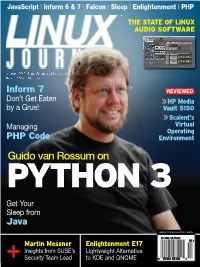

Guido Van Rossum on PYTHON 3 Get Your Sleep From

LINUX JOURNAL™ Since 1994: The Original Magazine of the Linux Community. OCTOBER 2008 | ISSUE 174. LANGUAGES: JavaScript | Inform 6 & 7 | Falcon | Sleep | Enlightenment | PHP. THE STATE OF LINUX AUDIO SOFTWARE. Inform 7: Don’t Get Eaten by a Grue! Managing PHP Code. REVIEWED: HP Media Vault 5150, Scalent’s Virtual Operating Environment. Guido van Rossum on PYTHON 3. Get Your Sleep from Java. Martin Messner: Insights from SUSE’s Security Team Lead. Enlightenment E17: Lightweight Alternative to KDE and GNOME. www.linuxjournal.com $5.99US $5.99CAN

MULTIPLY ENERGY EFFICIENCY AND MAXIMIZE COOLING. THE WORLD’S FIRST QUAD-CORE PROCESSOR FOR MAINSTREAM SERVERS. THE NEW QUAD-CORE INTEL® XEON® PROCESSOR 5300 SERIES DELIVERS UP TO 50% MORE PERFORMANCE* THAN PREVIOUS INTEL XEON PROCESSORS IN THE SAME POWER ENVELOPE. BASED ON THE ULTRA-EFFICIENT INTEL® CORE™ MICROARCHITECTURE, IT’S THE ULTIMATE SOLUTION FOR MANAGING RUNAWAY COOLING EXPENSES. LEARN WHY GREAT BUSINESS COMPUTING STARTS WITH INTEL INSIDE. VISIT INTEL.CO.UK/XEON / INTEL.COM/XEON.

RELION 2612 • Quad-Core Intel® Xeon® processor • …U server with up to … TB • Ideal for cost-effective File/DB applications • RAS: Reliability, Availability, Serviceability • STARTING AT $2429.00

RELION 1670 • Quad-Core Intel® Xeon® processor • Intel “Seaburg” chipset with … MHz front side bus • Up to … GB RAM in …U class, leading memory capacity • Management features to support large cluster deployments • STARTING AT $1969.00

Penguin Computing provides turnkey x86/Linux clusters for high performance technical computing applications.

Ted Ts'o on Linux File Systems

Ted Ts’o on Linux File Systems: An Interview. RIK FARROW

Rik Farrow is the Editor of ;login:.

Theodore Ts’o is the first North American Linux Kernel Developer, having started working with Linux in September 1991. He also served as the tech lead for the MIT Kerberos V5 development team, and was the architect at IBM in charge of bringing real-time Linux in support of real-time Java to the US Navy.

I ran into Ted Ts’o during a tutorial luncheon at LISA ’12, and that later sparked an email discussion. I started by asking Ted questions that had puzzled me about the early history of ext2 having to do with the performance of ext2 compared to the BSD Fast File System (FFS). I had met Rob Kolstad, then president of BSDi, because of my interest in the AT&T lawsuit against the University of California and BSDi. BSDi was being sued for, among other things, having a phone number that could be spelled 800-ITS-UNIX. I thought that it was important for the future of open source operating systems that AT&T lose that lawsuit. That said, when I compared the performance of early versions of Linux to the current version of BSDi, I found that they were closely matched, with one glaring exception. Unpacking tar archives using Linux (likely .9) was blazingly fast compared to BSDi. I asked Rob, and he explained that the issue had to do with synchronous writes, finally clearing up a mystery for me. Now I had a chance to ask Ted about the story from the Linux side, as well as other questions

Unix Backup and Recovery

Unix Backup and Recovery W. Curtis Preston Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Disclaimer: This netLibrary eBook does not include data from the CD-ROM that was part of the original hard copy book. Unix Backup and Recovery by W. Curtis Preston Copyright (c) 1999 O'Reilly & Associates, Inc. All rights reserved. Printed in the United States of America. Published by O'Reilly & Associates, Inc., 101 Morris Street, Sebastopol, CA 95472. Editor: Gigi Estabrook Production Editor: Clairemarie Fisher O'Leary Printing History: November 1999: First Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O'Reilly logo are registered trademarks of O'Reilly & Associates, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O'Reilly & Associates, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. The association between the image of an Indian gavial and the topic of Unix backup and recovery is a trademark of O'Reilly & Associates, Inc. While every precaution has been taken in the preparation of this book, the publisher assumes no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. This book is printed on acid-free paper with 85% recycled content, 15% post-consumer waste. O'Reilly & Associates is committed to using paper with the highest recycled content available consistent with high quality. ISBN: 1-56592-642-0 This book is dedicated to my lovely wife Celynn, my beautiful daughters Nina and Marissa, and to God, for continuing to bless my life with gifts such as these.

Arhitectura Sistemelui De Fisiere FAT

Faculty of Electronics, Telecommunications and Information Technology, Universitatea „Politehnica”, Bucuresti. Architecture of the FAT File System. Coordinating professor: Assoc. Prof. Dr. Eng. Stefan Stancescu. Student: Patriche Nicolae-Cristian.

Contents: Introduction; Uses, technical evolution; FAT logical sectoring; Extensions; Derivatives; Technical design; The boot sector; BIOS Parameter Block; Extended BIOS Parameter Block; FAT32 Extended BIOS Parameter Block; Exceptions; The FS information sector; VFAT long file names; Comparisons between file systems; Conclusions; References.

1 Introduction. 1. Overview. File Allocation Table (FAT) is the name of a file system architecture, and an entire family of industry file systems currently uses this architecture. The FAT file system is a simple and robust file system. It offers good performance even in more laborious implementations, but it cannot offer the same performance, reliability, and scalability as some modern file systems. However,

Hp Openview Storage Data Protector 5.1 Platform & Integration Support

hp OpenView storage data protector 5.1 Platform & Integration support matrices. Version: 1.0. Edition date: May 15, 2003.

Supported operating systems (Data Protector component: supported operating systems; bracketed numbers are the matrix's footnote markers)

Cell Manager: Windows NT 4.0 [6] • Windows XP PRO • Windows 2000 • Windows 2003 (32-bit) • HP-UX 11.0 [3], 11.11 [3,5], 11.20 [1,3,5] • Solaris 7, 8 & 9

Installation Server: Windows NT 4.0 [6] • Windows XP PRO • Windows 2000 • Windows 2003 (32-bit) • HP-UX 11.0 [3], 11.11 [3,5], 11.20 [1,3,5] • Solaris 7, 8 & 9

Graphical User Interface [7]: Windows XP HE • Windows XP PRO • Windows XP 64-bit • Windows NT 4.0 • Windows 2000 • Windows 2003 (32-bit) • HP-UX 11.0 [3], 11.11 [3,5], 11.20 [1,3,5] • Solaris 7, 8

Managers-of-Managers (MoM): Windows NT 4.0 • Windows 2000 • Windows XP PRO • Windows 2003 (32-bit) • HP-UX 11.0 [3], 11.11 [3,5], 11.20 [1,3,5] • Solaris 7, 8 & 9

Backup Device Server (media agent), including robotic control (for Linux, only 32-bit is supported): Windows NT 4.0, Windows 2000 • Windows XP PRO, XP (64-bit) • Windows 2003 (32-bit) • Windows 2003 (64-bit) • Novell NetWare 4.2 [2], 5.1 [2], 6.0 [2] • HP-UX (PA-RISC) -- 11.0 [3], 11.11 [3,5] • HP-UX (IA64) -- 11.20 [1,3,5], 11.22 [3,5] • Tru64 5.xx • OpenVMS 7.3-1 [4] • Sun Solaris 2.6, 7, 8, 9 • MPE/iX 6.0 [2], 6.5 [2], 7.0 [2], 7.5 [2] • Linux Redhat 7.x, 8.x • RedHat Enterprise Linux -- Advanced Server 2.1 [8] • Linux SuSe 7.x, 8.x • Linux Debian 3.0

Backup Device Server (media agent), without robotic control: the above list plus the following: IBM AIX 4.3.x, 5L ver. 5.1, 5.2 • SNI Sinix 5.4.3, 5.4.4 • SCO OpenServer 5.0.5

• Windows NT 4.0 Backup

Rosetta Stone for Unix

This custom drawing feature now works in both Mozilla and in IE 5 or better for Solaris, HP-UX, or Windows. Use click, shift-click, control-click, and the "set" and "clear" buttons to select the desired subset of OSs. Then select "Draw table" to launch a new window with your custom table. Thanks to Mårten Svantesson for the improved JavaScript.

A Sysadmin's Unixersal Translator (ROSETTA STONE) OR What do they call that in this world? Contributions and corrections gratefully accepted. Please help us fill in the blanks. New "tasks" are welcome, too! PDF version (for best results print US legal size, landscape orientation. If your browser plug-in has trouble with the PDF, then download it and load it in stand-alone acroread). Framed version. Based on Pat Wilson's version.

OS versions in parentheses; e.g. 10+ means version 10 and greater; 9- means version 9 and previous. $=extra cost. If not specified, commands are in one of the following directories: /usr/bin, /usr/sbin, /sbin, /bin

Categories: hardware, firmware, devices | disks | kernel, boot, swap | files, volumes | networking | security, backup | software, patching, tracing, logging | references

TASK \ OS: AIX | Darwin | DG/UX | FreeBSD | HP-UX | IRIX | Linux | NetBSD | OpenBSD | Reliant | SCO UnixWare | Solaris | SunOS 4 | Tru64 | Ultrix | UNICOS

OS notes: Darwin: The Open Source foundation for Mac OS | Linux: (rh) = Red Hat, Mandrake, Suse,.. | Reliant: Fujitsu Siemens. Based on DC/OSX from | SCO UnixWare: SCO UnixWare 7.0.1 & SCO UnixWare 7.1.1, SVR4-based | Solaris: Solaris 2.0-2.6, 7, 8, 9 | SunOS 4: Solaris 1.* | Tru64: (Digital Unix, OSF/1) | UNICOS: Cray Inc., formed from the March 2000 merger of

Filesystems in Livecd

Filesystems in LiveCD, J. R. Okajima, 2010/12. In the majority of cases, a LiveCD uses Squashfs, tmpfs, and AUFS. This report compares the use of Squashfs with other filesystems for implementing a LiveCD. Ancient Age: A long, long time ago, there was an approach to implementing a LiveCD by a “shadow directory”. The name may be rather old, but the technique was used very widely. For example, when we have source files under src/ and build them in that directory for multiple architectures, we need to remove all the built files before each build, which is a waste of time. To address this problem, make another directory obj/, create symbolic links under it, and build under obj/. This is the shadow directory approach. All the built object files are created under obj/, and the developers do not need to care about the difference in build directory. A LiveCD implemented by this approach (read-only FS + tmpfs + shadow dir) succeeded in redirecting file I/O, e.g. reading from the read-only FS and writing to tmpfs, but it produced a huge number of symbolic links. Obviously it was not the best approach. Squashfs and AUFS: Later LiveCDs adopted a stackable filesystem, e.g. Unionfs or AUFS. Both introduce a hierarchy of mounted filesystems: they mount a writable filesystem transparently over read-only filesystems and provide the essential features of redirecting file I/O to layers and of internal copy-up between layers, which makes it possible to logically modify files on the read-only filesystems. Since more than one read-only filesystem can be specified, a LiveCD can handle each of them as an application package, such as a compiler package or an office suite.
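The shadow directory approach described in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not code from the report: the helper name `make_shadow_tree` and the `src/` and `obj/` layout in the demo are hypothetical, and a real LiveCD would point the links at a read-only filesystem and place the shadow tree on tmpfs.

```python
import os
import tempfile


def make_shadow_tree(src_root: str, shadow_root: str) -> int:
    """Mirror src_root's directory layout under shadow_root, symlinking every file.

    Hypothetical helper for illustration; returns the number of links created.
    """
    links = 0
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel = os.path.relpath(dirpath, src_root)
        shadow_dir = os.path.normpath(os.path.join(shadow_root, rel))
        os.makedirs(shadow_dir, exist_ok=True)
        for name in filenames:
            link = os.path.join(shadow_dir, name)
            if not os.path.lexists(link):
                # One symlink per source file: this is why the link count grows so large.
                os.symlink(os.path.abspath(os.path.join(dirpath, name)), link)
                links += 1
    return links


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "src")
        obj = os.path.join(tmp, "obj")
        os.makedirs(os.path.join(src, "lib"))
        for rel in ("main.c", os.path.join("lib", "util.c")):
            with open(os.path.join(src, rel), "w") as f:
                f.write("/* source */\n")

        print(make_shadow_tree(src, obj), "links created")

        # Build output is written next to the links, so src/ stays untouched.
        with open(os.path.join(obj, "main.o"), "w") as f:
            f.write("object code\n")
        print("src/main.o exists:", os.path.exists(os.path.join(src, "main.o")))
```

Reads through the shadow tree follow the links back to the original files, while new files land only in the shadow tree. The cost is one link per file, which is the drawback the excerpt points out and the reason stackable filesystems replaced this scheme.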

Administration Guide - Tru64 File System Idataagent

Administration Guide - Tru64 File System iDataAgent TABLE OF CONTENTS OVERVIEW Introduction Key Features Simplified Data Management Point-In-Time Recovery SnapProtect Backup Backup and Recovery Failovers Efficient Job Management and Reporting Block Level Deduplication Add-On Components SRM for Unix File System 1-Touch Add-On Archiver Content Indexing and Search Desktop Browse Terminology SYSTEM REQUIREMENTS SUPPORTED FEATURES GETTING STARTED GETTING STARTED - DEPLOYMENT Where to Install Method 1: Interactive Install Method 2: Install Software from CommCell Console GETTING STARTED - DEPLOYMENT ON A CLUSTER Where to Install Installing the Tru64 File System iDataAgent - Clustered Environment GETTING STARTED CONFIGURATION GETTING STARTED BACKUP Perform a Backup GETTING STARTED RESTORE Perform a Restore ADVANCED ADVANCED CONFIGURATION Understanding the CommCell Console Configuring User Defined Backup Sets Creating a Backup Set for On Demand Backups Creating a Backup Set for Wild Card Support Configuring User Defined Subclients Creating a Subclient to Backup Specific Files Creating a Subclient to Backup Symbolic Links Creating a Subclient to Backup Raw Devices Configuring Backups for Hard Links Configuring Backups of NFS-Mounted File Systems Configuring Backups for Locked Files Excluding Locked Files in NFS Mounted File Systems Configuring Backups for Macintosh Files Excluding Job Results Folder from Backups Including Skipped