Offensive Direction Inference in Real-World Football Video

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Topic Model Approach to Represent and Classify American Football Plays

A Topic Model Approach to Represent and Classify American Football Plays. Jagannadan Varadarajan (1), Indriyati Atmosukarto (1), Shaunak Ahuja (1), Bernard Ghanem (1, 2), Narendra Ahuja (1, 3). (1) Advanced Digital Sciences Center of Illinois, Singapore; (2) King Abdullah University of Science and Technology, Saudi Arabia; (3) University of Illinois at Urbana-Champaign, IL, USA. Automated understanding of group activities is an important area of research with applications in many domains such as surveillance, retail, health care, and sports. Despite active research in automated activity analysis, the sports domain is extremely under-served. One particularly difficult but significant sports application is the automated labeling of plays in American Football (‘football’), a sport in which multiple players interact on a playing field during structured time segments called plays. Football plays vary according to the initial formation and trajectories of players, making the task of play classification quite challenging. [Figure: input player trajectories; feature extraction (motion angle, time, player role) into documents and labels; MedLDA modeling; output play type templates and labels (run left, pass left, run right, pass right, run mid, pass mid).] We define a play as a unique combination of offense players’ trajectories as shown in Fig. -

Automatic Annotation of American Football Video Footage for Game Strategy Analysis

https://doi.org/10.2352/ISSN.2470-1173.2021.6.IRIACV-303 © 2021, Society for Imaging Science and Technology. Automatic Annotation of American Football Video Footage for Game Strategy Analysis. Jacob Newman, Jian-Wei Lin, Dah-Jye Lee, and Jen-Jui Liu, Brigham Young University, Provo, Utah, USA. Abstract: Annotation and analysis of sports videos is a challenging task that, once accomplished, could provide various benefits to coaches, players, and spectators. In particular, American Football could benefit from such a system to provide assistance in statistics and game strategy analysis. Manual analysis of recorded American football game videos is a tedious and inefficient process. In this paper, as a first step to further our research for this unique application, we focus on locating and labeling individual football players from a single overhead image of a football play immediately before the play begins. A pre-trained deep learning network is used to detect and locate the players in the image. ... helping coaches study and understand both how their team plays and how other teams play. This could assist them in game planning before the game or, if allowed, making decisions in real time, thereby improving the game. This paper presents a method of player detection from a single image immediately before the play starts. YOLOv3, a pre-trained deep learning network, is used to detect the visible players, followed by a ResNet architecture which labels the players. This research will be a foundation for future research in tracking key players during a video. With this tracking data, automated game analysis can be accomplished. -

Using Camera Motion to Identify Types of American Football Plays

USING CAMERA MOTION TO IDENTIFY TYPES OF AMERICAN FOOTBALL PLAYS. Mihai Lazarescu, Svetha Venkatesh, School of Computing, Curtin University of Technology, GPO Box U1987, Perth 6001, W.A. ABSTRACT: This paper presents a method that uses camera motion parameters to recognise 7 types of American Football plays. The approach is based on the motion information extracted from the video and it can identify short and long pass plays, short and long running plays, quarterback sacks, punt plays and kickoff plays. This method has the advantage that it is fast and it does not require player or ball tracking. The system was trained and tested using 782 plays and the results show that the system has an overall classification accuracy of 68%. 1. INTRODUCTION: The automatic indexing and retrieval of American Football plays is a very difficult task for which there are currently no tools available. The difficulty comes from both the complexity of American Football itself as well as the image processing involved for an accurate analysis of the plays. ... learning algorithm used to train the system. The results are described in Section 5 with the conclusions presented in Section 6. 2. PREVIOUS WORK: Motion parameters have been used in the past since they provide a simple, fast and accurate way to search multimedia databases for specific shots (for example a shot of a landscape is likely to involve a significant amount of pan, whilst a shot of an aerobatic sequence is likely to contain roll). Previous work done towards the recognition of American Football plays has generally involved methods that combine clues from several sources such as video, audio and text scripts. A method for tracking players in American Football greyscale images that makes use of context knowledge is de- -

The Passing Tree Is the Number System Used for the Passing Routes

The Passing Tree is the number system used for the passing routes. All routes are the same for ALL receivers. The route assignment depends on the position of the receiver and how it is called at the line of scrimmage. This system has all ODD number routes flowing toward the center of the field, while EVEN number routes are toward the sideline. All routes are called from LEFT to RIGHT. Inside Tight Ends, Eligible Receivers (I), are also called from LEFT to RIGHT. The above passing tree assumes the quarterback is on the left side of the route runner labeled. Below are the routes used in this playbook: ROUTE NAMES: #1 – ARROW/SLANT. Slant 45 degrees toward middle. Expect the ball quickly. #3 – DRAG. Drive out 5 yards then drag 90 degrees toward middle. #5 – CURL ROUTE/BUTTON HOOK. Drive out 5-7 yards, slow and gather yourself, curl in towards QB, establish a wide stance and frame yourself. Find an open or void area. #7 – POST. Drive out 8 yards, show hand fake and look back at QB, then sprint to deep post. Opposite of Flag/Corner Route. #9 – STREAK/FLY. Can be a straight sprint or "go" route off the line of scrimmage. #8 – HITCH N’ GO. Drive out 5-7 yards, curl away from QB, show hand fake (sell it!), and then roll out and up the field. #6 – CORNER. Drive out 8 yards, show hand fake and look back at QB, then sprint to deep corner. #4 – OUT. Drive out 5 yards then drag 90 degrees toward sideline. -
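The odd/even numbering rule in the passing tree above can be captured in a small lookup. This is only an illustrative sketch: the names `ROUTE_NAMES` and `route_direction` are hypothetical and not part of the playbook, and the function applies the general odd/even rule literally, even though the playbook describes #9 (Streak/Fly) as a straight sprint.

```python
# Route numbers and names as listed in the playbook excerpt.
ROUTE_NAMES = {
    1: "Arrow/Slant",
    3: "Drag",
    5: "Curl Route/Button Hook",
    7: "Post",
    9: "Streak/Fly",
    8: "Hitch N' Go",
    6: "Corner",
    4: "Out",
}


def route_direction(number: int) -> str:
    """Return the general flow of a route under the odd/even rule.

    Odd-numbered routes flow toward the center of the field,
    even-numbered routes flow toward the sideline.
    """
    if number not in ROUTE_NAMES:
        raise ValueError(f"route #{number} is not in this passing tree")
    return "center" if number % 2 == 1 else "sideline"


if __name__ == "__main__":
    # Print each route with its general direction.
    for number in sorted(ROUTE_NAMES):
        print(f"#{number} {ROUTE_NAMES[number]}: toward {route_direction(number)}")
```

A table keyed by route number keeps the call at the line of scrimmage and the direction rule in one place, so a new route only needs one entry.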

Capital District Youth Football League (CDYFL) Cdyfootball.Com

Capital District Youth Football League (CDYFL) CDYFootball.com. 2019 Member Programs: Averill Park, Ballston Spa, Bethlehem, Broadalbin-Perth, Burnt Hills, Colonie, Columbia, Mohonasen, Niskayuna, Schalmont, Scotia-Glenville, Shaker. Tackle Rules: Pages 2-7. Flag Rules: Pages 8-11. Codes of Conduct: Pages 12-14. Capital District Tackle Football Rules. The goal of the Capital District Youth Football League is to accommodate every child in our community that is interested in playing football. We want everyone to have the opportunity to play football, regardless of size or experience. Our league is geared towards teaching the fundamentals of football, while ensuring that each child has a great experience. Every member of this league understands that player development is never compromised by competition. Game Conditions/Practice Participation: 1. Days 1 and 2 will be helmets only. 2. Days 3 and 4 will be shoulder pads and helmets. 3. Day 5 is the first allowed full contact practice. 10 practice hours are needed before full contact and an additional 10 hours are needed before game play. Games will be played on a school regulation size football field (100 yards with 10 yards for first down). Games are played with 11 players on each team on the field at the same time. Games will consist of two 30-minute halves. Each half will have a 25-minute running clock, with the clock stopping only for injuries and timeouts. The final 5 minutes of each half will have the clock stopped for incomplete passes, penalties and out of bounds, along with injuries and timeouts. No “quarterback sneaks” under center are permitted. -

Offensive Formations That Are Reasonably Well Known

In 2002 I received an email from another youth coach, congratulating me on my web site and asking about a particular offensive system. The coach had already chosen his offense, and mentioned that he intended to run about thirty-five offensive plays. That sentence sort of brought me to a screeching halt. Now, this coach hadn't asked for my advice, but I felt compelled to mention that, in my opinion, that was an awful lofty number of plays for a youth football team. I drew a comparison between the playbook at Tomales High, where I coach now, and his youth team, pointing out that even at the varsity high school level, we feature an offensive system with only eight running and six passing plays. I think that this coach fell into one of the more common traps set for the unwary youth football coach. Unlike Little League baseball, or youth basketball, a football offense is an incredibly complex system of interconnected parts. No other sport in America features eleven separate players in so many disparate positions, each with its own responsibilities and techniques. No other sport relies so heavily upon utter teamwork. In basketball, Michael Jordan was able to dominate the NBA and win multiple championships for the Chicago Bulls virtually on his own (not to detract from the skills and hard work of his teammates). In soccer, only a few players can traverse the entire field at any one time, so while the sport includes eleven players, the actual play rarely involves more than three from any one side. -

8 Reasons for the SW

SINGLE WING ATTACK 8 Reasons Why The Single-Wing is Superior to Other Youth League and High School Offensive Systems 1. It’s Radical. Odds are good that no one else in the league runs anything remotely like it. That makes it hard to prepare for. On top of that, how much luck do you think the opposing team will have simulating it in practice with their scout team? Since nearly all the books on this offense are out of print, it’s a major challenge to get information on how to defend it. 2. It’s Tested. Coaches have been developing, refining and polishing the single-wing offense for nearly a century. How many offenses can you say that about? You can run more effective and complementary series out of one or two formations with the single-wing than perhaps any other offense. Considering the fact that so few teams run the single-wing, the number that have recently won high school state championships (or have placed in the top 4) with it is nothing short of amazing. Plus, there have been many single-wing youth teams winning championships and breaking scoring records in recent years. 3. It’s Powerful. In the single-wing, players are not wasted just taking the snap and handing off. Everyone is either a blocker or carries out a meaningful fake. What play could possibly be more powerful than the single-wing TB wedge? – a 9-man wedge with the FB as a lead blocker. How about a weak side power play with 5 lead blockers? And in the single-wing, the power sweep is really the POWER sweep. -

For the Win: Risk-Sensitive Decision-Making in Teams

Journal of Behavioral Decision Making, J. Behav. Dec. Making, 30: 462–472 (2017) Published online 1 June 2016 in Wiley Online Library (wileyonlinelibrary.com) DOI: 10.1002/bdm.1965 For the Win: Risk-Sensitive Decision-Making in Teams JOSH GONZALES,1 SANDEEP MISHRA2* and RONALD D. CAMP II2 1Department of Psychology, University of Regina, Regina, Saskatchewan Canada 2Faculty of Business Administration, University of Regina, Regina, Saskatchewan Canada ABSTRACT Risk-sensitivity theory predicts that decision-makers should prefer high-risk options in high need situations when low-risk options will not meet these needs. Recent attempts to adopt risk-sensitivity as a framework for understanding human decision-making have been promising. However, this research has focused on individual-level decision-making, has not examined behavior in naturalistic settings, and has not examined the influence of multiple levels of need on decision-making under risk. We examined group-level risk-sensitive decision-making in two American football leagues: the National Football League (NFL) and the National College Athletic Association (NCAA) Division I. Play decisions from the 2012 NFL (Study 1; N = 33 944), 2013 NFL (Study 2; N = 34 087), and 2012 NCAA (Study 3; N = 15 250) regular seasons were analyzed. Results demonstrate that teams made risk-sensitive decisions based on two distinct needs: attaining first downs (a key proximate goal in football) and acquiring points above parity. Evidence for risk-sensitive decisions was particularly strong when motivational needs were most salient. These findings are the first empirical demonstration of team risk-sensitivity in a naturalistic organizational setting. Copyright © 2016 John Wiley & Sons, Ltd. -

Flex Football Rule Book – ½ Field

Flex Football Rule Book – ½ Field This rule book outlines the playing rules for Flex Football, a limited-contact 9-on-9 football game that incorporates soft-shelled helmets and shoulder pads. For any rules not specifically addressed below, refer to either the NFHS rule book or the NCAA rule book based on what serves as the official high school-level rule book in your state. Flex 1/2 Field Setup ● The standard football field is divided in half with the direction of play going from the mid field out towards the end zone. ● 2 Flex Football games are to be run at the same time, going in opposing directions towards the end zones on their respective fields. ● The ball will start play at the 45-yard line at game start and after turnovers. ● The direction of offensive play will go towards the existing end zones. ● If a ball is intercepted, the defender only needs to return the interception to the 45-yard line for it to be considered a defensive touchdown. Team Size and Groupings ● Each team has nine players on the field (9 on 9). ● A team can play with eight if it chooses, losing an eligible receiver on offense and a non-lineman on defense. ● If a team is two players short, it will automatically forfeit the game. However, the opposing coach may lend players in order to allow the game to be played as a scrimmage. The officials will call the game as if it were a regular game. ● Age ranges can be defined as common age groupings (9-and-under, 12-and-under) or school grades (K-2, junior high), based on the decision of each organization. -

Axiomatic Design of a Football Play-Calling Strategy

Axiomatic Design of a Football Play-Calling Strategy. A Major Qualifying Project Report Submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for the Degree of Bachelor of Science in Mechanical Engineering by Liam Koenen and Camden Lariviere. April 28th, 2016. Approved By: Prof. Christopher A. Brown, Advisor. Abstract: The purpose of this MQP was to design an effective play-calling strategy for a football game. An Axiomatic Design approach was used to establish a list of functional requirements and corresponding design parameters and functional metrics. The two axioms to maintain independence and minimize information content were used to generate a final design in the form of a football play card. The primary focus was to develop a successful play-calling strategy that could be consistently repeatable by any user, while also being adaptable over time. Testing of the design solution was conducted using a statistical-based computer simulator. Acknowledgements: We would like to extend our sincere gratitude to the following people, as they were influential in the successful completion of our project. We would like to thank Professor Christopher A. Brown for his advice and guidance throughout the yearlong project and Richard Henley for sharing his intellect and thought process about Axiomatic Design and the role -

Maximum-Football for the PC Computer System

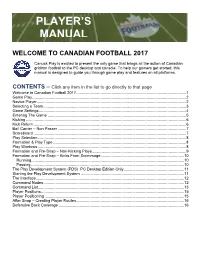

PLAYER’S MANUAL. WELCOME TO CANADIAN FOOTBALL 2017. Canuck Play is excited to present the only game that brings all the action of Canadian gridiron football to the PC desktop and console. To help our gamers get started, this manual is designed to guide you through game play and features on all platforms. CONTENTS: Welcome to Canadian Football 2017; Game Play; Novice Player; Selecting a Team; Game Settings; Entering The Game; Kicking; Kick Return -

6-2 V Double Wing

6-2 V DOUBLE WING SCHEME: 4 DOWN LINEMEN - 4 LINEBACKERS - 3 DEFENSIVE BACKS. OR: 6 DOWN LINEMEN - 2 LINEBACKERS - 3 DEFENSIVE BACKS. WILL & SAM LB'S MAY BE IN A 3 POINT STANCE. PLAY MAN COVERAGE ON THE 5 ELIGIBLE RECEIVERS COUNTING OUTSIDE IN: CORNERS COVER #1, LINEBACKERS COVER #2, FREE SAFETY COVERS #3. THE INSIDE LB'S OR FREE SAFETY WILL MAKE A STRENGTH CALL THAT TELLS THE DEFENSE WHERE TO LINE UP. THE STRENGTH CALL CAN BE MADE TO: A FORMATION OR BACKFIELD SET; THE FIELD OR BOUNDARY; AN INDIVIDUAL PLAYER. 6-2 V DOUBLE WING ASSIGNMENTS. TACKLES: 2 TECHNIQUE. KEY THE BALL & GUARD. RUN: DEFEND THE A GAP. KEEP INSIDE ARM & LEG FREE. PASS: RUSH THE QB IN THE A GAP. ENDS: 5 TECHNIQUE. KEY THE BALL AND TACKLE. RUN TO YOU: DEFEND THE C GAP WITH OUTSIDE ARM & LEG FREE. RUN AWAY: SQUEEZE DOWN THE L.O.S. AND PURSUE THE FOOTBALL. PASS: RUSH THE QB IN THE C GAP. SAM & WILL LB'S: CREASE TECHNIQUE. TILT INSIDE TO THE CREASE. KEY THE BALL AND TE. RUN TO YOU: DEFEND THE D GAP BY ATTACKING THE TE/WING CREASE. STAY IN THE CREASE OR MAKE THE TACKLE. RUN AWAY: RE-DIRECT DOWN THE L.O.S. LOOKING FOR CUTBACK, THEN PURSUE THE FOOTBALL. VS PASS: CONTAIN PASS RUSH. INSIDE LB'S: STACK THE B GAP AT 5 YARDS DEPTH. KEY: GUARD & NEAR RB. RUN PLAY TO YOU: B GAP TO SCRAPE. PLAY AWAY: SLOW SCRAPE TO FAR B GAP. THINK CUTBACK. PASS: COVER #2 ELIGIBLE (TIGHT END) MAN TO MAN.
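The outside-in man-coverage rule in the scheme above (corners on #1, linebackers on #2, free safety on #3) can be sketched as a simple numbering step. The function name `assign_coverage` and the receiver labels in the usage example are hypothetical, chosen only for illustration; the scheme itself specifies only the numbering rule.

```python
# Defender responsible for each eligible receiver, counted outside in.
COVERAGE_BY_NUMBER = {1: "corner", 2: "linebacker", 3: "free safety"}


def assign_coverage(receivers_outside_in):
    """Map each receiver (listed from the outside in) to its man defender.

    The first receiver in the list is #1, the second is #2, and so on.
    """
    assignments = {}
    for number, receiver in enumerate(receivers_outside_in, start=1):
        if number not in COVERAGE_BY_NUMBER:
            raise ValueError(f"no coverage rule for eligible receiver #{number}")
        assignments[receiver] = COVERAGE_BY_NUMBER[number]
    return assignments


if __name__ == "__main__":
    # Hypothetical labels for one side of a double wing formation.
    print(assign_coverage(["wing", "tight end", "back"]))
```

Counting from the outside in means the assignment follows where a receiver lines up rather than who he is, which is why the list order is the only input.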