Analyzing Password Strength and Efficient Password Cracking

Total Page:16

Recommended publications

GPU-Based Password Cracking on the Security of Password Hashing Schemes Regarding Advances in Graphics Processing Units

Radboud University Nijmegen, Faculty of Science, Kerckhoffs Institute. Master of Science Thesis. GPU-based Password Cracking: On the Security of Password Hashing Schemes regarding Advances in Graphics Processing Units, by Martijn Sprengers [email protected] Supervisors: Dr. L. Batina (Radboud University Nijmegen), Ir. S. Hegt (KPMG IT Advisory), Ir. P. Ceelen (KPMG IT Advisory). Thesis number: 646. Final Version. Abstract: Since users rely on passwords to authenticate themselves to computer systems, adversaries attempt to recover those passwords. To prevent such a recovery, various password hashing schemes can be used to store passwords securely. However, recent advances in the graphics processing unit (GPU) hardware challenge the way we have to look at secure password storage. GPUs have proven to be suitable for cryptographic operations and provide a significant speedup in performance compared to traditional central processing units (CPUs). This research focuses on the security requirements and properties of prevalent password hashing schemes. Moreover, we present a proof of concept that launches an exhaustive search attack on the MD5-crypt password hashing scheme using modern GPUs. We show that it is possible to achieve a performance of 880 000 hashes per second, using different optimization techniques. Therefore our implementation, executed on a typical GPU, is more than 30 times faster than equally priced CPU hardware. With this performance increase, 'complex' passwords with a length of 8 characters are now becoming feasible to crack. In addition, we show that between 50% and 80% of the passwords in a leaked database could be recovered within 2 months of computation time on one Nvidia GeForce 295 GTX.
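To put the quoted rate in perspective, here is a back-of-the-envelope sketch of how long a full exhaustive search would take at 880 000 hashes per second. Only that rate comes from the abstract; the character-set sizes (26, 62, 95) and the helper names are illustrative assumptions, and the result is a worst-case figure for a single device, not a result from the thesis.

```python
# Rough exhaustive-search estimate. Only the hash rate is taken from the
# abstract; the character-set sizes below are illustrative assumptions.
HASHES_PER_SECOND = 880_000
SECONDS_PER_DAY = 86_400


def keyspace(charset_size: int, length: int) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return charset_size ** length


def days_to_exhaust(charset_size: int, length: int,
                    rate: float = HASHES_PER_SECOND) -> float:
    """Days needed to try every candidate at `rate` hashes per second."""
    return keyspace(charset_size, length) / rate / SECONDS_PER_DAY


if __name__ == "__main__":
    for name, size in [("lowercase", 26),
                       ("alphanumeric", 62),
                       ("printable ASCII", 95)]:
        print(f"{name:>16}: {days_to_exhaust(size, 8):,.1f} days for length 8")
```

On average a search succeeds after covering half the keyspace, so the expected time is about half of each figure.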

Study on Massive-Scale Slow-Hash Recovery Using Unified

Symmetry, Article. Study on Massive-Scale Slow-Hash Recovery Using Unified Probabilistic Context-Free Grammar and Symmetrical Collaborative Prioritization with Parallel Machines. Tianjun Wu 1,*,†, Yuexiang Yang 1,†, Chi Wang 2,† and Rui Wang 2,† 1 College of Computer, National University of Defense Technology, Changsha 410073, China; [email protected] 2 VeriClouds Co., Seattle, WA 98105, USA; [email protected] (C.W.); [email protected] (R.W.) * Correspondence: [email protected]; Tel.: +86-13-54-864-2846 † The authors contribute equally to this work and are co-first authors. Received: 23 February 2019; Accepted: 26 March 2019; Published: 1 April 2019. Abstract: Slow-hash algorithms are proposed to defend against traditional offline password recovery by making the hash function very slow to compute. In this paper, we study the problem of slow-hash recovery on a large scale. We attack the problem by proposing a novel concurrent model that guesses the target password hash by leveraging known passwords from a largest-ever password corpus. Previously proposed password-reuse learning models are specifically designed for targeted online guessing for a single hash and thus cannot be efficiently parallelized for massive-scale offline recovery, which is demanded by modern hash-cracking tasks. In particular, because the size of a probabilistic context-free grammar (PCFG for short) model is non-trivial and keeping track of the next most probable password to guess across all global accounts is difficult, we choose clever data structures and only expand transformations as needed to make the attack computationally tractable. Our adoption of a max-min heap, which globally ranks weak accounts for both expanding and guessing according to unified PCFGs and allows for concurrent global ranking, significantly increases the number of hashes that can be recovered within a limited time.
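As a small illustration of the PCFG idea mentioned in the abstract, the sketch below reduces each password to a base structure such as L8D3S1 and estimates structure probabilities from a training list. The L/D/S notation follows common usage in PCFG password models and is an assumption here; this is not the paper's unified model, and the function names are hypothetical.

```python
from collections import Counter
from itertools import groupby


def char_class(ch: str) -> str:
    """Map a character to a coarse class: Letter, Digit, or Symbol."""
    if ch.isalpha():
        return "L"
    if ch.isdigit():
        return "D"
    return "S"


def base_structure(password: str) -> str:
    """Collapse runs of the same class, e.g. 'password123!' -> 'L8D3S1'."""
    return "".join(
        f"{cls}{len(list(run))}"
        for cls, run in groupby(password, key=char_class)
    )


def structure_probabilities(passwords):
    """Relative frequency of each base structure in a training list."""
    counts = Counter(base_structure(p) for p in passwords)
    total = sum(counts.values())
    return {structure: n / total for structure, n in counts.items()}
```

A full PCFG model would also learn the probability of each terminal (for example, which three-digit strings fill a D3 slot) and generate guesses in decreasing order of probability.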

Analysis of Password Cracking Methods & Applications

The University of Akron, IdeaExchange@UAkron. The Dr. Gary B. and Pamela S. Williams Honors College, Honors Research Projects, Spring 2015. Analysis of Password Cracking Methods & Applications. John A. Chester, The University of Akron, [email protected] Follow this and additional works at: http://ideaexchange.uakron.edu/honors_research_projects Part of the Information Security Commons. Recommended Citation: Chester, John A., "Analysis of Password Cracking Methods & Applications" (2015). Honors Research Projects. 7. http://ideaexchange.uakron.edu/honors_research_projects/7 This Honors Research Project is brought to you for free and open access by The Dr. Gary B. and Pamela S. Williams Honors College at IdeaExchange@UAkron, the institutional repository of The University of Akron in Akron, Ohio, USA. It has been accepted for inclusion in Honors Research Projects by an authorized administrator of IdeaExchange@UAkron. For more information, please contact [email protected], [email protected]. Abstract -- This project examines the nature of password cracking and modern applications. Several applications for different platforms are studied. Different methods of cracking are explained, including dictionary attack, brute force, and rainbow tables. Password cracking across different mediums is examined. Hashing and how it affects password cracking is discussed. An implementation of two hash-based password cracking algorithms is developed, along with experimental results of their efficiency. I. Introduction: Password cracking is the process of either guessing or recovering a password from stored locations or from a data transmission system [1].
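The brute-force method named in the abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's implementation: the hash is unsalted SHA-256, and the alphabet, length limit, and function names are hypothetical choices.

```python
import hashlib
import itertools
import string


def sha256_hex(candidate: str) -> str:
    """Hex digest of an unsalted SHA-256 hash (an assumption for this sketch)."""
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest()


def brute_force(target_hex: str,
                alphabet: str = string.ascii_lowercase,
                max_length: int = 4):
    """Try every string over `alphabet` up to `max_length` characters.

    Returns the matching plaintext, or None if the keyspace is exhausted.
    """
    for length in range(1, max_length + 1):
        for chars in itertools.product(alphabet, repeat=length):
            candidate = "".join(chars)
            if sha256_hex(candidate) == target_hex:
                return candidate
    return None
```

A dictionary attack has the same shape: only the candidate generator changes, from `itertools.product` to iteration over a word list.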

Modern Password Security for System Designers What to Consider When Building a Password-Based Authentication System

Modern password security for system designers What to consider when building a password-based authentication system By Ian Maddox and Kyle Moschetto, Google Cloud Solutions Architects This whitepaper describes and models modern password guidance and recommendations for the designers and engineers who create secure online applications. A related whitepaper, Password security for users, offers guidance for end users. This whitepaper covers the wide range of options to consider when building a password-based authentication system. It also establishes a set of user-focused recommendations for password policies and storage, including the balance of password strength and usability. The technology world has been trying to improve on the password since the early days of computing. Shared-knowledge authentication is problematic because information can fall into the wrong hands or be forgotten. The problem is magnified by systems that don't support real-world secure use cases and by the frequent decision of users to take shortcuts. According to a 2019 Yubico/Ponemon study, 69 percent of respondents admit to sharing passwords with their colleagues to access accounts. More than half of respondents (51 percent) reuse an average of five passwords across their business and personal accounts. Furthermore, two-factor authentication is not widely used, even though it adds protection beyond a username and password. Of the respondents, 67 percent don’t use any form of two-factor authentication in their personal life, and 55 percent don’t use it at work. Password systems often allow, or even encourage, users to use insecure passwords. Systems that allow only single-factor credentials and that implement ineffective security policies add to the problem. -

Implementation and Performance Analysis of PBKDF2, Bcrypt, Scrypt Algorithms

Implementation and Performance Analysis of PBKDF2, Bcrypt, Scrypt Algorithms. Levent Ertaul, Manpreet Kaur, Venkata Arun Kumar R Gudise, CSU East Bay, Hayward, CA, USA. [email protected], [email protected], [email protected] Abstract: With the increase in mobile wireless technologies, security breaches are also increasing. It has become critical to safeguard our sensitive information from wrongdoers, so having a strong password is pivotal. As almost every website requires you to log in and create a password, it is tempting to use the same password for numerous websites such as banks, shopping and social networking websites. This way we are making our information easily accessible to hackers. Hence, we need a strong application for password security and management. In this paper, we are going to compare the performance of 3 key derivation algorithms, namely, PBKDF2 (Password Based Key Derivation Function), Bcrypt and Scrypt. [Second column of the first page, which begins mid-sentence:] ... or data lookup. Cryptographic hash functions, in contrast, are used as building blocks for HMACs, which provide message authentication. They ensure the integrity of the data that is transmitted. A collision-free hash function is one for which it is infeasible to find two different inputs a ≠ b such that H(a) = H(b). User-chosen passwords shall not be used directly as cryptographic keys, as they have low entropy and poor randomness properties [2]. The password is the secret value from which the cryptographic key can be generated. Figure 1 shows the statistics of increasing cybercrime every year. Hence there is a need for strong key generation algorithms which can generate keys which are nearly impossible for the
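For readers who want to try two of the three algorithms, Python's standard `hashlib` exposes PBKDF2 and scrypt directly; bcrypt is not in the standard library and is omitted here. The sketch below is illustrative only: the iteration count, scrypt cost parameters, and helper names are assumptions, not the settings used in the paper.

```python
import hashlib
import time


def derive_pbkdf2(password: bytes, salt: bytes,
                  iterations: int = 100_000) -> bytes:
    """Derive a 32-byte key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password, salt, iterations, dklen=32)


def derive_scrypt(password: bytes, salt: bytes,
                  n: int = 2 ** 14, r: int = 8, p: int = 1) -> bytes:
    """Derive a 32-byte key with scrypt (memory use is roughly 128 * n * r bytes)."""
    return hashlib.scrypt(password, salt=salt, n=n, r=r, p=p,
                          maxmem=64 * 1024 * 1024, dklen=32)


def time_call(fn, *args, **kwargs) -> float:
    """Wall-clock seconds taken by one call to `fn`."""
    start = time.perf_counter()
    fn(*args, **kwargs)
    return time.perf_counter() - start
```

Calling `time_call(derive_pbkdf2, b"secret", salt)` and `time_call(derive_scrypt, b"secret", salt)` with a random 16-byte salt gives a rough per-guess cost on the local machine. Raising `iterations` or `n` increases the cost for defender and attacker alike.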

Hash Crack: Password Cracking Manual

Hash Crack. Copyright © 2017 Netmux LLC All rights reserved. Without limiting the rights under the copyright reserved above, no part of this publication may be reproduced, stored in, or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise) without prior written permission. ISBN-10: 1975924584 ISBN-13: 978-1975924584 Netmux and the Netmux logo are registered trademarks of Netmux, LLC. Other product and company names mentioned herein may be the trademarks of their respective owners. Rather than use a trademark symbol with every occurrence of a trademarked name, we are using the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. The information in this book is distributed on an “As Is” basis, without warranty. While every precaution has been taken in the preparation of this work, neither the author nor Netmux LLC, shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in it. While every effort has been made to ensure the accuracy and legitimacy of the references, referrals, and links (collectively “Links”) presented in this book/ebook, Netmux is not responsible or liable for broken Links or missing or fallacious information at the Links. Any Links in this book to a specific product, process, website, or service do not constitute or imply an endorsement by Netmux of same, or its producer or provider. The views and opinions contained at any Links do not necessarily express or reflect those of Netmux. -

Optimizing a Password Hashing Function with Hardware-Accelerated Symmetric Encryption

Symmetry, Article. Optimizing a Password Hashing Function with Hardware-Accelerated Symmetric Encryption. Rafael Álvarez 1,*, Alicia Andrade 2 and Antonio Zamora 3 1 Departamento de Ciencia de la Computación e Inteligencia Artificial (DCCIA), Universidad de Alicante, 03690 Alicante, Spain 2 Fac. Ingeniería, Ciencias Físicas y Matemática, Universidad Central, Quito 170129, Ecuador; [email protected] 3 Departamento de Ciencia de la Computación e Inteligencia Artificial (DCCIA), Universidad de Alicante, 03690 Alicante, Spain; [email protected] * Correspondence: [email protected] Received: 2 November 2018; Accepted: 22 November 2018; Published: 3 December 2018. Abstract: Password-based key derivation functions (PBKDFs) are commonly used to transform user passwords into keys for symmetric encryption, as well as for user authentication, password hashing, and preventing attacks based on custom hardware. We propose two optimized alternatives that enhance the performance of a previously published PBKDF. This design is based on (1) employing a symmetric cipher, the Advanced Encryption Standard (AES), as a pseudo-random generator and (2) taking advantage of the support for the hardware acceleration for AES that is available on many common platforms in order to mitigate common attacks on password-based user authentication systems. We also analyze their security characteristics, establishing that they are equivalent to the security of the core primitive (AES), and we compare their performance with well-known PBKDF algorithms, such as Scrypt and Argon2, with favorable results. Keywords: symmetric; encryption; password; hash; cryptography; PBKDF. 1. Introduction: Key derivation functions are employed to obtain one or more keys from a master secret. This is especially useful in the case of user passwords, which can be of arbitrary length and are unsuitable to be used directly as fixed-size cipher keys, so there must be a process for converting passwords into secret keys.

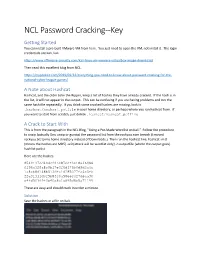

NCL Password Cracking--Key

Getting Started

You can install a pre-built VMware VM from here. You just need to open the VM, not install it. The login credentials are kali, kali.

https://www.offensive-security.com/kali-linux-vm-vmware-virtualbox-image-download/

Then read this excellent blog from NCL.

https://cryptokait.com/2019/09/24/everything-you-need-to-know-about-password-cracking-for-the-national-cyber-league-games/

A Note about Hashcat

Hashcat, and the older John the Ripper, keep a list of hashes they have already cracked. If the hash is in the list, it will not appear in the output. This can be confusing if you are having problems and run the same hash file repeatedly. If you think some cracked hashes are missing, look in .hashcat/hashcat.potfile in your home directory, or perhaps where you ran hashcat from. If you want to start from scratch, just delete .hashcat/hashcat.potfile

A Crack to Start With

This is from the paragraph in the NCL Blog, "Using a Pre-Made Wordlist on Kali." Follow the procedure to unzip (actually GNU unzip, or gunzip) the password list from the rockyou.com breach. (I moved rockyou.txt to my home directory instead of Downloads.) Then run the hashcat line:

hashcat -m 0 {means the hashes are MD5} -a 0 {attack will be wordlist only} -o outputfile {where the output goes} hashlist pwlist

Here are the hashes.

8549137cd494c22ae87eef3e18a46986
0f96a320a8c0bf7e3f6d375b0d9d3a4c
1a8cb8d148b513dfa1d285077fc4e3fb
22a313110bf5b84c0a58eecc27deaa30
e4fd50109f0e40e8c1a895d8e5c71199

These are easy and should crack in under a minute.

Solution

Save the hashes in a file on Kali.
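To see what a wordlist-only attack on raw MD5 hashes does, the hashcat line above can be approximated in a few lines of Python. This is a teaching sketch and not how hashcat is implemented: the function names and file layout (one hash per line, one candidate word per line) are assumptions, and it is far slower than hashcat.

```python
import hashlib


def load_hashes(path: str) -> set:
    """Read one hex MD5 digest per line, ignoring blank lines."""
    with open(path, encoding="ascii") as fh:
        return {line.strip().lower() for line in fh if line.strip()}


def wordlist_attack(hashes, wordlist_path: str) -> dict:
    """Hash every word in the list and report the digests that match.

    The wordlist is read as bytes because lists such as rockyou.txt
    contain entries that are not valid UTF-8.
    """
    remaining = set(hashes)
    cracked = {}
    with open(wordlist_path, "rb") as fh:
        for raw in fh:
            word = raw.rstrip(b"\r\n")
            digest = hashlib.md5(word).hexdigest()
            if digest in remaining:
                cracked[digest] = word.decode("utf-8", errors="replace")
                remaining.discard(digest)
                if not remaining:
                    break  # every target has been recovered
    return cracked
```

Assuming the hashes were saved as `hashlist` and the wordlist as `pwlist`, `wordlist_attack(load_hashes("hashlist"), "pwlist")` returns a mapping from each recovered digest to its plaintext.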

Password Security Compliance

Password Security Compliance: Reduce risk and ensure compliance by managing password strength and policy. White paper. Overview: Passwords are a critical component of security. Passwords serve to protect user accounts; however, weak passwords may violate compliance standards, be reverse engineered back to plaintext and sold on the dark web, or result in a costly data breach if compromised. A periodic review of password rules is a vital component of your compliance and security strategies. Purpose: The purpose of this security brief is to inform organizations of key password requirements and initiate internal compliance conversations. Use this security brief as a tool to enforce or strengthen your existing password policy. Learn more at fusionauth.io. According to Verizon's 2017 Data Breach Investigations Report, 81% of hacking-related breaches leveraged either stolen and/or weak passwords. Financial, healthcare and public sector organizations accounted for over half of those breaches. Password strength is an important security concern. With over four billion credentials stolen last year and data breaches averaging $3.62M in direct costs per incident, companies must be prepared for the security risks they face. While all applications should apply password constraints to discourage easy-to-guess passwords, many organizations are required to do so by law. It is necessary to have stringent password constraints in place in order to comply with industry regulations. Compliance is about fostering a culture that values user data and integrity, and that culture starts at the top.

Key Derivation Functions and Their GPU Implementation

MASARYK UNIVERSITY, FACULTY OF INFORMATICS. Key derivation functions and their GPU implementation. BACHELOR'S THESIS. Ondrej Mosnáček. Brno, Spring 2015. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. https://creativecommons.org/licenses/by-nc-sa/4.0/ Declaration: Hereby I declare, that this paper is my original authorial work, which I have worked out by my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Ondrej Mosnáček. Advisor: Ing. Milan Brož. Acknowledgement: I would like to thank my supervisor for his guidance and support, and also for his extensive contributions to the Cryptsetup open-source project. Next, I would like to thank my family for their support and patience and also to my friends who were falling behind schedule just like me and thus helped me not to panic. Last but not least, access to computing and storage facilities owned by parties and projects contributing to the National Grid Infrastructure MetaCentrum, provided under the programme "Projects of Large Infrastructure for Research, Development, and Innovations" (LM2010005), is also greatly appreciated. Abstract: Key derivation functions are a key element of many cryptographic applications. Password-based key derivation functions are designed specifically to derive cryptographic keys from low-entropy sources (such as passwords or passphrases) and to counter brute-force and dictionary attacks. However, the most widely adopted standard for password-based key derivation, PBKDF2, as implemented in most applications, is highly susceptible to attacks using Graphics Processing Units (GPUs).

Analyzing Password Strength

Analyzing Password Strength. Martin M.A. Devillers, July 2010. ABSTRACT: Password authentication is still the most used authentication mechanism in today's computer systems. In most systems, the password is set by the user and must adhere to certain password requirements. Additionally, password checkers rank the strength of a password to give the user an indication of how secure their password is. In this paper, we take a look at a large database of user-chosen passwords to determine the current state of affairs. In the end, we extract a model from the database and provide our own password checker which ranks passwords in various ways. We ran this checker against our dataset, which shows that over 90% of the passwords are highly insecure. INTRODUCTION: As we perform increasingly important tasks from our living room computer, the topic of computer security also becomes in- [...] Password authentication requires no specialized hardware, such as with fingerprint authentication, can be easily implemented by developers and just as easily used by users. In short: It's usable. But is it safe? The topic of "Security versus Usability" has always been of much debate in the computer security world (3). Users must be protected from harm by security controls, but these controls may not interfere (much) with the tasks the users want to perform. For instance, a firewall that simply blocks all traffic can be considered secure, but heavily impedes the overall usability of the system. Security experts or system developers are usually the ones who have to make this tradeoff, but with password authentication, this task is essentially passed on to the user (3): One can either choose a short and simple password, which is easy to remember but also easy to crack, or a very long and complicated password, which is hard to remember but also hard to crack.
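The kind of password checker described above can be approximated with a naive character-pool estimate. The sketch below is not the model-based checker from the paper: it swaps in the simple "length times log2(pool size)" heuristic, and the function names are hypothetical. Because it ignores dictionary words and patterns, it overestimates the strength of passwords such as "Password1!".

```python
import math
import string


def charset_size(password: str) -> int:
    """Size of the character pool implied by the classes that appear.

    Characters outside the four ASCII classes (spaces, non-ASCII
    letters) are not counted in this simplified estimate.
    """
    size = 0
    if any(c in string.ascii_lowercase for c in password):
        size += 26
    if any(c in string.ascii_uppercase for c in password):
        size += 26
    if any(c in string.digits for c in password):
        size += 10
    if any(c in string.punctuation for c in password):
        size += len(string.punctuation)
    return size


def entropy_bits(password: str) -> float:
    """Upper-bound estimate: length * log2(pool size), or 0.0 if no pool."""
    pool = charset_size(password)
    return len(password) * math.log2(pool) if pool else 0.0


if __name__ == "__main__":
    for pw in ["123456", "password", "Tr0ub4dor&3"]:
        print(f"{pw!r}: about {entropy_bits(pw):.1f} bits (naive estimate)")
```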

Password Cracking

Password Cracking. Sam Martin and Mark Tokutomi. 1 Introduction: Passwords are a system designed to provide authentication. There are many different ways to authenticate users of a system: a user can present a physical object like a key card, prove identity using a personal characteristic like a fingerprint, or use something that only the user knows. In contrast to the other approaches listed, a primary benefit of using authentication through a password is that in the event that your password becomes compromised it can be easily changed. This paper will discuss what password cracking is, techniques for password cracking when an attacker has the ability to attempt to log in to the system using a user name and password pair, techniques for when an attacker has access to however the passwords are stored on the system, attacks that involve observing password entry in some way, and finally how graphical passwords and graphical password cracks work. (Figure 1: The flow of password attacking possibilities.) Figure 1 shows some scenarios in which attempts at password cracking can occur. The attacker can gain access to a machine through physical or remote access. The attacker could attempt to try each possible password or likely password (a form of dictionary attack). If the attacker can gain access to hashes of the passwords, it is possible to use software like OphCrack, which utilizes Rainbow Tables to crack passwords [1]. A spammer may use dictionary attacks to gain access to bank accounts or other web services as well. Wireless protocols are vulnerable to some password cracking techniques when packet sniffers are able to gain initialization packets.