Detecting Group Formations Using iBeacon Technology

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Case 1:18-cv-00159-LY Document 32 Filed 05/30/18 Page 1 of 20

Case 1:18-cv-00159-LY Document 32 Filed 05/30/18 Page 1 of 20 IN THE UNITED STATES DISTRICT COURT FOR THE WESTERN DISTRICT OF TEXAS, AUSTIN DIVISION. UNILOC USA, INC. and UNILOC LUXEMBOURG, S.A., Plaintiffs, v. APPLE INC., Defendant. Civil Action No. 1:18-cv-00159-LY. PATENT CASE. FIRST AMENDED COMPLAINT FOR PATENT INFRINGEMENT Plaintiffs, Uniloc USA, Inc. (“Uniloc USA”) and Uniloc Luxembourg, S.A. (“Uniloc Luxembourg”) (together, “Uniloc”), for their first amended complaint against defendant, Apple Inc. (“Apple”), allege as follows: THE PARTIES 1. Uniloc USA is a Texas corporation having a principal place of business at Legacy Town Center I, Suite 380, 7160 Dallas Parkway, Plano, Texas 75024. 2. Uniloc Luxembourg is a Luxembourg public limited liability company having a principal place of business at 15, Rue Edward Steichen, 4th Floor, L-2540, Luxembourg (R.C.S. Luxembourg B159161). 3. Apple is a California corporation, having a principal place of business in Cupertino, California and regular and established places of business at 12535 Riata Vista Circle and 5501 West Parmer Lane, Austin, Texas. Apple offers its products and/or services, including those accused herein of infringement, to customers and potential customers located in Texas and in the judicial Western District of Texas. JURISDICTION 4. Uniloc brings this action for patent infringement under the patent laws of the United States, 35 U.S.C. § 271, et seq. This Court has subject matter jurisdiction under 28 U.S.C. -

The Ultimate Guide to iBeacon

THE ULTIMATE GUIDE TO iBEACON: Everything From The Basics To Real World Use Cases. WHAT ARE BEACONS? Beacons are transmitters that broadcast signals at set intervals so that smart devices within their proximity are able to listen for these signals and react accordingly. They run off of Bluetooth low-energy (BLE) wireless technology. Think of a beacon like a lighthouse, broadcasting signals just as a lighthouse broadcasts light. WHAT IS BLE TECHNOLOGY? BLE is a type of Bluetooth technology that is low energy, hence the name Bluetooth Low Energy. BLE communication consists of advertisements of small packets of data which are broadcast at regular intervals through radio waves. BLE broadcasting is a one-way communication method; it simply advertises its packets of data. These packets of data can then be picked up by smart devices nearby and then be used to trigger things like push messages, app actions, and prompts on the smart device. A typical beacon broadcasts a signal every 100 ms. If you are using a beacon that is plugged in, you can increase the broadcast frequency of the beacon without having to worry about battery life. This would allow for quicker discovery by smartphones and other Bluetooth-enabled devices. BLE technology is ideal for contextual and proximity awareness. A beacon's typical broadcast range is between 10 and 30 meters. Some places advertise beacons as broadcasting up to 75 meters, but they measure that in an ideal setting where nothing is in the way of the signal. So you should count on 30 meters as your broadcast range. This is ideal for indoor location tracking and awareness. -

Bluetooth® Low Energy (BLE) Beacons Reference Design

TI Designs: Bluetooth® Low Energy (BLE) Beacons Reference Design. Description: This TI Design contains a description of Bluetooth® low energy beacons and implementations of three different beacon standards. These projects demonstrate how both connectable and non-connectable beacons can be used for various applications, such as asset tracking and location services. The transfer of data can be accomplished with very little power consumption by using these beacon implementations. Features: • Runs on SimpleLink™ Technology Bluetooth low energy CC2640 Wireless Microcontroller (MCU) • Uses TI Royalty-Free BLE-Stack™ Software Development Kit (SDK) • Easily Runs on CC2650 LaunchPad™ • Offers Generic Projects That can be Modified to Fit Various Applications • Very Long Battery Life and Excellent Radio Frequency (RF) Range. Resources: TIDC-Bluetooth-Low-Energy-Beacon Design Folder; CC2640 Product Folder; CC2650 Product Folder; BLE-Stack Tools Folder. Applications: • Micro-Location Services • Asset Tracking and Identification • Broadcasting Ambient or Sensor Data. [Figure: BLE beacon (Eddystone), smartphone/other central device, network/online platform] An IMPORTANT NOTICE at the end of this TI reference design addresses authorized use, intellectual property matters and other important disclaimers and information. All trademarks are the property of their respective owners. TIDUCD0–September 2016. Copyright © 2016, Texas Instruments Incorporated. www.ti.com 1 System Overview 1.1 System Description This TI Design includes links to application notes that describe what beacons are, what they can be used for, and how they can be implemented using the Texas Instruments' Bluetooth low energy software stack (BLE-Stack) version 2.2. -

Integrated BLE Beacons Support BLE Beacon Deployments

Connected Mobile Experience (CMX). Will Blake. Technology Transitions Driving Digital Transformation. Enablers for Digitisation: Mobility, IoT, Analytics, Cloud. Mobile Traffic Will Exceed Wired Traffic by 2017. IoT Devices Will Triple by 2020. 76% of Companies Are Planning to or Investing in Big Data. 80% of Organisations Will Use Primarily SaaS by 2018. 20–50% Yearly Increase in Bandwidth Demand. The Network Connects, Secures, Automates, and Delivers Insights. Customers Demand a Rich Mobile Experience: Shoppers want to look up online reviews and compare prices while browsing. Hotel guests want free Wi-Fi and they want to see available services and amenities. Travelers want to find departure gates quickly and receive itinerary updates. Patients want location-enabled wayfinding apps. Students want to find their way around campus and receive safety alerts. The Opportunity Is in Mobile: 56% of lines of business say mobile strategy is very or extremely important to their objectives (1). “Consumers are not only keeping pace, they are in many cases leading the disruption.” (2) Sources: (1) IDC, Location Based Services: A Promising Customer-Centric Solution, 2014; (2) IDC, Chief Digital Officers: Bridging the Innovation Gap Between the CIO and CMO, June 2015. IT’s Role in Impacting the Digital Business: Align IT with LoB; Drive Real Time Impact; Personalised Experiences; Movement & Dwell Time; Staffing & Asset Management; Emergency Operations; Business Relevance; Business Agility; Business Intelligence. 44% of mobility initiatives are funded or jointly funded by LoB (1). Source: (1) http://www.cisco.com/c/dam/en/us/solutions/collateral/enterprise-networks/mobile-workspace-solution/enterprisemobilitylandscapestudy-spring2014.pdf -

Sensoro SmartBeacon-4AA

Sensoro SmartBeacon-4AA Outlook The SmartBeacon-4AA is built for commercial deployment. Features 1. Ultra-Low Power A Bluetooth® 4.0 (Bluetooth® low energy) Nordic nRF51822 chip with ultra-low power consumption is used. Bluetooth low energy with intelligent energy-saving programs allows the beacon to work for three to five years on four AA batteries. 2. Compatibility Software and firmware are fully compatible with Apple’s iBeacon and Google’s Eddystone (including EID) technical requirements, and can be applied to systems including: iOS 7.0 or above: iPhone 4S, iPhone 5, iPhone 5S, iPad 3, iPad mini, iPad Air. Android 4.3 or above: Samsung Galaxy S III, Galaxy S IV, Galaxy Note II, Galaxy Note III and Motorola RAZR, HTC ONE, etc. 3. Demo App and Configuration App The “Yunzi” (demo) app allows you to experience our beacons, while the “Sensoro” app (configuration tools) puts you in control of all of their features. Scan the QR code to download: Signal Stability and Data Transmission 1. Transmission Stability and Distance To achieve signal stability and long-distance data transmission requirements, the SmartBeacon-4AA underwent extensive testing and modification. Its signal remains stable even in complex deployment locations. The radio frequency (RF) power configuration can be adjusted to a maximum radius of 80 meters. The RF power configuration can also reduce the radius to a minimum of 0.15 meters, which covers an area of 0.225 square meters. However, it is possible that no signal will be obtained if the RF power is adjusted to the minimum. 2. Enterprise Class Device Management Support for Enterprises Mass management of device status is an essential component of our enterprise class cloud software solutions. -

Indoor Positioning Using BLE iBeacon, Smartphone Sensors and Distance-Based Position Correction Algorithm

Template for submitting papers to IETE Journal of Research. Indoor Positioning using BLE iBeacon, Smartphone Sensors and Distance-based Position Correction Algorithm Anh Vu-Tuan Trinh and Thai-Mai Thi Dinh Trinh Vu Tuan Anh is with University of Engineering and Technology, Vietnam National University, Hanoi, Vietnam (e-mail: [email protected]). Dinh Thi Thai Mai is with University of Engineering and Technology, Vietnam National University, Hanoi, Vietnam (corresponding author to provide e-mail: [email protected]). ABSTRACT In this paper, we propose a Bluetooth Low Energy (BLE) iBeacon based localization system, in which we combine two popular positioning methods: Pedestrian Dead Reckoning (PDR) and fingerprinting. As we build the system as an application running on an iPhone, we choose Kalman filter as the fusion algorithm to avoid complex computation. In fingerprinting, a multi-direction-database approach is applied. Finally, in order to reduce the cumulative error of PDR due to smartphone sensors, we propose an algorithm that we name “Distance-based position correction”. The aim of this algorithm is to occasionally correct the tracked position by using the iBeacon nearest to the user. In real experiments, our system can run smoothly on an iPhone, with the average positioning error of only 0.63 m. Keywords: Bluetooth Low Energy; Fingerprinting; iBeacon; Indoor positioning; iOS; Kalman filter; Pedestrian Dead Reckoning; Position fusion; Smartphone sensors 1. INTRODUCTION Indoor positioning is the process of obtaining a device or a user’s location in an indoor setting or environment [1]. ... interested indoor area [1,2]. We then rely on this database to predict the user’s position in the online phase. The main problem of RSS based methods is the instability of the beacons’ RSS due to noises, multipath fading, non-line-of-sight -

Indoor Positioning System Survey Using BLE Beacons

INDOOR POSITIONING SYSTEM SURVEY USING BLE BEACONS MATHAN KUMAR BALAKRISHNAN November 2017 Department of Electrical Engineering Masters in Electrical Engineering – Power Systems 2017 Report elaborated for partial fulfillment of the requirements of the DSEE curricular unit – Dissertation for the Master degree in Electrical Engineering – Electrical Systems of Energy Candidate: Mathan Kumar Balakrishnan, Nº 1150253, email [email protected] Scientific advisor: Jorge Manuel Estrela da Silva, [email protected] Company: Creative Systems, C.A, São João da Madeira, Portugal Company supervisor: João Sousa, email [email protected] ACKNOWLEDGEMENTS Foremost, I would like to express my sincere gratitude to my advisor Prof. Jorge Estrela da Silva, Instituto Superior de Engenharia Do Porto, for the continuous support of my Master's thesis, for his patience, motivation, enthusiasm, and immense knowledge. I could not have imagined having a better advisor and mentor for my project. The door to Prof. Jorge's office was always open whenever I ran into a trouble spot or had a question about my research or writing. He consistently allowed this paper to be my own work, but steered me in the right direction whenever he thought I needed it. I would also like to acknowledge Prof. Teresa Alexandra Nogueira Ph.D., Course Director. Besides my advisor, at Instituto Superior de Engenharia Do Porto, I would like to thank the rest of my thesis committee: Mr. João Sousa, Head of Software Development departments and Thesis Supervisor, Creative Systems, São João Madeira and Mr. -

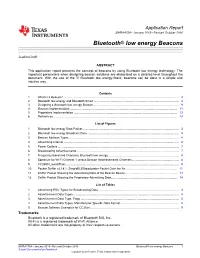

Bluetooth Low Energy Beacons (Rev. A)

Application Report SWRA475A–January 2015–Revised October 2016 Bluetooth® low energy Beacons Joakim Lindh ABSTRACT This application report presents the concept of beacons by using Bluetooth low energy technology. The important parameters when designing beacon solutions are elaborated on a detailed level throughout the document. With the use of the TI Bluetooth low energy-Stack, beacons can be done in a simple and intuitive way. Contents: 1 What is a Beacon?; 2 Bluetooth low energy and Bluetooth Smart; 3 Designing a Bluetooth low energy Beacon; 4 iBeacon Implementation; 5 Proprietary Implementation; 6 References. List of Figures: 1 Bluetooth low energy Data Packet; 2 Bluetooth low energy Broadcast Data; 3 Beacon Address Types -

Smart Workplace – Using iBeacon

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 05 Issue: 03 | Mar-2018 www.irjet.net p-ISSN: 2395-0072 SMART WORKPLACE – USING IBEACON Ms. Punitha.S1, Ms. Saranya Devi.V2, Ms. Kalpana.S3 1,2 Dept. of Electronics and Communication Engineering, Dhanalakshmi Srinivasan College of Engineering and Technology, TN, India. 3 Assistant Professor, Dept. of Electronics and Communication Engineering, Dhanalakshmi Srinivasan College of Engineering and Technology, TN, India. Abstract – iBeacon is a newly developed technology device used for indoor tracking. An iBeacon device can transmit signals over a long range of up to 450 meters. iBeacon is used in several fields, mainly for tracking. Publicizing new branded products is very important in marketing today, and it is not possible without the help of marketing representatives, yet an organization cannot monitor its employees all the time. To overcome this problem, we use the iBeacon device for tracking, and it can also be used for entry and exit logging instead of a biometric device. In operation, the iBeacon device is first paired with Android mobiles running the iBeacon app via Bluetooth. The marketing representatives then carry the smartphone whenever they go out to market the products. The app automatically activates the GPS in the mobile to track the location. ... special offers to nearby smartphones with the iBeacon app. With iBeacons set up, businesses can send messages to potential customers (such as special offers or goods) when they enter a market or another place where a beacon device is located. -

Real-Time Indoor Positioning Approach Using iBeacons and Smartphone Sensors

applied sciences Article Real-Time Indoor Positioning Approach Using iBeacons and Smartphone Sensors Liu Liu, Bofeng Li *, Ling Yang and Tianxia Liu College of Surveying and GeoInformatics, Tongji University, Shanghai 200092, China; [email protected] (L.L.); [email protected] (L.Y.); [email protected] (T.L.) * Correspondence: [email protected] Received: 4 January 2020; Accepted: 10 March 2020; Published: 15 March 2020 Abstract: For localization in daily life, low-cost indoor positioning systems should provide real-time locations with a reasonable accuracy. Considering the flexibility of deployment and low price of the iBeacon technique, we develop a real-time fusion workflow to improve the localization accuracy of smartphones. First, we propose an iBeacon-based method by integrating a trilateration algorithm with a specific fingerprinting method to resist RSS fluctuations, and obtain accurate locations as the baseline result. Second, as turns are pivotal for positioning, we segment pedestrian trajectories according to turns. Then, we apply a Kalman filter (KF) to heading measurements in each segment, which improves the locations derived by pedestrian dead reckoning (PDR). Finally, we devise another KF to fuse the iBeacon-based approach with the PDR to overcome orientation noises. We implemented this fusion workflow on an Android smartphone and conducted real-time experiments on a building floor. Two different routes with sharp turns were selected. The positioning accuracy of the iBeacon-based method is RMSE 2.75 m. When the smartphone is held steadily, the fusion positioning tests result in RMSE of 2.39 and 2.22 m for the two routes. In addition, the other tests with orientation noises can still result in RMSE of 3.48 and 3.66 m. -

Using iBeacon for Navigation and Proximity Awareness in Smart Buildings

Project Number: KZP - AA5Z Using iBeacon for Navigation and Proximity Awareness in Smart Buildings A Major Qualifying Project Submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for the Degree of Bachelor of Science in Electrical and Computer Engineering By Qusai Alhumoud Chris Connor David Goodrich Advisor: Professor Kaveh Pahlavan Co-Advisor: Professor Jahangir Rahman This report represents work of WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. For more information about the projects program at WPI, see http://www.wpi.edu/Academics/Projects. Abstract Guests in unfamiliar buildings often do not know where to go or what is around them. This project sought to alleviate this problem using a combination of Android smartphone and Bluetooth iBeacon technology. Released in 2013 by Apple, the iBeacon specification allows an embedded device to broadcast telemetry to various Bluetooth-enabled devices, such as smartphones. This project used iBeacon devices from Estimote, a company that has seen its iBeacon devices used in a variety of real-world applications, such as enhancing the experience of tours at the Guggenheim museum in New York. This project relied on received signal strength (RSS) information obtained from iBeacon devices deployed on the third floor of Atwater Kent Labs on the WPI campus. This information was used to model Atwater Kent Labs as a wireless channel with established path loss characteristics for Bluetooth signals. These path loss characteristics were incorporated into an Android application, which localized the user with live telemetry from the iBeacons using a least mean squares (LMS) algorithm and provided contextual information to the user about their surroundings. -

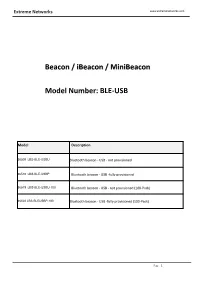

Beacon / iBeacon / MiniBeacon Model Number: BLE-USB

Extreme Networks www.extremenetworks.com Beacon / iBeacon / MiniBeacon Model Number: BLE-USB. Models: 36509 LBS-BLE-USBU, Bluetooth beacon - USB - not provisioned; 36510 LBS-BLE-USBP, Bluetooth beacon - USB - fully provisioned; 36519 LBS-BLE-USBU-100, Bluetooth beacon - USB - not provisioned (100-Pack); 36520 LBS-BLEUSBP-100, Bluetooth beacon - USB - fully provisioned (100-Pack). The Mini USB iBeacon is a mini USB iBeacon with the ARM core chipset nRF51822 that leverages BLE 4.0 technology; it is powered by a USB slot and has accurate hardware and robust firmware. It is designed for commercial advertising and indoor location-based services. Minew Beacons broadcast 2.4GHz radio signals at regular and adjustable intervals. MiniBeacon can be heard and interpreted by iOS and Android BLE-enabled devices that are equipped with many mobile apps. FEATURES - Programmed MiniBeacon standard firmware - Mini USB iBeacon; - The max. 60 meters advertising distance - Ultra-low power consumption chipset nRF51822 with ARM core - Plug and play; CERTIFICATIONS - iBeacon MFi License (iBC-14-00582) - FCC Regulation (FCC Part 15.) - CE Regulations (Included EN300328/301489/60950/62479) SPECIFICATION Compatibility - Supported iOS 7.0+ and Android 4.3+ system; - Compatible with Apple iBeacon™ standard; - Compatible with all Bluetooth 4.0 (BLE) devices; Configurable Parameters - UUID, Major, Minor, Device Name, Password etc. - Special Configuration APP; Transmission Power Levels - 8 adjustable levels, range from 0 to 7 - Transmission power range: -30dBm to +4dBm; No Battery Needed - No battery needed, powered by USB slot; Soft-reboot - Reboot the device via command without any tools; Long Range - The max.