CS 540 Midterm Exam Topics


Recommended publications


Lecture 4 Dynamic Programming

Lecture 4: Dynamic Programming. Last update: Jan 19, 2021. References: Algorithms, Jeff Erickson, Chapter 3; Algorithms, Gopal Pandurangan, Chapter 6.

Dynamic Programming. Backtracking is incredibly powerful for solving all kinds of hard problems, but it can often be very slow, usually exponential. Example: the Fibonacci numbers are defined by the recurrence

$$F_n = \begin{cases} 0 & \text{if } n = 0 \\ 1 & \text{if } n = 1 \\ F_{n-1} + F_{n-2} & \text{otherwise} \end{cases}$$

A direct translation into a recursive program to compute Fibonacci numbers is

    RecFib(n):
        if n = 0 return 0
        if n = 1 return 1
        return RecFib(n-1) + RecFib(n-2)

Fibonacci Number. The recursive program has horrible time complexity. How bad? Let's try to compute. Denote by T(n) the time complexity of computing RecFib(n). Based on the recursion, we have the recurrence

$$T(n) = T(n-1) + T(n-2) + 1, \qquad T(0) = T(1) = 1$$

Solving this recurrence, we get

$$T(n) = O(\varphi^n), \qquad \varphi = \frac{\sqrt{5} + 1}{2} \approx 1.618$$

So the RecFib(n) program runs in exponential time.

RecFib Recursion Tree. Intuitively, why does RecFib() run exponentially slowly? Problem: redundant computation! How about storing the intermediate results to avoid recomputation?

Fib: Memoization. To optimize the performance of RecFib, we can store each intermediate F_n in some kind of cache and look it up when we need it again.

    MemFib(n):
        if n = 0 or n = 1
            return n
        if F[n] is undefined
            F[n] ← MemFib(n-1) + MemFib(n-2)
        return F[n]

How much does it improve upon RecFib()? Assuming that accessing F[n] takes constant time, at most n additions will be performed (we never recompute).
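The two procedures in the snippet above can be sketched in Python as follows. This is a minimal illustration rather than the lecture's own code: the function names `rec_fib` and `mem_fib` and the dictionary used as the cache `F` are choices made here.

```python
def rec_fib(n):
    """Direct translation of RecFib: the number of calls grows exponentially."""
    if n < 2:
        return n
    return rec_fib(n - 1) + rec_fib(n - 2)


def mem_fib(n, cache=None):
    """Memoized variant: each F[k] is computed once and then looked up."""
    if cache is None:
        cache = {}  # plays the role of the table F in the pseudocode
    if n < 2:
        return n
    if n not in cache:  # "F[n] is undefined"
        cache[n] = mem_fib(n - 1, cache) + mem_fib(n - 2, cache)
    return cache[n]
```

Calling `mem_fib(50)` returns almost immediately, whereas `rec_fib(50)` would make billions of recursive calls.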

Exhaustive Recursion and Backtracking

CS106B Handout #19, J Zelenski, Feb 1, 2008. Exhaustive recursion and backtracking

In some recursive functions, such as binary search or reversing a file, each recursive call makes just one recursive call. The "tree" of calls forms a linear line from the initial call down to the base case. In such cases, the performance of the overall algorithm is dependent on how deep the function stack gets, which is determined by how quickly we progress to the base case. For reverse file, the stack depth is equal to the size of the input file, since we move one closer to the empty file base case at each level. For binary search, it more quickly bottoms out by dividing the remaining input in half at each level of the recursion. Both of these can be done relatively efficiently.

Now consider a recursive function such as subsets or permutation that makes not just one recursive call, but several. The tree of function calls has multiple branches at each level, which in turn have further branches, and so on down to the base case. Because of the multiplicative factors being carried down the tree, the number of calls can grow dramatically as the recursion goes deeper. Thus, these exhaustive recursion algorithms have the potential to be very expensive. Often the different recursive calls made at each level represent a decision point, where we have choices such as what letter to choose next or what turn to make when reading a map. Might there be situations where we can save some time by focusing on the most promising options, without committing to exploring them all? In some contexts, we have no choice but to exhaustively examine all possibilities, such as when trying to find some globally optimal result. But what if we are interested in finding any solution, whichever one works out first? At each decision point, we can choose one of the available options and sally forth, hoping it works out.
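The "choose an option and sally forth" idea can be illustrated with a small Python sketch. The subset-sum problem and the name `find_subset` are assumptions chosen here for illustration; the handout itself does not use this example.

```python
def find_subset(nums, target, start=0, chosen=None):
    """Return the first subset of nums[start:] found that sums to target, or None."""
    if chosen is None:
        chosen = []
    if target == 0:
        return list(chosen)  # any solution will do, so stop at the first one
    for i in range(start, len(nums)):
        chosen.append(nums[i])  # choose one of the available options
        found = find_subset(nums, target - nums[i], i + 1, chosen)
        if found is not None:
            return found  # it worked out: unwind without exploring the rest
        chosen.pop()  # dead end: undo the choice and try the next option
    return None
```

Because the search returns as soon as one branch succeeds, it may visit far fewer calls than an exhaustive enumeration of all subsets, although the worst case is still exponential.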

4 Beyond Classical Search

4 BEYOND CLASSICAL SEARCH

In which we relax the simplifying assumptions of the previous chapter, thereby getting closer to the real world.

Chapter 3 addressed a single category of problems: observable, deterministic, known environments where the solution is a sequence of actions. In this chapter, we look at what happens when these assumptions are relaxed. We begin with a fairly simple case: Sections 4.1 and 4.2 cover algorithms that perform purely local search in the state space, evaluating and modifying one or more current states rather than systematically exploring paths from an initial state. These algorithms are suitable for problems in which all that matters is the solution state, not the path cost to reach it. The family of local search algorithms includes methods inspired by statistical physics (simulated annealing) and evolutionary biology (genetic algorithms).

Then, in Sections 4.3–4.4, we examine what happens when we relax the assumptions of determinism and observability. The key idea is that if an agent cannot predict exactly what percept it will receive, then it will need to consider what to do under each contingency that its percepts may reveal. With partial observability, the agent will also need to keep track of the states it might be in.

Finally, Section 4.5 investigates online search, in which the agent is faced with a state space that is initially unknown and must be explored.

4.1 LOCAL SEARCH ALGORITHMS AND OPTIMIZATION PROBLEMS

The search algorithms that we have seen so far are designed to explore search spaces systematically. This systematicity is achieved by keeping one or more paths in memory and by recording which alternatives have been explored at each point along the path.

Backtrack Parsing: Context-Free Grammar

Context-free Grammar and Backtrack Parsing. Martin Kay, Stanford University and University of the Saarland

Problems with Regular Language. Is English a regular language? Bad question! We do not even know what English is!
• Two eggs and bacon make(s) a big breakfast
• Can you slide me the salt?
• He didn't ought to do that
But no!
• I put the wine you brought in the fridge
• I put the wine you brought for Sandy in the fridge
• Should we bring the wine you put in the fridge out now?
• You said you thought nobody had the right to claim that they were above the law

Problems with Regular Language. You said you thought nobody had the right to claim that they were above the law

Problems with Regular Language. [You said you thought [nobody had the right [to claim that [they were above the law]]]]

Problems with Regular Language. Is English morphology a regular language? Bad question! We do not even know what English morphology is!
• They sell collectables of all sorts
• This concerns unredecontaminatability
• This really is an untiable knot.
But probably! (Not sure about Swahili, though)

Context-free Grammar.
• Nonterminal symbols ~ grammatical categories
• Terminal symbols ~ words
• Productions ~ (unordered) (rewriting) rules
• Distinguished symbol
Not all that important:
• Terminals and nonterminals are disjoint
• Distinguished symbol

Adversarial Search

Artificial Intelligence, CS 165A, Oct 29, 2020. Instructor: Prof. Yu-Xiang Wang
• Examples of heuristics in A*-search
• Games and Adversarial Search

Recap: Search algorithms
• State-space diagram vs. search tree
• Uninformed search algorithms
  - BFS / DFS
  - Depth Limited Search
  - Iterative Deepening Search
  - Uniform cost search
• Informed search (with a heuristic function h):
  - Greedy Best-First-Search (not complete / optimal)
  - A* Search (complete / optimal if h is admissible)

Recap: Summary table of uninformed search (Section 3.4.7 in the AIMA book.)

Recap: A* Search (pronounced "A-Star")
• Uniform-cost search minimizes g(n) ("past" cost)
• Greedy search minimizes h(n) ("expected" or "future" cost)
• "A* Search" combines the two:
  - Minimize f(n) = g(n) + h(n)
  - Accounts for the "past" and the "future"
  - Estimates the cheapest solution (complete path) through node n

    function A*-SEARCH(problem, h) returns a solution or failure
        return BEST-FIRST-SEARCH(problem, f)

Recap: Avoiding Repeated States using A* Search
• Is GRAPH-SEARCH optimal with A*? Try with TREE-SEARCH and GRAPH-SEARCH.
[Figure: example graph with nodes A, B, C, D, E, edge costs, and heuristic values h for each node.]
Graph Search. Step 1: Among B, C, E, choose C. Step 2: Among B, E, D, choose B. Step 3: Among D, E, choose E. (You are not going to select C again.)

Recap: Consistency (Monotonicity) of heuristic h
• A heuristic is consistent (or monotonic) provided
  - for any node n and any successor n' generated by action a with cost c(n,a,n'): h(n) ≤ c(n,a,n') + h(n')
  - akin to the triangle inequality.
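As a rough illustration of "minimize f(n) = g(n) + h(n)", here is a small Python sketch of A* on an explicit graph. The graph, the heuristic values, and the names `a_star`, `example_graph`, and `example_h` are hypothetical and are not the example from the slides; the sketch re-inserts a node whenever a cheaper path to it is found.

```python
import heapq


def a_star(graph, h, start, goal):
    """graph: node -> list of (neighbor, step_cost); h: node -> heuristic estimate.

    Returns (cost, path) for a cheapest path, or None if the goal is unreachable.
    """
    frontier = [(h[start], 0, start, [start])]  # entries are (f, g, node, path)
    best_g = {start: 0}  # cheapest known "past" cost g(n) per node
    while frontier:
        f, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_g.get(node, float("inf")):
            continue  # stale entry: a cheaper path to this node was found later
        for nxt, cost in graph.get(node, []):
            new_g = g + cost
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                heapq.heappush(frontier, (new_g + h[nxt], new_g, nxt, path + [nxt]))
    return None


# Hypothetical example; this h is consistent: h(n) <= c(n, a, n') + h(n') on every edge.
example_graph = {
    "S": [("A", 1), ("B", 4)],
    "A": [("B", 2), ("G", 6)],
    "B": [("G", 3)],
    "G": [],
}
example_h = {"S": 5, "A": 4, "B": 3, "G": 0}
```

With these inputs, `a_star(example_graph, example_h, "S", "G")` finds the path S, A, B, G with total cost 6.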

Backtracking / Branch-And-Bound

Backtracking / Branch-and-Bound. Optimisation problems are problems that have several valid solutions; the challenge is to find an optimal solution. How "optimal" is defined depends on the particular problem. Examples of optimisation problems are:

Traveling Salesman Problem (TSP). We are given a set of n cities, with the distances between all cities. A traveling salesman, who is currently staying in one of the cities, wants to visit all other cities and then return to his starting point, and he is wondering how to do this. Any tour of all cities would be a valid solution to his problem, but our traveling salesman does not want to waste time: he wants to find a tour that visits all cities and has the smallest possible length of all such tours. So in this case, optimal means: having the smallest possible length.

1-Dimensional Clustering. We are given a sorted list $x_1, \ldots, x_n$ of n numbers, and an integer k between 1 and n. The problem is to divide the numbers into k subsets of consecutive numbers (clusters) in the best possible way. A valid solution is now a division into k clusters, and an optimal solution is one that has the nicest clusters. We will define this problem more precisely later.

Set Partition. We are given a set V of n objects, each having a certain cost, and we want to divide these objects among two people in the fairest possible way. In other words, we are looking for a subdivision of V into two subsets $V_1$ and $V_2$ such that

$$\left| \sum_{v \in V_1} \mathrm{cost}(v) - \sum_{v \in V_2} \mathrm{cost}(v) \right|$$

is as small as possible.
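A minimal Python sketch of backtracking with a simple bound for the Set Partition problem follows. It is an illustration under stated assumptions, not the source's algorithm: the name `best_partition` is made up here, objects are identified by their index, and the pruning rule assumes all costs are non-negative.

```python
def best_partition(costs):
    """Split item indices into V1 and V2, minimising |cost(V1) - cost(V2)|.

    Returns (difference, V1 indices, V2 indices).
    """
    total = sum(costs)
    best = {"diff": float("inf"), "v1": []}

    def explore(i, v1, sum1):
        # Bound (assumes non-negative costs): once V1 is ahead of V2 by at
        # least the best difference found so far, adding items cannot help.
        if 2 * sum1 - total >= best["diff"]:
            return
        if i == len(costs):
            diff = abs(2 * sum1 - total)  # |sum1 - (total - sum1)|
            if diff < best["diff"]:
                best["diff"], best["v1"] = diff, list(v1)
            return
        v1.append(i)  # option 1: object i goes to V1
        explore(i + 1, v1, sum1 + costs[i])
        v1.pop()  # backtrack
        explore(i + 1, v1, sum1)  # option 2: object i goes to V2

    explore(0, [], 0)
    v1 = best["v1"]
    v2 = [i for i in range(len(costs)) if i not in v1]
    return best["diff"], v1, v2
```

For example, `best_partition([3, 1, 4, 2, 2])` finds a perfectly fair split with difference 0.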

Local Beam Search with k = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases:

a. Local beam search with k = 1.
   Answer: Local beam search with k = 1 is hill-climbing search.

b. Local beam search with one initial state and no limit on the number of states retained.
   Answer: Local beam search with k = ∞: strictly speaking, this doesn't make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once.

c. Simulated annealing with T = 0 at all times (and omitting the termination test).
   Answer: Ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1.

d. Simulated annealing with T = infinity at all times.
   Answer: Ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search.

e. Genetic algorithm with population size N = 1.
   Answer: If the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation.
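Answers (c) and (d) follow from the acceptance rule of simulated annealing. The sketch below assumes the usual rule in which an improving move (ΔE > 0) is always accepted and any other move is accepted with probability e^(ΔE/T); the function name `acceptance_probability` and the explicit handling of the T = 0 and T = ∞ limits are choices made here.

```python
import math


def acceptance_probability(delta_e, temperature):
    """Probability of accepting a move that changes the objective by delta_e."""
    if delta_e > 0:
        return 1.0  # uphill moves are always accepted
    if temperature == 0:
        # Limit T -> 0: downhill moves are rejected with probability 1.
        # Treating sideways moves (delta_e == 0) the same way is a convention
        # chosen for this sketch.
        return 0.0
    if math.isinf(temperature):
        return 1.0  # limit T -> infinity: every successor is accepted
    return math.exp(delta_e / temperature)
```

At T = 0 only improving successors are ever taken (first-choice hill climbing), and at T = ∞ every randomly generated successor is taken (a random walk).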

Guru Nanak Dev University Amritsar

FACULTY OF ENGINEERING & TECHNOLOGY. SYLLABUS FOR B.TECH. COMPUTER ENGINEERING (Credit Based Evaluation and Grading System) (SEMESTER: I to VIII). Session: 2020-21, Batch from Year 2020 to Year 2024. GURU NANAK DEV UNIVERSITY AMRITSAR

Note: (i) Copy rights are reserved. Nobody is allowed to print it in any form. Defaulters will be prosecuted. (ii) Subject to change in the syllabi at any time. Please visit the University website from time to time.

CSA1: B.TECH. (COMPUTER ENGINEERING) SEMESTER SYSTEM (Credit Based Evaluation and Grading System), Batch from Year 2020 to Year 2024

SCHEME

SEMESTER I

| S. No. | Course Code | Course Title | L | T | P | Credits |
|---|---|---|---|---|---|---|
| 1. | CEL120 | Engineering Mechanics | 3 | 1 | 0 | 4 |
| 2. | MEL121 | Engineering Graphics & Drafting Using AutoCAD | 3 | 0 | 1 | 4 |
| 3. | MTL101 | Mathematics-I | 3 | 1 | 0 | 4 |
| 4. | PHL183 | Physics | 4 | 0 | 1 | 5 |
| 5. | MEL110 | Introduction to Engg. Materials | 3 | 0 | 0 | 3 |
| 6. | | Elective-I | 2 | 0 | 0 | 2 |
| 7. | SOA-101 | Drug Abuse: Problem, Management and Prevention - Interdisciplinary Course (Compulsory) | 2 | 0 | 0 | 2 |
| | | Total Credits: | 20 | 2 | 2 | 24 |

List of Electives-I:

| S. No. | Course Code | Course Title | L | T | P | Credits |
|---|---|---|---|---|---|---|
| 1. | PBL121 | Punjabi (Compulsory) OR | 2 | 0 | 0 | 2 |
| 2. | PBL122* | Mudhli Punjabi (Basic Punjabi) OR | 2 | 0 | 0 | 2 |
| 3. | HSL101* | Punjab History & Culture (1450-1716) | 2 | 0 | 0 | 2 |

SEMESTER II

| S. No. | Course Code | Course Title | L | T | P | Credits |
|---|---|---|---|---|---|---|
| 1. | CYL197 | Engineering Chemistry | 3 | 0 | 1 | 4 |
| 2. | MTL102 | Mathematics-II | 3 | 1 | 0 | 4 |
| 3. | ECL119 | Basic Electrical & Electronics Engineering | 4 | 0 | 1 | 5 |
| 4. | CSL126 | Fundamentals of IT & Programming using Python | 2 | 1 | 1 | 4 |
| 5. | | | | | | |

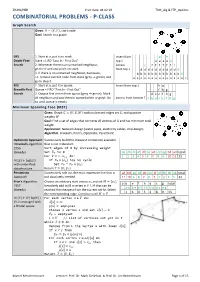

COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: G = (V, E), Start Node Goal: Search in a Graph

ZHAW/HSR, print date: 04.02.19, TSM_Alg & FTP_Optimiz

COMBINATORIAL PROBLEMS - P-CLASS

Graph Search
Given: G = (V, E), start node. Goal: search in a graph.

DFS (Depth-First Search). Stack = LIFO ("Last In - First Out"): insert from the top, access from the top.
1. Start at a, put it on the stack.
2. Whenever there is an unmarked neighbour, go there and put it on the stack.
3. If there is no unmarked neighbour, backtrack, i.e. remove the current node from the stack (grey ⇒ green), and go to step 2.
[Figure: successive stack contents for an example graph with nodes a to h.]

BFS (Breadth-First Search). Queue = FIFO ("First In - First Out"): insert from the top, access from the bottom.
1. Start at a, put it in the queue.
2. Output the first vertex from the queue (grey ⇒ green). Mark all its neighbours and put them in the queue (white ⇒ grey). Do so until the queue is empty.
[Figure: successive queue contents for the same example graph.]

Minimum Spanning Tree (MST)
Given: graph G = (V, E, W) with undirected edge set E and positive weights W.
Goal: find a set of edges that connects all vertices of G and has minimum total weight.
Application: network design (water pipes, electricity cables, chip design).
Algorithms: Kruskal's, Prim's, optimistic, pessimistic.
Optimistic approach (= Kruskal's algorithm): successively build the cheapest connection available that is not redundant.
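The three procedures summarised above can be sketched in Python as follows. This is an illustrative reading of the cheat sheet, not its own code: the function names, the adjacency-list input format, and the union-find helper used to detect redundant edges are assumptions made here.

```python
from collections import deque


def dfs(graph, start):
    """Depth-first search with an explicit stack; returns vertices in visiting order."""
    marked, order, stack = {start}, [start], [start]
    while stack:
        node = stack[-1]  # access from the top
        unmarked = [n for n in graph[node] if n not in marked]
        if unmarked:
            nxt = unmarked[0]  # go to an unmarked neighbour
            marked.add(nxt)
            order.append(nxt)
            stack.append(nxt)
        else:
            stack.pop()  # no unmarked neighbour: backtrack
    return order


def bfs(graph, start):
    """Breadth-first search with a FIFO queue; returns vertices in output order."""
    marked, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()  # output the first vertex in the queue
        order.append(node)
        for n in graph[node]:
            if n not in marked:  # mark neighbours and enqueue them
                marked.add(n)
                queue.append(n)
    return order


def kruskal(num_vertices, edges):
    """edges: list of (weight, u, v) with vertices 0..num_vertices-1; returns MST edges."""
    parent = list(range(num_vertices))

    def find(x):  # representative of the component containing x
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree = []
    for w, u, v in sorted(edges):  # cheapest connection first
        ru, rv = find(u), find(v)
        if ru != rv:  # not redundant: joins two different components
            parent[ru] = rv
            tree.append((w, u, v))
    return tree
```

The only difference between `dfs` and `bfs` is the container discipline (LIFO versus FIFO), which is exactly the contrast the cheat sheet draws.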

A System for Bidirectional Robotic Pathfinding

Portland State University, PDXScholar. Computer Science Faculty Publications and Presentations, Computer Science, 11-2012.

A System for Bidirectional Robotic Pathfinding. Tesca Fitzgerald, Portland State University. Part of the Artificial Intelligence and Robotics Commons.

Citation Details: Fitzgerald, Tesca, "A System for Bidirectional Robotic Pathfinding" (2012). Computer Science Faculty Publications and Presentations. 226. https://pdxscholar.library.pdx.edu/compsci_fac/226

A System for Bidirectional Robotic Pathfinding. Tesca K. Fitzgerald, Department of Computer Science, Portland State University, PO Box 751, Portland, OR 97207 USA. TR 12-02, November 2012.

Abstract. The accuracy of an autonomous robot's intended navigation can be impacted by environmental factors affecting the robot's movement. When sophisticated localizing sensors cannot be used, it is important for a pathfinding algorithm to provide opportunities for landmark usage during route execution, while balancing the efficiency of that path. Although current pathfinding algorithms may be applicable, they often disfavor paths that balance accuracy and efficiency needs. I propose a bidirectional pathfinding algorithm to meet the accuracy and efficiency needs of autonomous, navigating robots.

1. Introduction. While a multitude of pathfinding algorithms exist, pathfinding algorithms tailored for the robotics domain are less common. [...] navigation without expensive sensors. As low-cost navigating robots need to account for [...]

Beam Search for Integer Multi-Objective Optimization

Beam Search for integer multi-objective optimization. Thibaut Barthelemy, Sophie N. Parragh, Fabien Tricoire, Richard F. Hartl. University of Vienna, Department of Business Administration, Austria. {thibaut.barthelemy,sophie.parragh,fabien.tricoire,richard.hartl}@univie.ac.at

Abstract. Beam search is a tree search procedure where, at each level of the tree, at most w nodes are kept. This results in a metaheuristic whose solving time is polynomial in w. Popular for single-objective problems, beam search has received only little attention in the context of multi-objective optimization. By introducing the concepts of oracle and filter, we define a paradigm to understand multi-objective beam search algorithms. Its theoretical analysis engenders practical guidelines for the design of these algorithms. The guidelines, suitable for any problem whose variables are integers, are applied to address a bi-objective 0-1 knapsack problem. The solver obtained outperforms the existing non-exact methods from the literature.

1 Introduction. Everyday life decisions are often a matter of multi-objective optimization. Indeed, life is full of tradeoffs, as reflected for instance by discussions in parliaments or boards of directors. As a result, almost all cost-oriented problems of the classical literature give also rise to quality, durability, social or ecological concerns. Such goals are often conflicting. For instance, lower costs may lead to lower quality and vice versa. A generic approach to multi-objective optimization consists in computing a set of several good compromise solutions that decision makers discuss in order to choose one. Numerous methods have been developed to find the set of compromise solutions to multi-objective combinatorial problems.
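To make "at each level of the tree, at most w nodes are kept" concrete, here is a small Python sketch of beam search on a single-objective 0-1 knapsack. It is deliberately simpler than the paper's bi-objective setting and does not implement its oracle and filter concepts; the name `beam_search_knapsack` and the rule of ranking partial solutions by accumulated value are assumptions made for illustration.

```python
def beam_search_knapsack(items, capacity, width):
    """items: list of (value, weight). Returns (value, chosen item indices).

    Level i of the search tree decides whether item i is taken.
    """
    beam = [(0, 0, ())]  # partial solutions: (value, weight, chosen indices)
    for i, (value, weight) in enumerate(items):
        children = []
        for v, w, chosen in beam:
            children.append((v, w, chosen))  # branch 1: skip item i
            if w + weight <= capacity:  # branch 2: take item i if it fits
                children.append((v + value, w + weight, chosen + (i,)))
        # Keep at most `width` nodes at this level, ranked by value so far.
        children.sort(key=lambda s: s[0], reverse=True)
        beam = children[:width]
    best = max(beam, key=lambda s: s[0])
    return best[0], list(best[2])
```

With items `[(60, 10), (100, 20), (120, 30)]` and capacity 50, a width of 1 behaves greedily and returns value 160, while a width of 2 already reaches the optimum of 220; the work per level grows only linearly with the width.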

Lecture 9: Games I

Lecture 9: Games I. CS221 / Spring 2018 / Sadigh

Course plan. [Figure: course overview listing search problems, Markov decision processes, constraint satisfaction problems, adversarial games, and Bayesian networks under the categories reflex, states, variables, and logic, spanning "low-level intelligence" to "high-level intelligence", together with machine learning.]

• This lecture will be about games, which have been one of the main testbeds for developing AI programs since the early days of AI. Games are distinguished from the other tasks that we've considered so far in this class in that they make explicit the presence of other agents, whose utility is not generally aligned with ours. Thus, the optimal strategy (policy) for us will depend on the strategies of these agents. Moreover, their strategies are often unknown and adversarial. How do we reason about this?

A simple game. Example: game 1. You choose one of the three bins. I choose a number from that bin. Your goal is to maximize the chosen number.
Bins: A = {-50, 50}, B = {1, 3}, C = {-5, 15}

• Which bin should you pick? Depends on your mental model of the other player (me).
• If you think I'm working with you (unlikely), then you should pick A in hopes of getting 50. If you think I'm against you (likely), then you should pick B so as to guard against the worst case (get 1). If you think I'm just acting uniformly at random, then you should pick C so that on average things are reasonable (get 5 in expectation).

Roadmap: Games, expectimax; Minimax, expectiminimax; Evaluation functions; Alpha-beta pruning.

Game tree. Key idea: game tree. Each node is a decision point for a player.
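The three mental models of the other player in game 1 can be checked with a few lines of Python. The bin contents come from the slide; the helper name `best_bin` and the idea of passing the opponent model as a function are choices made here for illustration.

```python
from statistics import mean

bins = {"A": [-50, 50], "B": [1, 3], "C": [-5, 15]}


def best_bin(bins, opponent_model):
    """Pick the bin whose value is highest under a model of the second player.

    opponent_model maps the numbers in a bin to the value we expect to receive.
    """
    values = {name: opponent_model(numbers) for name, numbers in bins.items()}
    choice = max(values, key=values.get)  # our move: maximise over bins
    return choice, values[choice]


cooperative = best_bin(bins, max)   # opponent helps us
adversarial = best_bin(bins, min)   # opponent minimises (minimax)
random_play = best_bin(bins, mean)  # opponent picks uniformly at random (expectimax)
```

The three calls select bins A, B, and C with values 50, 1, and 5 respectively, matching the reasoning on the slide.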