Examining the Performance of Java Static Analyzers

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Oracle's Commitment to the Eclipse Community

Oracle’s Commitment to the Eclipse Community
An Oracle White Paper, March 2007

INTRODUCTION
Eclipse has gained strong market adoption for core Java development and is adding support for other languages as well. It enjoys great popularity, in part because it’s free, but also due to its extensibility via plug-ins and the strong ecosystem around it. The advent of emerging trends and technologies like Web 2.0 and SOA amplified the need for an integrated and comprehensive Java IDE that provides pre-packaged and tested support for all major Java EE 5 and Web services standards.

Oracle’s tools vision is “productivity with choice”. This means making application development for the Oracle platform as easy as possible regardless of what toolset developers employ, be it Oracle JDeveloper or Eclipse. Oracle’s JDeveloper provides a great out-of-the-box user experience and dramatically increases productivity for building Java Enterprise Edition (Java EE) and SOA based applications. For various reasons, some Oracle customers elect to use Eclipse for Java development. We believe those users should get similar productivity that other Oracle users are already enjoying with JDeveloper. That’s why Oracle chose to join the Eclipse Foundation as a Strategic Developer and Board Member, and contribute world class talent to lead a variety of Eclipse projects and address its customers' needs.

EVOLVING REQUIREMENTS
Three major enterprise computing trends are coming together to form a new platform for application development. These trends are Java EE 5, Service Oriented Architecture (SOA), and Web 2.0. Today, end users expect highly interactive user interfaces with real-time updates and desktop-like capabilities.

Enabling Application Lifecycle Development in JDeveloper

Enabling Application Lifecycle Development in JDeveloper
Susan Duncan, Oracle Corporation, United Kingdom

Keywords: Application Lifecycle Management, ALM, JDeveloper, Team Productivity Center, SCM, Versioning

Introduction
Application Lifecycle Management has been defined as “The process of delivering software as a continuously repeating cycle of inter-related steps.” It can be described as the management of the lifecycle development practices (requirements, build, test, change control, defect management etc.) integrated together through application of process, reporting, traceability and collaboration. The better this integration, the better the software. However, in the past many of these practices worked in isolation, in functional silos that did not lend themselves to collaboration. But collaboration has become increasingly necessary in the software development world where teams work across organizations, time zones, cultures and business functions.

Oracle Fusion Middleware encompasses a number of features to facilitate this mode of distributed working where collaboration is paramount, including remote deployment and debugging, a shared resource catalog, SCM system integration, built-in testing with JUnit, functional, load and test management with Oracle Application Quality Management Suite and lifecycle management of SOA composites with ClearApp. This paper concentrates on some of the practical ALM features that are available to the application developer using JDeveloper in her day-to-day working environment.

Versioning
One of the most obvious areas of ALM is versioning or source code management. It would be obvious to say that every development team’s process includes SCM. Generally there are two main types of versioning systems:

Lock – Modify – Unlock
Although a very safe and reliable system this is not necessarily best suited to a distributed development team.

Jalopy User's Guide v. 1.9.4

Jalopy - User’s Guide v. 1.9.4
Copyright © 2003-2010 TRIEMAX Software

Contents
Acknowledgments
Introduction
PART I Core
CHAPTER 1 Installation
1.1 System requirements
1.2 Prerequisites
1.3 Wizard Installation
1.3.1 Welcome
1.3.2 License Agreement
1.3.3 Installation Features
1.3.4 Online Help System (optional)
1.3.5 Settings Import (optional)
1.3.6 Configure plug-in Defaults
1.3.7 Confirmation
1.3.8 Installation
1.3.9 Finish
1.4 Silent Installation
1.5 Manual Installation
CHAPTER 2 Configuration
2.1 Overview
2.1.1 Preferences GUI
2.1.2 Settings files
2.2 Global
2.2.1 General
2.2.2 Misc
2.2.3 Auto
2.3 File Types
2.3.1 File types
2.3.2 File extensions
2.4 Environment
2.4.1 Custom variables
2.4.2 System variables
2.4.3 Local variables
2.4.4 Usage
2.4.5 Date/Time
2.5 Exclusions
2.5.1 Exclusion patterns
2.6 Messages
2.6.1 Categories
2.6.2 Logging
2.6.3 Misc
2.7 Repository
2.7.1 Searching the repository
2.7.2 Displaying info about the repository
2.7.3 Adding libraries to the repository
2.7.4 Removing the repository

Installation Guide for Oracle JDeveloper 11g Release 2 (11.1.2.1.0)

Oracle® Fusion Middleware Installation Guide for Oracle JDeveloper 11g Release 2 (11.1.2.1.0) E17074-02 September 2011 This document provides information on: ■ Section 1, "JDeveloper System Requirements" ■ Section 2, "JDeveloper Certification Information" ■ Section 3, "Installing the Oracle JDeveloper Studio Edition" ■ Section 4, "Installing the JDeveloper Java Edition" ■ Section 5, "Using JDeveloper on Windows" ■ Section 6, "Using JDeveloper on Linux and UNIX Systems" ■ Section 7, "Using JDeveloper on Mac OS X Platforms" ■ Section 8, "Migrating from a Previous Version" ■ Section 9, "Enabling JDeveloper Extensions" ■ Section 10, "Setting the User Home Directory" ■ Section 11, "Using JDeveloper in a Multiuser Environment" ■ Section 12, "Using Oracle WebLogic Server with JDeveloper" ■ Section 13, "JDeveloper Accessibility Information" ■ Section 14, "Uninstalling JDeveloper" ■ Section 15, "Oracle on the Web" ■ Section 16, "Documentation Accessibility" 1 JDeveloper System Requirements This release of Oracle JDeveloper is tested and supported on the specific versions Windows, Linux, and Mac OS X, as listed in Section 1.1, "Recommended CPU, Memory, Display, and Hard Drive Configurations". In addition, it is supported on any operating system that supports Java SE Development Kit (JDK) 6 Update 24 or later. 1.1 Recommended CPU, Memory, Display, and Hard Drive Configurations The following tables list the recommended CPU, memory, display, and hard drive requirements for the different operating systems: ■ Table 1, " Recommended CPU, Memory, Display, -

Developers’ Choice IDE Scorecard

Developers’ Choice IDE Scorecard
Definitive Rankings of the Top 11 IDEs by Over 1,200 Developers
June 2006
Evans Data Corp, 740 Front St, Santa Cruz, CA 95060, www.evansdata.com, (800) 831 3080

Inside This Report
Overview
Overall Usage
Feature Rankings
Further Information

Overview

Abstract and Methodology
In Spring 2006, Evans Data Corp conducted primary market research on IDEs, interviewing over 1200 developers worldwide and asking them to rate the top IDEs. Developers only rated the features of those IDEs that they had used. They were asked to rank sixteen different features commonly found in IDEs. Each feature could be ranked as “excellent”, “very good”, “adequate”, “needs improvement” or “N/A”. During processing, the first four rankings were assigned a numerical value according to their relative significance. The last value (“N/A”) was discarded. Values were then combined to produce a score for each element for every IDE and also for an overall total score.

“Eclipse is well on its way to becoming one of the most popular IDEs for any language.”

The top 11 IDEs ranked were:
Adobe/Macromedia Studio 8
Borland Delphi
Borland JBuilder
Eclipse
IBM Rational Application Developer
IBM WebSphere Studio
Microsoft Visual Studio .NET
NetBeans
Oracle JDeveloper
Sun Java Studio
Sybase PowerBuilder

Features and capabilities rated were:
Compiler/Interpreter
Debugger
Editor
Make/Build Functions
Documentation
Application Modeling Tools
Web Design Tools
Sample Applications
Profiler
Compiler Performance
Performance of Resulting Applications
Ease of Use
Ability to Integrate 3rd Party Tools
Availability of 3rd Party Tools
Quality of Technical Support Options
Size and Quality of Developer Community

In addition, developers were asked to rate the IDEs based on how well suited they are for creating a particular type of application (server-centric, desktop, thin-client, rich-client, and embedded systems).

Oracle® Fusion Middleware Installation Guide for Oracle JDeveloper 11g Release 1 (11.1.1)

Oracle® Fusion Middleware Installation Guide for Oracle JDeveloper 11g Release 1 (11.1.1) E13666-08 January 2011 This document provides information on: ■ Section 1, "Oracle JDeveloper System Requirements" ■ Section 2, "Installing the Oracle JDeveloper Studio Edition" ■ Section 3, "Installing the Oracle JDeveloper Java Edition" ■ Section 4, "Using Oracle JDeveloper on Windows" ■ Section 5, "Using Oracle JDeveloper on Linux and UNIX Systems" ■ Section 6, "Using Oracle JDeveloper on Mac OS X Platforms" ■ Section 7, "Migrating from a Previous Version" ■ Section 8, "Enabling Oracle JDeveloper Extensions" ■ Section 9, "Installing Additional Oracle Fusion Middleware Design Time Components" ■ Section 10, "Setting the User Home Directory" ■ Section 11, "Using Oracle JDeveloper in a Multiuser Environment" ■ Section 12, "Using Oracle WebLogic Server with Oracle JDeveloper" ■ Section 13, "Oracle JDeveloper Certification Information" ■ Section 14, "Oracle JDeveloper Accessibility Information" ■ Section 15, "Uninstalling Oracle JDeveloper" ■ Section 16, "Oracle on the Web" ■ Section 17, "Documentation Accessibility" 1 Oracle JDeveloper System Requirements This release of Oracle JDeveloper is tested and supported on the specific versions Windows, Linux, and Mac OS X, as listed in Section 1.1, "Recommended CPU, Memory, Display, and Hard Drive Configurations". In addition, it is supported on any operating system that supports Sun Java SE Development Kit (JDK) 6 Update 21 or later. ORACLE CONFIDENTIAL. 1.1 Recommended CPU, Memory, Display, and Hard Drive -

Monitoring Driver's Attention Level

VISVESVARAYA TECHNOLOGICAL UNIVERSITY “Jnana Sangama”, Belagavi– 590 018 A PROJECT REPORT ON “MONITORING DRIVER'S ATTENTION LEVEL” Submitted in partial fulfillment for the award of the degree of BACHELOR OF ENGINEERING IN COMPUTER SCIENCE AND ENGINEERING BY SISIR DAS K (1NH12CS118) SIDDHARTHKUMAR PATEL (1NH12CS117) Under the guidance of Ms.Pramilarani K (Senior Assistant Professor, Dept. of CSE, NHCE) DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING NEW HORIZON COLLEGE OF ENGINEERING (ISO-9001:2000 certified, Accredited by NAAC ‘A’, Permanently affiliated to VTU) Outer Ring Road, Panathur Post, Near Marathalli, Bangalore – 560103 NEW HORIZON COLLEGE OF ENGINEERING (ISO-9001:2000 certified, Accredited by NAAC ‘A’ Permanently affiliated to VTU) Outer Ring Road, Panathur Post, Near Marathalli, Bangalore-560 103 DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING CERTIFICATE Certified that the project work entitled “MONITORING DRIVER’S ATTENTION LEVEL” carried out by SISIR DAS K (1NH12CS118) and SIDDHARTHKUMAR PATEL (1NH12CS117) bonafide students of NEW HORIZON COLLEGE OF ENGINEERING in partial fulfilment for the award of Bachelor Of Engineering in Computer Science and Engineering of the Visvesvaraya Technological University, Belgavi during the year 2015-2016. It is certified that all corrections/suggestions indicated for Internal Assessment have been incorporated in the report deposited in the department library. The project report has been approved as it satisfies the academic requirements in respect of Project work prescribed for the said Degree. Name & Signature of Guide Name & Signature of HOD Signature of Principal (Ms. K Pramilarani) (Dr.Prashanth C.S.R.) (Dr.Manjunatha) External Viva Name of Examiner Signature with date 1. 2. 
ACKNOWLEDGEMENT The satisfaction and euphoria that accompany the successful completion of any task would be, but impossible without the mention of the people who made it possible, whose constant guidance and encouragement crowned our efforts with success. -

Curriculum Vitae General

Branko Kokanović Curriculum Vitae General • Location: Belgrade, Serbia • Date of birth: 19.06.1983. • Cell phone: +38169π (+38169 3.141592) • kokanovic.org • E-mail: [email protected] • GitHub: @stalker314314 Education • Faculty of Electrical Engineering, Department for Computer Science and Engineering, Belgrade University, Serbia. o Graduated on: Binding programming system Test with Moodle LMS with average grade 8.4. o (2002 – 2009) • Electro technical school "Nikola Tesla", telecommunication profile, Belgrade, Serbia. o Main subjects: Mathematics, Computer Science, Electronics, Analog and Digital Telecommunications. o Graduated on: FHSS and DSSS Spread Spectrum analysis o (1998 – 2002) Working experience • Microsoft – Principal software engineer (with a trip to lead role, worked on SQL core engine – predictable query performance and query optimization, as well as SQL Azure DB – survived implementing service from ground up) (2012 -) • RT-RK – Senior software engineer (embedded software development, kernel drivers writing, Assembler/C/C++, 300 developers) (2011 - 2012) • Mozzart Sports Betting – Software architect (Java based distributed software solution, SCRUM based development, 40 developers) (2007 - 2011), • MikroDisk – Mikro magazine give-away DVD content manager including template generator (Python/GTK, SQLite, Cheetah template engine), • Laboratory assistant at "Computer science" department, faculty of Electrical Engineering, Belgrade University (2003 – 2007), • Junior programmer at Inovacation Center, ETF. Worked on VB.NET information -

Oracle Fusion Development Capability Discussion Guide

Oracle Fusion Development Capability Discussion Guide
Last updated: 10/01/07

Oracle Introduction

Oracle's Profile:
Oracle was founded in 1977, with offices in 145+ countries.
As of 2007, Oracle employs ~75,000 people.
Is the leading enterprise software company.
Is No. 1 in database (DB), No. 2 in business intelligence (BI), and No. 3 in middleware (MW).
Claims $1 billion in MW sales, more than BEA.

Oracle MW Web site: www.oracle.com/products/middleware

Oracle's Strategic Goals:
Sell MW as extension of DB and applications.
Continuing acquisition strategy:
o Consolidate Enterprise Resource Planning (ERP), Customer Relationship Management (CRM), Supply Chain Management (SCM), and Business Intelligence (BI) market segments.
o Build vertical industry expertise.
o Fill MW gaps.

Oracle Fusion Development Offerings
Oracle Fusion Middleware includes all Oracle software other than applications or database. The first version of JDeveloper (1998) was based on a licensing of Borland’s JBuilder product. In 2001, Oracle rewrote the code to create the Java-based JDeveloper 9i. The 10g version (9.0.5) is the first to use Oracle Application Development Framework (ADF).

JDeveloper 10.1.3.2 includes the following new features:
o Oracle WebCenter extension provides tools and services to embed portlets, content, components, and web services directly into the JSF application.
o Drag and drop portlets: Drag portlets directly from the component palette onto a JSF page.
o Inter-component communication, which allows developers to enable different types of components to communicate with each other.
o Runtime Customization provides the ability to customize application behavior at runtime.

For more information on Oracle ADF Framework architecture,

JProfiler Manual

JProfiler Manual
© 2017 ej-technologies GmbH. All rights reserved.

Index
JProfiler help
How to order
A Help topics
A.1 Profiling
A.1.1 Profiling modes
A.1.2 Remote profiling
A.1.3 Behind the scenes
A.2 Configuration
A.2.1 Session settings
A.2.2 Method call recording
A.2.3 Configuring filters

Integrated Development Environments

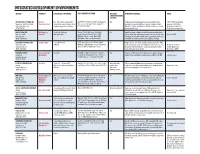

INTEGRATED DEVELOPMENT ENVIRONMENTS

Columns: Vendor; Product; Languages supported; Deployment platform; Includes code-versioning system?; Other key features; Price

ACTIVESTATE SOFTWARE INC.
Vancouver, British Columbia; www.activestate.com; (778) 786-1114
Product: Komodo Professional 3.5.3
Languages supported: Perl, PHP, Python, Ruby, XSLT; customizable context-aware editor supports many other languages
Deployment platform: Any PHP, Perl, Python or Ruby-based application server; client applications supported through GUI builder
Includes code-versioning system? Yes
Other key features: Fully-integrated XSLT support, including an XSLT editor, debugger and code intelligence. Komodo installs on Linux, Mac OS X, Solaris and Windows; all four platforms are included with each license
Price: $295; $495 bundled with one year of ActivePerl Professional or ActiveTcl Professional subscription service

BEA SYSTEMS INC.
San Jose, Calif.; www.bea.com; (408) 570-8000
Product: BEA Workshop Studio 3.0
Languages supported: Java, SOA, J2EE and Web applications
Deployment platform: Support for debugging and deployment requires Apache Jakarta Tomcat, BEA WebLogic Server, Caucho Resin, IBM WebSphere, JBoss or Mortbay Jetty
Includes code-versioning system? Yes
Other key features: AppXRay creates a database of all the artifacts and their hierarchy of relationships and interdependencies; benefits include code completion that reaches multiple levels, page variable names and their fields, real-time consistency and validation checking
Price: Starts at $899

BORLAND SOFTWARE CORP.
Cupertino, Calif.
Product: JBuilder 2006
Languages supported: Java J2EE 1.4 and JDK 5.0
Deployment platform: J2EE application servers, including BEA WebLogic, IBM WebSphere, Sybase EAServer,
Includes code-versioning system? Yes
Other key features: Eclipse-based; JSF, Struts and Web Services designers, UML code visualization,