Security Evaluation of Veracrypt

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Course 5 Lesson 2

This material is based on work supported by the National Science Foundation under Grant No. 0802551. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. C5L3S1 With the advent of the Internet, social networking, and open communication, a vast amount of information is readily available on the Internet for anyone to access. Despite this trend, computer users need to ensure private or personal communications remain confidential and are viewed only by the intended party. Private information such as social security numbers, school transcripts, medical histories, tax records, banking, and legal documents should be secure when transmitted online or stored locally. One way to keep data confidential is to encrypt it. Militaries, governments, industries, and any organization that wants to maintain privacy have used encryption techniques to secure information. Encryption helps to boost confidence in the security of online commerce and is necessary for secure transactions. In this lesson, you will review encryption and examine several tools used to encrypt data. You will also learn to encrypt and decrypt data. Anyone who wants to administer computer networks and work with private data must have some familiarity with basic encryption protocols and techniques. C5L3S2 You should know what will be expected of you when you complete this lesson. These expectations are presented as objectives. Objectives are short statements of expectations that tell you what you must be able to do, perform, learn, or adjust after reviewing the lesson. -

Public-Key Cryptography

Public Key Cryptography EJ Jung Basic Public Key Cryptography public key public key ? private key Alice Bob Given: Everybody knows Bob’s public key - How is this achieved in practice? Only Bob knows the corresponding private key Goals: 1. Alice wants to send a secret message to Bob 2. Bob wants to authenticate himself Requirements for Public-Key Crypto • Key generation: computationally easy to generate a pair (public key PK, private key SK) - Computationally infeasible to determine private key SK given only public key PK • Encryption: given plaintext M and public key PK, easy to compute ciphertext C = E_PK(M) • Decryption: given ciphertext C = E_PK(M) and private key SK, easy to compute plaintext M - Infeasible to compute M from C without SK - Decrypt(SK, Encrypt(PK, M)) = M Requirements for Public-Key Cryptography 1. Computationally easy for a party B to generate a pair (public key KUb, private key KRb) 2. Easy for sender to generate ciphertext: C = E_KUb(M) 3. Easy for the receiver to decrypt ciphertext using private key: M = D_KRb(C) = D_KRb[E_KUb(M)] Henric Johnson 4. Computationally infeasible to determine private key (KRb) knowing public key (KUb) 5. Computationally infeasible to recover message M, knowing KUb and ciphertext C 6. Either of the two keys can be used for encryption, with the other used for decryption: M = D_KRb[E_KUb(M)] = D_KUb[E_KRb(M)] Public-Key Cryptographic Algorithms • RSA and Diffie-Hellman • RSA - Ron Rivest, Adi Shamir and Len Adleman at MIT, in 1977. • RSA -
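The requirements listed in this excerpt (easy key generation, Decrypt(SK, Encrypt(PK, M)) = M, and either key usable for encryption) can be checked on a toy example. The following is a minimal sketch using textbook RSA with deliberately tiny primes; the primes, exponent, and message value are illustrative assumptions, not taken from the slides, and unpadded RSA with keys this small offers no security.

```python
# Toy textbook RSA, for illustration only (tiny primes, no padding).
p, q = 61, 53                  # assumed small primes
n = p * q                      # public modulus
phi = (p - 1) * (q - 1)        # Euler's totient of n
e = 17                         # public exponent, coprime with phi
d = pow(e, -1, phi)            # private exponent: modular inverse (Python 3.8+)

def apply_key(value: int, exponent: int) -> int:
    """Modular exponentiation: the same operation encrypts and decrypts."""
    return pow(value, exponent, n)

message = 65                   # plaintext encoded as an integer smaller than n

# Requirement: Decrypt(SK, Encrypt(PK, M)) = M
ciphertext = apply_key(message, e)
recovered = apply_key(ciphertext, d)

# Requirement 6: the keys also work in the opposite order
# (the basis of signing with the private key).
signed = apply_key(message, d)
verified = apply_key(signed, e)
```

Both `recovered` and `verified` equal `message`, which is the round-trip property the slides state in both key orders.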

Basic Cryptography

Basic cryptography • How cryptography works... • Symmetric cryptography... • Public key cryptography... • Online Resources... • Printed Resources... I VP R 1 © Copyright 2002-2007 Haim Levkowitz How cryptography works • Plaintext • Ciphertext • Cryptographic algorithm • Key Decryption Key Algorithm Plaintext Ciphertext Encryption Simple cryptosystem ... • ABCDEFGHIJKLMNOPQRSTUVWXYZ • DEFGHIJKLMNOPQRSTUVWXYZABC • Caesar Cipher • Simple substitution cipher • ROT-13 • rotate by half the alphabet • A => N B => O Keys and cryptosystems … • keys and keyspace ... • secret-key and public-key ... • key management ... • strength of key systems ... Keys and keyspace … • ROT: key is N • Brute force: 25 values of N • IDEA (international data encryption algorithm) in PGP: 2^128 numeric keys • 1 billion keys / sec ==> >10,781,000,000,000,000,000,000 years Symmetric cryptography • DES • Triple DES, DESX, GDES, RDES • RC2, RC4, RC5 • IDEA Key • Blowfish Plaintext Encryption Ciphertext Decryption Plaintext Sender Recipient DES • Data Encryption Standard • US NIST (‘70s) • 56-bit key • Good then • Not enough now (cracked June 1997) • Discrete blocks of 64 bits • Often w/ CBC (cipher block chaining) • Each block's encryption depends on contents of previous => detect missing block Triple DES, DESX, -
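The rotation ciphers and the keyspace arithmetic in this excerpt can be sketched in a few lines. This is a minimal illustration, assuming uppercase A-Z input; the function names `rot` and `brute_force` and the 365.25-day year are choices made here, not part of the slides.

```python
import string

ALPHABET = string.ascii_uppercase

def rot(text: str, n: int) -> str:
    """Shift each letter A-Z by n positions, wrapping around the alphabet.
    Characters outside A-Z are left unchanged."""
    k = n % 26
    shifted = ALPHABET[k:] + ALPHABET[:k]
    return text.upper().translate(str.maketrans(ALPHABET, shifted))

# Caesar cipher: key N = 3 maps ABC... to DEF...
caesar = rot(ALPHABET, 3)

# ROT-13 rotates by half the alphabet, so applying it twice restores the text.
rot13_twice = rot(rot("HELLO", 13), 13)

def brute_force(ciphertext: str) -> list[str]:
    """Try all 25 non-trivial shifts; one of them is the plaintext."""
    return [rot(ciphertext, -n) for n in range(1, 26)]

# Keyspace estimate: 2^128 keys tested at 1 billion keys per second.
SECONDS_PER_YEAR = 365.25 * 24 * 3600
years_for_128_bit_key = 2**128 / 1e9 / SECONDS_PER_YEAR
```

With these assumptions, `years_for_128_bit_key` comes out at roughly 1.08 × 10^22 years, in line with the order of magnitude quoted on the slide, while the rotation cipher falls to at most 25 guesses.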

Operating System Boot from Fully Encrypted Device

Masaryk University Faculty of Informatics Operating system boot from fully encrypted device Bachelor’s Thesis Daniel Chromik Brno, Fall 2016 Replace this page with a copy of the official signed thesis assignment and the copy of the Statement of an Author. Declaration Hereby I declare that this paper is my original authorial work, which I have worked out by my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Daniel Chromik Advisor: ing. Milan Brož Acknowledgement I would like to thank my advisor, Ing. Milan Brož, for his guidance and his patience of a saint. Another round of thanks I would like to send towards my family and friends for their support. Abstract The goal of this work is description of existing solutions for booting Linux and Windows from fully encrypted devices with Secure Boot. Before that, though, early boot process and bootloaders are described. A simple Linux distribution is then set up to boot from a fully encrypted device. And lastly, existing Windows encryption solutions are described. Keywords boot process, Linux, Windows, disk encryption, GRUB 2, LUKS Contents 1 Introduction 1.1 Thesis goals 1.2 Thesis structure 2 Boot Process Description 2.1 Early Boot Process 2.2 Firmware interfaces 2.2.1 BIOS – Basic Input/Output System 2.2.2 UEFI – Unified Extensible Firmware Interface 2.3 Partitioning tables 2.3.1 MBR – Master Boot Record -

A Quantitative Study of Advanced Encryption Standard Performance

United States Military Academy USMA Digital Commons West Point ETD 12-2018 A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility Daniel Hawthorne United States Military Academy, [email protected] Follow this and additional works at: https://digitalcommons.usmalibrary.org/faculty_etd Part of the Information Security Commons Recommended Citation Hawthorne, Daniel, "A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility" (2018). West Point ETD. 9. https://digitalcommons.usmalibrary.org/faculty_etd/9 This Doctoral Dissertation is brought to you for free and open access by USMA Digital Commons. It has been accepted for inclusion in West Point ETD by an authorized administrator of USMA Digital Commons. For more information, please contact [email protected]. A QUANTITATIVE STUDY OF ADVANCED ENCRYPTION STANDARD PERFORMANCE AS IT RELATES TO CRYPTOGRAPHIC ATTACK FEASIBILITY A Dissertation Presented in Partial Fulfillment of the Requirements for the Degree of Doctor of Computer Science By Daniel Stephen Hawthorne Colorado Technical University December, 2018 Committee Dr. Richard Livingood, Ph.D., Chair Dr. Kelly Hughes, DCS, Committee Member Dr. James O. Webb, Ph.D., Committee Member December 17, 2018 © Daniel Stephen Hawthorne, 2018 1 Abstract The advanced encryption standard (AES) is the premier symmetric key cryptosystem in use today. Given its prevalence, the security provided by AES is of utmost importance. Technology is advancing at an incredible rate, in both capability and popularity, much faster than its rate of advancement in the late 1990s when AES was selected as the replacement standard for DES. Although the literature surrounding AES is robust, most studies fall into either theoretical or practical yet infeasible. -

Advanced Encryption Standard Real-World Alternatives

Outline Multiple Encryption Birthday Attack Advanced Encryption Standard Real-World Alternatives CPSC 367: Cryptography and Security Michael Fischer Lecture 7 February 5, 2019 Thanks to Ewa Syta for the slides on AES CPSC 367, Lecture 7 1/58 Multiple Encryption Composition Group property Birthday Attack Advanced Encryption Standard AES Real-World Issues Alternative Private Key Block Ciphers Multiple Encryption Composition Composition of cryptosystems Encrypting a message multiple times with the same or different ciphers and keys seems to make the cipher stronger, but that's not always the case. The security of the composition can be difficult to analyze. For example, with the one-time pad, the encryption and decryption functions Ek and Dk are the same. The composition Ek ◦ Ek is the identity function! Composition within practical cryptosystems Practical symmetric cryptosystems such as DES and AES are built as a composition of simpler systems. Each component offers little security by itself, but when composed, the layers obscure the message to the point that it is difficult for an adversary to recover. The trick is to find ciphers that successfully hide useful information from a would-be attacker when used in concert. Double Encryption Double encryption is when a cryptosystem is composed with itself. -
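The one-time pad remark in this excerpt is easy to verify directly: because encryption and decryption are both XOR with the key, composing Ek with itself returns the original message. A minimal sketch, assuming byte strings and a key of the same length as the message; the helper name `xor_bytes` and the sample message are illustrative.

```python
import secrets

def xor_bytes(data: bytes, key: bytes) -> bytes:
    """One-time pad step: XOR each data byte with the matching key byte.
    The same function serves as both E_k and D_k."""
    if len(data) != len(key):
        raise ValueError("one-time pad key must be as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"ATTACK AT DAWN"
key = secrets.token_bytes(len(message))   # fresh random key, used only here

once = xor_bytes(message, key)            # E_k(m): the ciphertext
twice = xor_bytes(once, key)              # E_k(E_k(m)): "double encryption"
```

Here `twice` equals `message`, so encrypting twice under the same one-time-pad key adds nothing and in fact undoes the encryption, which is the point the lecture makes about composition not always strengthening a cipher.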

Low-Latency Hardware Masking with Application to AES

Low-Latency Hardware Masking with Application to AES Pascal Sasdrich, Begül Bilgin, Michael Hutter, and Mark E. Marson Rambus Cryptography Research, 425 Market Street, 11th Floor, San Francisco, CA 94105, United States {psasdrich,bbilgin,mhutter,mmarson}@rambus.com Abstract. During the past two decades there has been a great deal of research published on masked hardware implementations of AES and other cryptographic primitives. Unfortunately, many hardware masking techniques can lead to increased latency compared to unprotected circuits for algorithms such as AES, due to the high degree of nonlinear functions in their designs. In this paper, we present a hardware masking technique which does not increase the latency for such algorithms. It is based on the LUT-based Masked Dual-Rail with Pre-charge Logic (LMDPL) technique presented at CHES 2014. First, we show 1-glitch extended strong non-interference of a nonlinear LMDPL gadget under the 1-glitch extended probing model. We then use this knowledge to design an AES implementation which computes a full AES-128 operation in 10 cycles and a full AES-256 operation in 14 cycles. We perform practical side-channel analysis of our implementation using the Test Vector Leakage Assessment (TVLA) methodology and analyze univariate as well as bivariate t-statistics to demonstrate its DPA resistance level. Keywords: AES · Low-Latency Hardware · LMDPL · Masking · Secure Logic Styles · Differential Power Analysis · TVLA · Embedded Security 1 Introduction Masking countermeasures against side-channel analysis [KJJ99] have received a lot of attention over the last two decades. They are popular because they are relatively easy and inexpensive to implement, and their strengths and limitations are well understood using theoretical models and security proofs. -

The Data Encryption Standard (DES) – History

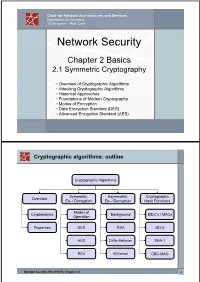

Chair for Network Architectures and Services Department of Informatics TU München – Prof. Carle Network Security Chapter 2 Basics 2.1 Symmetric Cryptography • Overview of Cryptographic Algorithms • Attacking Cryptographic Algorithms • Historical Approaches • Foundations of Modern Cryptography • Modes of Encryption • Data Encryption Standard (DES) • Advanced Encryption Standard (AES) Cryptographic algorithms: outline Cryptographic Algorithms Symmetric Asymmetric Cryptographic Overview En- / Decryption En- / Decryption Hash Functions Modes of Cryptanalysis Background MDC’s / MACs Operation Properties DES RSA MD-5 AES Diffie-Hellman SHA-1 RC4 ElGamal CBC-MAC Network Security, WS 2010/11, Chapter 2.1 Basic Terms: Plaintext and Ciphertext Plaintext P The original readable content of a message (or data). P_netsec = „This is network security“ Ciphertext C The encrypted version of the plaintext. C_netsec = „Ff iThtIiDjlyHLPRFxvowf“ encrypt key k1 C P key k2 decrypt In case of symmetric cryptography, k1 = k2. Basic Terms: Block cipher and Stream cipher Block cipher A cipher that encrypts / decrypts inputs of length n to outputs of length n given the corresponding key k. • n is block length Most modern symmetric ciphers are block ciphers, e.g. AES, DES, Twofish, … Stream cipher A symmetric cipher that generates a random bitstream, called key stream, from the symmetric key k. Ciphertext = key stream XOR plaintext Cryptographic algorithms: overview -

Investigating Profiled Side-Channel Attacks Against the DES Key

IACR Transactions on Cryptographic Hardware and Embedded Systems ISSN 2569-2925, Vol. 2020, No. 3, pp. 22–72. DOI:10.13154/tches.v2020.i3.22-72 Investigating Profiled Side-Channel Attacks Against the DES Key Schedule Johann Heyszl1[0000−0002−8425−3114], Katja Miller1, Florian Unterstein1[0000−0002−8384−2021], Marc Schink1, Alexander Wagner1, Horst Gieser2, Sven Freud3, Tobias Damm3[0000−0003−3221−7207], Dominik Klein3[0000−0001−8174−7445] and Dennis Kügler3 1 Fraunhofer Institute for Applied and Integrated Security (AISEC), Germany, [email protected] 2 Fraunhofer Research Institution for Microsystems and Solid State Technologies (EMFT), Germany, [email protected] 3 Bundesamt für Sicherheit in der Informationstechnik (BSI), Germany, [email protected] Abstract. Recent publications describe profiled single trace side-channel attacks (SCAs) against the DES key-schedule of a “commercially available security controller”. They report a significant reduction of the average remaining entropy of cryptographic keys after the attack, with surprisingly large, key-dependent variations of attack results, and individual cases with remaining key entropies as low as a few bits. Unfortunately, they leave important questions unanswered: Are the reported wide distributions of results plausible - can this be explained? Are the results device-specific or more generally applicable to other devices? What is the actual impact on the security of 3-key triple DES? We systematically answer those and several other questions by analyzing two commercial security controllers and a general purpose microcontroller. We observe a significant overall reduction and, importantly, also observe a large key-dependent variation in single DES key security levels, i.e. -

Block Ciphers and the Data Encryption Standard

Lecture 3: Block Ciphers and the Data Encryption Standard Lecture Notes on “Computer and Network Security” by Avi Kak ([email protected]) January 26, 2021 3:43pm ©2021 Avinash Kak, Purdue University Goals: • To introduce the notion of a block cipher in the modern context. • To talk about the infeasibility of ideal block ciphers. • To introduce the notion of the Feistel Cipher Structure. • To go over DES, the Data Encryption Standard. • To illustrate important DES steps with Python and Perl code. CONTENTS 3.1 Ideal Block Cipher • 3.1.1 Size of the Encryption Key for the Ideal Block Cipher • 3.2 The Feistel Structure for Block Ciphers • 3.2.1 Mathematical Description of Each Round in the Feistel Structure • 3.2.2 Decryption in Ciphers Based on the Feistel Structure • 3.3 DES: The Data Encryption Standard • 3.3.1 One Round of Processing in DES • 3.3.2 The S-Box for the Substitution Step in Each Round • 3.3.3 The Substitution Tables • 3.3.4 The P-Box Permutation in the Feistel Function • 3.3.5 The DES Key Schedule: Generating the Round Keys • 3.3.6 Initial Permutation of the Encryption Key • 3.3.7 Contraction-Permutation that Generates the 48-Bit Round Key from the 56-Bit Key • 3.4 What Makes DES a Strong Cipher (to the Extent It is a Strong Cipher) • 3.5 Homework Problems 3.1 IDEAL BLOCK CIPHER In a modern block cipher (but still using a classical encryption method), we replace a block of N bits from the plaintext with a block of N bits from the ciphertext. -

Crypto Wars of the 1990s

Danielle Kehl, Andi Wilson, and Kevin Bankston DOOMED TO REPEAT HISTORY? LESSONS FROM THE CRYPTO WARS OF THE 1990S CYBERSECURITY INITIATIVE | June 2015 © 2015 NEW AMERICA This report carries a Creative Commons license, which permits non-commercial re-use of New America content when proper attribution is provided. This means you are free to copy, display and distribute New America’s work, or include our content in derivative works, under the following conditions: ATTRIBUTION. You must clearly attribute the work to New America, and provide a link back to www.newamerica.org. NONCOMMERCIAL. You may not use this work for commercial purposes without explicit prior permission from New America. SHARE ALIKE. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one. For the full legal code of this Creative Commons license, please visit creativecommons.org. If you have any questions about citing or reusing New America content, please contact us. AUTHORS Danielle Kehl, Senior Policy Analyst, Open Technology Institute Andi Wilson, Program Associate, Open Technology Institute Kevin Bankston, Director, Open Technology Institute ABOUT THE OPEN TECHNOLOGY INSTITUTE The Open Technology Institute at New America is committed to freedom and social justice in the digital age. To achieve these goals, it intervenes in traditional policy debates, builds technology, and deploys tools with communities. OTI brings together a unique mix of technologists, policy experts, lawyers, community organizers, and urban planners to examine the impacts of technology and policy on people, commerce, and communities. ACKNOWLEDGEMENTS The authors would like to thank Hal Abelson, Steven Bellovin, Jerry Berman, Matt Blaze, Alan Davidson, Joseph Hall, Lance Hoffman, Seth Schoen, and Danny Weitzner -

Prevention of Coldboot Attack on Linux Systems Measures to Prevent Coldboot Attack

Special Issue - 2017 International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 ICIATE - 2017 Conference Proceedings Prevention of Coldboot Attack on Linux Systems Measures to Prevent Coldboot Attack Siddhesh Patil, Information Technology, Atharva College of Engineering, Mumbai University, Mumbai, India. Ekta Patel, Information Technology, Atharva College of Engineering, Mumbai University, Mumbai, India. Nutan Dhange, Assistant Professor, Information Technology, Atharva College of Engineering, Mumbai University, Mumbai, India. Abstract— Contrary to the popular belief, DDR type RAM modules retain stored memory even after power is cut off. Provided physical access to the cryptosystem, a hacker or a forensic specialist can retrieve information stored in the RAM by installing it on another system and booting from a USB drive to take a RAM dump. With adequate disassembly and analytic tools, this information stored in the RAM dump can be deciphered. Hackers thrive on such special-case attack techniques to gain access to systems with sensitive information. An unencrypted RAM module will contain the decryption key ... boot attack techniques. We implement measures to ensure that data stays secure on system shutdown. Data security is critical for most business and even home computer users. RAM is used to store non-persistent information. When an application is in use, user-specific or application-specific data may be stored in RAM. This data can be sensitive information like client information, payment details, personal files, bank account details, etc. All this information, if it falls into the wrong hands, can be potentially dangerous. The goal of most unethical hackers / attackers is to disrupt the services and to steal information.