Bassok, Miriam Learning from Examples Via Self-Explanations

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

-

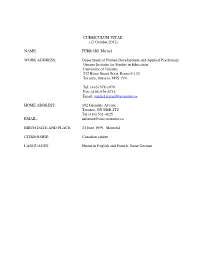

CV 10 October 2012

CURRICULUM VITAE (12 October 2012) NAME: FERRARI, Michel WORK ADDRESS: Department of Human Development and Applied Psychology Ontario Institute for Studies in Education University of Toronto 252 Bloor Street West, Room 9-132 Toronto, Ontario, M5S 1V6 Tel: (416) 978-1070 Fax: (416) 926-4713 Email: [email protected] HOME ADDRESS: 102 Glendale Avenue Toronto, ON M6R 2T2 Tel (416) 531-4825 EMAIL: [email protected] BIRTH DATE AND PLACE: 23 June 1959, Montréal CITIZENSHIP: Canadian citizen LANGUAGES: Fluent in English and French; Some German DEGREES Concordia University Western Society & Culture B.A. 1983 Université du Québec à Montréal (UQAM) Psychology B.A. (Core courses) 1986 Université du Québec à Montréal (UQAM) Psychology Master's 1989 Université du Québec à Montréal (UQAM) Psychology Doctorate 1994 THESES Ferrari, M. (1989). Rôle de l'autorégulation dans la performance motrice d'experts et de novices (The role of self-regulation in expert and novice motor performance). Unpublished master's thesis. Université du Québec à Montréal (UQAM), Montréal. Ferrari, M. (1994). L’amélioration de l’autorégulation durant l’apprentissage d’une tâche motrice (Improving self-regulation during motor learning). Unpublished doctoral dissertation. Université du Québec à Montréal (UQAM), Montréal. EXPERIENCE Research Positions Research Assistant at the Laboratory of Human Ethology (06/88 to 12/88, and 09/89 to 12/89, under the supervision of Fred Strayer). Participation in all aspects of a study concerning mother-child socio-affective relations. Tasks included: filming and coding videotaped data; developing a coding scheme to observe cognitive and affective mother-child interactions; statistical analyses; preparing scientific communications. -

Conference Proceedings from the Annual Carnegie Cognition Symposium (10th, Vail, Colorado, June 2-8, 1974)

DOCUMENT RESUME ED 123 584 CS 002 668 AUTHOR Klahr, David, Ed. TITLE Cognition and Instruction; Conference Proceedings from the Annual Carnegie Cognition Symposium (10th, Vail, Colorado, June 2-8, 1974). INSTITUTION Carnegie-Mellon Univ., Pittsburgh, Pa. Dept. of Psychology. SPONS AGENCY Office of Naval Research, Washington, D.C. Personnel and Training Research Programs Office. PUB DATE May 76 CONTRACT N00014-73-C-04^5 NOTE 356p.; Some parts of text may not reproduce clearly due to smallness of type. EDRS PRICE MF-$0.83 HC-$19.41 Plus Postage. DESCRIPTORS *Cognitive Development; *Cognitive Processes; Comprehension; Conference Reports; Educational Psychology; *Instruction; *Instructional Design; *Learning; Memory; Problem Solving; *Research ABSTRACT This book, containing conference papers and a summary of activities, focuses on the contributions which current research in cognitive psychology can make to the solution of problems in instructional design. The first three parts of the book include sets of research contributions followed by discussions: part one deals with different strategies for instructional research, part two concerns process and structure in learning, and part three concentrates on the processes that underlie the comprehension of verbal instructions. The fourth part contains three chapters that offer critiques, syntheses, and evaluations of various aspects of the preceding chapters. A list of references and author and subject indexes are included. (JM) Documents acquired by ERIC include many informal unpublished materials not available from other sources. ERIC makes every effort to obtain the best copy available. Nevertheless, items of marginal reproducibility are often encountered and this affects the quality of the microfiche and hardcopy reproductions ERIC makes available via the ERIC Document Reproduction Service (EDRS). -

Introduction to Summit Learning

The Research That Supports Summit Learning: Summit Learning was developed by Summit Public Schools over the course of 15 years, in partnership with nationally acclaimed learning scientists, researchers, and academics. [Partner logos: PERTS; Center for Education Policy Research, Harvard University; National Equity Project; Mindset Scholars Network; Yale Center for Emotional Intelligence; Buck Institute for Education; SRI Education; UChicago Consortium on School Research] Dig into the research with the Science of Summit white paper: summitlearning.org/research Designing for Student Success: [Diagram: Student Outcomes; Summit Learning Components (Mentoring, Projects, Self-Direction); School Design] The student experience is defined by specific design choices that help students achieve three key outcomes. Students demonstrate proficiency in the following three outcomes: essential and transferable lifelong skills; understanding and application of complex, challenging facts and concepts; and mindsets and behaviors that support academic achievement and well-being. The Components of Summit Learning: Mentoring: Students meet 1:1 with a dedicated mentor who knows them deeply and supports them in setting and achieving their short- and long-term goals. Self-Direction: Students are guided through a learning cycle that develops self-direction by teaching them how to set goals, make plans, demonstrate their skills and knowledge, and reflect. Projects: Students apply their acquired knowledge, skills and habits to projects that prepare them for the real-world scenarios they'll encounter in life after school. -

Celebrating 50 Years of LRDC (PDF)

UNIVERSITY OF PITTSBURGH Celebrating 50 Years of LRDC This report was published in 2014 by the University of Pittsburgh Learning Research and Development Center. THIS REPORT CELEBRATES THE UNIVERSITY OF PITTSBURGH LEARNING RESEARCH AND DEVELOPMENT CENTER’S (LRDC) 50 YEARS AS A LEADING INTERDISCIPLINARY CENTER FOR RESEARCH ON LEARNING AND EDUCATION. IT PROVIDES GLIMPSES OF LRDC OVER THE YEARS AND HIGHLIGHTS SOME OF THE EXCITING WORK THAT OCCUPIES OUR CURRENT RESEARCH AND DEVELOPMENT AGENDA. The Center’s interconnected programs of research and development have reflected its mission of stimulating interaction between research and practice across a broad spectrum of problems, from the neural basis of learning to the development of intelligent tutors to educational policy. Among research institutions in learning and education, this interconnected breadth is unique. The Center’s research has been equally wide-ranging in the domains of learning it has studied. Reading, mathematics, and science—staples of education—have been a continuing focus over much of LRDC’s 50 years. However, the Center also has addressed less-studied learning domains (e.g., history, geography, avionics, and law) as well as the reasoning and intellectual abilities that serve learning across domains. Moreover, social settings for learning, including those outside schools; teaching effectiveness; and technology for learning are all part of LRDC’s research story. LRDC’s ability to sustain research programs across these diverse, intersecting problems owes much to the cooperation of its partnering schools and departments in the University. The leadership of the University of Pittsburgh has made possible what is often very difficult: a research center that has been able to effectively pursue truly cross-disciplinary research programs. -

Michelene (Micki) T

Michelene (Micki) T. H. Chi Curriculum Vitae June 21, 2019 [older] PERSONAL DATA Birth Place: Chiang-Mai, Thailand Immigrant: From Indonesia (now a U.S. citizen) Home Address Office Address Email & Phone 1770 E Carver Rd Learning and Cognition Lab [email protected] Tempe, AZ 85284 Payne Hall, Room 122F Office: 480-727-0041 Home phone: 480-268-9188 1000 S Forest Mall Tempe, AZ 85287-2111 EDUCATION 1970 B.Sc. in Mathematics, Carnegie-Mellon University 1975 Ph.D. in Psychology, Carnegie-Mellon University Thesis: The Development of Short-term Memory Capacity Committee: David Klahr, Patricia Carpenter, and Herbert Simon (Nobel Laureate) RESEARCH AND TEACHING POSITIONS 1970-1974 NIE Trainee, supervised by Prof. David Klahr, Cognitive Developmental Group, Dept. of Psychology, Carnegie Mellon University. 1974-1975 Post-Doctoral Trainee, The Experimental Group, supervised by Profs. Mike Posner & Steve Keele, Department of Psychology, University of Oregon 1975-1977 Post-Doctoral Fellow, supervised by Prof. Robert Glaser, Learning Research and Development Center (LRDC), University of Pittsburgh 1977-2008 Research Associate to Senior Scientist Learning Research and Development Center, University of Pittsburgh 1982-1990 Assistant to Associate to Full Professor, Department of Psychology, University of Pittsburgh 1990-2008 Professor of Psychology, Department of Psychology, University of Pittsburgh 1994-1998 Cognitive Program Chair, Department of Psychology, University of Pittsburgh 1996-2001 Adjunct Faculty Member, Section of Medical Informatics, -

Toward the Thinking Curriculum: Current Cognitive Research

DOCUMENT RESUME ED 328 871 CS 009 714 AUTHOR Resnick, Lauren B., Ed.; Klopfer, Leopold E., Ed. TITLE Toward the Thinking Curriculum: Current Cognitive Research. 1989 ASCD Yearbook. INSTITUTION Association for Supervision and Curriculum Development, Alexandria, Va. REPORT NO ISBN-0-87120-156-9; ISSN-1042-9018 PUB DATE 89 NOTE 231p. AVAILABLE FROM Association for Supervision and Curriculum Development, 1250 N. Pitt St., Alexandria, VA 22314-1403 ($15.95). PUB TYPE Reports - Research/Technical (143) -- Collected Works - Serials (022) EDRS PRICE MF01 Plus Postage. PC Not Available from EDRS. DESCRIPTORS *Computer Assisted Instruction; *Critical Thinking; *Curriculum Development; Curriculum Research; Higher Education; Independent Reading; *Mathematics Instruction; Problem Solving; *Reading Comprehension; *Science Instruction; Writing Research IDENTIFIERS *Cognitive Research; Knowledge Acquisition ABSTRACT A project of the Center for the Study of Learning at the University of Pittsburgh, this yearbook combines the two major trends/concerns impacting the future of educational development for the next decade: knowledge and thinking. The yearbook comprises the following chapters: (1) "Toward the Thinking Curriculum: An Overview" (Lauren B. Resnick and Leopold E. Klopfer); (2) "Instruction for Self-Regulated Reading" (Annemarie Sullivan Palincsar and Ann L. Brown); (3) "Improving Practice through Understanding Reading" (Isabel L. Beck); (4) "Teaching Mathematics Concepts" (Rochelle G. Kaplan and others); (5) "Teaching Mathematical Thinking and Problem Solving" (Alan H. Schoenfeld); (6) "Research on Writing: Building a Cognitive and Social Understanding of Composing" (Glynda Ann Hull); (7) "Teaching Science for Understanding" (James A. Minstrell); (8) "Research on Teaching Scientific Thinking: Implications for Computer-Based Instruction" (Jill H. Larkin and Ruth W. Chabay); and (9) "A Perspective on Cognitive Research and Its Implications for Instruction" (John D. -

Stephanie Danell Teasley

Stephanie Danell Teasley Work Address: 4433 North Quad 105 S. State St. Ann Arbor, MI 48109-1285 Phone: (734) 763-8124; Fax: (734) 647-8045 Email: [email protected] Education & Experience Degrees Awarded Ph.D.: 1992, Learning, Development, and Cognition; Department of Psychology, University of Pittsburgh, Pittsburgh, PA. Dissertation title: Communication and collaboration: The role of talk in children’s peer collaborations. Committee: Lauren B. Resnick (Chair), Michelene Chi, David Klahr, & Leona Schauble B.A.: 1981, Psychology, Kalamazoo College, Kalamazoo, MI. Academic Positions 09/11 – present Research Professor. School of Information University of Michigan, Ann Arbor, MI. 12/02 – present Director. Learning, Education and Design (LED) Lab (formerly USE Lab) University of Michigan, Ann Arbor, MI. 08/06 – 09/12 Director. Doctoral Program, School of Information University of Michigan, Ann Arbor, MI. 06/11 – 01/12 Visiting Scholar. Department of Human Centered Design & Engineering College of Engineering, University of Washington, Seattle, WA 11/03 – 08/11 Research Associate Professor. School of Information University of Michigan, Ann Arbor, MI. 09/01 – 11/03 Senior Associate Research Scientist. School of Information University of Michigan, Ann Arbor, MI. 03/96 – 09/01 Senior Assistant Research Scientist. Collaboratory for Research on Electronic Work School of Information, University of Michigan, Ann Arbor, MI. 09/94 – 09/96 Visiting Scholar. Psychology Department University of Michigan, Ann Arbor, MI. 06/92 – 09/94 Postdoctoral Research Fellow. Psychology Department University of Michigan, Ann Arbor, MI. Scholarship Citation metrics: http://scholar.google.com/citations?user=2b3mJYcAAAAJ&hl=en Note. Early publications include those under former name, S. -

Introduction to “New Conceptualizations of Transfer of Learning”

HEDP #695710, VOL 47, ISS 3 Introduction to “New Conceptualizations of Transfer of Learning” Robert L. Goldstone and Samuel B. Day QUERY SHEET This page lists questions we have about your paper. The numbers displayed at left can be found in the text of the paper for reference. In addition, please review your paper as a whole for correctness. Q1. Au: Is “Susquehanna University” the correct affiliation for Dr. Day? The email address we have for him is for Indiana University. Q2. Au: Leeper (1935) does not have a matching reference. Provide complete reference or remove citation. Q3. Au: Ball (1999): Provide reference or remove citation. Q4. Au: Chi (2005): Provide complete reference or remove citation. Q5. Au: Provide page number of illustration in Leeper 1935, name of copyright holder (the publisher of The Pedagogical Seminary and Journal of Genetic Psychology), and year of copyright. Q6. Au: Your original manuscript said Figure 2 was “reprinted with permission from the publisher.” Please provide a copy of the communication from the publisher granting you permission to reproduce this figure. Q7. Au: Nokes-Malach & Belenky, in press, does not have a matching reference. Provide complete reference or remove citation. Q8. Au: Chi (2012): Provide complete reference or remove citation. Q9. Au: Ball (2011) does not have a matching text citation; please cite in text or delete. Q10. Au: Schwartz et al. (2007): Please provide chapter page range. TABLE OF CONTENTS LISTING The table of contents for the journal will list your paper exactly as it appears below: Introduction to “New Conceptualizations of Transfer of Learning” Robert L. -

Deliberation-Based Virtual Team Formation, Discussion Mining and Support Miaomiao Wen September 2016

August 30, 2016 DRAFT Investigating Virtual Teams in Massive Open Online Courses: Deliberation-based Virtual Team Formation, Discussion Mining and Support Miaomiao Wen September 2016 School of Computer Science Carnegie Mellon University Pittsburgh, PA 15213 Thesis Committee: Carolyn P. Rosé (Carnegie Mellon University), Chair James Herbsleb (Carnegie Mellon University) Steven Dow (University of California, San Diego) Anne Trumbore (Wharton Online Learning Initiatives) Candace Thille (Stanford University) Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Copyright © 2016 Miaomiao Wen Keywords: Massive Open Online Course, MOOC, virtual team, transactivity, online learning Abstract To help learners foster the competencies of collaboration and communication in practice, there has been interest in incorporating a collaborative team-based learning component in Massive Open Online Courses (MOOCs) ever since the beginning. Most researchers agree that simply placing students in small groups does not guarantee that learning will occur. In previous work, team formation in MOOCs occurs through personal messaging early in a course and is typically based on scant learner profiles, e.g. demographics and prior knowledge. Since MOOC students have diverse backgrounds and motivations, there has been limited success in the self-selected or randomly assigned MOOC teams. Being part of an ineffective or dysfunctional team may well be inferior to independent study in promoting learning and can lead to frustration. This dissertation studies how to coordinate team-based learning in MOOCs with a learning science concept, namely Transactivity. A transactive discussion is one where participants elaborate, build upon, question or argue against previously presented ideas [20]. -

Michelene T.H

Michelene (Micki) T. H. Chi Curriculum Vitae February 18, 2019 [older] PERSONAL DATA Birth Place: Chiang-Mai, Thailand Immigrant: From Indonesia (now a U.S. citizen) Home Address Office Address Email & Phone 1770 E Carver Rd Learning and Cognition Lab [email protected] Tempe, AZ 85284 Payne Hall, Room 122F Office: 480-727-0041 Home phone: 480-268-9188 1000 S Forest Mall Tempe, AZ 85287-2111 EDUCATION 1970 B.Sc. in Mathematics, Carnegie-Mellon University 1975 Ph.D. in Psychology, Carnegie-Mellon University Thesis: The Development of Short-term Memory Capacity Committee: David Klahr, Patricia Carpenter, and Herbert Simon (Nobel Laureate) RESEARCH AND TEACHING POSITIONS 1970-1974 NIE Trainee, supervised by Prof. David Klahr, Cognitive Developmental Group, Dept. of Psychology, Carnegie Mellon University. 1974-1975 Post-Doctoral Trainee, The Experimental Group, supervised by Profs. Mike Posner & Steve Keele, Department of Psychology, University of Oregon 1975-1977 Post-Doctoral Fellow, supervised by Prof. Robert Glaser, Learning Research and Development Center (LRDC), University of Pittsburgh 1977-2008 Research Associate to Senior Scientist Learning Research and Development Center, University of Pittsburgh 1982-1990 Assistant to Associate to Full Professor, Department of Psychology, University of Pittsburgh 1990-2008 Professor of Psychology, Department of Psychology, University of Pittsburgh 1994-1998 Cognitive Program Chair, Department of Psychology, University of Pittsburgh 1996-2001 Adjunct Faculty Member, Section of Medical Informatics, -

National Center for Education Research Publication Handbook

National Center for Education Research Publication Handbook Publications from funded education research grants FY 2002 to FY 2013 November 2013 Since its inception in 2002, the National Center for Education Research (NCER) in the Institute of Education Sciences (IES) has funded over 700 education research grants and over 60 education training grants. The research grants have supported exploratory research to build theory or generate hypotheses on factors that may affect educational outcomes, development and innovation research to create or refine academic interventions, evaluation studies to test the efficacy and effectiveness of interventions, and measurement work to help develop more accurate and valid assessments. The training grants have helped prepare the next generation of education researchers. NCER's education research grantees have focused on the needs of a wide range of students, from pre-kindergarten through postsecondary and adult education, and have tackled a variety of topic areas. The portfolio of research includes cognition, social and behavioral research, math, science, reading, writing, school systems and policies, teacher quality, and statistical and research methods, to name a few. Each year, our grantees are contributing to the wealth of knowledge across disciplines. What follows is a listing of the publications that these grants have contributed along with a full listing of all the projects funded through NCER's education research grant programs from 2002 to 2013. The publications are presented according to the topic area and arranged by the year that the grant was awarded. Where applicable, we have noted related grant projects and project websites and have provided links to publications that are listed in the IES ERIC database. -

Michelene T.H

Michelene (Micki) T. H. Chi Curriculum Vitae [current] July 23, 2020 (older) PERSONAL DATA Birth Place: Chiang-Mai, Thailand Immigrant: From Indonesia (naturalized U.S. citizen at age 16) Home Address Office Address Email & Phone 1770 E Carver Rd Learning and Cognition Lab [email protected] Tempe, AZ 85284 Payne Hall, Room 122F Office: 480-727-0041 Home phone: 480-268-9188 1000 S Forest Mall Tempe, AZ 85287-2111 EDUCATION 1970 B.Sc. in Mathematics, Carnegie-Mellon University 1975 Ph.D. in Psychology, Carnegie-Mellon University Thesis: The Development of Short-term Memory Capacity Committee: David Klahr, Patricia Carpenter, and Herbert Simon (Nobel Laureate in Economics) RESEARCH AND TEACHING POSITIONS 1970-1974 NIE Trainee, supervised by Prof. David Klahr, Cognitive Developmental Group, Dept. of Psychology, Carnegie Mellon University. 1974-1975 Post-Doctoral Trainee, The Experimental Group, supervised by Profs. Mike Posner & Steve Keele, Department of Psychology, University of Oregon 1975-1977 Post-Doctoral Fellow, supervised by Prof. Robert Glaser, Learning Research and Development Center (LRDC), University of Pittsburgh 1977-2008 Research Associate to Senior Scientist LRDC, University of Pittsburgh 1982-1990 Assistant to Associate to Full Professor, Department of Psychology, University of Pittsburgh 1990-2008 Professor of Psychology, Department of Psychology, University of Pittsburgh 1994-1998 Cognitive Program Chair, Department of Psychology, University of Pittsburgh 1996-2001 Adjunct Faculty Member, Section of Medical Informatics,