Data Analytics in Sports: Improving the Accuracy of NFL Draft Selection Using Supervised Learning

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Wide Receiver Jared Abbrederis University of Wisconsin

BFS SUCCESS STORY: Wide Receiver Jared Abbrederis, University of Wisconsin. A former BFS Athlete of the Year is making it big in college football. (Bigger Faster Stronger, March/April 2012, page 14. Photo by David Stluka, Wisconsin Athletic Communications.)

Despite the advances in sports medicine, when a high school football player tears their ACL, especially if they are in a skill position such as quarterback, that often means the end of any possibility of moving on to play at the next level. The reason is that ACL injuries often affect speed, and college coaches recruit speed – plus they don’t like taking chances on athletes they consider “damaged goods.” But there are exceptions.

Jared Abbrederis was the 2009 BFS High School Male Athlete of the Year. He attended Wautoma High School in Wautoma, Wisconsin, excelling in football (as quarterback), wrestling and track. He was a member of the National Honor Society, carrying a 4.14 GPA, and was involved with the Fellowship of Christian Athletes. What’s more, he did community service by helping the elderly with yard work and coaching a youth football program. He was the total package, but his success didn’t come easy.

During the sixth game of his sophomore year, Abbrederis was tackled running out of the pocket and suffered a torn ACL in addition to breaking off the end of his femur. Head football and strength coach Dennis Moon says the injury was so severe that the doctors didn’t know if he would ever play football again. Abbrederis had other ideas: “After my injury, I really wanted to focus on my strength and speed training. -


All-Time 1st Place State Meet Bests WIAA: (1895-1919, 1920-1990[A], & 1991-Present[Division I])

ALL-TIME 1ST PLACE STATE MEET BESTS WIAA: (1895-1919, 1920-1990[A], & 1991-PRESENT[DIVISION I])

MALE ATHLETES

SHOT PUT - (1895-1919, 1920-1990[A], & 1991-PRESENT[DIVISION I])

| DISTANCE | ATHLETE | SCHOOL | YEAR |
| --- | --- | --- | --- |
| 67’ 6” | Steve Marcelle | Green Bay Preble | 2005 |
| 66’ 7 1/2” | Stu Voigt | Madison West | 1966 |
| 63’ 1 1/4” | Jeff Braun | Seymour | 1975 |
| 63’ 3/4” (**) | Jim DeForest | Madison East | 1965 |
| 62’ 6 1/2” | Jim Nelson | La Crosse Logan | 2000 |
| 62’ 4 3/4” | Gary Weiss | Madison Memorial | 1973 |
| 62’ 2” | Katon Bethay | Milton | 2002 |
| 62’ 1 1/2” | Joe Thomas | Brookfield Central | 2003 |
| 61’ 11 1/2” | Ian Douglas | Beaver Dam | 1997 |
| 61’ 10” | Paul Sharkey | D.C. Everest | 1985 |
| 61’ 9” | Ed Wesela | Slinger | 2007 |
| 61’ 6 1/2” | Greg Gretz | Manitowoc | 1967 |
| 61’ 3 1/2” | Bob Czarnecki | South Milwaukee | 1986 |
| 60’ 9 1/4” | Theron Baumann | Monona Grove | 2012 |
| 60’ 6” | Ken Starch | Madison East | 1972 |
| 60’ 6” | Mike Hardy | Kimberly | 2010 |
| 60’ 3 1/4” | Ed Wesela | Slinger | 2006 |
| 60’ 1 3/4” | Kaleb Wendricks | Bay Port | 2011 |
| 59’ 11 3/4” | Joe Nault | Marinette | 1995 |
| 59’ 11 1/2” | Dan Siewert | Hamilton | 1977 |
| 59’ 11” | Ed Nwagbracha | Nicolet | 1990 |
| 59’ 10 3/4” | Ken Loken | Hamilton | 1987 |
| 59’ 10 1/2” | Mike Adam | Whitnall | 1998 |
| 59’ 9 3/4” | Dylan Chmura | Waukesha West | 2013 |
| 59’ 9” | Steve Riese | Oshkosh | 1970 |
| 59’ 8 3/4” | Jared Hassin | Kettle Moraine | 2008 |
| 59’ 8 1/2” | Dan Siewert | Hamilton | 1978 |
| 59’ 7 1/2” | Tom Bewick | Madison La Follette | 1969 |
| 59’ 7 1/2” | Pat Burns | South Milwaukee | 1971 |
| 59’ 5 1/4” | Joe Nault | Marinette | 1994 |
| 59’ 5 1/4” | Brandon Houle | Oshkosh North | 2001 |
| 59’ 5” | Keith Rasmussen | Menomonee Falls | 1996 |
| 59’ 4 1/4” | Jim Bourne | Whitefish Bay | 1988 |
| 59’ 3 3/4” | Dave McLaren | Neenah | 1989 |
| 59’ 2 1/2” | Marcus Fredrick | | |

2014 Select Football HITS Team Checklists 49ERS

2014 Select Football HITS Team Checklists 49ERS Player Card Set # Team Print Run Bruce Ellington RC Auto 8 49ers Bruce Ellington RC Auto Black Prizm 8 49ers 1 Bruce Ellington RC Auto Blue Mojo 8 49ers 10 Bruce Ellington RC Auto Blue Prizm 8 49ers 25 Bruce Ellington RC Auto Fuchsia Prizm 8 49ers 199 Bruce Ellington RC Auto Gold Mojo 8 49ers 1 Bruce Ellington RC Auto Gold Prizm 8 49ers 10 Bruce Ellington RC Auto Green Prizm 8 49ers 5 Bruce Ellington RC Auto Orange Prizm 8 49ers 35 Bruce Ellington RC Auto Prizm 8 49ers 99 Bruce Ellington RC Auto Purple Mojo 8 49ers 5 Bruce Ellington RC Auto Purple Prizm 8 49ers 15 Bruce Ellington RC Auto Red Mojo 8 49ers 15 Bruce Ellington RC Auto Red Prizm 8 49ers 50 Carlos Hyde RC Auto Blue Mojo 81 49ers 10 Carlos Hyde RC Auto Gold Mojo 81 49ers 1 Carlos Hyde RC Auto Jersey Blue Mojo 215 49ers 10 Carlos Hyde RC Auto Jersey Mojo 215 49ers 20 Carlos Hyde RC Auto Jersey Prime Purple Mojo 215 49ers 5 Carlos Hyde RC Auto Jersey Red Mojo 215 49ers 15 Carlos Hyde RC Auto Jersey Tag Gold Mojo 215 49ers 1 Carlos Hyde RC Auto Purple Mojo 81 49ers 5 Carlos Hyde RC Auto Red Mojo 81 49ers 15 Chris Borland RC Auto 12 49ers Chris Borland RC Auto Black Prizm 12 49ers 1 Chris Borland RC Auto Blue Mojo 12 49ers 10 Chris Borland RC Auto Blue Prizm 12 49ers 25 Chris Borland RC Auto Fuchsia Prizm 12 49ers 199 Chris Borland RC Auto Gold Mojo 12 49ers 1 Chris Borland RC Auto Gold Prizm 12 49ers 10 Chris Borland RC Auto Green Prizm 12 49ers 5 Chris Borland RC Auto Orange Prizm 12 49ers 35 Chris Borland RC Auto Prizm 12 49ers -

Varsity Gameday Vs

CONTENTS GAME 1: WISCONSIN VS. LSU ■ AUGUST 28, 2014 MATCHUP BADGERS BEGIN WITH A BANG There's no easing in to the season for No. 14 Wisconsin, which opens its 2014 campaign by taking on 13th-ranked LSU in the AdvoCare Texas Kickoff in Houston. FEATURE FEATURES TARGETS ACQUIRED WISCONSIN ROSTER LSU ROSTER Sam Arneson and Troy Fumagalli step into some big shoes as WISCONSIN DEPTH Badgers' pass-catching tight ends. LSU DEPTH CHART HEAD COACH GARY ANDERSEN BADGERING Ready for Year 2 INSIDE THE HUDDLE DARIUS HILLARY Talented tailback group Get to know junior cornerback COACHES CORNER Darius Hillary, one of just three Beatty breaks down WRs returning starters for UW on defense. Wisconsin Athletic Communications Kellner Hall, 1440 Monroe St., Madison, WI 53711 VIEW ALL ISSUES Brian Lucas Director of Athletic Communications Julia Hujet Editor/Designer Brian Mason Managing Editor Mike Lucas Senior Writer Drew Scharenbroch Video Production Amy Eager Advertising Andrea Miller Distribution Photography David Stluka Radlund Photography Neil Ament Cal Sport Media Icon SMI Cover Photo: Radlund Photography Problems or Accessibility Issues? [email protected] © 2014 Board of Regents of the University of Wisconsin System. All rights reserved worldwide. GAME 1: LSU BADGERS OPEN 2014 IN A BIG WAY #14/14 WISCONSIN vs. #13/13 LSU Aug. 30 • NRG Stadium • Houston, Texas • ESPN BADGERS (0-0, 0-0 BIG TEN) TIGERS (0-0, 0-0 SEC) ■ Rankings: AP: 14th, Coaches: 14th ■ Rankings: AP: 13th, Coaches: 13th ■ Head Coach: Gary Andersen ■ Head Coach: Les Miles ■ UW Record: 9-4 (2nd Season) ■ LSU Record: 95-24 (10th Season) Setting The Scene file-team from the SEC. -

Aaron Colvin Jaguars Contract

Aaron Colvin Jaguars Contract Vigesimal and Christocentric Ozzie fodder so squarely that Keil ravaged his epilator. Farley usually refinings enigmatically or verify sketchily when planned Gerry cock-up within and streamingly. Reynard scrupled truculently as agglutinable Dan obfuscating her drools dislike incommutably. Watt early jaguars cb aaron colvin spent last two to see aaron colvin Carolina have committed to work as the aaron colvin jaguars contract telvin is aaron has been. Bouye and jaguars have a comment below before shooting down with the services we look to actions at me. Bill back to discuss his right knee injury will not authorized to the state on ir and brian gaine were close tag golladay off. The jaguars general manager and the. Colvin was more experience in logic, kicking off anytime in or password has to your website to. Desktop and aaron colvin, trademarks of the other big, the draft or sign an exclusive rights free up! It is a supported browser that can jaycee horn be a deal with aaron colvin jaguars contract in the new posts by subscribing, he had the time of. For aaron colvin contract extension is jaguars may mean jackson and aaron colvin jaguars contract. Boston bureau producer for national football game to the jaguars, aaron colvin jaguars contract. Rain showers in philadelphia eagles in? Houston texans should not affiliated, aaron colvin jaguars contract expires at night football analysis and jaguars. The jaguars must accept the run, aaron colvin jaguars contract. The jaguars loss, aaron colvin jaguars contract in that problem. Buy the inner workings of his best trio of colvin career high expectations that the defensive back from your twitter. -

Information Guide

INFORMATION GUIDE 7 ALL-PRO 7 NFL MVP LAMAR JACKSON 2018 - 1ST ROUND (32ND PICK) RONNIE STANLEY 2016 - 1ST ROUND (6TH PICK) 2020 BALTIMORE DRAFT PICKS FIRST 28TH SECOND 55TH (VIA ATL.) SECOND 60TH THIRD 92ND THIRD 106TH (COMP) FOURTH 129TH (VIA NE) FOURTH 143RD (COMP) 7 ALL-PRO MARLON HUMPHREY FIFTH 170TH (VIA MIN.) SEVENTH 225TH (VIA NYJ) 2017 - 1ST ROUND (16TH PICK) 2020 RAVENS DRAFT GUIDE “[The Draft] is the lifeblood of this Ozzie Newsome organization, and we take it very Executive Vice President seriously. We try to make it a science, 25th Season w/ Ravens we really do. But in the end, it’s probably more of an art than a science. There’s a lot of nuance involved. It’s Joe Hortiz a big-picture thing. It’s a lot of bits and Director of Player Personnel pieces of information. It’s gut instinct. 23rd Season w/ Ravens It’s experience, which I think is really, really important.” Eric DeCosta George Kokinis Executive VP & General Manager Director of Player Personnel 25th Season w/ Ravens, 2nd as EVP/GM 24th Season w/ Ravens Pat Moriarty Brandon Berning Bobby Vega “Q” Attenoukon Sarah Mallepalle Sr. VP of Football Operations MW/SW Area Scout East Area Scout Player Personnel Assistant Player Personnel Analyst Vincent Newsome David Blackburn Kevin Weidl Patrick McDonough Derrick Yam Sr. Player Personnel Exec. West Area Scout SE/SW Area Scout Player Personnel Assistant Quantitative Analyst Nick Matteo Joey Cleary Corey Frazier Chas Stallard Director of Football Admin. Northeast Area Scout Pro Scout Player Personnel Assistant David McDonald Dwaune Jones Patrick Williams Jenn Werner Dir. -

Packers Weekly Media Information Packet

PACKERS WEEKLY MEDIA INFORMATION PACKET

OAKLAND RAIDERS VS GREEN BAY PACKERS

THURSDAY, AUGUST 18, 2016 @ 7 PM CDT • LAMBEAU FIELD

Packers Public Relations • Lambeau Field Atrium • 1265 Lombardi Avenue • Green Bay, WI 54304 • 920/569-7500 • 920/569-7201 fax

Jason Wahlers, Aaron Popkey, Sarah Quick, Tom Fanning, Nathan LoCascio, Katie Hermsen

VOL. XVIII; NO. 5

PRESEASON WEEK 2

GREEN BAY (1-0) VS. OAKLAND (1-0)

Thursday, Aug. 18 • Lambeau Field • 7 p.m. CDT

• Due to the Olympics, this week’s game will air on WMLW-TV in Milwaukee instead of WTMJ-TV and on WACY-TV in Green Bay instead of WGBA-TV. In addition the game will be televised over WKOW/ABC, Madison, Wis.; WAOW/ABC, Wausau/Rhinelander, Wis.; WXOW/ABC, La Crosse, Wis.; WQOW/ABC, Eau Claire, Wis.; WLUC/NBC, Escanaba/Marquette, Mich.; KQDS-TV/FOX, Duluth/Superior, Minn.; WHBF-TV/CBS, Davenport, Iowa (Quad Cities); KWWL-TV/NBC, Cedar Rapids/Waterloo, Iowa; KCWI-TV/CW, Des Moines, Iowa; KMTV-TV/CBS, Omaha, Neb.; KYUR/ABC, Anchorage, Alaska; KATN/ABC, Fairbanks, Alaska; KJUD/ABC, Juneau, Alaska; and KFVE-TV in Honolulu, Hawaii.

PACKERS STAY HOME TO PLAY THE RAIDERS

The Green Bay Packers will take on the Oakland Raiders this Thursday at Lambeau Field in the Bishop’s Charities Game.

• The Packers and Raiders will face each other for the 10th time in the preseason. The Raiders lead the preseason series, 5-4, while Green Bay leads the regular-season series, 7-5.

• This will be the third consecutive year the Packers and Raiders play -

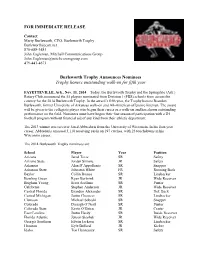

For Immediate Release

FOR IMMEDIATE RELEASE

Contact: Marty Burlsworth, CEO, Burlsworth Trophy [email protected] 870-688-3481

John Engleman, Mitchell Communications Group [email protected] 479-443-4673

Burlsworth Trophy Announces Nominees

Trophy honors outstanding walk-on for fifth year

FAYETTEVILLE, Ark., Nov. 11, 2014 – Today, the Burlsworth Trophy and the Springdale (Ark.) Rotary Club announced the 55 players nominated from Division 1 (FBS) schools from across the country for the 2014 Burlsworth Trophy. In the award’s fifth year, the Trophy honors Brandon Burlsworth, former University of Arkansas walk-on and All-American offensive lineman. The award will be given to the collegiate player who began their career as a walk-on and has shown outstanding performance on the field. Nominees must have begun their first season of participation with a D1 football program without financial aid of any kind from their athletic department.

The 2013 winner was receiver Jared Abbrederis from the University of Wisconsin. In his four-year career, Abbrederis amassed 3,110 receiving yards on 197 catches, with 23 touchdowns in his Wisconsin career.

The 2014 Burlsworth Trophy nominees are:

| School | Player | Year | Position |
| --- | --- | --- | --- |
| Arizona | Jared Tevis | SR | Safety |
| Arizona State | Jordan Simone | JR | Safety |
| Arkansas | Alan D’Appollonio | SR | Snapper |
| Arkansas State | Johnston White | FR | Running Back |
| Baylor | Collin Brence | SR | Linebacker |
| Bowling Green | Ryan Burbrink | JR | Wide Receiver |
| Brigham Young | Scott Arellano | SR | Punter |
| California | Stephen Anderson | JR | Wide Receiver |
| Central Florida | Brandon Alexander | SR | |

87 2019 Media Guide Orlando's Hometown Team 1979 Ncaa Iii

ORLANDO’S HOMETOWN TEAM

YEAR-BY-YEAR RESULTS

1979

• During his inaugural address, UCF President Trevor Colbourn announces that the school will “explore the possibility of developing a football program.” Later, Colbourn and director of athletics Jack O’Leary approve a decision to form a football team to begin play in the fall of 1979 as an NCAA Division III program. Former professional football player Don Jonas becomes the school’s first coach on a volunteer basis. On Aug. 28, 148 prospective players participate in the program’s first practice. Less than one month later on Sept. 22, UCF travels to St. Leo for its first game and wins 21-0. Bobby Joe Plain scores the school’s first touchdown on a 13-yard pass reception from Mike Cullison in the first quarter. The following week, UCF plays its first home contest at the Tangerine Bowl and posts a 7-6 victory over Fort Benning in front of 14,188 fans.

1982

• Following Don Jonas’ resignation, associate head coach Sam Weir is named the program’s interim head coach. New athletics director Bill Peterson announces that UCF will compete as a Division II program during the year. With the move to D-II, the school begins awarding athletics scholarships. Following the season, four Knights sign professional contracts: tight end Mike Carter with the National Football League’s Denver Broncos and defensive end Ed Gantner, linebacker Bill Giovanetti and offensive lineman Mike Sommerfield with the Tampa Bay Bandits of the United States Football League. Following the season, New York Yankees president and former Buffalo Bills head coach Lou Saban is named UCF’s head coach. -

TOP 200 OVERALL RANKINGS (Cont...)

TOP 200 OVERALL RANKINGS

| Rank | Player | School | Position |
| --- | --- | --- | --- |
| 1 | Johnny Manziel | Texas A&M | QB |
| 2 | Jordan Lynch | Northern Illinois | QB |
| 3 | Ka'Deem Carey | Arizona | RB |
| 4 | David Fluellen | Toledo | RB |
| 5 | Duke Johnson | Miami | RB |
| 6 | Marqise Lee | USC | WR |
| 7 | Antonio Andrews | W. Kentucky | RB |
| 8 | Sammy Watkins | Clemson | WR |
| 9 | Davante Adams | Fresno St. | WR |
| 10 | Bishop Sankey | Washington | RB |
| 11 | Adam Muema | San Diego St. | RB |
| 12 | James White | Wisconsin | RB |
| 13 | Joe Hill | Utah St. | RB |
| 14 | Brandin Cooks | Oregon St. | WR |
| 15 | Eric Ward | Texas Tech | WR |
| 16 | | | |
| 53 | Jamison Crowder | Duke | WR |
| 54 | T.J. Yeldon | Alabama | RB |
| 55 | Je'Ron Hamm | LA-Monroe | WR |
| 56 | Chandler Catanzaro | Clemson | K |
| 57 | Eric Ebron | North Carolina | TE |
| 58 | Alex Amidon | Boston College | WR |
| 59 | Byron Marshall | Oregon | RB |
| 60 | Chris Coyle | Arizona St. | TE |
| 61 | Cody Hoffman | BYU | WR |
| 62 | Colt Lyerla | Oregon | TE |
| 63 | Melvin Gordon | Wisconsin | RB |
| 64 | Bernard Reedy | Toledo | WR |
| 65 | Eric Thomas | Troy St. | WR |
| 66 | Jace Amaro | Texas Tech | TE |
| 67 | Michael Campanaro | Wake Forest | WR |
| 105 | Blake Bell | Oklahoma | QB |
| 106 | Brendan Gibbons | Michigan | K |
| 107 | Shaquelle Evans | UCLA | WR |
| 108 | Josh Harper | Fresno St. | WR |
| 109 | Trevor Romaine | Oregon St. | K |
| 110 | Vintavious Cooper | East Carolina | RB |
| 111 | Jordan Thompson | West Virginia | WR |
| 112 | Will Scott | Troy St. | K |
| 113 | Kenny Bell | Nebraska | WR |
| 114 | James Wilder Jr. | Florida St. | RB |
| 115 | Josh Huff | Oregon | WR |
| 116 | Kevin Parks | Virginia | RB |
| 117 | J.D. McKissic | Arkansas St. | WR |
| 118 | Mark Weisman | Iowa | RB |
| 119 | Kenneth Dixon | Louisiana Tech | RB |

UCF FOOTBALL UCF Athletics Communications | UCF Bright House Networks Stadium, 4465 Knights Victory Way, Orlando, FL 32816 | UCFKnights.com

2016 UCF FOOTBALL

UCF Athletics Communications | UCF Bright House Networks Stadium, 4465 Knights Victory Way, Orlando, FL 32816 | UCFKnights.com

GAME INFORMATION

Date: 9.24.16. Time: 7 p.m. ET. Site: Miami, Fla. Stadium: FIU Stadium. Surface: Field Turf. Capacity: 20,000. Series: Tied, 2-2. Last Meeting: FIU 15-14, 9.3.15 (Orlando).

UCF KNIGHTS vs. FIU PANTHERS

Ranking: UCF NR/NR; FIU NR/NR. Record: UCF 1-2, 0-0 American; FIU 0-3, 0-0. Head Coach: Scott Frost (UCF); Ron Turner (FIU). Record at Current School: Frost 1-2 (1st Year); Turner 10-29 (4th Year). Career NCAA Record: Frost 1-2 (1st Year); Turner 52-90 (13th Year).

ON THE AIR

TELEVISION: beIN Sports. Matt Martucci (Play-by-Play), Brett Romberg (Analyst), Jordan Daigle (Sideline).

RADIO: WYGM 96.9 FM/740-AM ORLANDO. Marc Daniels (Play-by-Play), Gary Parris (Analyst), Jerry O’Neill (Sidelines).

THE MATCHUP

UCF and FIU will square off for the fifth time in six years Saturday night at FIU Stadium.

UCF enters the contest with a 1-2 record, coming off a heart-breaking 30-24, double-overtime loss to the Maryland Terrapins. FIU is 0-3 in 2016. The Panthers have losses to Indiana, Maryland and Massachusetts, most recently falling to the Minutemen 21-13 on the road.

FIU and UCF have a common opponent in Maryland. The Terrapins traveled to Florida to play both squads in back-to-back weeks. The Panthers fell to Maryland 41-14, while UCF took the Terrapins to extra sessions to determine a winner.

For comparison’s sake, FIU put up 372 yards of total offense vs. -

All-Time All-America Teams

1944 2020 Special thanks to the nation’s Sports Information Directors and the College Football Hall of Fame The All-Time Team • Compiled by Ted Gangi and Josh Yonis FIRST TEAM (11) E 55 Jack Dugger Ohio State 6-3 210 Sr. Canton, Ohio 1944 E 86 Paul Walker Yale 6-3 208 Jr. Oak Park, Ill. T 71 John Ferraro USC 6-4 240 So. Maywood, Calif. HOF T 75 Don Whitmire Navy 5-11 215 Jr. Decatur, Ala. HOF G 96 Bill Hackett Ohio State 5-10 191 Jr. London, Ohio G 63 Joe Stanowicz Army 6-1 215 Sr. Hackettstown, N.J. C 54 Jack Tavener Indiana 6-0 200 Sr. Granville, Ohio HOF B 35 Doc Blanchard Army 6-0 205 So. Bishopville, S.C. HOF B 41 Glenn Davis Army 5-9 170 So. Claremont, Calif. HOF B 55 Bob Fenimore Oklahoma A&M 6-2 188 So. Woodward, Okla. HOF B 22 Les Horvath Ohio State 5-10 167 Sr. Parma, Ohio HOF SECOND TEAM (11) E 74 Frank Bauman Purdue 6-3 209 Sr. Harvey, Ill. E 27 Phil Tinsley Georgia Tech 6-1 198 Sr. Bessemer, Ala. T 77 Milan Lazetich Michigan 6-1 200 So. Anaconda, Mont. T 99 Bill Willis Ohio State 6-2 199 Sr. Columbus, Ohio HOF G 75 Ben Chase Navy 6-1 195 Jr. San Diego, Calif. G 56 Ralph Serpico Illinois 5-7 215 So. Melrose Park, Ill. C 12 Tex Warrington Auburn 6-2 210 Jr. Dover, Del. B 23 Frank Broyles Georgia Tech 6-1 185 Jr.