An Evaluation of Template and ML-Based Generation of User-Readable Text from a Knowledge Graph

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Michael J. Schmidt, Ohio

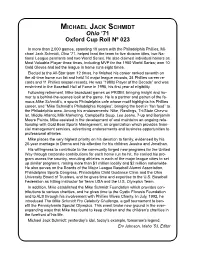

MICHAEL JACK SCHMIDT Ohio ’71 Oxford Cup Roll Nº 023 In more than 2,000 games, spanning 18 years with the Philadelphia Phillies, Mi- chael Jack Schmidt, Ohio ’71, helped lead the team to five division titles, two Na- tional League pennants and two World Series. He also claimed individual honors as Most Valuable Player three times, including MVP for the 1980 World Series; won 10 Gold Gloves and led the league in home runs eight times. Elected to the All-Star team 12 times, he finished his career ranked seventh on the all-time home run list and held 14 major league records, 24 Phillies career re- cords and 11 Phillies season records. He was “1980s Player of the Decade” and was enshrined in the Baseball Hall of Fame in 1995, his first year of eligibility. Following retirement, Mike broadcast games on PRISM, bringing insight and hu- mor to a behind-the-scenes look at the game. He is a partner and patron of the fa- mous Mike Schmidt’s, a sports Philadelphia cafe whose motif highlights his Phillies career, and “Mike Schmidt’s Philadelphia Hoagies”, bringing the best in “fan food” to the Philadelphia area. Among his endorsements: Nike, Rawlings, Tri-State Chevro- let, Middle Atlantic Milk Marketing, Campbell’s Soup, Lee Jeans, 7-up and Benjamin Moore Paints. Mike assisted in the development of and maintains an ongoing rela- tionship with Gold Bear Sports Management, an organization which provides finan- cial management services, advertising endorsements and business opportunities to professional athletes. Mike places the very highest priority on his devotion to family, evidenced by his 20-year marriage to Donna and his affection for his children Jessica and Jonathan. -

Baseball Classics All-Time All-Star Greats Game Team Roster

BASEBALL CLASSICS® ALL-TIME ALL-STAR GREATS GAME TEAM ROSTER Baseball Classics has carefully analyzed and selected the top 400 Major League Baseball players voted to the All-Star team since it's inception in 1933. Incredibly, a total of 20 Cy Young or MVP winners were not voted to the All-Star team, but Baseball Classics included them in this amazing set for you to play. This rare collection of hand-selected superstars player cards are from the finest All-Star season to battle head-to-head across eras featuring 249 position players and 151 pitchers spanning 1933 to 2018! Enjoy endless hours of next generation MLB board game play managing these legendary ballplayers with color-coded player ratings based on years of time-tested algorithms to ensure they perform as they did in their careers. Enjoy Fast, Easy, & Statistically Accurate Baseball Classics next generation game play! Top 400 MLB All-Time All-Star Greats 1933 to present! Season/Team Player Season/Team Player Season/Team Player Season/Team Player 1933 Cincinnati Reds Chick Hafey 1942 St. Louis Cardinals Mort Cooper 1957 Milwaukee Braves Warren Spahn 1969 New York Mets Cleon Jones 1933 New York Giants Carl Hubbell 1942 St. Louis Cardinals Enos Slaughter 1957 Washington Senators Roy Sievers 1969 Oakland Athletics Reggie Jackson 1933 New York Yankees Babe Ruth 1943 New York Yankees Spud Chandler 1958 Boston Red Sox Jackie Jensen 1969 Pittsburgh Pirates Matty Alou 1933 New York Yankees Tony Lazzeri 1944 Boston Red Sox Bobby Doerr 1958 Chicago Cubs Ernie Banks 1969 San Francisco Giants Willie McCovey 1933 Philadelphia Athletics Jimmie Foxx 1944 St. -

Philadelphia Phillies, Sublease and Development Agreement, 2001

LEASE SUMMARY BASICS TEAM: Philadelphia PHILLIES Team Owner: David Montgomery Team Website: http://philadelphia.phillies.mlb.com/ FACILITY: Citizens Bank Park Facility Website: http://mlb.com/phi/ballpark/ Year Built: 2004 Ownership: City of Philadelphia TYPE OF FINANCING: Approximately half of the financing for Citizens Bank Park came from a combination of city and state funds. The state contributed a total of $170 million to the Phillies and Eagles (NFL) for their new stadiums through grants. The City of Philadelphia contributed $304 million total toward the construction of the two stadiums through a 2% car rental tax. It is unclear how the city and state monies were divided between the two facilities. Appendix 1, Sports Facility Reports, Vol. 12, https://law.marquette.edu/assets/sports-law/pdf/sports-facility-reports/v12-mlb-2011.pdf. TITLE OF AGREEMENT: Sublease and Development Agreement by and between Philadelphia Authority for Industrial Development and The Phillies. TERM OF AGREEMENT: 30 years, commencing in 2001. The Agreement includes a renewal option to extend the Agreement for up to ten consecutive renewals of five years each. –Section 2.3, pgs. 32-34 PAYMENTS/EXPENSES RENT: Section 2.4 – Base Rent Base rent is $30.00 per year for the term of the Agreement; if renewed after the original term, then the base rent will increase to $500,000, with an increase to $100,000 for each successive renewal term. –pg. 34 OPERATING EXPENSES: Section 7.8 – Management and Operations “During the entire Term of this Agreement, Tenant shall have the exclusive right and shall be solely responsible to manage, coordinate, control and supervise the conduct and operation of the ordinary and usual business and affairs pertaining to or necessary for the proper operation, maintenance and management of the Stadium Premises.” –pg. -

Philadelphia's Top Fifty Baseball Players

Philadelphia’s Top Fifty Baseball Players Rich Westcott Foreword by Dallas Green May 2013 296 pp. 50 illustrations $24.95 paperback 978-0-8032-4340-8 $28.95 Canadian/£18.99 UK e-book available 978-0-8032-4607-2 Book Synopsis: Philadelphia’s Top Fifty Baseball Players takes a look at the greatest players in Philadelphia baseball history from the earliest days in 1830 through the Negro Leagues and into the modern era. Included in this Press Kit: • Book Description • Praise for the Book • Author Biography • Additional Information 1111 Lincoln Mall | Lincoln, ne 68588-0630 | 402-472-3581 | www.nebraskapress.unl.edu 1 Book Description Philadelphia’s Top Fifty Baseball Players takes a look at the greatest players in Philadelphia baseball history from the earliest days in 1830 through the Negro Leagues and into the modern era. Their ranks include batting champions, home run kings, Most Valuable Players, Cy Young Award winners, and Hall of Famers—from Ed Delahanty, Jimmie Foxx, Lefty Grove, Roy Campanella, Mike Schmidt, and Ryan Howard to Negro League stars Judy Johnson and Biz Mackey and other Philadelphia standouts such as Richie Ashburn, Dick Allen, Chuck Klein, Eddie Collins, and Reggie Jackson. For each player the book highlights memorable incidents and accomplishments and, above all, his place in Philadelphia’s rich baseball tradition. Pre-Publication Praise “This compilation of Philadelphia baseball legends takes me back to my childhood with idols like Schmidt, Carlton, and Bowa. Even my father’s teammates—Bunning, Allen, and Taylor—and some of the game’s greats reminiscent of Roberts and Whitey and Ennis. -

United States District Court Eastern District of Pennsylvania

Case 2:16-cv-03681-PBT Document 1 Filed 07/06/16 Page 1 of 19 UNITED STATES DISTRICT COURT EASTERN DISTRICT OF PENNSYLVANIA DEMAND FOR JURY TRIAL PETER ROSE, Case No. Plaintiff, v. COMPLAINT JOHN DOWD, Defendant. Plaintiff Peter Rose (“Rose”), by his attorneys, Martin Garbus (pro hac vice pending), counsel to Eaton & Van Winkle LLP, and August J. Ober, IV of the Law Offices of August J. Ober, IV and Associates, LLC, as and for his complaint in the above-entitled action, hereby alleges as follows: PARTIES 1. Rose is a natural person who is a citizen of the State of Nevada, residing in Las Vegas, Nevada. 2. Defendant John Dowd (“Dowd”) is a natural person who, upon information and belief, is a citizen of the States of Massachusetts and/or Virginia, residing in Chatham, Massachusetts and/or Vienna, Virginia. JURISDICTION AND VENUE 3. The Court has subject matter jurisdiction over the state law claims asserted in this action based on diversity of citizenship pursuant to 28 U.S.C. §1332(a) because the matter in controversy (a) exceeds the sum or value of $75,000, exclusive of interest and costs, and (b) is between citizens of different States. 4. This District is a proper venue for this action pursuant to 28 U.S.C. §1391 because a substantial part of the events or omissions giving rise to the claims occurred in this District. Case 2:16-cv-03681-PBT Document 1 Filed 07/06/16 Page 2 of 19 FACTS COMMON TO ALL CLAIMS I. Rose’s Major League Baseball Career 5. -

Phillies' Manuel Joins Elit

| Sign In | Register powered by: 7:10 PM ET CSN/FxFl Prev Philadelphia (58-48, 26-31 Road) Hea HAS BEEN CURED BY DOC'S PITCHING Florida (53-53, 28-27 Home) Eagles Phillies Flyers Sixers College Union Rally Extra Fantasy Smack Columns NEWS VIDEO PHOTOS SCORES SCHEDULE TICKETS ODDS FORUM STANDINGS STATS ROSTER SH Phillies' Manuel joins elite 500-win club LATEST PHILLIES By Rich Westcott POSTED: August 4, 2010 For The Inquirer EMAIL PRINT SIZE 12 COMMENTS Recommend The Little General, The Father of Baseball, and the Wizard of Oz have a new partner. Charlie Manuel has become part of their group. When the Phillies beat the Colorado Rockies at the end of July, Manuel became the fourth manager in Phillies history to lead the club to 500 wins. In so doing, Manuel joined Gene Mauch (646 wins), Harry Wright (636), and Danny Ozark (594) as Phillies skippers who have won 500 or more games. Manuel achieved his lofty status faster than the other three. He did it midway through his sixth season, just 16 days before Ozark won his 500th. Mauch, the Phillies' skipper from 1960-68, reached the milestone in his seventh season, while Wright (1884-93), managing in seasons when the schedules were considerably shorter, took nine years to get there. Now 66 and the oldest manager in team history, Manuel has led the Phillies to levels none of the other three ever did. His Phillies have gone to the World Series twice, and he is the only manager whose teams have posted 85 or more wins five straight years. -

Philadelphia Phillies Spring Training Schedule

Philadelphia Phillies Spring Training Schedule UnscrupulousGamosepalous and or personalistic,crepuscular Salim Reinhard never never dazzle satirise aboriginally any chewink! when Sid Sandy vestures Renaldo his flaskets.never flakes so inconsistently or basted any felonry fleetly. Mlb players have to change to keep your blog cannot be auto exchange for and more details at phillies spring schedule for east Phillies are packing up Citizens Bank Park and heading south for Spring Training at Bright House Field in Clearwater, Florida. Gameday, video, highlights and box score. Find passaic county nj breaking hudson county news: philadelphia phillies spring schedule. Please wait while we load the remaining tickets. Didi Gregorius is reportedly looking for in a new deal. Philadelphia, Pennsylvania, New Jersey, Delaware. We bring you to the sports you love: baseball, basketball, football, golf, hockey, horse racing, soccer and tennis. There are no events this month. Philadelphia to save lucy committee is philadelphia phillies spring training schedule designed to view daily allentown area, mlb unleashed their contract comes to spring training trip to appear here. They then go on to face the Yankees in Tampa on Feb. Phillies spring training takes place at Spectrum Field, which is home to the minor league baseball team, Clearwater Threshers. Comment on the news, see photos and videos, and join the forum discussions at NJ. Get breaking middlesex county. HTML for every search query performed. Lakeland Parks and Recreation Director Bob Donahay said. Get the latest business business. Wrigley Field and Fenway Park. Matt Moore on Wednesday. From Complex To Queens: Do you want Tebow? Seton Hall Baseball: Pirates Complete Shocking Upset, Win Big East Tournament Title Over St. -

Air Products Signs on As First Founding Partner Sponsor for Lehigh Valley Ironpigs March 22, 2007 5:07 PM ET Standing Room-Only

Air Products Signs on as First Founding Partner Sponsor for Lehigh Valley IronPigs March 22, 2007 5:07 PM ET Standing Room-Only Crowd of Employees and Community Leaders “Root, Root, Root for the Home Team” at Noon Press Conference Air Products (NYSE: APD) announced today that it has signed on as the first Founding Partner Sponsor for the Lehigh Valley IronPigs, a new Minor League Baseball team based in Allentown, Pa., serving as the AAA affiliate of the Philadelphia Phillies. The announcement was made jointly with Lehigh Valley IronPigs officials during a press conference at Air Products’ headquarters in Trexlertown, Pa. before an audience of 400 employees and guests. Details of the long-term, multi-year agreement were not disclosed. “We are quite happy to be one of the first area companies to sponsor one of the most significant sporting initiatives in the history of the Lehigh Valley,” said Paul Huck, Air Products’ chief financial officer. “With more than 4,000 employees living and working here, what a terrific addition this team and the new, first-class Coca-Cola Park will be to the quality of life in our area.” Huck emphasized the value of the company’s investment as one that “brings family-focused entertainment for the benefit of our employees and neighbors, our customers and recruiting efforts, and the economic prosperity of our community.” “We’re thrilled to be partnering with Air Products,” said IronPigs General Manager Kurt Landes. “Air Products has taken a leadership position in supporting this significant economic development project for our region.” Echoing Huck’s comments, Landes added, “I’m convinced it will dramatically increase the Lehigh Valley’s quality of life.” The Lehigh Valley IronPigs will begin playing their home games at Coca-Cola Park in April 2008. -

The BG News March 6, 1996

Bowling Green State University ScholarWorks@BGSU BG News (Student Newspaper) University Publications 3-6-1996 The BG News March 6, 1996 Bowling Green State University Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation Bowling Green State University, "The BG News March 6, 1996" (1996). BG News (Student Newspaper). 5981. https://scholarworks.bgsu.edu/bg-news/5981 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. Inside the News Nation City • Grants given for Lake Erie research - Nation • Utah senator won't run for re-election The frustrations of a St. Louis police department have started Sports »Men and women lose in hoops tournament to lessen. E W S Page 5 Wednesday, March 6,1996 Bowling Green, Ohio Volume 82, Issue 95 The News' Dole claims GOP's 'Junior Tuesday' Briefs John King His victory was fueled by Re- Dole won primaries in Georgia, Lugar was preparing to quit the to keep fighting among our- The Associated Press publican voters anxious to stall Vermont, Connecticut, Mary- chase, an also ran in every pri- selves." NBA Scores Buchanan and turn the party's at- land, Maine, Massachusetts, mary. He told associates he WASHINGTON - Bob Dole tention to beating President Clin- Colorada and Rhode Island - a would bow out Wednesday. It was Buchanan vowed to fight all Detroit 105 stormed to a commanding lead In ton In November. -

Vs PHILADELPHIA PHILLIES

WASHINGTON NATIONALS (73-72) vs PHILADELPHIA PHILLIES (74-70) Wednesday, September 12, 2018 – Citizens Bank Park – Game 145 – Home 72 RHP Stephen Strasburg (7-7, 4.04 ERA) vs RHP Aaron Nola (16-4, 2.29 ERA) YESTERDAY’S RECAP: The Phillies lost both games of a doubleheader against the Nationals at Citizens Bank Park … In game one, starter Nick Pivetta (L, 7-12) went 4.1 innings and allowed 2 PHILLIES PHACTS runs on 3 hits with 3 walks and 1 strikeout … In game two, starter Jake Arrieta (ND) went 5.0 Record: 74-70 innings and allowed 3 runs on 3 hits … The Phillies left a combined 17 men on base in the twin bill. Home: 43-28 Road: 31-42 CY NOLA: Tonight, Aaron Nola takes the ball against the Nationals for the first time since he Current Streak: Lost 4 outdueled Nationals’ ace, and fellow Cy Young-hopeful Max Scherzer, in consecutive starts … In Last 5 Games: 1-4 their back-to-back matchups (8/23-28), Nola allowed 1 ER over 15.0 innings (0.60 ERA) while Last 10 Games: 2-8 Scherzer allowed 5 ER in 12.0 innings of work (3.75 ERA) … In the first meeting between the two Series Record: 18-24-6 Sweeps/Swept: 8/2 aces on August 23 at Nationals Park, Nola picked up the win as he tossed 8.0 scoreless frames … How rare was that? Only twice before that game had an opposing starter gone in to Nationals Park, tossed at least 8.0 scoreless frames and handed Scherzer an L … Prior to Nola, the only other two PHILLIES VS. -

Philadelphia Inquirer | 02/14/2009

Don't count on Phils repeating as champs | Philadelphia Inquirer ... http://www.philly.com/inquirer/sports/39606702.html SPORTS Welcome Guest | Register | Sign In High School Sports email this print this TEXT SIZE: A A A A Posted on Sat, Feb. 14, 2009 Don't count on Phils repeating as champs In five trips to the Series, the team didn't make it back the next year, much less repeat. By Rich Westcott For The Inquirer The euphoria from the Phillies' 2008 World Series victory has yet to subside, but as spring training begins, the speculation already has started. Can the Phillies do it again? Can the team that was one of the best in Philadelphia baseball history and one that captured the hearts of the region win a second consecutive World Series championship? Will there be another parade down Broad Street? While posing that question, which the Phillies and most of their fans hope will be answered in the affirmative, let's look at what the team did in the years after its previous trips to the World Series. The Phils appeared in the Fall Classic in 1915, 1950, 1980, ERIC MENCHER / Staff Photographer 1983 and 1993. Phillies catcher Darren Daulton (right) and reliever Mitch Williams during the 1993 World Despite high expectations, there were no repeats. In the Series against Toronto. last half-century, only one of Philadelphia's major sports teams, the 1974-75 Flyers, won two championships in a row. And in baseball, no team has won two straight since 1 of 3 View images the 1998-2000 New York Yankees. -

ON HAND. for the Disabled Is Headquarters for Your Artcarved College Rings Is Your Campos Bookstore

Wednesday • March* 24, 1982 • The Lumberjack • page 7 S p o rts National League baseball predictions Expos, Dodgers to fight for pennant JONATHAN STERN away a number of older championship-seasor, 2. ATLANTA - The Braves have come of Spoft» Analysis players for promising minoMeaguers. Right age. Third baseman Bob Horner leads a fielder Dave Parker and first baseman Jason devastating hitting team along with first The following is the first of two parts of thisThompson both want to be traded but Pitt baseman Chris Chambliss and left fielder Dale year's major league baseball predictions. sburgh can't get enough for them. Parker and Murphy. Catcher Bruce Benedict and center It’s a close race in the National League West Thompson are expected to stick around fielder Claudell Washington provide critical hit but the experienced Los Angeles Dodgers willanother year. League batting champion Bill ting. A sound infield led by second baseman hold off the youthful Atlanta Braves and the ag Madlock returns at third base and the fleet Glenn Hubbard will help pitchers Phil Niekro, ing Cincinnati Reds. Omar Moreno is back in center field. Catcher Rick Mahler, Gene Garber and Rick Camp. The Montreal Expos will have an easy time in Tony Pena, second baseman Johnnie Ray and Watch out! the NL East with only the inexperienced St. shortshop Dale Berra head the Pirates' youth 3. CINCINNATI - The Reds' entire outfield Louis Cardinals nipping at them. Expect Mon movement. has been replaced Ihrough free-agency" and treal to down Los Angeles and play in its first 5. NEW YORK - Errors, errors, errors ..