Designing and Composing for Interdependent Collaborative

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

5-Stepcoordination Challenge Pat Travers’ Sandy Gennaro Lessons Learned Mike Johnston Redefining “Drum Hero”

A WILD ZEBRA BLACK FADE DRUMKIT FROM $ WIN DIXON VALUED OVER 9,250 • HAIM • WARPAINT • MIKE BORDIN THE WORLD’S #1 DRUM MAGAZINE APRIL 2014 DARKEST HOUR’S TRAVIS ORBIN BONUS! MIKE’S LOVES A GOOD CHALLENGE 5-STEPCOORDINATION CHALLENGE PAT TRAVERS’ SANDY GENNARO LESSONS LEARNED MIKE JOHNSTON REDEFINING “DRUM HERO” MODERNDRUMMER.com + SABIAN CYMBAL VOTE WINNERS REVIEWED + VISTA CHINO’S BRANT BJORK TELLS IT LIKE IT IS + OLSSON AND MAHON GEAR UP FOR ELTON JOHN + BLUE NOTE MASTER MICKEY ROKER STYLE AND ANALYSIS NICKAUGUSTO TRIVIUM LEGENDARYIT ONLYSTARTS BEGINS TO HERE.DESCRIBE THEM. “The excitement of getting my first kit was like no other, a Wine Red 5 piece Pearl Export. I couldn’t stop playing it. Export was the beginning of what made me the drummer I am today. I may play Reference Series now but for me, it all started with Export.” - Nick Augusto Join the Export family at pearldrum.com. ® CONTENTS Cover and contents photos by Elle Jaye Volume 38 • Number 4 EDUCATION 60 ROCK ’N’ JAZZ CLINIC Practical Independence Challenge A 5-Step Workout for Building Coordination Over a Pulse by Mike Johnston 66 AROUND THE WORLD Implied Brazilian Rhythms on Drumset Part 3: Cô co by Uka Gameiro 68 STRICTLY TECHNIQUE Rhythm and Timing Part 2: Two-Note 16th Groupings by Bill Bachman 72 JAZZ DRUMMER’S WORKSHOP Mickey Roker Style and Analysis by Steve Fidyk EQUIPMENT On the Cover 20 PRODUCT CLOSE˜UP • DW Collector’s Series Cherry Drumset • Sabian 2014 Cymbal Vote Winners • Rich Sticks Stock Series Drumsticks • TnR Products Booty Shakers and 50 MIKE JOHNSTON Little Booty Shakers by Miguel Monroy • Magnus Opus FiBro-Tone Snare Drums Back in the day—you know, like ve years ago—you 26 ELECTRONIC REVIEW had to be doing world tours or making platinum records Lewitt Audio DTP Beat Kit Pro 7 Drum to in uence as many drummers as this month’s cover Microphone Pack and LCT 240 Condensers star does with his groundbreaking educational website. -

Estimation of Direction of Arrival of Acoustic Signals Using Microphone

Time-Delay-Estimate Based Direction-of-Arrival Estimation for Speech in Reverberant Environments by Krishnaraj Varma Thesis submitted to the Faculty of The Bradley Department of Electrical and Computer Engineering Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering APPROVED Dr. A. A. (Louis) Beex, Chairman Dr. Ira Jacobs Dr. Douglas K. Lindner October 2002 Blacksburg, VA KEYWORDS: Microphone array processing, Beamformer, MUSIC, GCC, PHAT, SRP-PHAT, TDE, Least squares estimate © 2002 by Krishnaraj Varma Time-Delay-Estimate Based Direction-of-Arrival Estimation for Speech in Reverberant Environments by Krishnaraj Varma Dr. A. A. (Louis) Beex, Chairman The Bradley Department of Electrical and Computer Engineering (Abstract) Time delay estimation (TDE)-based algorithms for estimation of direction of arrival (DOA) have been most popular for use with speech signals. This is due to their simplicity and low computational requirements. Though other algorithms, like the steered response power with phase transform (SRP-PHAT), are available that perform better than TDE based algorithms, the huge computational load required for this algorithm makes it unsuitable for applications that require fast refresh rates using short frames. In addition, the estimation errors that do occur with SRP-PHAT tend to be large. This kind of performance is unsuitable for an application such as video camera steering, which is much less tolerant to large errors than it is to small errors. We propose an improved TDE-based DOA estimation algorithm called time delay selection (TIDES) based on either minimizing the weighted least squares error (MWLSE) or minimizing the time delay separation (MWTDS). -

TA-1VP Vocal Processor

D01141720C TA-1VP Vocal Processor OWNER'S MANUAL IMPORTANT SAFETY PRECAUTIONS ªª For European Customers CE Marking Information a) Applicable electromagnetic environment: E4 b) Peak inrush current: 5 A CAUTION: TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE COVER (OR BACK). NO USER- Disposal of electrical and electronic equipment SERVICEABLE PARTS INSIDE. REFER SERVICING TO (a) All electrical and electronic equipment should be QUALIFIED SERVICE PERSONNEL. disposed of separately from the municipal waste stream via collection facilities designated by the government or local authorities. The lightning flash with arrowhead symbol, within equilateral triangle, is intended to (b) By disposing of electrical and electronic equipment alert the user to the presence of uninsulated correctly, you will help save valuable resources and “dangerous voltage” within the product’s prevent any potential negative effects on human enclosure that may be of sufficient health and the environment. magnitude to constitute a risk of electric (c) Improper disposal of waste electrical and electronic shock to persons. equipment can have serious effects on the The exclamation point within an equilateral environment and human health because of the triangle is intended to alert the user to presence of hazardous substances in the equipment. the presence of important operating and (d) The Waste Electrical and Electronic Equipment (WEEE) maintenance (servicing) instructions in the literature accompanying the appliance. symbol, which shows a wheeled bin that has been crossed out, indicates that electrical and electronic equipment must be collected and disposed of WARNING: TO PREVENT FIRE OR SHOCK separately from household waste. HAZARD, DO NOT EXPOSE THIS APPLIANCE TO RAIN OR MOISTURE. -

Re-20 Om.Pdf

RE-20_e.book 1 ページ 2007年6月8日 金曜日 午後4時32分 Thank you, and congratulations on your choice of the BOSS RE-20 Space Echo. Before using this unit, carefully read the sections entitled: “USING THE UNIT SAFELY” and “IMPORTANT NOTES” (separate sheet). These sections provide important information concerning the proper operation of the unit. Additionally, in order to feel assured that you have gained a good understanding of every feature provided by your new unit, this manual should be read in its entirety. The manual should be saved and kept on hand as a convenient reference. Main Features ● The RE-20 uses COSM technology to faithfully simulate the characteristics of the famed Roland SPACE ECHO RE-201. ● Faithfully reproduces the characteristics of the RE-201, including the echo’s distinctive wow- and flutter-induced wavering and the compressed sound obtained with magnetic saturation. ● The Mode Selector carries on the tradition of the RE-201, offering twelve different reverberation effects through various combinations of the three playback heads and reverb. ● You can set delay times with the TAP input pedal and use an expression pedal (sold separately) for controlling parameters. ● Equipped with a “Virtual Tape Display,” which produces a visual image of a running tape. About COSM (Composite Object Sound Modeling) Composite Object Sound Modeling—or “COSM” for short—is BOSS/Roland’s innovative and powerful technology that’s used to digitally recreate the sound of classic musical instruments and effects. COSM analyzes the many factors that make up the original sound—including its electrical and physical characteristics—and creates a digital model that accurately reproduces the original. -

Implementing an M-Fold Wah-Wah Filter in Matlab

“What if we had, not one Wah Wah Filter, not two, but 20?”: Implementing an M-Fold Wah-Wah Filter in Matlab Digital Audio Systems, DESC9115, 2020 Master of Interaction Design & Electronic Arts (Audio and Acoustics) Sydney School of Architecture, Design and Planning, The University of Sydney ABSTRACT An M-fold Wah-Wah filter can be described as an effect where multiple Wah-Wah filters are applied to a signal, each at a certain frequency range. This report describes the implementation of such a filter in Matlab. By using preexisting code on a single state-variable bandpass filter, multiple bandpass filters are implemented across a defined frequency spectrum. The filter is adjustable through a number of variables, these being: the number of bandpass filters (M), the damping factor of each filter, the spectrum for which the filters are applied, as well as the Wah Frequency, i.e. the number of cycles through each bandpass. 1. INTRODUCTION The Wah-Wah filter is commonly used by guitarist to alter the shape and tone of the note(s) they are playing. The effect can be described as the combination of ‘u’ and ‘ah’ sounds created by human voice. The mouth’s shape here going from a small O to a big O. The center frequencies are called “formants”. The Wah-Wah pedal works in a similar manner, the formants shifts creating a “wah” sound. The Wah-Wah filter is a time-varying delay line filter. Each filter has a set of unique characteristics such as the range of frequencies the effect is applied to and its Wah- Frequency, i.e. -

Recording and Amplifying of the Accordion in Practice of Other Accordion Players, and Two Recordings: D

CA1004 Degree Project, Master, Classical Music, 30 credits 2019 Degree of Master in Music Department of Classical music Supervisor: Erik Lanninger Examiner: Jan-Olof Gullö Milan Řehák Recording and amplifying of the accordion What is the best way to capture the sound of the acoustic accordion? SOUNDING PART.zip - Sounding part of the thesis: D. Scarlatti - Sonata D minor K 141, V. Trojan - The Collapsed Cathedral SOUND SAMPLES.zip – Sound samples Declaration I declare that this thesis has been solely the result of my own work. Milan Řehák 2 Abstract In this thesis I discuss, analyse and intend to answer the question: What is the best way to capture the sound of the acoustic accordion? It was my desire to explore this theme that led me to this research, and I believe that this question is important to many other accordionists as well. From the very beginning, I wanted the thesis to be not only an academic material but also that it can be used as an instruction manual, which could serve accordionists and others who are interested in this subject, to delve deeper into it, understand it and hopefully get answers to their questions about this subject. The thesis contains five main chapters: Amplifying of the accordion at live events, Processing of the accordion sound, Recording of the accordion in a studio - the specifics of recording of the accordion, Specific recording solutions and Examples of recording and amplifying of the accordion in practice of other accordion players, and two recordings: D. Scarlatti - Sonata D minor K 141, V. Trojan - The Collasped Cathedral. -

Subwoofer Arrays: a Practical Guide

Subwoofer Arrays A Practical Guide VVVeVeeerrrrssssiiiioooonnnn 111 EEElEllleeeeccccttttrrrroooo----VVVVooooiiiicccceeee,,,, BBBuBuuurrrrnnnnssssvvvviiiilllllleeee,,,, MMMiMiiinnnnnneeeessssoooottttaaaa,,,, UUUSUSSSAAAA AAApAppprrrriiiillll,,,, 22202000009999 © Bosch Security Systems Inc. Subwoofer Arrays A Practical Guide Jeff Berryman Rev. 1 / June 7, 2010 TABLE OF CONTENTS 1. Introduction .......................................................................................................................................................1 2. Acoustical Concepts.......................................................................................................................................2 2.1. Wavelength ..........................................................................................................................................2 2.2. Basic Directivity Rule .........................................................................................................................2 2.3. Horizontal-Vertical Independence...................................................................................................3 2.4. Multiple Sources and Lobing ...........................................................................................................3 2.5. Beamforming........................................................................................................................................5 3. Gain Shading....................................................................................................................................................6 -

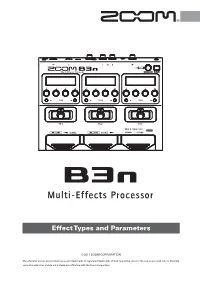

Effect Types and Parameters

Effect Types and Parameters © 2017 ZOOM CORPORATION Manufacturer names and product names are trademarks or registered trademarks of their respective owners. The names are used only to illustrate sonic characteristics and do not indicate any affiliation with the Zoom Corporation. Effect explanation overview Pedal control possible icon Effect type Effect explanation Parameter range PDL Vol The volume curve of the volume pedal can be set. VOL Adjusts the volume. 0 – 100 P Min Adjusts the volume when the pedal is at minimum position. 0 – 100 Max Adjusts the volume when the pedal is at maximum position. 0 – 100 Curve Sets the volume curve. A , B Effect Screen Parameter Parameter explanation Tempo synchronization possible icon Contents DYNAMICS 3 FILTER 4 DRIVE 6 AMP 8 CABINET 9 MODULATION 10 SFX 12 DELAY 13 REVERB 14 PEDAL 15 Additional tables 16 2 3 Effect Types and Parameters [ DYNAMICS ] SlowATTCK This effect slows the attack of each note, resulting in a violin-like performance. Time Adjusts the attack time. 1 – 50 Curve Set the curve of volume change during attack. 0 – 10 Tone Adjusts the tone. 0 – 100 VOL Adjusts the volume. 0 – 100 ZOOM's unique noise reduction cuts noise during pauses in playing without affecting ZNR the tone. GTRIN , DETCT Sets control signal detection level. EFXIN Depth Sets the depth of noise reduction. 0 – 100 THRSH Adjusts the effect sensitivity. 0 – 100 Decay Adjust the envelope release. 0 – 100 This is a simulation of the Demeter COMP-1 Compulator. BlackOpt Added parameters allow you to adjust the tone. Comp Adjusts the depth of the compression. -

Digital Guitar Effects Pedal

Digital Guitar Effects Pedal 01001000100000110000001000001100 010010001000 Jonathan Fong John Shefchik Advisor: Dr. Brian Nutter Texas Tech University SPRP499 [email protected] Presentation Outline Block Diagram Input Signal DSP Configuration (Audio Processing) Audio Daughter Card • Codec MSP Configuration (User Peripheral Control) Pin Connections User Interfaces DSP Connection MSP and DSP Connections Simulink Modeling MSP and DSP Software Flowcharts 2 Objective \ Statements To create a digital guitar effects pedal All audio processing will be done with a DSP 6711 DSK board With an audio daughter card All user peripherals will be controlled using a MSP430 evaluation board Using the F149 model chip User Peripherals include… Floor board switches LCD on main unit Most guitar effect hardware that is available on the market is analog. Having a digital system would allow the user to update the system with new features without having to buy new hardware. 3 Block Diagram DSP 6711 DSK Board Audio Daughter Card Input from Output to Guitar ADC / DAC Amplifier Serial 2 RX Serial 2 [16 bit] Data Path Clock Frame Sync Serial 2 TX MSP 430 Evaluation Board [16 bit] GPIO (3) Outlet MSP430F149 TMS320C6711 Power Supply GND +5V Data Bus (8) RS LEDs (4) EN Voltage +3.3V to MSP R/W Switch Select (4) +5V to LCD LCD Footboard Regulator +0.7V to LCD Drive Display Switch 4 Guitar Input Signal Voltage Range of Input Signal Nominal ~ 300 mV peak-to-peak Maximum ~ 3 V Frequency Range Standard Tuning 500 Hz – 1500 Hz 5 Hardware: DSP -

Microkorg Owner's Manual

E 2 ii Precautions Data handling Location THE FCC REGULATION WARNING (for U.S.A.) Unexpected malfunctions can result in the loss of memory Using the unit in the following locations can result in a This equipment has been tested and found to comply with the contents. Please be sure to save important data on an external malfunction. limits for a Class B digital device, pursuant to Part 15 of the data filer (storage device). Korg cannot accept any responsibility • In direct sunlight FCC Rules. These limits are designed to provide reasonable for any loss or damage which you may incur as a result of data • Locations of extreme temperature or humidity protection against harmful interference in a residential loss. • Excessively dusty or dirty locations installation. This equipment generates, uses, and can radiate • Locations of excessive vibration radio frequency energy and, if not installed and used in • Close to magnetic fields accordance with the instructions, may cause harmful interference to radio communications. However, there is no Printing conventions in this manual Power supply guarantee that interference will not occur in a particular Please connect the designated AC adapter to an AC outlet of installation. If this equipment does cause harmful interference Knobs and keys printed in BOLD TYPE. the correct voltage. Do not connect it to an AC outlet of to radio or television reception, which can be determined by Knobs and keys on the panel of the microKORG are printed in voltage other than that for which your unit is intended. turning the equipment off and on, the user is encouraged to BOLD TYPE. -

Shure Sm57 Specification Pdf

Shure sm57 specification pdf Continue The Shure SM57 The Shure SM57 microphone is a slightly cardioid dynamic microphone made by Shure Incorporated and is usually found in sound reinforcement and studio recording. It is one of the best-selling microphones in the world. It is widely used in amplified music and has been used for speeches by every American president since its introduction in 1965. In 2004, in honor of the four decades of solid and reliable performance, he was inducted into the first Hall of Fame of the TEC Awards. [1] SM57's background may have been traced back to 1937, when bull engineer Benjamin Bauer developed the first directional microphone of a single element, Unidyne, which had a cardoid collection pattern. In 1959, another bull engineer, Ernie Siler, promoted the art of microphone design significantly with the Unidyne III. [1] Seiler examined the torture of Unidyne III during three years of research and development, producing the SM series of rough and reliable ox mic capsules. [1] The SM represents a studio microphone; Siller was a proponent of classical music and expected the SM57 to be used for orchestras. Because he loathed rock music, the TEC Foundation said it was ironic that the microphone had become central to rock music. Features that the SM57 uses the same capsule as the popular SM58. Like the SM58, the SM57 is equipped with an XLR connector and features balanced output, helping to minimize electrical buzzing and noise gathering. According to Shore, the frequency response extends from 40 Hz (Hz) to 15 kHz. -

Flanger, Chorus, Slapback, Echo)

Initial Technology Review 1 Delay Based Audio Effects (Flanger, Chorus, Slapback, Echo) Sam Johnson #420081870 Digital Audio Systems, DESC9115, Semester 1 2012 Graduate Program in Audio and Acoustics Faculty of Architecture, Design and Planning, The University of Sydney ABSTRACT o m In many physical acoustic spaces, sound waves will often b reflect off surfaces causing a perceived delay to the human ear. These delays have various effects in the physical domain, but F are sometimes desired in the digital domain for musical effects, i to compliment a post production visual for continuity, or even l just to make something sound different and unique. It is t important to replicate these delayed sounds in a realistic way e and realistic way to let the listener know what is happening, r rather than having an unnatural sounding output. By using a mix of acoustical phenomena and digital algorithms, a digital system will be able to take an input signal and replicate some of these sounds caused by a “digital delay”. INTRODUCTION When a sound wave travels from the source it will disperse in different directions. The sound waves will continue until it is either out of energy or it hits a surface and reflects a different direction. When the receiver (an ear or microphone) hears the sound, it hears the direct sound coming straight from the sound FIR Comb Filter source before hearing a delayed signal from a reflected surface. If the sound must travels further then there is more time for the The behavior of this type of comb filter is interesting in the way direct signal to be heard, creating an echo, where as if it is close that the time domain affects the frequency domain.