MySQL for PCF | Pivotal Docs

Recommended publications

MariaDB Presentation

THE VALUE OF OPEN SOURCE
Michael "Monty" Widenius, Entrepreneur, MariaDB Hacker, MariaDB CTO, MariaDB Corporation AB
2019-09-25, Seoul
11 Reasons Open Source is Better than Closed Source
● Using open standards (no lock-in to proprietary standards)
● Resource friendly; OSS software tends to work on old hardware
● Lower cost; usually 1/10 of closed source software
● No cost for testing the full software
● Better documentation and more troubleshooting resources
● Better support, in many cases directly from the developers
● Better security, auditability (no trap doors and more eyeballs)
● Better quality; developed together with users
● Better customizability; you can also participate in development
● No vendor lock-in; more than one vendor can give support
● When using open source, you take charge of your own future
Note that using open source does not mean that you have to become a software producer!
OPEN SOURCE, THE GOOD AND THE BAD
● Open source is a better way to develop software
  ● More developers
  ● More spread
  ● Better code (in many cases)
● Works well for projects that can be freely used by a lot of companies in their production or products.
● It's very hard to create a profitable company developing an open source project.
  ● Not enough money to pay developers.
  ● Hard to get money and investors for most projects (except for infrastructure projects like libraries or daemon services).
OPEN SOURCE IS NATURAL OR WHY OPEN SOURCE WORKS
● You use open source because it's less expensive (and re-usable)
● You solve your own problems and get free help and development efforts from others while doing it.

GIS Features in MariaDB and MySQL: What Has Happened in Recent Years?

GIS features in MariaDB and MySQL: What has happened in recent years?
Hartmut Holzgraefe, Principal Support Engineer at MariaDB Inc., [email protected]
August 20, 2016
Overview
1 GIS Introduction
2 MySQL GIS History
3 Other Open Source GIS Databases
4 Performance
5 The End ...
GIS Data Types
Geospatial Information System (GIS) data types describe geometries in a (usually) two-dimensional space. There are several different geometric subtypes:
● Simple types: POINT, LINESTRING, POLYGON, GEOMETRY
● Collection types: MULTIPOINT, MULTILINESTRING, MULTIPOLYGON, GEOMETRYCOLLECTION
Spatial Properties
Spatial properties of a geometry can be:
● Coordinates
● Length
● Area
● Is Closed
● Bounding Rectangle
● ...
Spatial Relationships
The most important spatial relationships between two geometries:
Examples

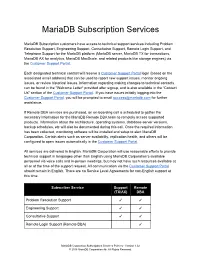

MariaDB Subscription Services Agreement

MARIADB CORPORATION
MariaDB Subscription Services
MariaDB Subscription customers have access to technical support services including Problem Resolution Support, Engineering Support, Consultative Support, Remote Login Support, and Telephone Support for the MariaDB platform (MariaDB server, MariaDB TX for transactions, MariaDB AX for analytics, MariaDB MaxScale, and related products like storage engines) via the Customer Support Portal. Each designated technical contact will receive a Customer Support Portal login (based on the associated email address) that can be used to report new support issues, monitor ongoing issues, or review historical issues. Information about changing technical contacts can be found in the "Welcome Letter" provided after signup, and is also available in the "Contact Us" section of the Customer Support Portal. If you have issues initially logging into the Customer Support Portal, you will be prompted to email [email protected] for further assistance.
If Remote DBA services are purchased, an on-boarding call is scheduled to gather the necessary information for the MariaDB Remote DBA team to remotely access supported products. Information about the architecture, operating systems, database server versions, backup schedules, etc. will also be documented during this call. Once the required information has been collected, monitoring software will be installed and set up to alert MariaDB Corporation. Certain alerts such as server availability, replication health, and others will be configured to open issues automatically in the Customer Support Portal.
All services are delivered in English. MariaDB Corporation will use reasonable efforts to provide technical support in languages other than English using MariaDB Corporation's available personnel via voice calls and in-person meetings, but may not have such resources available at all or at the time of the support request.

MySQL Cluster – Evaluation and Tests

MySQL Cluster – Evaluation and Tests
Michael Raith (B.Sc.), Master-Student
October 2, 2012
Abstract
Websites or web applications, whether they represent shopping systems, on-demand services or social networks, have something in common: data must be stored somewhere and somehow. This job can be achieved by various solutions with very different performance characteristics, e.g. based on simple data files, databases or high-performance RAM storage solutions. For today's popular web applications it is important to handle database operations in a minimum amount of time, because they are struggling with a vast increase in visitors and user-generated data. Therefore, a major requirement for a modern database application is to handle huge data (also called "big data") in a short amount of time and to provide high availability for that data. A very popular database application in the open source community is MySQL, which was originally developed by a Swedish company called MySQL AB and is now maintained by Oracle. MySQL is shipped in a bundle with the Apache web server and is therefore widely distributed. This database is easily installed, maintained and administered. By default MySQL is shipped with the MyISAM storage engine, which has good performance on read requests, but a poor one on massively parallel write requests. With appropriate tuning of various database settings, special architecture setups (replication, partitioning, etc.) or other storage engines, MySQL can be turned into a fast database application. For example, Wikipedia uses MySQL for its backend data storage. In the lectures "Ultra Large Scale Systems" and "System Engineering" taught by Walter Kriha at Media University Stuttgart, the question "Can a MySQL database application handle more than 3000 database requests per second?" came up at some point.

InnoDB 1.1 for MySQL 5.5 User's Guide

InnoDB 1.1 for MySQL 5.5 User's Guide
Abstract
This is the User's Guide for the InnoDB storage engine 1.1 for MySQL 5.5. Beginning with MySQL version 5.1, it is possible to swap out one version of the InnoDB storage engine and use another (the "plugin"). This manual documents the latest InnoDB plugin, version 1.1, which works with MySQL 5.5 and features cutting-edge improvements in performance and scalability. This User's Guide documents the procedures and features that are specific to the InnoDB storage engine 1.1 for MySQL 5.5. It supplements the general InnoDB information in the MySQL Reference Manual. Because InnoDB 1.1 is integrated with MySQL 5.5, it is generally available (GA) and production-ready.
WARNING: Because the InnoDB storage engine 1.0 and above introduces a new file format, restrictions apply to the use of a database created with the InnoDB storage engine 1.0 and above, with earlier versions of InnoDB, when using mysqldump or MySQL replication and if you use the older InnoDB Hot Backup product rather than the newer MySQL Enterprise Backup product. See Section 1.4, "Compatibility Considerations for Downgrade and Backup".
For legal information, see the Legal Notices.
Document generated on: 2014-01-30 (revision: 37565)
Table of Contents
Preface and Legal Notices
1 Introduction to InnoDB 1.1
1.1 Features of the InnoDB Storage Engine
1.2 Obtaining and Installing the InnoDB Storage Engine
1.3 Viewing the InnoDB Storage Engine Version Number

MySQL Backup and Recovery

MySQL Backup and Recovery
Abstract
This is the MySQL Backup and Recovery extract from the MySQL 8.0 Reference Manual. For legal information, see the Legal Notices. For help with using MySQL, please visit the MySQL Forums, where you can discuss your issues with other MySQL users.
Document generated on: 2021-10-01 (revision: 70946)
Table of Contents
Preface and Legal Notices
1 Backup and Recovery
1.1 Backup and Recovery Types
1.2 Database Backup Methods
1.3 Example Backup and Recovery Strategy
1.3.1 Establishing a Backup Policy
1.3.2 Using Backups for Recovery
1.3.3 Backup Strategy Summary
1.4 Using mysqldump for Backups
1.4.1 Dumping Data in SQL Format with mysqldump

Do you "GRANT ALL PRIVILEGES" ... in MySQL/MariaDB?

The OWASP Foundation, http://www.owasp.org
OWASP EU Tour Bucharest 2013
Do you "GRANT ALL PRIVILEGES" ... in MySQL/MariaDB?
Gabriel PREDA, DevOps Engineer, [email protected], @eRadical
Copyright © The OWASP Foundation. Permission is granted to copy, distribute and/or modify this document under the terms of the OWASP License.
DevOps = new BORG
DevOps Engineer ???
● Development
  – Web Applications ("Certified MySQL Associate", "Zend Certified Engineer")
  – Real Time Analytics
● Operations
  – MySQL DBA (15+ instances)
  – Sysadmin (<25 virtual & physical servers)
My MySQL / MariaDB(s)
● Over 15 MariaDB / TokuDB instances
● Statistics in MariaDB
  – < 1TB from Oct 2012
  – < 12G raw data daily
  – < 12,000,000 events processed daily
  – < 90,000,000 rows added daily
BigData? NO!!!
● I can copy all of that to my laptop
● "Working data set" - less than 1G & less than 7,500,000 rows
MySQL History
● 1983 – first version of MySQL created by Monty Widenius
● 1994 – MySQL is released OpenSource
● 2004 Oct – MySQL 4.1 GA
● 2005 Oct – InnoDB (Innobase) is bought by Oracle – Black Friday
● 2008 Jan – MySQL AB is bought by Sun (1bn $)
● 2008 Nov – MySQL 5.1 GA
● 2009 Apr – Sun is bought by Oracle (7,4 bn $)
● 2010 Dec – MySQL 5.5 GA
● 2012 Apr – MariaDB 5.5 GA
● 2013 Feb – MySQL 5.6 – first version made by Oracle
● 2013 Feb – MySQL will be replaced by MariaDB in Fedora & OpenSuSE
* Max Mether – SkySQL "MySQL and MariaDB: Past, Present and Future"
Where are we NOW()?
● Drizzle
● MySQL (Oracle)
● TokuDB (Tokutek)
● Percona Server (Percona)
● MariaDB (Monty Program, MariaDB Foundation)
● Brighthouse (Infobright)
● InfiniDB (Calpont)
Replication:
● Asynchronous
● Semi-synchronous
● Galera Synchronous (Codership)
● Tungsten Replication (Continuent)
Elementary..

MySQL Architectures for Oracle DBA's

MySQL Architectures for Oracle DBA's
UKOUG Conference 2011, December 5th, Birmingham
Oli Sennhauser, Senior MySQL Consultant, FromDual, [email protected]
www.fromdual.com
FromDual
● FromDual provides neutral and independent:
  ● Consulting for MySQL (on-site and remote)
  ● Remote-DBA / MySQL operations
  ● Support for Galera (synchronous MySQL Replication)
  ● Support for MySQL (Basic and Silver)
  ● Training for MySQL
● Consulting Partner of Open Database Alliance (ODBA.org)
● Oracle Silver Partner (OPN)
● More information at: www.fromdual.com
Our customer
Content
● The LAMP Stack
● History of MySQL
● Open Source
● Branches and Forks
● Move from Oracle?
● MySQL Architecture
● Pluggable Storage Engines
● Differences between Oracle and MySQL
● Scale-Up vs. Scale-Out
● High-Availability solutions
● Architectures put in place
The LAMP Stack: We are the Web!
● Linux. Who is behind? Linux Foundation. Alternatives: Windows, Solaris, BSD, others
● Apache. Who is behind? Apache Software Foundation. Alternatives: Lighty (lighttpd), IIS
● MySQL. Who is behind? MySQL/Oracle. Alternatives: PostgreSQL, others
● PHP. Who is behind? Zend Technologies. Alternatives: Perl, Java, Python, Ruby
History of MySQL (timeline figure)
● 1995: project started by Monty Widenius, David Axmark and Allan Larsson
● Jun 2000: GPL
● Jan 2001: v3.23 GA
● Oct 2005: Oracle buys Innobase OY ("InnoDB Friday")
● Oracle tries to buy MySQL
● IPO is announced for 2008
● Jan 2008: Sun buys MySQL for USD 1000 Mio
● 2008: Branching of MySQL begins
● Apr 2009: Oracle buys Sun for USD 7400 Mio

Data Management Organization Charter

Large Synoptic Survey Telescope (LSST) Database Design
Jacek Becla, Daniel Wang, Serge Monkewitz, K-T Lim, Douglas Smith, Bill Chickering
LDM-135, 08/02/2013
Change Record
● Version 1.0, 6/15/2009: Initial version. Author: Jacek Becla
● Version 2.0, 7/12/2011: Most sections rewritten, added scalability test section. Author: Jacek Becla
● Version 2.1, 8/12/2011: Refreshed future-plans and schedule of testing sections, added section about fault tolerance. Authors: Jacek Becla, Daniel Wang
● Version 3.0, 8/2/2013: Synchronized with latest changes to the requirements (LSE-163). Rewrote most of the "Implementation" chapter. Documented new tests, refreshed all other chapters. Authors: Jacek Becla, Daniel Wang, Serge Monkewitz, Kian-Tat Lim, Douglas Smith, Bill Chickering
Table of Contents
1. Executive Summary
2. Introduction
3. Baseline Architecture
3.1 Alert Production and Up-to-date Catalog
3.2 Data Release Production
3.3 User Query Access

(Ab)Use the Kernel: What a Database Server Can Do to Your Kernel

(Ab)use the Kernel: What a database server can do to your kernel
Stewart Smith, Core Drizzle Developer, Sun Microsystems Inc
Kernel Conference Australia 2009, Brisbane, 15-17 July
Databases
● MySQL
● InnoDB
● MySQL Cluster (NDB)
● HA Clustered Database
● Drizzle
What is MySQL
● This small database that is used by a couple of people
What is MySQL Cluster?
[Architecture diagrams: applications issuing INSERT, UPDATE, DELETE and CREATE to the MySQL Server; the Storage Engine API with MyISAM, InnoDB, NDB Cluster and Falcon; clients (Perl, Mono, PHP, .NET, mysql, Ruby) talking to several mysqld processes; a Management Server and Mgm client; Data Nodes reached through the NDB API (update()); a row split into an in-memory part and an on-disk part. Image credit: http://www.flickr.com/photos/xjs-khaos/520473155/]
Checkpointing
● Checkpoint to disk
● On Cluster failure,
  ● Recover to previous Global CheckPoint (GCP)
● GCP is a Local Check Point (LCP) + REDO
● Disk data is Data + UNDO
● Durability is 2PC across replicas
● Txn committed when in memory on all replicas
Memory allocation
● Since in memory data, malloc() large amounts
● Deterministic means not swapping
● Lock pages
● 32GB machine
● 31.5GB locked
Disk IO
● IO with that much locked memory
● O_DIRECT
● fsync() latency
● consistency
Network IO
● Sometimes...
● 1 CPU for network interrupts
● Spinning better than waiting for IO
● Must run DB on different CPUs than network interrupts
● Bind specific

MySQL NDB Cluster 7.3, MySQL NDB Cluster 7.4

MySQL NDB Cluster 7.3, MySQL NDB Cluster 7.4
Abstract
This is the MySQL NDB Cluster 7.3 and MySQL NDB Cluster 7.4 extract from the MySQL 8.0 Reference Manual. For legal information, see the Legal Notices. For help with using MySQL, please visit the MySQL Forums, where you can discuss your issues with other MySQL users.
Document generated on: 2021-09-24 (revision: 70892)
Table of Contents
Preface and Legal Notices
1 General Information
2 NDB Cluster Overview
2.1 NDB Cluster Core Concepts
2.2 NDB Cluster Nodes, Node Groups, Fragment Replicas, and Partitions
2.3 NDB Cluster Hardware, Software, and Networking Requirements
2.4 What is New in MySQL NDB Cluster
2.4.1 What is New in NDB Cluster 7.3
2.4.2 What is New in NDB Cluster 7.4
2.5 NDB: Added, Deprecated, and Removed Options, Variables, and Parameters