UNIT-I Q.1 Explain Differential and Linear Cryptanalysis of DES

Recommended publications

Security Evaluation of Stream Cipher Enocoro-128v2

Hell, M., & Johansson, T. (2010). Security Evaluation of Stream Cipher Enocoro-128v2. CRYPTREC Technical Report. Lund University. Abstract. This report presents a security evaluation of the Enocoro-128v2 stream cipher. Enocoro-128v2 was proposed in 2010 and is a member of the Enocoro family of stream ciphers. This evaluation examines several different attacks applied to the Enocoro-128v2 design. No attack better than exhaustive key search has been found.

The Data Encryption Standard (DES) – History

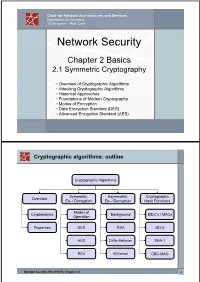

Chair for Network Architectures and Services, Department of Informatics, TU München – Prof. Carle. Network Security (WS 2010/11), Chapter 2: Basics, 2.1 Symmetric Cryptography. • Overview of Cryptographic Algorithms • Attacking Cryptographic Algorithms • Historical Approaches • Foundations of Modern Cryptography • Modes of Encryption • Data Encryption Standard (DES) • Advanced Encryption Standard (AES). Cryptographic algorithms: outline. [Diagram: Overview (Properties, Cryptanalysis); Symmetric En-/Decryption (Modes of Operation, DES, AES, RC4); Asymmetric En-/Decryption (Background, RSA, Diffie-Hellman, ElGamal); Cryptographic Hash Functions (MDCs / MACs, MD-5, SHA-1, CBC-MAC).] Basic terms: plaintext and ciphertext. Plaintext P: the original readable content of a message (or data), e.g. P_netsec = "This is network security". Ciphertext C: the encrypted version of the plaintext, e.g. C_netsec = "Ff iThtIiDjlyHLPRFxvowf". P is encrypted under key k1 to give C, and C is decrypted under key k2 to give P; in symmetric cryptography, k1 = k2. Basic terms: block cipher and stream cipher. Block cipher: a cipher that encrypts / decrypts inputs of length n to outputs of length n given the corresponding key k, where n is the block length. Most modern symmetric ciphers are block ciphers, e.g. AES, DES, Twofish. Stream cipher: a symmetric cipher that generates a pseudorandom bitstream, called the key stream, from the symmetric key k. Ciphertext = key stream XOR plaintext. Cryptographic algorithms: overview
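The stream cipher definition in the excerpt above (ciphertext = key stream XOR plaintext) can be sketched in a few lines of Python. This is an illustration only: the `keystream` generator below is a hypothetical stand-in built from SHA-256 over a counter, not a construction taken from the lecture and not a vetted cipher, and the key value is made up.

```python
import hashlib

def keystream(key: bytes, length: int) -> bytes:
    """Toy key stream: concatenated SHA-256(key || counter) blocks (assumption, not a real cipher)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def xor_stream(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR every data byte with the matching key stream byte."""
    ks = keystream(key, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))

key = b"shared secret, k1 = k2"          # hypothetical symmetric key
plaintext = b"This is network security"
ciphertext = xor_stream(key, plaintext)  # encryption
recovered = xor_stream(key, ciphertext)  # decryption is the same operation
```

Because XOR is its own inverse, the same function serves for both directions, which is why the sender and receiver only need to agree on the key.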

Advanced Encryption Standard (AES) Modes of Operation

Advanced Encryption Standard (AES) Modes of Operation. Arya Rohan, under the guidance of Dr. Edward Schneider, University of Maryland, College Park. Mission: to simulate block cipher modes of operation for AES in MATLAB. • Simulation of the AES (Rijndael algorithm) in MATLAB for a 128-bit key length • Simulation of the five block cipher modes of operation for AES as per the FIPS publication • Comparison of the five modes based on the avalanche effect • Future work. Outline: a brief history of AES; Galois field theory; deciphering the algorithm: encryption; deciphering the algorithm: decryption; block cipher modes of operation; avalanche effect; simulation in MATLAB; conclusion and future work; references. A brief history of AES: In January 1997, researchers the world over were invited by NIST to submit proposals for a new standard to be called the Advanced Encryption Standard (AES). From 15 serious proposals, the Rijndael algorithm, proposed by two Belgian cryptographers, Vincent Rijmen and Joan Daemen, won the contest. The Rijndael algorithm supported plaintext sizes of 128, 192 and 256 bits, as well as key lengths of 128, 192 and 256 bits. The Rijndael algorithm is based on Galois field theory, and hence it gives the algorithm provable security properties. Galois field: group. Group / Abelian group: a group G, or {G, .}, is a set of elements with a binary operation, denoted by ".", that associates to each ordered pair (a, b) of elements in G an element (a . b) such that the following properties are obeyed. Closure: if a and b belong to G, then a . b also belongs to G.

Block Ciphers and the Data Encryption Standard

Lecture 3: Block Ciphers and the Data Encryption Standard. Lecture Notes on “Computer and Network Security” by Avi Kak ([email protected]), January 26, 2021, 3:43pm. ©2021 Avinash Kak, Purdue University. Goals: • To introduce the notion of a block cipher in the modern context • To talk about the infeasibility of ideal block ciphers • To introduce the notion of the Feistel Cipher Structure • To go over DES, the Data Encryption Standard • To illustrate important DES steps with Python and Perl code. Contents: 3.1 Ideal Block Cipher; 3.1.1 Size of the Encryption Key for the Ideal Block Cipher; 3.2 The Feistel Structure for Block Ciphers; 3.2.1 Mathematical Description of Each Round in the Feistel Structure; 3.2.2 Decryption in Ciphers Based on the Feistel Structure; 3.3 DES: The Data Encryption Standard; 3.3.1 One Round of Processing in DES; 3.3.2 The S-Box for the Substitution Step in Each Round; 3.3.3 The Substitution Tables; 3.3.4 The P-Box Permutation in the Feistel Function; 3.3.5 The DES Key Schedule: Generating the Round Keys; 3.3.6 Initial Permutation of the Encryption Key; 3.3.7 Contraction-Permutation that Generates the 48-Bit Round Key from the 56-Bit Key; 3.4 What Makes DES a Strong Cipher (to the Extent It is a Strong Cipher); 3.5 Homework Problems. 3.1 Ideal Block Cipher: In a modern block cipher (but still using a classical encryption method), we replace a block of N bits from the plaintext with a block of N bits from the ciphertext.
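The Feistel structure named in the contents above follows the standard round rule L_i = R_(i-1), R_i = L_(i-1) XOR F(R_(i-1), K_i), and decryption reuses the same network with the round keys in reverse order. A minimal sketch, assuming a 64-bit block split into two 32-bit halves; the round function and round keys are hypothetical placeholders, not the DES round function or key schedule from the lecture notes.

```python
import hashlib

MASK32 = 0xFFFFFFFF

def round_function(half: int, round_key: int) -> int:
    """Hypothetical F: truncated SHA-256 of (half, key). F does not need to be invertible."""
    data = half.to_bytes(4, "big") + round_key.to_bytes(4, "big")
    return int.from_bytes(hashlib.sha256(data).digest()[:4], "big")

def feistel(block: int, round_keys) -> int:
    """Run the Feistel network over a 64-bit block with the given 32-bit round keys."""
    left, right = block >> 32, block & MASK32
    for key in round_keys:
        # L_i = R_(i-1);  R_i = L_(i-1) XOR F(R_(i-1), K_i)
        left, right = right, left ^ round_function(right, key)
    # Swap the halves at the end so the same network also decrypts.
    return (right << 32) | left

def encrypt(block: int, round_keys) -> int:
    return feistel(block, round_keys)

def decrypt(block: int, round_keys) -> int:
    # Same structure, round keys applied in reverse order.
    return feistel(block, list(reversed(round_keys)))

round_keys = [0x0F1E2D3C, 0x4B5A6978, 0x8796A5B4, 0xC3D2E1F0]  # made-up values
block = 0x0123456789ABCDEF
assert decrypt(encrypt(block, round_keys), round_keys) == block
```

The point of the design is visible in `decrypt`: invertibility comes from the XOR-and-swap structure, so any round function can be used.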

Analysis of Selected Block Cipher Modes for Authenticated Encryption

Analysis of Selected Block Cipher Modes for Authenticated Encryption, by Hassan Musallam Ahmed Qahur Al Mahri, Bachelor of Engineering (Computer Systems and Networks) (Sultan Qaboos University) – 2007. Thesis submitted in fulfilment of the requirement for the degree of Doctor of Philosophy, School of Electrical Engineering and Computer Science, Science and Engineering Faculty, Queensland University of Technology, 2018. Keywords: Authenticated encryption, AE, AEAD, ++AE, AEZ, block cipher, CAESAR, confidentiality, COPA, differential fault analysis, differential power analysis, ElmD, fault attack, forgery attack, integrity assurance, leakage resilience, modes of operation, OCB, OTR, SHELL, side channel attack, statistical fault analysis, symmetric encryption, tweakable block cipher, XE, XEX. Abstract: Cryptography assures information security through different functionalities, especially confidentiality and integrity assurance. According to Menezes et al. [1], confidentiality means the process of assuring that no one could interpret information, except authorised parties, while data integrity is an assurance that any unauthorised alterations to a message content will be detected. One possible approach to ensure confidentiality and data integrity is to use two different schemes where one scheme provides confidentiality and the other provides integrity assurance. A more compact approach is to use schemes, called Authenticated Encryption (AE) schemes, that simultaneously provide confidentiality and integrity assurance for a message. AE can be constructed using different mechanisms, and the most common construction is to use block cipher modes, which is our focus in this thesis. AE schemes have been used in a wide range of applications, and defined by standardisation organizations. The National Institute of Standards and Technology (NIST) recommended two AE block cipher modes CCM [2] and GCM [3].

Block Ciphers

Block Ciphers. Chester Rebeiro, IIT Madras. Stinson: Chapter 3. Block cipher: Alice encrypts (E) the message “Attack at Dawn!!” with key KE into the ciphertext #%AR3Xf34^$, sends it over an untrusted communication link, and Bob decrypts (D) it with key KD to recover “Attack at Dawn!!”. The encryption key is the same as the decryption key (KE = KD). Block cipher, encryption (inputs: a secret key of the key length and a plaintext of the block length; output: ciphertext): • A block cipher encryption algorithm encrypts n bits of plaintext at a time • May need to pad the plaintext if necessary • y = ek(x). Block cipher, decryption: • A block cipher decryption algorithm recovers the plaintext from the ciphertext • x = dk(y). Inside the block cipher (an iterative cipher): plaintext block → key whitening → round 1 (key1) → round 2 (key2) → round 3 (key3) → ... → round n (keyn) → ciphertext block. • Each round has the same endomorphic cryptosystem, which takes a key and produces an intermediate output • Size of the key is huge, much larger than the block size. Inside the block cipher (the key schedule): a key expansion derives round key 1, ..., round key n from the secret key. • A single secret key of fixed size is used to generate ‘round keys’ for each round. Inside the round function (round input → add round key → confusion layer → diffusion layer): • Add round key: mixing operation between the round input and the round key, typically an ex-or operation • Confusion layer: makes the relationship between round input and output complex.
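The iterated structure described above (key whitening, then rounds that combine a confusion layer, a diffusion layer, and a round-key XOR, with round keys expanded from one secret key) can be sketched as a toy 16-bit cipher. Everything concrete here is an assumption made for illustration: the 4-bit S-box, the bit permutation, the four rounds, and the sliding-window key schedule are not taken from the slides, and the cipher offers no real security.

```python
# Toy 16-bit iterated cipher: whitening, then rounds of S-box, bit permutation, round-key XOR.
SBOX = [0xE, 0x4, 0xD, 0x1, 0x2, 0xF, 0xB, 0x8,
        0x3, 0xA, 0x6, 0xC, 0x5, 0x9, 0x0, 0x7]   # assumed 4-bit S-box (a permutation of 0..15)
INV_SBOX = [SBOX.index(v) for v in range(16)]
ROUNDS = 4

def key_schedule(master_key: int) -> list:
    """Hypothetical key expansion: overlapping 16-bit windows of a 32-bit secret key."""
    return [(master_key >> (4 * i)) & 0xFFFF for i in range(ROUNDS + 1)]

def substitute(state: int, box: list) -> int:
    """Confusion layer: apply the 4-bit box to each of the four nibbles."""
    return sum(box[(state >> (4 * i)) & 0xF] << (4 * i) for i in range(4))

def permute(state: int) -> int:
    """Diffusion layer: move bit 4a+b to position 4b+a (this map is its own inverse)."""
    out = 0
    for i in range(16):
        if (state >> i) & 1:
            out |= 1 << (4 * (i % 4) + i // 4)
    return out

def encrypt(block: int, master_key: int) -> int:
    keys = key_schedule(master_key)
    state = block ^ keys[0]                       # key whitening
    for k in keys[1:]:
        state = permute(substitute(state, SBOX)) ^ k
    return state

def decrypt(block: int, master_key: int) -> int:
    keys = key_schedule(master_key)
    state = block
    for k in reversed(keys[1:]):                  # undo the rounds in reverse order
        state = substitute(permute(state ^ k), INV_SBOX)
    return state ^ keys[0]

master_key = 0x3A94D63F                           # made-up 32-bit secret key
ciphertext = encrypt(0x26B7, master_key)
```

Each layer is individually invertible, so decryption simply undoes the layers in reverse order with the same round keys.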

The Twin Diffie-Hellman Problem and Applications

The Twin Diffie-Hellman Problem and Applications. David Cash, Eike Kiltz, Victor Shoup. February 10, 2009. Abstract: We propose a new computational problem called the twin Diffie-Hellman problem. This problem is closely related to the usual (computational) Diffie-Hellman problem and can be used in many of the same cryptographic constructions that are based on the Diffie-Hellman problem. Moreover, the twin Diffie-Hellman problem is at least as hard as the ordinary Diffie-Hellman problem. However, we are able to show that the twin Diffie-Hellman problem remains hard, even in the presence of a decision oracle that recognizes solutions to the problem; this is a feature not enjoyed by the Diffie-Hellman problem in general. Specifically, we show how to build a certain “trapdoor test” that allows us to effectively answer decision oracle queries for the twin Diffie-Hellman problem without knowing any of the corresponding discrete logarithms. Our new techniques have many applications. As one such application, we present a new variant of ElGamal encryption with very short ciphertexts, and with a very simple and tight security proof, in the random oracle model, under the assumption that the ordinary Diffie-Hellman problem is hard. We present several other applications as well, including: a new variant of Diffie and Hellman’s non-interactive key exchange protocol; a new variant of Cramer-Shoup encryption, with a very simple proof in the standard model; a new variant of Boneh-Franklin identity-based encryption, with very short ciphertexts; a more robust version of a password-authenticated key exchange protocol of Abdalla and Pointcheval.

Fair and Efficient Hardware Benchmarking of Candidates in Cryptographic Contests

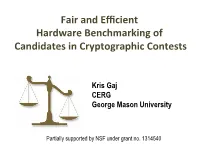

Fair and Efficient Hardware Benchmarking of Candidates in Cryptographic Contests. Kris Gaj, CERG, George Mason University. Partially supported by NSF under grant no. 1314540. Designs & results for this talk contributed by “Ice” Homsirikamol, Farnoud Farahmand, Ahmed Ferozpuri, Will Diehl, Marcin Rogawski, and Panasayya Yalla. Cryptographic standard contests (timeline, 1997 to 2017): AES (IX.1997 to X.2000), 15 block ciphers → 1 winner; NESSIE, CRYPTREC (I.2000 to XII.2002); eSTREAM (XI.2004 to IV.2008), 34 stream ciphers → 4 HW winners + 4 SW winners; SHA-3 (X.2007 to X.2012), 51 hash functions → 1 winner; CAESAR (I.2013 to TBD), 57 authenticated ciphers → multiple winners. Evaluation criteria in cryptographic contests: security; software efficiency (µProcessors, µControllers); hardware efficiency (FPGAs, ASICs); flexibility; simplicity; licensing. AES contest 1997-2000, final round: speed in FPGAs (GMU results) vs. votes at the AES 3 conference. Hardware results matter! [Figure: throughput vs. area, normalized to results for SHA-256 and averaged over 11 FPGA families, 256-bit variants; axes: overall normalized throughput, overall normalized area; annotation: early leader.] [Figure: SHA-3 finalists in high-performance FPGA families.] FPGA evaluations, from AES to SHA-3 (values given as AES / eSTREAM / SHA-3). Design: primary optimization target (throughput / area and throughput/area / throughput/area); multiple architectures (no / yes / yes); embedded resources (no / no / yes). Benchmarking: multiple FPGA families (no / no / yes); specialized tools (no / no / yes); experimental results (no / no / yes). Reproducibility: availability

2.4 The Random Oracle Model

National Chiao Tung University, Department of Computer Science, doctoral dissertation: Provably Secure Public Key Cryptosystems and Password Authenticated Key Exchange Protocols. Student: Ting-Yi Chang. Advisors: Dr. Wei-Pang Yang and Dr. Min-Shiang Hwang. A Dissertation Submitted to the Department of Computer Science, College of Computer Science, National Chiao Tung University, in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Computer Science. December 2006, Hsinchu, Taiwan, Republic of China. Abstract: In this thesis, we focus on two topics: public key cryptosystems and password authenticated key exchange protocols.

Development of the Advanced Encryption Standard

Journal of Research of the National Institute of Standards and Technology, Volume 126, Article No. 126024 (2021), https://doi.org/10.6028/jres.126.024. Development of the Advanced Encryption Standard. Miles E. Smid. Formerly: Computer Security Division, National Institute of Standards and Technology, Gaithersburg, MD 20899, USA. [email protected]. Strong cryptographic algorithms are essential for the protection of stored and transmitted data throughout the world. This publication discusses the development of Federal Information Processing Standards Publication (FIPS) 197, which specifies a cryptographic algorithm known as the Advanced Encryption Standard (AES). The AES was the result of a cooperative multiyear effort involving the U.S. government, industry, and the academic community. Several difficult problems that had to be resolved during the standard’s development are discussed, and the eventual solutions are presented. The author writes from his viewpoint as former leader of the Security Technology Group and later as acting director of the Computer Security Division at the National Institute of Standards and Technology, where he was responsible for the AES development. Key words: Advanced Encryption Standard (AES); consensus process; cryptography; Data Encryption Standard (DES); security requirements; SKIPJACK. Accepted: June 18, 2021. Published: August 16, 2021; Current Version: August 23, 2021. This article was sponsored by James Foti, Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology (NIST). The views expressed represent those of the author and not necessarily those of NIST. 1. Introduction: In the late 1990s, the National Institute of Standards and Technology (NIST) was about to decide if it was going to specify a new cryptographic algorithm standard for the protection of U.S.

Chapter 3 – Block Ciphers and the Data Encryption Standard

Symmetric Cryptography, Chapter 6. Block vs stream ciphers: • Block ciphers process messages in blocks, each of which is then en/decrypted – like a substitution on very big characters (64 bits or more) • Stream ciphers process messages a bit or byte at a time when en/decrypting • Many current ciphers are block ciphers – better analyzed – broader range of applications. Block cipher principles: • Block ciphers look like an extremely large substitution • Would need a table of 2^64 entries for a 64-bit block • An arbitrary reversible substitution cipher for a large block size is not practical – for a 64-bit general substitution block cipher, the key (the table itself) is 64 × 2^64 bits • Most symmetric block ciphers are based on a Feistel cipher structure • Needed since we must be able to decrypt ciphertext to recover messages efficiently. Ideal block cipher. Substitution-permutation ciphers: • In 1949 Shannon introduced the idea of substitution-permutation (S-P) networks – modern substitution-transposition product cipher • These form the basis of modern block ciphers • S-P networks are based on the two primitive cryptographic operations we have seen before: – substitution (S-box) – permutation (P-box) (transposition) • Provide confusion and diffusion of the message. Diffusion and confusion: • Introduced by Claude Shannon to thwart cryptanalysis based on statistical analysis – assume the attacker has some knowledge of the statistical characteristics of the plaintext • Cipher needs to completely obscure statistical properties of the original message • A one-time pad does this. Diffusion
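The table-size remark in the excerpt above (one table entry per possible 64-bit block) can be checked with a little arithmetic. For an n-bit block, a general reversible substitution needs a table of 2^n entries of n bits each, so the table that acts as the key takes n × 2^n bits, and the number of distinct reversible mappings is (2^n)!. The helper names below are hypothetical, chosen only for this sketch.

```python
import math

def ideal_cipher_table(n: int):
    """Return (number of table entries, table size in bits) for an n-bit ideal block cipher."""
    entries = 2 ** n
    return entries, n * entries

def log2_num_mappings(n: int) -> float:
    """log2 of (2^n)!, the number of reversible n-bit substitutions, via the log-gamma function."""
    return math.lgamma(2 ** n + 1) / math.log(2)

for n in (4, 8, 64):
    entries, bits = ideal_cipher_table(n)
    print(f"n={n}: {entries} entries, {bits} table bits, "
          f"about 2^{log2_num_mappings(n):.3g} possible mappings")
```

For n = 64 the table alone is 64 × 2^64 = 2^70 bits, which is why practical designs approximate the ideal cipher with a structured construction instead of storing the mapping.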

Multi-Server Authentication Key Exchange Approach in Bigdata Environment

International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056, p-ISSN: 2395-0072, Volume: 04 Issue: 07 | July -2017, www.irjet.net. MULTI-SERVER AUTHENTICATION KEY EXCHANGE APPROACH IN BIGDATA ENVIRONMENT. Miss. Kiran More (PG Student, Dept. of Computer Engg., RMD Sinhgad School of Engineering, Warje, Pune), Prof. Jyoti Raghatwan (Dept. of Computer Engg., RMD Sinhgad School of Engineering, Warje, Pune). Abstract - The key establishment difficulty is the maximum central issue and we learn the trouble of key organization for secure many to many communications for past several years. The trouble is stimulated by the broadcast of huge level detached file organizations behind similar admission to various storage space tactics. Our chore focal ideas on the current Internet commonplace for such folder systems that is Parallel Network Folder System [pNFS], which generates employment of Kerberos to establish up similar session keys flanked by customers and storing strategy. Our evaluation of the available Kerberos bottommost procedure validates that it has a numeral of borders: (a) a metadata attendant make the …

Over-all Equivalent Files System. Which are usually required for advanced scientific or data exhaustive applications such as digital animation studios, computational fluid dynamics, and semiconductor manufacturing. In these milieus, hundreds or thousands of file structure clients bit data and engender very much high summative I/O load on the file coordination supporting petabytes or terabytes scale storage capacities. Liberated of the enlargement of the knot and high-performance computing, the arrival of clouds and the MapReduce program writing model has resulted in file system such as the Hadoop Distributed File System (HDFS).