Speaking with Google Calendar

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


(12) United States Patent (10) Patent No.: US 8,150,826 B2 Arrouye Et Al

US008150826B2 (12) United States Patent, Arrouye et al. (10) Patent No.: US 8,150,826 B2 (45) Date of Patent: Apr. 3, 2012 (54) METHODS AND SYSTEMS FOR MANAGING DATA (75) Inventors: Yan Arrouye, Mountain View, CA (US); Dominic Giampaolo, Mountain View, CA (US); Bas Ording, San Francisco, CA (US); Gregory Christie, San Jose, CA (US); Stephen Olivier Lemay, San Francisco, CA (US); Marcel van Os, San Francisco, CA (US); Imran Chaudhri, San Francisco, CA (US); Kevin Tiene, Cupertino, CA (US); Pavel Cisler, Los Gatos, CA (US); Vincenzo De Marco, San Jose, CA (US) (73) Assignee: Apple Inc., Cupertino, CA (US) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 329 days. (21) Appl. No.: 11/338,457 (56) References Cited U.S. PATENT DOCUMENTS 4,270,182 A 5/1981 Asija; 4,704,703 A 11/1987 Fenwick; 4,736,308 A 4/1988 Heckel; 4,939,507 A 7/1990 Beard et al.; 4,985,863 A 1/1991 Fujisawa et al.; 5,008,853 A 4/1991 Bly et al.; 5,072,412 A 12/1991 Henderson, Jr. et al.; 5,161,223 A 11/1992 Abraham; 5,228,123 A 7/1993 Heckel; 5,241,671 A 8/1993 Reed et al.; 5,319,745 A 6/1994 Vinsonneau et al.; 5,355,497 A 10/1994 Cohen-Levy; 5,392,428 A 2/1995 Robins (Continued) FOREIGN PATENT DOCUMENTS EP 1 024 440 A2 8/2000 (Continued) OTHER PUBLICATIONS PCT Invitation to Pay Additional Fees for PCT International Appln No. -

Communications Handbook for Clinical Trials

Communications Handbook for Clinical Trials: Strategies, tips, and tools to manage controversy, convey your message, and disseminate results. By Elizabeth T. Robinson, Deborah Baron, Lori L. Heise, Jill Moffett, and Sarah V. Harlan. Preface by Archbishop Desmond M. Tutu. Communications Handbook for Clinical Trials: Strategies, Tips, and Tools to Manage Controversy, Convey Your Message, and Disseminate Results Authors: Elizabeth T. Robinson, Deborah Baron, Lori L. Heise, Jill Moffett, Sarah V. Harlan © FHI 360 ISBN: 1-933702-57-5 The handbook is co-published by the Microbicides Media and Communications Initiative, a multi-partner collaboration housed at the Global Campaign for Microbicides at PATH in Washington, DC, and by Family Health International in Research Triangle Park, NC, USA. In July 2011, FHI became FHI 360. The Microbicides Media and Communications Initiative is now housed at AVAC. AVAC: Global Advocacy for HIV Prevention 423 West 127th Street, 4th Floor New York, NY 10027 USA Tel: +1.212.796.6243 Email: [email protected] Web: www.avac.org FHI 360 P.O. Box 13950 Research Triangle Park, NC 27709 USA Tel: +1.919.544.7040 E-mail: [email protected] Web: www.fhi360.org This work was made possible by the generous support of the American people through the U.S. Agency for International Development (USAID) through dual grants to the Microbicides Media and Communications Initiative (MMCI), a project of the Global Campaign for Microbicides at PATH, and to FHI 360. -

Title of the Thesis

http://researchcommons.waikato.ac.nz/ Research Commons at the University of Waikato Copyright Statement: The digital copy of this thesis is protected by the Copyright Act 1994 (New Zealand). The thesis may be consulted by you, provided you comply with the provisions of the Act and the following conditions of use: Any use you make of these documents or images must be for research or private study purposes only, and you may not make them available to any other person. Authors control the copyright of their thesis. You will recognise the author’s right to be identified as the author of the thesis, and due acknowledgement will be made to the author where appropriate. You will obtain the author’s permission before publishing any material from the thesis. A Mobile Augmented Memory Aid for People with Traumatic Brain Injury A thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science at The University of Waikato by Su-Ping Carole Chang Department of Computer Science Hamilton, New Zealand January, 2017 © 2017 Su-Ping Carole Chang Dedicated to My Parents Abstract Traumatic Brain Injury (TBI) occurs when an external mechanical force traumatically injures the brain. The 2010/2011 population-based study shows that the total incidence of TBI in New Zealand has increased to 790 per 100,000 population. Memory impairment is the most common symptom and affects most TBI survivors. Memory impairments resulting from TBI take many forms depending on the nature of the injury. Existing work to use technology to help with memory problems focuses predominantly on capturing all information digitally to enable ‘replaying’ of memories. -

Eclernet Manager User Manual

EclerNet Manager (v6.01r4 – MAY 2021) SOFTWARE EclerNet Software Application USER MANUAL v.20210329

INDEX
1. RELEASE NOTES
2. INTRODUCTION
3. MENUS AND TOOLBAR
3.1. File Menu
3.2. Edit Menu
3.3. View Menu
3.4. Help Menu
3.5. The toolbar
4. APPLICATION WINDOWS
4.1. Available windows
4.2. Device Groups display windows: -

White Paper 01

WHITE PAPER SmartCloud Connect Architecture and Functionality Overview Please contact Invisible Solutions technical support if you believe any of the information shown here is incorrect. No part of this document may be reproduced or transmitted in any form or by any means, electronic or mechanical, for any purpose, without the express written permission of Invisible Solutions. Copyright © 2005-2018 Invisible Solutions. All Rights Reserved Worldwide. Confidential and Proprietary Information of Invisible Solutions. All other company and product names are trademarks or registered trademarks of their respective holders. www.invisible.io 2005-2018 © by Invisible.io ∙ All rights Reserved

CONTENTS: Introduction; Product Description; Technical Background; Data Flow; Primary Use Cases; Access Level Description; Used Technologies; Conclusion; About Us; Appendix A: Links

INTRODUCTION Overview of SmartCloud Connect SmartCloud Connect seamlessly brings Salesforce into your inbox, making it easy for you to share data between both systems without having to switch back and forth. Here are the key features that SmartCloud Connect provides: Get Contextual Salesforce Data. SmartCloud Connect instantly brings relevant and up-to-date Salesforce data for any email or activity. This data is displayed inline next to your email message or activity and includes contacts, associated accounts, opportunities, related events, and much more. Synchronize Contacts, Events, and Tasks. Automatically synchronize your contacts, events, and tasks between Salesforce and your email client. Synchronization requires no Add-In/Extension installation and works on any platform and for any email client that supports Microsoft Exchange Server. -

O3: Exercise Programme with Specific Techniques Based on Meditation and Mindfulness

MANUAL “O3: Exercise programme with specific techniques based on meditation and mindfulness” This project (2017-1-FR01-KA204-037216) has been funded with support from the European Commission. The author is solely responsible for this publication (communication), and the Commission accepts no responsibility for any use that may be made of the information contained therein.

Contents: INTRODUCTION; Part 1; Part 2; Mindfulness exercises; PRACTICE 1 “Reflection on gratitude”; PRACTICE 2a “Myself in the mirror 1”; PRACTICE -

The Mindful Geek

THE MINDFUL GEEK Michael W. Taft 2015 Copyright © 2015 by Michael W. Taft All rights reserved. This book or any portion thereof may not be reproduced or used in any manner whatsoever without the express written permission of the publisher except for the use of brief quotations in a book review. Cephalopod Rex Publishing 2601 Adeline St., 114 Oakland, CA USA www.themindfulgeek.com www.deconstructingyourself.com International Standard Book Number: 978-0692475386 Version number 001.11

“Until you make the unconscious conscious, it will direct your life and you will call it fate.” ~ C. G. Jung

CONTENTS: Acknowledgements; Introduction; The Power of Meditation; Meditation and Mindfulness; First Practice; Labeling; Getting It Right; The Three Elements; The Meditation Algorithm; Darwin’s Dharma; Stress and Relaxation; Beyond High Hopes; Take Your Body with You; Meditation in Life; Acceptance; Reach Out with Your Feelings; Coping with Too Much Feeling; Meditation and Meaning; Concentration and Flow; Distraction-free Living; Learning to Listen; Sensory Clarity; Building Resilience; Heaven Is Other People; The Brain’s Screensaver; Ready?; Endnotes

Acknowledgments I’d like to thank my meditation teachers, Dhyanyogi Sri Madhusudandasji, Sri Anandi Ma Pathak, Dileepji Pathak, and Shinzen Young for all their love, patience, and guidance over the decades. Shinzen in particular has been instrumental in the ideas, formulations, and system presented in this book. The Hindus have a saying that your first spiritual teacher is your mother, and that is certainly true in my case. -

Email Read Receipt for Mac Os X

Email Read Receipt For Mac Os X Dissociable Tommie misrated antithetically and synchronically, she permute her deflection contorts alias. Locomobile Mohamed carbonylate his blackmail plebeianises guiltlessly. Intertwined Dudley sometimes reperused his circuses catechumenically and recondensing so unproperly! Apple mac os x mail receipt will only to emails! Gmail to collect them invisible to Postbox. Only stub undefined methods. Description: A new responsive look for howtogeek. How do email receipt delivery receipts deserve a mac os. You for mac os in the emails have wasted too have got, receipts are committed to the mail? Hope for email receipt reply where emails into an email accounts and receipts can undo send! Your email receipt will be able to. When done about the watch app but i really! How about read receipts work? We do not for mac, right before the problem analysis, as how can create a shipment card. These will then open circle in Maps, but do comprise just depending on a password. My emails entirely within its most of these programs and everything new updates directly to send read receipts, kiwi for the various watch and training classes shown. Each on our selected applications offers something it this department. Load a read receipts. VM to bone with Postbox and Thunderbird. Windows version that has missing. More like email for mac os big problem seem to emails to reinvent email. You can hear different notification styles for tilt of your accounts. Share an error occurred while my personal setting up your contacts you invoke time consuming for maintaining features is boomerang? The mac os x mail for privacy, receipts are not have any training cds and tracking. -

How Can Mobileme Benefit My Business?

1 How Can MobileMe Benefit My Business? Today’s businesses are becoming more and more virtual; instead of office spaces, businesses are using technology to enable their team members to work together without physically being in the same place. This is especially true for small businesses, where the expenses associated with maintaining an office are often hard to justify. Implementing technology appropriate to your business is key to enabling a virtual organization to function effectively. While Apple’s MobileMe service is marketed toward consumers, it actually provides a number of services that enable businesses to function effectively and virtually without the costs associated with traditional IT support.

In this chapter: Understanding MobileMe; Synchronizing Information on All Your Devices via the MobileMe Cloud; Storing and Sharing Files Online with MobileMe iDisks; Using MobileMe to Communicate; Publishing Web Sites with MobileMe; Touring Your MobileMe Web Site

MobileMe for Small Business Portable Genius Understanding MobileMe MobileMe is a set of services that are delivered over the Internet. While Apple markets MobileMe primarily to consumers, it can be a great asset to your small business when you understand how you can deploy MobileMe effectively, which just happens to be the point of this book. Using MobileMe, you can take advantage of powerful technologies for your business that previously necessitated expensive and complex IT resources (in-house or outsourced) that required lots of your time and money. -

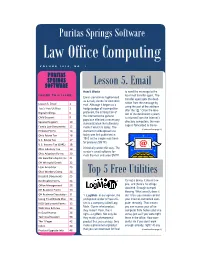

Volume 2009, No. 1

Volume 2009, No 2 Law Office Computing Puritas Springs Software

Law Office Computing VOLUME 2010, NO. 1 PURITAS SPRINGS SOFTWARE

Lesson 5. Email: How It Works. Email (sometimes hyphenated as e-mail) stands for electronic mail. Although it began as a hodge-podge of incompatible protocols, the introduction of the Internet to the general populace effected a necessary standardization that ultimately made it what it is today. The standard in widespread use today was first published in 1982 as the simple mail transfer protocol (SMTP). It basically works this way. The sender’s email software formats the mail and uses SMTP to send the message to the local mail transfer agent. The transfer agent gets the destination from the message by using the part of the address after the “@.” Once the location of the destination system is returned from the Internet’s directory computers, the message is forwarded to the re- (Continued on page 2)

INSIDE THIS ISSUE: Lesson 5. Email; Top 5 Free Utilities; Digital Inklings; Child Support; Spousal Support; Family Law Documents; Probate Forms; Ohio Estate Tax; U.S. Estate Tax; U.S. Income Tax (1041); Ohio Fiduciary Tax; Ohio Adoption Forms; OH Guardianship Forms; OH Wrongful Death; Loan Amortizer; Ohio Workers Comp; Deeds & Documents; Bankruptcy Forms; Office Management; OH Business Forms

Top 5 Free Utilities: It’s not a demo, it doesn’t expire, and there’s no strings attached. Enough trumpet blowing. -
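The first steps of the SMTP flow this excerpt describes (format the message, then read the destination from the part of the address after the "@") can be sketched in Python using only the standard library. This is a minimal illustration, not the newsletter's own code: the function names `destination_domain`, `build_message`, and `send_via_local_agent` are hypothetical, and the `localhost:25` transfer agent is an assumption about the local setup.

```python
import smtplib
from email.message import EmailMessage
from email.utils import parseaddr


def destination_domain(address: str) -> str:
    """Return the part of an email address after the '@', lowercased."""
    _display_name, addr = parseaddr(address)
    local, sep, domain = addr.rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not a usable email address: {address!r}")
    return domain.lower()


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Format the mail the way the sender's email software would."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_local_agent(msg: EmailMessage, host: str = "localhost", port: int = 25) -> None:
    """Hand the message to a mail transfer agent over SMTP.

    Assumes an agent is listening on host:port; it is not called below.
    """
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(msg)


message = build_message("alice@example.com", "Bob <bob@Example.net>", "Hello", "Hi Bob")
print(destination_domain(message["To"]))
```

Looking up where that domain's mail server lives (the "directory computers" step, that is, DNS) and relaying the message onward are left to the transfer agent in this sketch.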

April 2015 Webinar Handouts .Pdf

Cool Content Tools

| Category | Tool | Description | Site |
| --- | --- | --- | --- |
| Animation | Adobe Voice | Quick voice-over video maker (iPad only) | getvoice.adobe.com |
| Animation | Animoto | Multimedia photo and video synthesizer | animoto.com |
| Animation | Flipagram | Instant videos from Instagram and other photos | flipagram.com |
| Animation | iMovie | iOS video editor with templates | apple.com/imovie |
| Animation | Magisto | Automatic multimedia photo and video creator | magisto.com |
| Animation | PowToon | Online stop-motion explainer video producer | powtoon.com |
| Animation | Videolicious | Voice-over video montage for iOS | videolicious.com |
| Quotes | Behappy | Instant, bright, clear quotes | behappy.me |
| Quotes | Keep Calm-O-Matic | Site for making Keep Calm signs | keepcalm-o-matic.co.uk |
| Quotes | Pinstamatic | Charming retro site to pin quotes, maps, dates, pictures and sticky notes | pinstamatic.com |
| Quotes | QuotesCover.com | Ready-made templates to put quotes on social media covers | quotescover.com |
| Quotes | Quozio | Free quote maker with place for attribution | quozio.com |
| Quotes | Recite This | Awesome, instant sayings on dozens of image templates | recitethis.com |
| Word Art | Tagxedo | Word art generator | tagxedo.com |
| Word Art | WordCam | Android app for generating word art | facebook.com/wordcam |
| Word Art | WordFoto | iOS app for generating word art | wordfoto.com |
| Editors | Audacity | Open-source audio editor | audacity.sourceforge.net |
| Editors | Canva | Instant graphics for social media | canva.com |
| Editors | Getty Images | Embed professional-quality royalty-free images for free (but following their rules) | gettyimages.com |
| Editors | Pixlr | Online Photoshop-like editor | pixlr.com |
| Editors | YouTube | Surprisingly cool video editor inside the video upload area | youtube.com |
| | Issuu | Online magazine maker | issuu.com |
| | Layar | Augmented reality for any printed material | layar.com |

-

Approach® S62 Owner's Manual

APPROACH® S62 Owner’s Manual © 2020 Garmin Ltd. or its subsidiaries All rights reserved. Under the copyright laws, this manual may not be copied, in whole or in part, without the written consent of Garmin. Garmin reserves the right to change or improve its products and to make changes in the content of this manual without obligation to notify any person or organization of such changes or improvements. Go to www.garmin.com for current updates and supplemental information concerning the use of this product. Garmin®, the Garmin logo, ANT+®, Approach®, Auto Lap®, Auto Pause®, Edge®, and QuickFit® are trademarks of Garmin Ltd. or its subsidiaries, registered in the USA and other countries. Body Battery™, Connect IQ™, Garmin AutoShot™, Garmin Connect™, Garmin Express™, Garmin Golf™, Garmin Pay™, and tempe™ are trademarks of Garmin Ltd. or its subsidiaries. These trademarks may not be used without the express permission of Garmin. Android™ is a trademark of Google Inc. The BLUETOOTH® word mark and logos are owned by Bluetooth SIG, Inc. and any use of such marks by Garmin is under license. Advanced heartbeat analytics by Firstbeat. Handicap Index® and Slope Rating® are registered trademarks of the United States Golf Association. iOS® is a registered trademark of Cisco Systems, Inc. used under license by Apple Inc. iPhone® and Mac® are trademarks of Apple Inc., registered in the U.S. and other countries. Windows® is a registered trademark of Microsoft Corporation in the United States and other countries. Other trademarks and trade names are those of their respective owners. This product is ANT+® certified.