Next-Gen-Scrapy: Extracting NFL Tracking Data from Images to Evaluate Quarterbacks and Pass Defenses

Total Pages: 16

File Type: pdf, Size: 1020 Kb

Recommended publications

-

Miami Dolphins Weekly Release

Miami Dolphins Weekly Release Game 12: Miami Dolphins (4-7) vs. Baltimore Ravens (4-7) Sunday, Dec. 6 • 1 p.m. ET • Sun Life Stadium • Miami Gardens, Fla. RESHAD JONES Tackle total leads all NFL defensive backs and is fourth among all NFL 20 / S 98 defensive players 2 Tied for first in NFL with two interceptions returned for touchdowns Consecutive games with an interception for a touchdown, 2 the only player in team history Only player in the NFL to have at least two interceptions returned 2 for a touchdown and at least two sacks 3 Interceptions, tied for fifth among safeties 7 Passes defensed, tied for sixth-most among NFL safeties JARVIS LANDRY One of two players in NFL to have gained at least 100 yards on rushing (107), 100 receiving (816), kickoff returns (255) and punt returns (252) 14 / WR Catch percentage, fourth-highest among receivers with at least 70 71.7 receptions over the last two years Of two receivers in the NFL to have a special teams touchdown (1 punt return 1 for a touchdown), rushing touchdown (1 rushing touchdown) and a receiving touchdown (4 receiving touchdowns) in 2015 Only player in NFL with a rushing attempt, reception, kickoff return, 1 punt return, a pass completion and a two point conversion in 2015 NDAMUKONG SUH 4 Passes defensed, tied for first among NFL defensive tackles 93 / DT Third-highest rated NFL pass rush interior defensive lineman 91.8 by Pro Football Focus Fourth-highest rated overall NFL interior defensive lineman 92.3 by Pro Football Focus 4 Sacks, tied for sixth among NFL defensive tackles 10 Stuffs, is the most among NFL defensive tackles 4 Pro Bowl selections following the 2010, 2012, 2013 and 2014 seasons TABLE OF CONTENTS GAME INFORMATION 4-5 2015 MIAMI DOLPHINS SEASON SCHEDULE 6-7 MIAMI DOLPHINS 50TH SEASON ALL-TIME TEAM 8-9 2015 NFL RANKINGS 10 2015 DOLPHINS LEADERS AND STATISTICS 11 WHAT TO LOOK FOR IN 2015/WHAT TO LOOK FOR AGAINST THE RAVENS 12 DOLPHINS-RAVENS OFFENSIVE/DEFENSIVE COMPARISON 13 DOLPHINS PLAYERS VS. -

Week 3 Training Camp Report

[Date] Volume 16, Issue 3 – 8/24/2021 Our goal at Footballguys is to help you win more at Follow our Footballguys Training Camp crew fantasy football. One way we do that is make sure on Twitter: you’re the most informed person in your league. @FBGNews, @theaudible, @football_guys, Our Staffers sort through the mountain of news and @sigmundbloom, @fbgwood, @bobhenry, deliver these weekly reports so you'll know @MattWaldman, @CecilLammey, everything about every team and every player that @JustinHoweFF, @Hindery, @a_rudnicki, matters. We want to help you crush your fantasy @draftdaddy, @AdamHarstad, draft. And this will do it. @JamesBrimacombe, @RyanHester13, @Andrew_Garda, @Bischoff_Scott, @PhilFBG, We’re your “Guide” in this journey. Buckle up and @xfantasyphoenix, @McNamaraDynasty let’s win this thing. Your Friends at Footballguys “What I saw from A.J. Green at Cardinals practice today looked like the 2015 version,” Riddick tweeted. “He was on fire. Arizona has the potential to have top-five wide receiver group with DHop, AJ, Rondale Moore, and Christian Kirk.” The Cardinals have lots of depth now at QB: Kyler Murray saw his first snaps this preseason, but the wide receiver position with the additions for Green it was evident Kliff Kingsbury sees little value in giving and Moore this offseason. his superstar quarterback an extended preseason look. He played nine snaps against the Chiefs before giving TE: The tight end position remains one of the big way to Colt McCoy and Chris Streveler. Those nine question marks. Maxx Williams sits at the top of the snaps were discouraging, as Murray took two sacks and depth chart, but it is muddied with Darrell Daniels, only completed one pass. -

Flowers Guard // 6-6 // 343 // Miami (Fl) ‘15 Acquired: Ufa, ‘20 (Was.) Hometown: Miami, Fla

ERECK 75 FLOWERS GUARD // 6-6 // 343 // MIAMI (FL) ‘15 ACQUIRED: UFA, ‘20 (WAS.) HOMETOWN: MIAMI, FLA. // BORN: 4/25/94 NFL: SIXTH SEASON // DOLPHINS: FIRST SEASON • Inactive for 1 game with Jacksonville. NFL CAREER • Dressed but did not play 2 games with TRANSACTIONS: Jacksonville. • Signed by Miami as an unrestricted free agent • Signed by Jacksonville on Oct. 12. from Washington on March 21, 2020. • Waived by the N.Y. Giants on Oct. 9. • Signed by Washington as an unrestricted free • Played in 5 games with 2 starts at right tackle for agent from Jacksonville on March 18, 2019. the N.Y. Giants. • Signed by Jacksonville on Oct. 12, 2018. VS. JACKSONVILLE (9/9): Started at right tackle. • Waived by the N.Y. Giants on Oct. 9, 2018. • Helped block for RB Saquon Barkley, who rushed • 1st-round pick (9th overall) by the N.Y. Giants in for a 68-yard TD in his NFL debut. the 2015 NFL draft. AT INDIANAPOLIS (11/11): Played but did not record any stats. CAREER HIGHLIGHTS: • Made his Jaguars debut. • Played in 89 career games with 85 starts. VS. PITTSBURGH (11/18): Started at left tackle. • Made his 1st Jaguars start. 2020 (MIAMI): • Started 14 games. 2017 (N.Y. GIANTS): • Inactive for 2 games. • Started 15 games at left tackle. AT JACKSONVILLE (9/24): Started at left guard. • Inactive for 1 game. • Protected QB Ryan Fitzpatrick who completed AT PHILADELPHIA (9/24): Started at left tackle. 18-of-20 passes (90.0 pct.) and set a new franchise • Helped protect QB Eli Manning, who passed for record for completion percentage in a single 366 yards and 3 TDs. -

Final Rosters

www.rtsports.com ALL JOHNSON FFL Week 17 07-Feb-2006 02:37 PM Eastern A. K. 47s - KALVIN BLAKE & ARRON BLAKE PRETENDERS - DAVE JOHNSON Brett Favre QB GNB vs SEA * 339 19.94 Kerry Collins QB OAK vs NYG * 319 18.76 Larry Johnson RB KAN vs CIN * 326 19.18 Warrick Dunn RB ATL vs CAR * 189 11.12 Tiki Barber RB NYG @ OAK * 314 18.47 Antowain Smith RB NOR @ TAM * 91 5.35 Derrick Mason WR BAL @ CLE * 131 7.71 Santana Moss WR WAS @ PHI * 221 13.00 Jimmy Smith WR JAC vs TEN * 135 7.94 Chad Johnson WR CIN @ KAN * 199 11.71 John Kasay K CAR @ ATL * 204 12.00 Ryan Longwell K GNB vs SEA * 166 9.76 Drew Brees QB SDG vs DEN 343 20.18 Josh McCown QB ARI @ IND 185 10.88 Rudi Johnson RB CIN @ KAN 229 13.47 Mike Alstott RB TAM vs NOR 65 3.82 Terry Glenn WR DAL vs STL 159 9.35 Ahman Green RB GNB vs SEA I/R 34 2.00 David Akers K PHI vs WAS 123 7.24 Joe Horn WR NOR @ TAM 69 4.06 Jeff Reed K PIT vs DET 199 11.71 BRUCE'S BAD BOY'S - BRUCE GALASSO Brad Johnson QB MIN vs CHI * 166 9.76 PRIME TIME - RICH CARREY Dominic Rhodes RB IND vs ARI * 43 2.53 Kyle Boller QB BAL @ CLE * 161 9.47 Jamal Lewis RB BAL @ CLE * 123 7.24 Steven Jackson RB STL @ DAL * 208 12.24 Rod Smith WR DEN @ SDG * 154 9.06 Shaun Alexander RB SEA @ GNB * 359 21.12 Joey Galloway WR TAM vs NOR * 195 11.47 Joe Jurevicius WR SEA @ GNB * 128 7.53 Mike Nugent K NYJ vs BUF * 158 9.29 Marvin Harrison WR IND vs ARI * 189 11.12 Jake Plummer QB DEN @ SDG 332 19.53 Lawrence Tynes K KAN vs CIN * 214 12.59 Chris Brown RB TEN @ JAC 162 9.53 Tom Brady QB NWE vs MIA 384 22.59 Curtis Martin RB NYJ vs BUF I/R 114 -

91 Holy Cross Postseason History

HHolyoly CCrossross PPostseasonostseason HHistoryistory 91 1946 Orange Bowl 1983 NCAA Division I-AA Quarterfi nals Miami (Fla.) 13, Holy Cross 6 Western Carolina 28, Holy Cross 21 January 1, 1946 • Orange Bowl • Miami, Fla. December 3, 1983 • Fitton Field • Worcester, Mass. Al Hudson of Miami is the only In a game which proved to be player ever to score a touchdown one of the most exciting ever at after time had offi cially expired in Fitton Field, a well-oiled Western an Orange Bowl. This climax, the Carolina passing attack dissected greatest in Orange Bowl history, the Holy Cross defense for a 28- gave the Hurricanes a 13-6 victory 21 win in the quarterfi nals of the over Holy Cross in the 1946 NCAA Division I-AA playoffs. contest, which appeared certain Holy Cross jumped on top 7-0, as to end in a 6-6 tie. The deadlock Gill Fenerty, coming off a shoulder appeared so certain that thousands separation, ran for a 33-yard of the spectators had headed for the touchdown early in the fi rst period. exits. They were stopped in their It was not long, however, before a tracks by the roar of the crowd, brilliant Western Carolina passing who saw Hudson leap high on game had its fi rst tally, a 30-yard the northeast side of the fi eld to pass from Jeff Gilbert to Eric intercept Gene DeFillipo’s long, Rasheed. A 7-7 halftime score had desperation pass on last play of the 10,814 on hand anxious for a the game — and turn it into an shootout in the second half. -

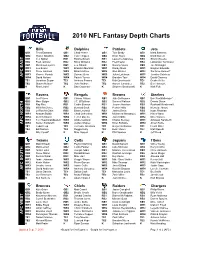

NFL Depth Chart Cheat Sheet

2010 NFL Fantasy Depth Charts Bills Dolphins Patriots Jets QB1 Trent Edwards QB1 Chad Henne QB1 Tom Brady QB1 Mark Sanchez QB2 Ryan Fitzpatrick QB2 Tyler Thigpen QB2 Brian Hoyer QB2 Mark Brunell RB1 C.J. Spiller RB1 Ronnie Brown RB1 Laurence Maroney RB1 Shonn Greene RB2 Fred Jackson RB2 Ricky Williams RB2 Fred Taylor RB2 LaDainian Tomlinson RB3 Marshawn Lynch RB3 Lex Hilliard RB3 Sammy Morris RB3 Joe McKnight WR1 Lee Evans WR1 Brandon Marshall WR1 Randy Moss WR1 Braylon Edwards WR2 Steve Johnson WR2 Brian Hartline WR2 Wes Welker WR2 Santonio Holmes* WR3 Roscoe Parrish WR3 Davone Bess WR3 Julian Edelman WR3 Jerricho Cotchery AFCEAST WR4 David Nelson WR4 Patrick Turner WR4 Brandon Tate WR4 David Clowney TE1 Jonathan Stupar TE1 Anthony Fasano TE1 Rob Gronkowski TE1 Dustin Keller TE2 Shawn Nelson* TE2 John Nalbone TE2 Aaron Hernandez TE2 Ben Hartsock K Rian Lindell K Dan Carpenter K Stephen Gostkowski K Nick Folk Ravens Bengals Browns Steelers QB1 Joe Flacco QB1 Carson Palmer QB1 Jake Delhomme QB1 Ben Roethlisberger* QB2 Marc Bulger QB2 J.T. O'Sullivan QB2 Seneca Wallace QB2 Dennis Dixon RB1 Ray Rice RB1 Cedric Benson RB1 Jerome Harrison RB1 Rashard Mendenhall RB2 Willis McGahee RB2 Bernard Scott RB2 Peyton Hillis RB2 Mewelde Moore RB3 Le'Ron McClain RB3 Brian Leonard RB3 James Davis RB3 Isaac Redman WR1 Anquan Boldin WR1 Chad Ochocinco WR1 Mohamed Massaquoi WR1 Hines Ward WR2 Derrick Mason WR2 Terrell Owens WR2 Josh Cribbs WR2 Mike Wallace WR3 T.J. Houshmandzadeh WR3 Andre Caldwell WR3 Chansi Stuckey WR3 Antwaan Randle El AFCNORTH WR4 Donte' -

New England Patriots Vs. Buffalo Bills Sunday, November 11, 2012 • 1:00 P.M

NEW ENGLAND PATRIOTS VS. BUFFALO BILLS Sunday, November 11, 2012 • 1:00 p.m. • Gillette Stadium # NAME ................... POS # NAME .................. POS 3 Stephen Gostkowski ..... K 3 John Potter ................ K 11 Julian Edelman ......... WR PATRIOTS OFFENSE PATRIOTS DEFENSE 4 Tyler Thigpen ...........QB WR: 83 Wes Welker 11 Julian Edelman LE: 50 Rob Ninkovich 96 Jermaine Cunningham 99 Trevor Scott 12 Tom Brady .................QB 92 Jake Bequette 6 Shawn Powell ............. P 14 Zoltan Mesko ............... P LT: 77 Nate Solder 61 Marcus Cannon DT: 75 Vince Wilfork 97 Ron Brace 7 Tarvaris Jackson .......QB 15 Ryan Mallett ..............QB LG: 70 Logan Mankins 64 Donald Thomas DT: 74 Kyle Love 94 Justin Francis 9 Rian Lindell ................ K 18 Matthew Slater ......... WR 11 T.J. Graham ............. WR C: 62 Ryan Wendell 65 Nick McDonald RE: 95 Chandler Jones 71 Brandon Deaderick 22 Stevan Ridley ............ RB 13 Stevie Johnson ........ WR 23 Marquice Cole ............ CB RG: 63 Dan Connolly 65 Nick McDonald LB: 51 Jerod Mayo 53 Jeff Tarpinian 59 Mike Rivera 14 Ryan Fitzpatrick ........QB 24 Kyle Arrington ............ CB RT: 76 Sebastian Vollmer 61 Marcus Cannon LB: 55 Brandon Spikes 90 Niko Koutouvides 16 Brad Smith .............. WR 25 Patrick Chung .............. S TE: 87 Rob Gronkowski 81 Aaron Hernandez 86 Daniel Fells LB: 54 Dont'a Hightower 58 Tracy White 19 Donald Jones........... WR 26 Derrick Martin .............. S 47 Michael Hoomanawanui 20 Tashard Choice ......... RB WR: 85 Brandon Lloyd 84 Deion Branch 18 Matthew Slater LCB: 32 Devin McCourty 37 Alfonzo Dennard 27 Tavon Wilson .............DB 21 Leodis McKelvin ........ CB 28 Steve Gregory ............. S QB: 12 Tom Brady 15 Ryan Mallett RCB: 24 Kyle Arrington 23 Marquice Cole 22 Fred Jackson ........... -

The Disparate Impact of the NFL's Use of the Wonderlic Intelligence Test and the Case for a Football- Specific Estt Note

University of Connecticut OpenCommons@UConn Connecticut Law Review School of Law 2009 Fourth and Short on Equality: The Disparate Impact of the NFL's Use of the Wonderlic Intelligence Test and the Case for a Football- Specific estT Note Christopher Hatch Follow this and additional works at: https://opencommons.uconn.edu/law_review Recommended Citation Hatch, Christopher, "Fourth and Short on Equality: The Disparate Impact of the NFL's Use of the Wonderlic Intelligence Test and the Case for a Football-Specific estT Note" (2009). Connecticut Law Review. 38. https://opencommons.uconn.edu/law_review/38 CONNECTICUT LAW REVIEW VOLUME 41 JULY 2009 NUMBER 5 Note FOURTH AND SHORT ON EQUALITY: THE DISPARATE IMPACT OF THE NFL’S USE OF THE WONDERLIC INTELLIGENCE TEST AND THE CASE FOR A FOOTBALL-SPECIFIC TEST CHRISTOPHER HATCH Prior to being selected in the NFL draft, a player must undergo a series of physical and mental evaluations, including the Wonderlic Intelligence Test. The twelve-minute test, which measures “cognitive ability,” has been shown to have a disparate impact on minorities in various employment situations. This Note contends that the NFL’s use of the Wonderlic also has a disparate impact because of its effect on a player’s draft status and ultimately his salary. The test cannot be justified by business necessity because there is no correlation between a player’s Wonderlic score and their on-field performance. As such, this Note calls for the creation of a football-specific intelligence test that would be less likely to have a disparate impact than the Wonderlic, while also being sufficiently job-related and more reliable in predicting a player’s success. -

Nfl Training Camp Quarterback Update

NATIONAL FOOTBALL LEAGUE 280 Park Avenue, New York, NY 10017 (212) 450-2000 * FAX (212) 681-7573 WWW.NFLMedia.com Joe Browne, Executive Vice President-Communications Greg Aiello, Vice President-Public Relations FOR USE AS DESIRED NFL-41 7/26/06 NFL TRAINING CAMP QUARTERBACK UPDATE With all 32 NFL teams in training camp by this Sunday, a major focus will be on the leader of each team’s offense – the starting quarterback. The starter is set at some clubs. It’s an open competition at others. Following is an alphabetical team-by-team list of NFL quarterbacks (* Expected starter; # Veteran new to team; ^ NFL Europe League veteran): AMERICAN FOOTBALL CONFERENCE TEAM NFL EXPERIENCE TEAM NFL EXPERIENCE BALTIMORE KANSAS CITY KYLE BOLLER 4 BRODIE CROYLE R STEVE MC NAIR * # 12 TRENT GREEN * 13 DREW OLSON R DAMON HUARD ^ 10 BRIAN ST. PIERRE 3 CASEY PRINTERS 1 BUFFALO MIAMI KELLY HOLCOMB ^ 10 BROCK BERLIN ^ 1 KLIFF KINGSBURY # ^ 2 DAUNTE CULPEPPER * # 8 J.P. LOSMAN 3 JOEY HARRINGTON # 5 CRAIG NALL # ^ 5 JUSTIN HOLLAND R CLEO LEMON ^ 3 CINCINNATI DOUG JOHNSON # 6 NEW ENGLAND ERIK MEYER R TOM BRADY * 7 CARSON PALMER * 4 COREY BRAMLET R ANTHONY WRIGHT # 8 MATT CASSEL 2 TODD MORTENSEN ^ 1 CLEVELAND DEREK ANDERSON 2 NEW YORK JETS LANG CAMPBELL ^ 1 BROOKS BOLLINGER 4 KEN DORSEY # 4 KELLEN CLEMENS R CHARLIE FRYE * 2 CHAD PENNINGTON 7 DARRELL HACKNEY R PATRICK RAMSEY # 5 DENVER OAKLAND JAY CUTLER R AARON BROOKS * # 8 PRESTON PARSONS # 3 REGGIE ROBERTSON ^ 1 JAKE PLUMMER * 10 KENT SMITH R BRADLEE VAN PELT 2 MARQUES TUIASOSOPO 6 ANDREW WALTER 2 HOUSTON MATT BAKER R PITTSBURGH DAVID CARR * 5 CHARLIE BATCH 9 QUINTON PORTER R SHAYNE BOYD ^ 1 SAGE ROSENFELS # 6 OMAR JACOBS R BEN ROETHLISBERGER * 3 INDIANAPOLIS ROD RUTHERFORD 1 JOSH BETTS R SHAUN KING # 7 SAN DIEGO DAVID KORAL R BRETT ELLIOTT R PEYTON MANNING * 9 A.J. -

NFL Fantasy Football Depth Chart. Updated

NFL Fantasy Football Depth Chart. Updated: August 27th Key: [R]-Rookie, AFC East Division Buffalo Bills Miami Dolphins New England Patriots New York Jets Pos Name Pos Name Pos Name Pos Name QB Ryan Fitzpatrick QB Chad Henne QB Tom Brady QB Mark Sanchez Brian Brohm Pat White Brian Hoyer Kellen Clemens RB Fred Jackson RB Ronnie Brown RB Laurence Maroney RB Shonn Greene C.J. Spiller [R] Ricky Williams Sammy Morris Ladainian Tomlinson WR1 Lee Evans WR1 Brandon Marshall WR1 Randy Moss WR1 Braylon Edwards WR2 Steve Johnson WR2 Davone Bess WR2 Wes Welker WR2 Santonio Holmes James Hardy Brian Hartline Julian Edelman Jerricho Cotchery Chad Jackson Patrick Turner Brandon Tate David Clowney TE Shawn Nelson TE Anthony Fasano TE Rob Gronkowski [R] TE Dustin Keller Jonathan Stupar Joey Haynos Aaron Hernandez [R] Ben Hartsock K Rian Lindell K Dan Carpenter K Stephen Gostkowski K Nick Folk AFC North Division Cincinnati Bengals Baltimore Ravens Pittsburgh Steelers Cleveland Browns Pos Name Pos Name Pos Name Pos Name QB Carson Palmer QB Joe Flacco QB Ben Roethlisberger QB Jake Delhomme J.T. O'Sullivan Marc Bulger D. Dixon/B. Leftwich Seneca Wallace RB Cedric Benson RB Ray Rice RB Rashard Mendenhall RB Jerome Harrison Bernard Scott Willis McGahee Mewelde Moore Montario Hardesty WR1 Chad Ochocinco WR1 Anquan Boldin WR1 Hines Ward WR1 Mohamed Massaquoi WR2 Terrel Owens WR2 Derrick Mason WR2 Mike Wallace WR2 Brian Robiskie Antonio Bryant Mark Clayton Arnaz Battle Chansi Stuckey Jerome Simpson Donte' Stallworth Antwaan Randle El Joshua Cribbs TE Jermaine Gresham -

Week 10 – Thursday, November 12, 2015 Buffalo Bills (4-4) at New York Jets (5-3) Series Bills Jets Thurs. Record 6-8-1 6-10 Se

WEEK 10 – THURSDAY, NOVEMBER 12, 2015 BUFFALO BILLS (4-4) AT NEW YORK JETS (5-3) SERIES BILLS JETS THURS. RECORD 6-8-1 6-10 SERIES LEADER 57-51 STREAKS 4 of past 5 COACHES VS. OPP. Ryan: 0-0 Bowles: 1-0 LAST WEEK W 33-17 vs. Dolphins W 28-23 vs. Jaguars LAST GAME 11/24/14: Jets 3 at Bills 38 (in Detroit). WR Robert Woods has 9 catches for 119 yards & 1 TD. Bills RBs Boobie Dixon & Fred Jackson each have rushing TD. LAST GAME AT SITE 10/26/14: Bills 43, Jets 23. Buffalo QB Kyle Orton throws for 238 yards & 4 TDs (142.8 passer rating). Bills WR Sammy Watkins has 3 catches for 157 yards & TD. REFEREE Brad Allen BROADCAST NFLN (8:25PM ET): Jim Nantz, Phil Simms, Tracy Wolfson (field reporter). Westwood One: Ian Eagle, Boomer Esiason. SIRIUS: 88 (WW1), 83 (Buf.), 93 (NYJ). XM: 88 (WW1), 225 (Buf.), 226 (NYJ). STATS PASSING Taylor: 107-149-1278-10-4-108.9 (3C) Fitzpatrick: 154-249-1790-13-7-89.3 RUSHING McCoy: 94-416-4.4-2 Ivory: 138-544-3.9-6 (1C) RECEIVING Clay (TE): 35-356-10.2-2 Marshall: 54-730-13.5-5 OFFENSE 350.5 365.9 TAKE/GIVE +1 +7 (T1C) DEFENSE 353.5 323.3 (2C) SACKS Hughes, Williams: 3 Wilkerson: 5 INTs Three tied: 2 Williams: 4 (T3L) PUNTING Schmidt: 48.7 (3L) Quigley: 41.9 KICKING Carpenter: 49 (22/23 PAT; 9/11 FG) Bullock (w. Hou): 18 (3/5 PAT; 5/6 FG) NOTES BILLS: QB TYROD TAYLOR (108.9) is on pace to surpass HOFer JIM KELLY (101.2 in 1990) for highest single-season passer rating in team history. -

New York Jets at Miami Dolphins Hard Rock Stadium Oct

NEW YORK JETS AT MIAMI DOLPHINS HARD ROCK STADIUM OCT. 18, 2020 | 4:05 PM POSTGAME NOTES DOLPHINS SHUT OUT JETS, 24- 0 • Miami’s 24-0 win is the team’s first shutout since Nov. 2, 2014 when they beat San Diego, 37-0. • This is the first time the Dolphins won back-to-back games in a season by 20 or more points since 1990 when the Dolphins posted a 27-7 win at Indianapolis followed by a 23-3 win vs. Phoenix. o However, the Dolphins have won by 20-plus points in the 2001 season finale (34-7 vs. Buffalo) followed by a 20-plus point win in 2002 season opener (49-21 vs. Detroit) in that span. • The Dolphins have now won three of their past four games and eight of their past 15 dating back to 2019. • This is Miami’s fifth consecutive victory over the Jets at Hard Rock Stadium. • Miami has now won five of its past six meetings against the Jets and seven of the past nine. • This is the Dolphins’ fourth win in their past seven home games. • Miami is now 65-40-3 all-time in home games in October. • The Dolphins have won 21 of their past 35 games at Hard Rock Stadium dating back to 2016. DOLPHINS DEFENSE PITCHES SHUT OUT • Miami’s 24-0 win is the team’s first shutout since Nov. 2, 2014 when they beat San Diego, 37-0. • It’s the 27th regular-season shutout in Dolphins history and third of the Jets.