Re-Architecting Network Telemetry with Resource Efficiency and Full Accuracy

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Bridging the Data Charging Gap in the Cellular Edge

Bridging the Data Charging Gap in the Cellular Edge Yuanjie Li, Kyu-Han Kim, Christina Vlachou, Junqing Xie Hewlett Packard Labs {yuanjiel,kyu-han.kim,christina.vlachou,jun-qing.xie}@hpe.com

ABSTRACT The 4G/5G cellular edge promises low-latency experiences anywhere, anytime. However, data charging gaps can arise between the cellular operators and edge application vendors, and cause over-/under-billing. We find that such gap can come from data loss, selfish charging, or both. It can be amplified in the edge, due to its low-latency requirements. We devise TLC, a Trusted, Loss-tolerant Charging scheme for the cellular edge. In its core, TLC enables loss-selfishness cancellation to bridge the gap, and constructs publicly verifiable, cryptographic proof-of-charging for mutual trust. We implement TLC with commodity edge nodes, OpenEPC and small cells. Our experiments in various edge scenarios validate TLC’s viability

licensed spectrum and support carrier-grade QoS for low latency. We have seen some early operational edge services via 4G/5G (§2.2). The cellular network data is not free. To enjoy the low-latency services, the edge app vendors should pay the cellular operators by traffic usage (similar to our mobile data plans). Even for the “unlimited” data plans, the edge app’s network speed will be throttled (e.g., 128Kbps) if its usage exceeds pre-defined quota. This model inherits from 4G/5G [6, 7], and is standardized in the edge [8]. However, the cellular operator and edge app vendor may not always agree on how much data should be paid. A data charging gap can arise, i.e., the data volume charged by the cellular operator differs from what the edge sends/receives.


[Front Matter]

Future Technologies Conference (FTC) 2017 29-30 November 2017 | Vancouver, Canada

About the Conference

The IEEE Technically Sponsored Future Technologies Conference (FTC) 2017 is the second research conference in the series. This conference is part of the SAI conferences, which have been held since 2013. The conference series has featured keynote talks, special sessions, poster presentations, tutorials, workshops, and contributed papers each year. The goal of the conference is to be the world's pre-eminent forum for reporting technological breakthroughs in the areas of Computing, Electronics, AI, Robotics, Security and Communications. FTC 2017 is held at Pan Pacific Hotel Vancouver. The Pan Pacific luxury Vancouver hotel in British Columbia, Canada is situated on the downtown waterfront of this vibrant metropolis, with some of the city’s top business venues and tourist attractions, including Flyover Canada and Gastown, the Vancouver Convention Centre, and the Cruise Ship Terminal, as well as popular shopping and entertainment districts just minutes away. Pan Pacific Hotel Vancouver has great meeting rooms with new designs. We chose it for the conference because it has plenty of space, serves great food and is 100% accessible.

Venue Name: Pan Pacific Hotel Vancouver
Address: Suite 300-999 Canada Place, Vancouver, British Columbia V6C 3B5, Canada
Tel: +1 604-662-8111

Preface

FTC 2017 is a recognized event and provides a valuable platform for individuals to present their research findings, display their work in progress and discuss conceptual advances in areas of computer science and engineering, Electrical Engineering and IT related disciplines.

SIGOPS Annual Report 2012

SIGOPS Annual Report 2012 Fiscal Year July 2012-June 2013 Submitted by Jeanna Matthews, SIGOPS Chair

Overview

SIGOPS is a vibrant community of people with interests in “operating systems” in the broadest sense, including topics such as distributed computing, storage systems, security, concurrency, middleware, mobility, virtualization, networking, cloud computing, datacenter software, and Internet services. We sponsor a number of top conferences, provide travel grants to students, present yearly awards, disseminate information to members electronically, and collaborate with other SIGs on important programs for computing professionals.

Officers

It was the second year for officers: Jeanna Matthews (Clarkson University) as Chair, George Candea (EPFL) as Vice Chair, Dilma da Silva (Qualcomm) as Treasurer and Muli Ben-Yehuda (Technion) as Information Director. As has been typical, elected officers agreed to continue for a second and final two-year term beginning July 2013. Shan Lu (University of Wisconsin) will replace Muli Ben-Yehuda as Information Director as of August 2013.

Awards

We have an exciting new award to announce – the SIGOPS Dennis M. Ritchie Doctoral Dissertation Award. SIGOPS has long been lacking a doctoral dissertation award, such as those offered by SIGCOMM, Eurosys, SIGPLAN, and SIGMOD. This new award fills this gap and also honors the contributions to computer science that Dennis Ritchie made during his life. With this award, ACM SIGOPS will encourage the creativity that Ritchie embodied and provide a reminder of Ritchie's legacy and what a difference a person can make in the field of software systems research. The award is funded by AT&T Research and Alcatel-Lucent Bell Labs, companies that both have a strong connection to AT&T Bell Laboratories where Dennis Ritchie did his seminal work.

List of Notable Conferences (Llista Congressos Notables)

List of notable conferences for the UPC (sorted by conference name). RDI Information Service (for queries: [email protected]). 5 February 2018

| Conference name | Acronym |
| --- | --- |
| AAAI Conference on Artificial Intelligence | AAAI |
| ACM Conference on Computer and Communications Security | CCS |
| ACM Conference on Human Factors in Computing Systems | CHI |
| ACM European Conference on Computer Systems | EuroSys |
| ACM Great Lakes Symposium on VLSI | GLSVLSI |
| ACM International Conference on Information and Knowledge Management | CIKM |
| ACM International Conference on Measurement and Modelling of Computer Systems | SIGMETRICS |
| ACM International Conference on Modeling, Analysis and Simulation of Wireless and Mobile Systems | MSWiN |
| ACM International Conference on Multimedia | ACMMM |
| ACM International Symposium on Mobile AdHoc Networking and Computing | ACM MOBIHOC |
| ACM International Symposium on Modeling, Analysis and Simulation of Wireless and Mobile Systems | MSWiM |
| ACM International Symposium on Physical Design | ISPD |
| ACM Object-Oriented Programming, Systems, Languages & Applications | OOPSLA |
| ACM Principles of Programming Languages | POPL |
| ACM SIGCOMM Special Interest Group on Data Communications | SIGCOMM |
| ACM SIGMOD Conference | ACM SIGMOD |
| ACM SIGPLAN 2005 Conference on Programming Language Design and Implementation | PLDI |
| ACM Symposium on Applied Computing | SAC |
| ACM Symposium on Operating Systems Principles | SOSP |
| ACM Symposium on Parallel Algorithms and Architectures | SPAA |
| ACM Symposium on Principles of Database Systems | PODS |
| ACM Symposium on Principles of Distributed Computing | PODC |
| ACM Symposium on | |

Annual Report

ANNUAL REPORT, FISCAL YEAR 2019

ACM, the Association for Computing Machinery, is an international scientific and educational organization dedicated to advancing the arts, sciences, and applications of information technology.

Letter from the President

It’s been quite an eventful year for ACM. While this annual exercise allows us a moment to celebrate some of the many successes and achievements the Association has realized over the past year, it is also an opportunity to focus on new and innovative ways to ensure ACM remains a vibrant global resource for the computing community. ACM’s mission hinges on creating a community that encompasses all who work in the computing and technology arena. This year, ACM established a new Diversity and Inclusion Council to identify ways to create environments that are welcoming to new perspectives and will attract an even broader membership from around the world.

and challenges posed by evolving technology. Education has always been at the foundation of ACM, as reflected in two recent curriculum efforts. First, the ACM Task Force on Data Science issued “Computing Competencies for Undergraduate Data Science Curricula.” The guidelines lay out the computing-specific competencies that should be included when other academic departments offer programs in data science at the undergraduate level. Second, building on the success of our recent guidelines for 4-year cybersecurity curricula, the ACM Committee for Computing Education in Community Colleges created a related curriculum targeted at two-year programs, “Cybersecurity Curricular Guidance for Associate-Degree Programs.” The following pages offer a sampling of the many ACM events and accomplishments that occurred over the past fiscal year, none of which would have been

Joel Sommers

JOEL SOMMERS
Department of Computer Science, Colgate University, 306 McGregory Hall, 13 Oak Drive, Hamilton, NY 13346
315-228-7587, [email protected], http://cs.colgate.edu/~jsommers/

EDUCATION

University of Wisconsin-Madison, Madison, WI, 2001-2007. Ph.D. in Computer Science, August 2007. Dissertation title: Calibrated network measurement. Adviser: Paul Barford. Ph.D. minor in science and technology studies (distributed option).

Worcester Polytechnic Institute, Worcester, MA, 1995-1997. M.S. in Computer Science, May 1997. Thesis title: Merging client and server profiles on the world-wide web. Adviser: Craig Wills.

Atlantic Union College, South Lancaster, MA, 1990-1995. B.S. in Computer Science, May 1995. B.S. in Mathematics, May 1995.

ACADEMIC EXPERIENCE

Associate Professor, Department of Computer Science, Colgate University, Hamilton, NY, 2013-present.

Visiting Associate Professor, School of Engineering and Computer Science, Victoria University of Wellington, Wellington, New Zealand, October 2016-March 2017.

Assistant Professor, Department of Computer Science, Colgate University, Hamilton, NY, 2007-2012.

Research Assistant, Computer Sciences Department, University of Wisconsin, Madison, WI, 2001-2007. Projects included the Harpoon network traffic generator, the BADABING loss measurement methodology, and compliance measurement of service level agreements (SLAs).

Teaching Assistant, Computer networking (CS640), University of Wisconsin, 2001. Prepared and graded programming projects, held office hours, graded papers and exams.

Research Assistant, Computer Science Department, Worcester Polytechnic Institute, 1996-1997. Studied performance and security aspects of electronic commerce systems.

Laboratory Instructor, Stanborough Secondary School, Watford, England, 1992-1993. Prepared and administered biology, chemistry, and physics labs for secondary school students.

PROFESSIONAL EXPERIENCE

Lead Developer, First Options of Chicago, Subsidiary of Goldman Sachs, Chicago, IL, 2000-2001.

Central Library: IIT GUWAHATI

Central Library, IIT GUWAHATI BACK VOLUME LIST DEPARTMENTWISE (as on 20/04/2012) COMPUTER SCIENCE & ENGINEERING

| S.N. | Jl. Title | Vol.(Year) |
| --- | --- | --- |
| 1 | ACM Jl.: Computer Documentation | 20(1996)-26(2002) |
| 2 | ACM Jl.: Computing Surveys | 30(1998)-35(2003) |
| 3 | ACM Jl.: Jl. of ACM | 8(1961)-34(1987); 43(1996); 45(1998)-50(2003) |
| 4 | ACM Magazine: Communications of ACM | 39(1996)-46#3-12(2003) |
| 5 | ACM Magazine: Intelligence | 10(1999)-11(2000) |
| 6 | ACM Magazine: netWorker | 2(1998)-6(2002) |
| 7 | ACM Magazine: Standard View | 6(1998) |
| 8 | ACM Newsletters: SIGACT News | 27(1996); 29(1998)-31(2000) |
| 9 | ACM Newsletters: SIGAda Ada Letters | 16(1996); 18(1998)-21(2001) |
| 10 | ACM Newsletters: SIGAPL APL Quote Quad | 28(1998)-31(2000) |
| 11 | ACM Newsletters: SIGAPP Applied Computing Review | 4(1996); 6(1998)-8(2000) |
| 12 | ACM Newsletters: SIGARCH Computer Architecture News | 24(1996); 26(1998)-28(2000) |
| 13 | ACM Newsletters: SIGART Bulletin | 7(1996); 9(1998) |
| 14 | ACM Newsletters: SIGBIO Newsletters | 18(1998)-20(2000) |
| 15 | ACM Newsletters: SIGCAS Computers & Society | 26(1996); 28(1998)-30(2000) |
| 16 | ACM Newsletters: SIGCHI Bulletin | 28(1996); 30(1998)-32(2000) |
| 17 | ACM Newsletters: SIGCOMM Computer Communication Review | 26(1996); 28(1998)-30(2000) |
| 18 | ACM Newsletters: SIGCPR Computer Personnel | 17(1996); 19(1998)-20(1999) |
| 19 | ACM Newsletters: SIGCSE Bulletin | 28(1996); 30(1998)-32(2000) |
| 20 | ACM Newsletters: SIGCUE Outlook | 26(1998)-27(2001) |
| 21 | | |

List of Related Organizations and Conferences

List of Related Organizations and Conferences

| Organization/Event Name | Sponsor | Description | # of days |
| --- | --- | --- | --- |
| ACM | | With approx. 80,000 members, ACM delivers resources that advance computing as a science and a profession. ACM provides the computing field's premier Digital Library and serves its members and the computing profession with leading-edge publications, conferences, and career resources. | |
| IEEE Computer Society | | With nearly 85,000 members, the IEEE Computer Society is the world’s leading organization of computing professionals. Founded in 1946, and the largest of the 39 societies of the Institute of Electrical and Electronics Engineers (IEEE), the CS is dedicated to advancing the theory and application of computer and information-processing technology. | |
| ASPLOS XIII | ACM SIGARCH, SIGPLAN, SIGOPS | ASPLOS is a multi-disciplinary conference for research that spans the boundaries of hardware, computer architecture, compilers, languages, operating systems, networking, and applications. ASPLOS provides a high quality forum for scientists and engineers to present their latest research findings in these rapidly changing fields. It has captured some of the major computer systems innovations of the past two decades (e.g., RISC and VLIW processors, small and large-scale multiprocessors, clusters and networks-of-workstations, optimizing compilers, RAID, and network-storage system designs). | 3 days |
| BSDCanada | | BSDCan, a BSD conference held in Ottawa, Canada, has quickly established itself as the technical conference for people working on and with 4.4BSD based operating systems and related projects. The organizers have found a fantastic formula that appeals to a wide range of people from extreme novices to advanced | 4 days |
| | | The Federated Computer Research Conference (FCRC) assembles a spectrum of affiliated research conferences and workshops into a week long coordinated meeting held at a common time in a common place. | 7 day mix of |

Appendix C

Appendix C

| SIG | Starts | Ends | Conference | Attendance | Income | Expense | Surplus/Loss | SIGs and their % |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SIGACCESS | 21-Oct-13 | 23-Oct-13 | ASSETS '13: The 15th International ACM SIGACCESS Conference on Computers and Accessibility | 155 | $74,697.30 | $64,981.11 | $9,716.19 | SIGACCESS 100.00 |
| SIGACT | 12-Jan-14 | 14-Jan-14 | ITCS'14: Innovations in Theoretical Computer Science | 76 | $19,210.00 | $21,744.07 | ($2,534.07) | SIGACT 100.00 |
| SIGACT | 22-Jul-13 | 24-Jul-13 | PODC '13: ACM Symposium on Principles of Distributed Computing | 98 | $62,310.50 | $56,139.24 | $6,171.26 | SIGOPS 50.00, SIGACT 50.00 |
| SIGACT | 23-Jul-13 | 25-Jul-13 | SPAA '13: 25th ACM Symposium on Parallelism in Algorithms and Architectures | 45 | $45,665.50 | $39,586.18 | $6,079.32 | SIGACT 50.00, SIGARCH 50.00 |
| SIGACT | 23-Jun-14 | 25-Jun-14 | SPAA '14: 26th ACM Symposium on Parallelism in Algorithms and Architectures | 73 | $36,107.35 | $22,536.04 | $13,571.31 | SIGARCH 50.00, SIGACT 50.00 |
| SIGACT | 31-May-14 | 3-Jun-14 | STOC '14: Symposium on Theory of Computing | | $0.00 | $0.00 | $0.00 | SIGACT 100.00 |
| SIGAda | 10-Nov-13 | 14-Nov-13 | HILT 2013: High Integrity Language Technology ACM SIGAda Annual | 60 | $32,696.00 | $32,404.66 | $291.34 | SIGBED 0.00, SIGCAS 0.00, SIGSOFT 0.00, SIGCSE 0.00, SIGAda 100.00, SIGAPP 0.00, SIGPLAN 0.00 |
| SIGAI | 11-Nov-13 | 15-Nov-13 | ASE '13: ACM/IEEE International Conference on Automated Software Engineering | 195 | | | | SIGAI 25.00 |

Truly Perfect Samplers for Data Streams and Sliding Windows

Truly Perfect Samplers for Data Streams and Sliding Windows Rajesh Jayaram∗ David P. Woodruff† Samson Zhou‡ August 30, 2021

Abstract In the G-sampling problem, the goal is to output an index $i$ of a vector $f \in \mathbb{R}^n$, such that for all coordinates $j \in [n]$,

$$\Pr[i = j] = (1 \pm \epsilon)\,\frac{G(f_j)}{\sum_{k \in [n]} G(f_k)} + \gamma,$$

where $G : \mathbb{R} \to \mathbb{R}_{\ge 0}$ is some non-negative function. If $\epsilon = 0$ and $\gamma = 1/\mathrm{poly}(n)$, the sampler is called perfect. In the data stream model, $f$ is defined implicitly by a sequence of updates to its coordinates, and the goal is to design such a sampler in small space. Jayaram and Woodruff (FOCS 2018) gave the first perfect $L_p$ samplers in turnstile streams, where $G(x) = |x|^p$, using $\mathrm{polylog}(n)$ space for $p \in (0, 2]$. However, to date all known sampling algorithms are not truly perfect, since their output distribution is only point-wise $\gamma = 1/\mathrm{poly}(n)$ close to the true distribution. This small error can be significant when samplers are run many times on successive portions of a stream, and leak potentially sensitive information about the data stream. In this work, we initiate the study of truly perfect samplers, with $\epsilon = \gamma = 0$, and comprehensively investigate their complexity in the data stream and sliding window models. We begin by showing that sublinear space truly perfect sampling is impossible in the turnstile model, by proving a lower bound of $\Omega\left(\min\left\{n, \log \frac{1}{\gamma}\right\}\right)$ for any G-sampler with point-wise error $\gamma$ from the true distribution.
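
To make the target distribution in the abstract above concrete, here is a minimal offline sketch in Python. It is not the paper's streaming algorithm and uses linear space: it stores the whole vector `f`, computes the exact probabilities G(f_j) / Σ_k G(f_k), and draws an index with ε = γ = 0. The function names `g_distribution` and `g_sample` are hypothetical, and the sketch assumes `G` returns non-negative integers so that the draw can be done with exact integer arithmetic.

```python
import random
from fractions import Fraction


def g_distribution(f, G):
    """Exact target distribution: Pr[i = j] = G(f_j) / sum_k G(f_k)."""
    weights = [Fraction(G(x)) for x in f]
    total = sum(weights)
    if total == 0:
        raise ValueError("G(f_k) is zero for every coordinate; nothing to sample")
    return [w / total for w in weights]


def g_sample(f, G, rng=random):
    """Draw one index from the exact distribution (epsilon = gamma = 0).

    Assumption for this sketch: G returns non-negative integers, so a
    uniform integer in [0, total) selects index j with probability
    exactly G(f_j) / total.
    """
    weights = [G(x) for x in f]
    total = sum(weights)
    if total <= 0:
        raise ValueError("G(f_k) is zero for every coordinate; nothing to sample")
    r = rng.randrange(total)
    for j, w in enumerate(weights):
        if r < w:
            return j
        r -= w
    raise AssertionError("unreachable when all weights are non-negative")
```

For example, with f = (1, −2, 0, 1) and G(x) = |x|², the weights are (1, 4, 0, 1), so the target distribution is (1/6, 2/3, 0, 1/6) and index 2 is never returned. A perfect (but not truly perfect) sampler would be allowed to deviate from these values by an additive 1/poly(n) per coordinate.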

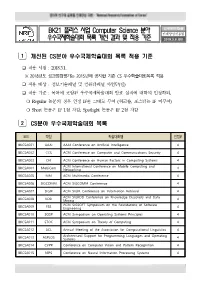

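BK21 Plus Program: Revision of the List of Outstanding International Conferences in Computer Science

BK21 Plus Program, Computer Science field: results of the revision of the list of outstanding international conferences, and application criteria. Talent Development Support Office, Talent Development Promotion Team. 2018.3.6 (Tue)

1. Application criteria for the revised list of outstanding international conferences in CS

❑ Effective date: 2018.3.1.
※ The 2018 performance review applies the existing list of outstanding CS conferences announced in 2015.
❑ Applies to: project groups (teams) in the Information Technology panel and the Computer panel
❑ Criteria: papers presented at the outstanding international conferences on the list are recognized, with the following conditions:
❍ Regular papers receive the recognized IF as listed (workshops and posters receive no IF)
❍ Short papers have 1 point deducted from the IF; Spotlight papers have 2 points deducted

2. List of outstanding international conferences in CS

| Code | Abbreviation | Conference name | Recognized IF |
| --- | --- | --- | --- |
| BKCSA001 | AAAI | AAAI Conference on Artificial Intelligence | 4 |
| BKCSA002 | CCS | ACM Conference on Computer and Communications Security | 4 |
| BKCSA003 | CHI | ACM Conference on Human Factors in Computing Systems | 4 |
| BKCSA004 | MobiCom | ACM International Conference on Mobile Computing and Networking | 4 |
| BKCSA005 | MM | ACM Multimedia Conference | 4 |
| BKCSA006 | SIGCOMM | ACM SIGCOMM Conference | 4 |
| BKCSA007 | SIGIR | ACM SIGIR Conference on Information Retrieval | 4 |
| BKCSA008 | KDD | ACM SIGKDD Conference on Knowledge Discovery and Data Mining | 4 |
| BKCSA009 | FSE | ACM SIGSOFT Symposium on the Foundations of Software Engineering | 4 |
| BKCSA010 | SOSP | ACM Symposium on Operating Systems Principles | 4 |
| BKCSA011 | STOC | ACM Symposium on Theory of Computing | 4 |
| BKCSA012 | ACL | Annual Meeting of the Association for Computational Linguistics | 4 |
| BKCSA013 | ASPLOS | Architectural Support for Programming Languages and Operating Systems | 4 |
| BKCSA014 | CVPR | Conference on Computer Vision and Pattern Recognition | 4 |
| BKCSA015 | NIPS | Conference on Neural Information Processing Systems | 4 |
| BKCSA016 | OOPSLA | Conference on Object-Oriented Programming, System, Languages, and Applications | 4 |
| BKCSA017 | INFOCOM | IEEE Conference on Computer Communications | |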

Scouts: Improving the Diagnosis Process Through Domain-Customized Incident Routing

Scouts: Improving the Diagnosis Process Through Domain-customized Incident Routing

Jiaqi Gao (Harvard University), Nofel Yaseen (University of Pennsylvania), Robert MacDavid (Princeton University), Felipe Vieira Frujeri (Microsoft Research), Vincent Liu (University of Pennsylvania), Ricardo Bianchini (Microsoft Research), Ramaswamy Aditya (Microsoft), Xiaohang Wang (Microsoft), Henry Lee (Microsoft), David Maltz (Microsoft), Minlan Yu (Harvard University), Behnaz Arzani (Microsoft Research)

ABSTRACT Incident routing is critical for maintaining service level objectives in the cloud: the time-to-diagnosis can increase by 10× due to mis-routings. Properly routing incidents is challenging because of the complexity of today’s data center (DC) applications and their dependencies. For instance, an application running on a VM might rely on a functioning host-server, remote-storage service, and virtual and physical network components. It is hard for any one team, rule-based system, or even machine learning solution to fully learn the complexity and solve the incident routing problem. We propose a different approach using per-team Scouts.

service-level objectives. When incidents are mis-routed (sent to the wrong team), their time-to-diagnosis can increase by 10× [21]. A handful of well-known teams that underpin other services tend to bear the brunt of this effect. The physical networking team in our large cloud, for instance, is a recipient in 1 in every 10 mis-routed incidents (see §3). In comparison, the hundreds of other possible teams typically receive 1 in 100 to 1 in 1000. These findings are common across the industry (see Appendix A). Incident routing remains challenging because modern DC applications are large, complex, and distributed systems that rely on many sub-systems and components. Applications’ connections