CSC535: Probabilistic Graphical Models

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Approximated Bayes and Empirical Bayes Confidence Intervals: The Known Variance Case

Ann. Inst. Statist. Math. Vol. 40, No. 4, 747-767 (1988). APPROXIMATED BAYES AND EMPIRICAL BAYES CONFIDENCE INTERVALS: THE KNOWN VARIANCE CASE. A. J. VAN DER MERWE, P. C. N. GROENEWALD AND C. A. VAN DER MERWE, Department of Mathematical Statistics, University of the Orange Free State, PO Box 339, Bloemfontein, Republic of South Africa (Received June 11, 1986; revised September 29, 1987). Abstract. In this paper hierarchical Bayes and empirical Bayes results are used to obtain confidence intervals of the population means in the case of real problems. This is achieved by approximating the posterior distribution with a Pearson distribution. In the first example hierarchical Bayes confidence intervals for the Efron and Morris (1975, J. Amer. Statist. Assoc., 70, 311-319) baseball data are obtained. The same methods are used in the second example to obtain confidence intervals of treatment effects as well as the difference between treatment effects in an analysis of variance experiment. In the third example hierarchical Bayes intervals of treatment effects are obtained and compared with normal approximations in the unequal variance case. Key words and phrases: Hierarchical Bayes, empirical Bayes estimation, Stein estimator, multivariate normal mean, Pearson curves, confidence intervals, posterior distribution, unequal variance case, normal approximations. 1. Introduction. In the Bayesian approach to inference, a posterior distribution of unknown parameters is produced as the normalized product of the likelihood and a prior distribution. Inferences about the unknown parameters are then based on the entire posterior distribution resulting from the one specific data set which has actually occurred. In most hierarchical and empirical Bayes cases these posterior distributions are difficult to derive and cannot be obtained in closed form.

1 Estimation and Beyond in the Bayes Universe

ISyE8843A, Brani Vidakovic, Handout 7. 1 Estimation and Beyond in the Bayes Universe. 1.1 Estimation. No Bayes estimate can be unbiased but Bayesians are not upset! No Bayes estimate with respect to the squared error loss can be unbiased, except in a trivial case when its Bayes risk is 0. Suppose that for a proper prior $\pi$ the Bayes estimator $\delta_\pi(X)$ is unbiased, $(\forall \theta)\ E^{X|\theta}\, \delta_\pi(X) = \theta$. This implies that the Bayes risk is 0. The Bayes risk of $\delta_\pi(X)$ can be calculated as repeated expectation in two ways,

$$r(\pi, \delta_\pi) = E^{\theta} E^{X|\theta} (\theta - \delta_\pi(X))^2 = E^{X} E^{\theta|X} (\theta - \delta_\pi(X))^2.$$

Thus, conveniently choosing either $E^{\theta} E^{X|\theta}$ or $E^{X} E^{\theta|X}$ and using the properties of conditional expectation we have,

$$\begin{aligned}
r(\pi, \delta_\pi) &= E^{\theta} E^{X|\theta}\, \theta^2 - E^{\theta} E^{X|\theta}\, \theta\, \delta_\pi(X) - E^{X} E^{\theta|X}\, \theta\, \delta_\pi(X) + E^{X} E^{\theta|X}\, \delta_\pi(X)^2 \\
&= E^{\theta} E^{X|\theta}\, \theta^2 - E^{\theta}\, \theta\, [E^{X|\theta}\, \delta_\pi(X)] - E^{X}\, \delta_\pi(X)\, E^{\theta|X}\, \theta + E^{X} E^{\theta|X}\, \delta_\pi(X)^2 \\
&= E^{\theta} E^{X|\theta}\, \theta^2 - E^{\theta}\, \theta \cdot \theta - E^{X}\, \delta_\pi(X)\, \delta_\pi(X) + E^{X} E^{\theta|X}\, \delta_\pi(X)^2 = 0.
\end{aligned}$$

Bayesians are not upset. To check for its unbiasedness, the Bayes estimator is averaged with respect to the model measure $(X|\theta)$, and one of the Bayesian commandments is: Thou shall not average with respect to sample space, unless you have Bayesian design in mind. Even frequentists agree that insisting on unbiasedness can lead to bad estimators, and that in their quest to minimize the risk by trading off between variance and bias-squared a small dosage of bias can help.
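The claim that a Bayes estimator under squared error loss is generally biased can be checked on a small example. The sketch below assumes a Beta(alpha, beta) prior on a Bernoulli success probability p, a model the handout excerpt does not itself specify; the function name and the parameter values are illustrative only. It computes the exact frequentist bias of the posterior mean as a function of the true p.

```python
from fractions import Fraction


def bayes_estimator_bias(p, n, alpha, beta):
    """Exact bias E[delta(X) | p] - p of the posterior mean
    delta(X) = (alpha + X) / (alpha + beta + n), where X ~ Binomial(n, p).

    Assumption (not from the handout): Beta(alpha, beta) prior on p.
    Since E[X | p] = n * p, the expectation of delta(X) is available in closed form.
    """
    expected_estimate = (alpha + n * p) / (alpha + beta + n)
    return expected_estimate - p


# Hypothetical sample size and prior parameters, chosen for illustration.
n, alpha, beta = 10, 2, 2
for p in (Fraction(1, 10), Fraction(1, 2), Fraction(9, 10)):
    print(f"p = {p}: bias = {bayes_estimator_bias(p, n, alpha, beta)}")
```

In this model the bias vanishes only at the prior mean alpha / (alpha + beta); away from it the estimator is pulled toward the prior guess, which is the small dose of bias the passage refers to.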

Bayesian Approach in Estimation of Shape Parameter of the Exponentiated Moment Exponential Distribution

Journal of Statistical Theory and Applications, Vol. 17, No. 2 (June 2018) 359–374. BAYESIAN APPROACH IN ESTIMATION OF SHAPE PARAMETER OF THE EXPONENTIATED MOMENT EXPONENTIAL DISTRIBUTION. Kawsar Fatima, Department of Statistics, University of Kashmir, Srinagar, India. S.P. Ahmad, Department of Statistics, University of Kashmir, Srinagar, India. Received 1 November 2016. Accepted 19 June 2017. Abstract. In this paper, Bayes estimators of the unknown shape parameter of the exponentiated moment exponential distribution (EMED) have been derived by using two informative (gamma and chi-square) priors and two non-informative (Jeffreys' and uniform) priors under different loss functions, namely, the squared error loss function, the entropy loss function and the precautionary loss function. The maximum likelihood estimator (MLE) is obtained. Also, we used two real life data sets to illustrate the results derived. Keywords: Exponentiated Moment Exponential distribution; Maximum Likelihood Estimator; Bayesian estimation; Priors; Loss functions. 2000 Mathematics Subject Classification: 22E46, 53C35, 57S20. 1. Introduction. The exponentiated exponential distribution is a specific member of the exponentiated Weibull family. In analyzing several lifetime data situations, it has been observed that the two-parameter exponentiated exponential distribution can be used more effectively than the two-parameter gamma or Weibull distributions. When the shape parameter of the exponentiated exponential, gamma and Weibull distributions is one, these distributions reduce to the one-parameter exponential distribution. Hence, these three distributions are offshoots of the exponential distribution. Moment distributions have a vital role in mathematics and statistics, in particular probability theory, in research related to ecology, reliability, the biomedical field, econometrics, survey sampling and life-testing.

Bayes Estimator Recap - Example

Biostatistics 602 - Statistical Inference, Lecture 16: Evaluation of Bayes Estimator. Hyun Min Kang, March 14th, 2013. Outline: Recap, Bayes Risk, Consistency, Summary.

Last Lecture
• What is a Bayes Estimator?
• Is a Bayes Estimator the best unbiased estimator?
• Compared to other estimators, what are advantages of Bayes Estimator?
• What is conjugate family?
• What are the conjugate families of Binomial, Poisson, and Normal distribution?

Recap - Bayes Estimator
• $\theta$ : parameter
• $\pi(\theta)$ : prior distribution
• $X \mid \theta \sim f_X(x \mid \theta)$ : sampling distribution
• Posterior distribution of $\theta \mid x$ (Bayes' rule): $\pi(\theta \mid x) = \dfrac{\text{Joint}}{\text{Marginal}} = \dfrac{f_X(x \mid \theta)\,\pi(\theta)}{m(x)}$, where $m(x) = \int f(x \mid \theta)\,\pi(\theta)\,d\theta$
• Bayes Estimator of $\theta$ is $E(\theta \mid x) = \int_{\theta \in \Omega} \theta\, \pi(\theta \mid x)\, d\theta$

Recap - Example
• $X_1, \cdots, X_n \overset{\text{i.i.d.}}{\sim} \text{Bernoulli}(p)$
• $\pi(p) \sim \text{Beta}(\alpha, \beta)$
• Prior guess : $\hat{p} = \dfrac{\alpha}{\alpha + \beta}$
• Posterior distribution : $\pi(p \mid x) \sim \text{Beta}\left(\sum x_i + \alpha,\ n - \sum x_i + \beta\right)$
• Bayes estimator: $\hat{p} = \dfrac{\alpha + \sum x_i}{\alpha + \beta + n} = \dfrac{\sum x_i}{n} \cdot \dfrac{n}{\alpha + \beta + n} + \dfrac{\alpha}{\alpha + \beta} \cdot \dfrac{\alpha + \beta}{\alpha + \beta + n}$

Loss Function Optimality
Loss Function: Let $L(\theta, \hat{\theta})$ be a function of $\theta$ and $\hat{\theta}$.
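The Bernoulli and Beta recap can be verified numerically. The following is a minimal sketch; the function names, the data and the prior parameters are made up for illustration. It computes the posterior parameters, the posterior mean, and the same estimate written as a weighted average of the sample mean and the prior guess.

```python
def beta_bernoulli_posterior(xs, alpha, beta):
    """Return the Beta posterior parameters after observing 0/1 data xs
    under a Beta(alpha, beta) prior on the success probability p."""
    successes = sum(xs)
    n = len(xs)
    return alpha + successes, beta + n - successes


def posterior_mean(xs, alpha, beta):
    """Bayes estimator under squared error loss: the posterior mean."""
    a_post, b_post = beta_bernoulli_posterior(xs, alpha, beta)
    return a_post / (a_post + b_post)


def weighted_average_form(xs, alpha, beta):
    """The same estimator as a convex combination of the sample mean
    and the prior mean alpha / (alpha + beta)."""
    n = len(xs)
    weight_on_data = n / (alpha + beta + n)
    sample_mean = sum(xs) / n
    prior_mean = alpha / (alpha + beta)
    return weight_on_data * sample_mean + (1 - weight_on_data) * prior_mean


# Hypothetical data and prior, for illustration only.
xs = [1, 0, 1, 1, 0, 1, 1, 1]
print(beta_bernoulli_posterior(xs, 2, 2))
print(posterior_mean(xs, 2, 2), weighted_average_form(xs, 2, 2))
```

As n grows, the weight on the sample mean tends to 1, so the prior guess matters less and less.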

9 Bayesian Inference

9 Bayesian inference 1702 - 1761. 9.1 Subjective probability. This is probability regarded as degree of belief. A subjective probability of an event $A$ is assessed as $p$ if you are prepared to stake £$pM$ to win £$M$ and equally prepared to accept a stake of £$pM$ to win £$M$. In other words, the bet is fair and you are assumed to behave rationally. 9.1.1 Kolmogorov's axioms. How does subjective probability fit in with the fundamental axioms? Let $\mathcal{A}$ be the set of all subsets of a countable sample space $\Omega$. Then (i) $P(A) \ge 0$ for every $A \in \mathcal{A}$; (ii) $P(\Omega) = 1$; (iii) if $\{A_\lambda : \lambda \in \Lambda\}$ is a countable set of mutually exclusive events belonging to $\mathcal{A}$, then $P\left(\bigcup_{\lambda \in \Lambda} A_\lambda\right) = \sum_{\lambda \in \Lambda} P(A_\lambda)$. Obviously the subjective interpretation has no difficulty in conforming with (i) and (ii). (iii) is slightly less obvious. Suppose we have 2 events $A$ and $B$ such that $A \cap B = \emptyset$. Consider a stake of £$p_A M$ to win £$M$ if $A$ occurs and a stake of £$p_B M$ to win £$M$ if $B$ occurs. The total stake for bets on $A$ or $B$ occurring is £$p_A M$ + £$p_B M$ to win £$M$ if $A$ or $B$ occurs. Thus we have £$(p_A + p_B)M$ to win £$M$ and so $P(A \cup B) = P(A) + P(B)$. 9.1.2 Conditional probability. Define $p_B$, $p_{AB}$, $p_{A|B}$ such that £$p_B M$ is the fair stake for £$M$ if $B$ occurs; £$p_{AB} M$ is the fair stake for £$M$ if $A$ and $B$ occur; £$p_{A|B} M$ is the fair stake for £$M$ if $A$ occurs given $B$ has occurred, otherwise the bet is off.

Lecture 8 — October 15 8.1 Bayes Estimators and Average Risk

STATS 300A: Theory of Statistics, Fall 2015. Lecture 8, October 15. Lecturer: Lester Mackey. Scribe: Hongseok Namkoong, Phan Minh Nguyen. Warning: These notes may contain factual and/or typographic errors. 8.1 Bayes Estimators and Average Risk Optimality. 8.1.1 Setting. We discuss the average risk optimality of estimators within the framework of Bayesian decision problems. As with the general decision problem setting, the Bayesian setup considers a model $\mathcal{P} = \{P_\theta : \theta \in \Omega\}$ for our data $X$, a loss function $L(\theta, d)$, and risk $R(\theta, \delta)$. In the frequentist approach, the parameter $\theta$ was considered to be an unknown deterministic quantity. In the Bayesian paradigm, we consider a measure $\Lambda$ over the parameter space which we call a prior. Assuming this measure defines a probability distribution, we interpret the parameter $\theta$ as an outcome of the random variable $\Theta \sim \Lambda$. So, in this setup both $X$ and $\theta$ are random. Conditioning on $\Theta = \theta$, we assume the data is generated by the distribution $P_\theta$. Now, the optimality goal for our decision problem of estimating $g(\theta)$ is the minimization of the average risk $r(\Lambda, \delta) = E[L(\Theta, \delta(X))] = E[E[L(\Theta, \delta(X)) \mid X]]$. An estimator $\delta$ which minimizes this average risk is a Bayes estimator and is sometimes referred to as being Bayes. Note that the average risk is an expectation over both the random variables $\Theta$ and $X$. Then by using the tower property, we showed last time that it suffices to find an estimator $\delta$ which minimizes the posterior risk $E[L(\Theta, \delta(X)) \mid X = x]$ for almost every $x$.

Unbiasedness and Bayes Estimators

Unbiasedness and Bayes Estimators. Siamak Noorbaloochi, Center for Chronic Disease Outcomes Research, Minneapolis VA Medical Center, and Glen Meeden, School of Statistics, University of Minnesota (313 Ford Hall, 224 Church St. S.E., Minneapolis, MN 55455-0460). Research supported in part by NSF Grant DMS 9971331. A simple geometric representation of Bayes and unbiased rules for squared error loss is provided. Some orthogonality relationships between them and the functions they are estimating are proved. Bayes estimators are shown to behave asymptotically like unbiased estimators. Key Words: Unbiasedness, Bayes estimators, squared error loss and consistency. 1 Introduction. Let $X$ be a random variable, possibly vector valued, with a family of possible probability distributions indexed by the parameter $\theta \in \Theta$. Suppose $\gamma$, some real-valued function defined on $\Theta$, is to be estimated using $X$. An estimator $\delta$ is said to be unbiased for $\gamma$ if $E_\theta\, \delta(X) = \gamma(\theta)$ for all $\theta \in \Theta$. Lehmann (1951) proposed a generalization of this notion of unbiasedness which takes into account the loss function for the problem. Noorbaloochi and Meeden (1983) proposed a generalization of Lehmann's definition which depends on a prior distribution $\pi$ for $\theta$. Assuming squared error loss, let the Bayes risk of an estimator $\delta$ for estimating $\gamma$ be denoted by $r(\delta, \gamma; \pi)$. Then under their definition $\delta$ is unbiased for estimating $\gamma$ for the prior $\pi$ if $r(\delta, \gamma; \pi) = \inf_{\gamma'} r(\delta, \gamma'; \pi)$. Under very weak assumptions it is easy to see that this definition reduces to the usual one.

Bayesian Cluster Enumeration Criterion for Unsupervised Learning

Bayesian Cluster Enumeration Criterion for Unsupervised Learning. Freweyni K. Teklehaymanot, Student Member, IEEE, Michael Muma, Member, IEEE, and Abdelhak M. Zoubir, Fellow, IEEE.

Abstract: We derive a new Bayesian Information Criterion (BIC) by formulating the problem of estimating the number of clusters in an observed data set as maximization of the posterior probability of the candidate models. Given that some mild assumptions are satisfied, we provide a general BIC expression for a broad class of data distributions. This serves as a starting point when deriving the BIC for specific distributions. Along this line, we provide a closed-form BIC expression for multivariate Gaussian distributed variables. We show that incorporating the data structure of the clustering problem into the derivation of the BIC results in an expression whose penalty term is different from that of the original BIC. We propose a two-step cluster enumeration algorithm. First, a model-based unsupervised learning algorithm partitions the data according to a given set of candidate models.

The BIC was originally derived by Schwarz in [8] assuming that (i) the observations are independent and identically distributed (iid), (ii) they arise from an exponential family of distributions, and (iii) the candidate models are linear in parameters. Ignoring these rather restrictive assumptions, the BIC has been used in a much larger scope of model selection problems. A justification of the widespread applicability of the BIC was provided in [16] by generalizing Schwarz's derivation. In [16], the authors drop the first two assumptions made by Schwarz given that some regularity conditions are satisfied. The BIC is a generic criterion in the sense that it does not incorporate information regarding the specific

Transformations and Bayesian Estimation of Skewed and Heavy-Tailed Densities

Transformations and Bayesian Estimation of Skewed and Heavy-Tailed Densities. Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By Andrew Bean, B.A., M.S. Graduate Program in Statistics, The Ohio State University, 2017. Dissertation Committee: Xinyi Xu, Co-Advisor; Steven N. MacEachern, Co-Advisor; Yoonkyung Lee; Matthew T. Pratola. © Copyright by Andrew Bean 2017. Abstract. In data analysis applications characterized by large and possibly irregular data sets, nonparametric statistical techniques aim to ensure that, as the sample size grows, all unusual features of the data generating process can be captured. Good large-sample performance can be guaranteed in broad classes of problems. Yet within these broad classes, some problems may be substantially more difficult than others. This fact, long recognized in classical nonparametrics, also holds in the growing field of Bayesian nonparametrics, where flexible prior distributions are developed to allow for an infinite-dimensional set of possible truths. This dissertation studies the Bayesian approach to the classic problem of nonparametric density estimation, in the presence of specific irregularities such as heavy tails and skew. The problem of estimating an unknown probability density is recognized as being harder when the density is skewed or heavy-tailed than when it is symmetric and light-tailed. It is a more challenging problem for classical kernel density estimators, where the expected squared-error loss is higher for heavier-tailed densities. It is also a more challenging problem in Bayesian density estimation, where heavy tails preclude the analytical treatment required to establish a large-sample convergence rate for the popular Dirichlet-Process (DP) mixture model.

Comparison Between Bayesian and Maximum Likelihood Estimation of Scale Parameter in Weibull Distribution with Known Shape Under Linex Loss Function

Journal of Scientific Research Vol. 55, 2011 : 163-172, Banaras Hindu University, Varanasi, ISSN : 0447-9483. COMPARISON BETWEEN BAYESIAN AND MAXIMUM LIKELIHOOD ESTIMATION OF SCALE PARAMETER IN WEIBULL DISTRIBUTION WITH KNOWN SHAPE UNDER LINEX LOSS FUNCTION. B.N. Pandey, Nidhi Dwivedi and Pulastya Bandyopadhyay, Department of Statistics, Banaras Hindu University, Varanasi-221005. Abstract. The Weibull distribution is widely employed in modeling and analyzing lifetime data. The present paper considers the estimation of the scale parameter of the two-parameter Weibull distribution with known shape. Maximum likelihood estimation is discussed. The Bayes estimator is obtained using Jeffreys' prior under the linex loss function. Relative efficiencies of the estimators are calculated in small and large samples for over-estimation and under-estimation using simulated data sets. It is observed that the Bayes estimator fares better, especially for small sample sizes and when over-estimation is more critical than under-estimation. INTRODUCTION. The Weibull distribution is one of the most widely used distributions for analyzing lifetime data. It is found to be useful in diverse fields ranging from engineering to medical sciences (see Lawless [4], Martz and Waller [6]). The Weibull family is a generalization of the exponential family and can model data exhibiting monotone hazard rate behavior, i.e. it can accommodate three types of failure rates, namely increasing, decreasing and constant. The probability density function of the Weibull distribution is given by

$$f(x \mid \alpha, \beta) = \frac{\beta}{\alpha}\, x^{\beta - 1} \exp\left[-\frac{x^{\beta}}{\alpha}\right]; \quad x \ge 0,\ \alpha, \beta > 0 \qquad (1)$$

where the parameter $\beta$ determines the shape of the distribution and $\alpha$ is the scale parameter.
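As a quick sanity check on the parametrization in equation (1), the sketch below evaluates the density, confirms numerically that it integrates to about 1, and computes the maximum likelihood estimate of the scale when the shape is known. The closed form used for the MLE (the mean of the x_i raised to the power beta) is the standard result for this parametrization rather than something quoted from the excerpt; the function names and numerical values are illustrative assumptions.

```python
import math


def weibull_pdf(x, alpha, beta):
    """Density (beta / alpha) * x**(beta - 1) * exp(-x**beta / alpha).

    For simplicity the density is returned as 0 at x <= 0, which also
    avoids evaluating 0 ** (negative number) when beta < 1.
    """
    if x <= 0:
        return 0.0
    return (beta / alpha) * x ** (beta - 1) * math.exp(-(x ** beta) / alpha)


def scale_mle(xs, beta):
    """MLE of alpha when beta is known: the average of x_i ** beta.
    (Standard result for this parametrization; stated here as an assumption.)"""
    return sum(x ** beta for x in xs) / len(xs)


def midpoint_integral(f, lower, upper, steps=100_000):
    """Plain midpoint-rule integration, enough for a sanity check."""
    width = (upper - lower) / steps
    return width * sum(f(lower + (i + 0.5) * width) for i in range(steps))


# Hypothetical parameter values and data.
alpha, beta = 2.0, 1.5
print(midpoint_integral(lambda x: weibull_pdf(x, alpha, beta), 0.0, 50.0))
print(scale_mle([1.0, 2.0, 3.0], beta=2.0))
```

The MLE formula follows because, under this density, x ** beta is exponentially distributed with mean alpha.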

Bayes Estimators

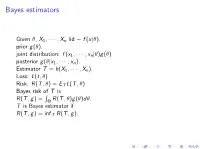

Bayes estimators. Given $\theta$, $X_1, \cdots, X_n$ iid $\sim f(x \mid \theta)$. Prior: $g(\theta)$. Joint distribution: $f(x_1, \cdots, x_n \mid \theta)\, g(\theta)$. Posterior: $g(\theta \mid x_1, \cdots, x_n)$. Estimator: $T = h(X_1, \cdots, X_n)$. Loss: $L(t, \theta)$. Risk: $R(T, \theta) = E_T\, L(T, \theta)$. The Bayes risk of $T$ is $R(T, g) = \int_\Theta R(T, \theta)\, g(\theta)\, d\theta$. $T$ is a Bayes estimator if $R(T, g) = \inf_{T'} R(T', g)$. To minimize the Bayes risk, we only need to minimize the conditional expected loss given each $x$ observed.

Binomial model: $X \sim \text{bin}(x \mid n, \theta)$, prior $g(\theta) \sim \text{unif}(0, 1)$, posterior $g(\theta \mid x) \sim \text{beta}(x + 1, n - x + 1)$. Beta distribution: $f(x) = \dfrac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\Gamma(\beta)}\, x^{\alpha - 1} (1 - x)^{\beta - 1}$, $0 \le x \le 1$; $E(X) = \dfrac{\alpha}{\alpha + \beta}$. For squared error loss, the Bayes estimator is the posterior mean $\dfrac{x + 1}{n + 2}$: if $X$ is a random variable, the constant $c$ that minimizes $E(X - c)^2$ is $c = E(X)$.

Prior distributions. Where do prior distributions come from?
* a priori knowledge about $\theta$
* population interpretation (a population of possible $\theta$ values)
* mathematical convenience (conjugate prior)
Conjugate distribution: the prior and the posterior distribution are in the same parametric family.

Conjugate prior distribution. Advantages:
* mathematically convenient
* easy to interpret
* can provide good approximation to many prior opinions (especially if we allow mixtures of distributions from the conjugate family)
Disadvantages: may not be realistic.

Binomial model: $X \mid \theta \sim \text{bin}(n, \theta)$, $\theta \sim \text{beta}(\alpha, \beta)$. Beta distribution: $f(x) = \dfrac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\Gamma(\beta)}\, x^{\alpha - 1} (1 - x)^{\beta - 1}$, $0 \le x \le 1$; $E(X) = \dfrac{\alpha}{\alpha + \beta}$.
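The statement that the posterior mean minimizes the posterior expected squared error can be illustrated by brute force. This sketch discretizes the posterior for the binomial model with a uniform prior, evaluates the expected loss for a grid of candidate estimates c, and picks the smallest. The grid sizes and the observed values x and n are arbitrary choices made for illustration, not values from the slides.

```python
def posterior_weights(x, n, grid):
    """Discretized posterior for theta under a unif(0, 1) prior and a
    binomial likelihood: weights proportional to theta**x * (1 - theta)**(n - x)."""
    unnormalized = [t ** x * (1 - t) ** (n - x) for t in grid]
    total = sum(unnormalized)
    return [w / total for w in unnormalized]


def expected_squared_loss(c, grid, weights):
    """Posterior expected loss E[(theta - c)**2 | x] on the grid."""
    return sum(w * (t - c) ** 2 for t, w in zip(grid, weights))


# Hypothetical observation: x successes in n trials.
x, n = 7, 10
theta_grid = [(i + 0.5) / 1000 for i in range(1000)]   # midpoints of (0, 1)
weights = posterior_weights(x, n, theta_grid)

candidates = [i / 1000 for i in range(1001)]           # candidate estimates c
best_c = min(candidates, key=lambda c: expected_squared_loss(c, theta_grid, weights))

print("grid minimizer:", best_c)
print("posterior mean (x + 1) / (n + 2):", (x + 1) / (n + 2))
```

Up to the resolution of the candidate grid, the minimizer agrees with (x + 1) / (n + 2), the mean of the beta(x + 1, n - x + 1) posterior.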

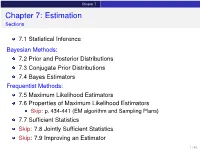

Chapter 7: Estimation Sections

Chapter 7: Estimation

Sections
7.1 Statistical Inference
Bayesian Methods: 7.2 Prior and Posterior Distributions; 7.3 Conjugate Prior Distributions; 7.4 Bayes Estimators
Frequentist Methods: 7.5 Maximum Likelihood Estimators; 7.6 Properties of Maximum Likelihood Estimators (Skip: p. 434-441, EM algorithm and Sampling Plans); 7.7 Sufficient Statistics
Skip: 7.8 Jointly Sufficient Statistics
Skip: 7.9 Improving an Estimator

7.1 Statistical Inference
We have seen statistical models in the form of probability distributions: $f(x \mid \theta)$. In this section the general notation for any parameter will be $\theta$. The parameter space will be denoted by $\Omega$. For example: the lifetime of a Christmas light series follows the Expo($\theta$) distribution; the average of 63 poured drinks is approximately normal with mean $\theta$; the number of people that have a disease out of a group of $N$ people follows the Binomial($N, \theta$) distribution. In practice the value of the parameter $\theta$ is unknown.

Statistical Inference: Given the data we have observed, what can we say about $\theta$? I.e. we observe random variables $X_1, \ldots, X_n$ that we assume follow our statistical model and then we want to draw probabilistic conclusions about the parameter $\theta$. For example: suppose I tested 5 Christmas light series from the same manufacturer and they lasted for 21, 103, 76, 88 and 96 days. Assuming that the lifetimes are independent and follow Expo($\theta$), what does this data set tell me about the failure rate $\theta$?

Statistical Inference: Another example. Say I take a random sample of 100 people and test them all for a disease.
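The Christmas light question above can be given two quick answers in code. This is a sketch only: it uses the five lifetimes quoted in the example, the standard maximum likelihood estimate of an exponential rate (n divided by the sum of the observations), and a Gamma prior on the rate whose shape and rate values are hypothetical and chosen purely for illustration.

```python
# Lifetimes in days, as given in the example above.
lifetimes_days = [21, 103, 76, 88, 96]


def exponential_rate_mle(xs):
    """Maximum likelihood estimate of the failure rate theta for
    independent Expo(theta) lifetimes: n / sum(xs)."""
    return len(xs) / sum(xs)


def gamma_posterior(xs, prior_shape, prior_rate):
    """Conjugate update: a Gamma(prior_shape, prior_rate) prior on theta
    gives a Gamma(prior_shape + n, prior_rate + sum(xs)) posterior."""
    return prior_shape + len(xs), prior_rate + sum(xs)


rate_mle = exponential_rate_mle(lifetimes_days)

# Hypothetical prior parameters (not taken from the slides).
post_shape, post_rate = gamma_posterior(lifetimes_days, prior_shape=1.0, prior_rate=100.0)
posterior_mean = post_shape / post_rate  # Bayes estimate under squared error loss

print("MLE of failure rate:", rate_mle)
print("posterior mean of failure rate:", posterior_mean)
```

The two estimates differ because the prior acts like one extra observed failure over 100 extra days of exposure; with more data the difference shrinks.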