Compiling the Linux Kernel with LLVM Tools

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

FIPS 140-2 Non-Proprietary Security Policy

Kernel Crypto API Cryptographic Module version 1.0 FIPS 140-2 Non-Proprietary Security Policy Version 1.3 Last update: 2020-03-02 Prepared by: atsec information security corporation 9130 Jollyville Road, Suite 260 Austin, TX 78759 www.atsec.com © 2020 Canonical Ltd. / atsec information security This document can be reproduced and distributed only whole and intact, including this copyright notice. Kernel Crypto API Cryptographic Module FIPS 140-2 Non-Proprietary Security Policy Table of Contents 1. Cryptographic Module Specification ..................................................................................................... 5 1.1. Module Overview ..................................................................................................................................... 5 1.2. Modes of Operation ................................................................................................................................. 9 2. Cryptographic Module Ports and Interfaces ........................................................................................ 10 3. Roles, Services and Authentication ..................................................................................................... 11 3.1. Roles .......................................................................................................................................................11 3.2. Services ...................................................................................................................................................11 -

Introduction to Computer Systems 15-213/18-243, Spring 2009 1St

M4-L3: Executables CSE351, Winter 2021 Executables CSE 351 Winter 2021 Instructor: Teaching Assistants: Mark Wyse Kyrie Dowling Catherine Guevara Ian Hsiao Jim Limprasert Armin Magness Allie Pfleger Cosmo Wang Ronald Widjaja http://xkcd.com/1790/ M4-L3: Executables CSE351, Winter 2021 Administrivia ❖ Lab 2 due Monday (2/8) ❖ hw12 due Friday ❖ hw13 due next Wednesday (2/10) ▪ Based on the next two lectures, longer than normal ❖ Remember: HW and readings due before lecture, at 11am PST on due date 2 M4-L3: Executables CSE351, Winter 2021 Roadmap C: Java: Memory & data car *c = malloc(sizeof(car)); Car c = new Car(); Integers & floats c->miles = 100; c.setMiles(100); x86 assembly c->gals = 17; c.setGals(17); Procedures & stacks float mpg = get_mpg(c); float mpg = Executables free(c); c.getMPG(); Arrays & structs Memory & caches Assembly get_mpg: Processes pushq %rbp language: movq %rsp, %rbp Virtual memory ... Memory allocation popq %rbp Java vs. C ret OS: Machine 0111010000011000 100011010000010000000010 code: 1000100111000010 110000011111101000011111 Computer system: 3 M4-L3: Executables CSE351, Winter 2021 Reading Review ❖ Terminology: ▪ CALL: compiler, assembler, linker, loader ▪ Object file: symbol table, relocation table ▪ Disassembly ▪ Multidimensional arrays, row-major ordering ▪ Multilevel arrays ❖ Questions from the Reading? ▪ also post to Ed post! 4 M4-L3: Executables CSE351, Winter 2021 Building an Executable from a C File ❖ Code in files p1.c p2.c ❖ Compile with command: gcc -Og p1.c p2.c -o p ▪ Put resulting machine code in -

The LLVM Instruction Set and Compilation Strategy

The LLVM Instruction Set and Compilation Strategy Chris Lattner Vikram Adve University of Illinois at Urbana-Champaign lattner,vadve ¡ @cs.uiuc.edu Abstract This document introduces the LLVM compiler infrastructure and instruction set, a simple approach that enables sophisticated code transformations at link time, runtime, and in the field. It is a pragmatic approach to compilation, interfering with programmers and tools as little as possible, while still retaining extensive high-level information from source-level compilers for later stages of an application’s lifetime. We describe the LLVM instruction set, the design of the LLVM system, and some of its key components. 1 Introduction Modern programming languages and software practices aim to support more reliable, flexible, and powerful software applications, increase programmer productivity, and provide higher level semantic information to the compiler. Un- fortunately, traditional approaches to compilation either fail to extract sufficient performance from the program (by not using interprocedural analysis or profile information) or interfere with the build process substantially (by requiring build scripts to be modified for either profiling or interprocedural optimization). Furthermore, they do not support optimization either at runtime or after an application has been installed at an end-user’s site, when the most relevant information about actual usage patterns would be available. The LLVM Compilation Strategy is designed to enable effective multi-stage optimization (at compile-time, link-time, runtime, and offline) and more effective profile-driven optimization, and to do so without changes to the traditional build process or programmer intervention. LLVM (Low Level Virtual Machine) is a compilation strategy that uses a low-level virtual instruction set with rich type information as a common code representation for all phases of compilation. -

CERES Software Bulletin 95-12

CERES Software Bulletin 95-12 Fortran 90 Linking Experiences, September 5, 1995 1.0 Purpose: To disseminate experience gained in the process of linking Fortran 90 software with library routines compiled under Fortran 77 compiler. 2.0 Originator: Lyle Ziegelmiller ([email protected]) 3.0 Description: One of the summer students, Julia Barsie, was working with a plot program which was written in f77. This program called routines from a graphics package known as NCAR, which is also written in f77. Everything was fine. The plot program was converted to f90, and a new version of the NCAR graphical package was released, which was written in f77. A problem arose when trying to link the new f90 version of the plot program with the new f77 release of NCAR; many undefined references were reported by the linker. This bulletin is intended to convey what was learned in the effort to accomplish this linking. The first step I took was to issue the "-dryrun" directive to the f77 compiler when using it to compile the original f77 plot program and the original NCAR graphics library. "- dryrun" causes the linker to produce an output detailing all the various libraries that it links with. Note that these libaries are in addition to the libaries you would select on the command line. For example, you might compile a program with erbelib, but the linker would have to link with librarie(s) that contain the definitions of sine or cosine. Anyway, it was my hypothesis that if everything compiled and linked with f77, then all the libraries must be contained in the output from the f77's "-dryrun" command. -

What Is LLVM? and a Status Update

What is LLVM? And a Status Update. Approved for public release Hal Finkel Leadership Computing Facility Argonne National Laboratory Clang, LLVM, etc. ✔ LLVM is a liberally-licensed(*) infrastructure for creating compilers, other toolchain components, and JIT compilation engines. ✔ Clang is a modern C++ frontend for LLVM ✔ LLVM and Clang will play significant roles in exascale computing systems! (*) Now under the Apache 2 license with the LLVM Exception LLVM/Clang is both a research platform and a production-quality compiler. 2 A role in exascale? Current/Future HPC vendors are already involved (plus many others)... Apple + Google Intel (Many millions invested annually) + many others (Qualcomm, Sony, Microsoft, Facebook, Ericcson, etc.) ARM LLVM IBM Cray NVIDIA (and PGI) Academia, Labs, etc. AMD 3 What is LLVM: LLVM is a multi-architecture infrastructure for constructing compilers and other toolchain components. LLVM is not a “low-level virtual machine”! LLVM IR Architecture-independent simplification Architecture-aware optimization (e.g. vectorization) Assembly printing, binary generation, or JIT execution Backends (Type legalization, instruction selection, register allocation, etc.) 4 What is Clang: LLVM IR Clang is a C++ frontend for LLVM... Code generation Parsing and C++ Source semantic analysis (C++14, C11, etc.) Static analysis ● For basic compilation, Clang works just like gcc – using clang instead of gcc, or clang++ instead of g++, in your makefile will likely “just work.” ● Clang has a scalable LTO, check out: https://clang.llvm.org/docs/ThinLTO.html 5 The core LLVM compiler-infrastructure components are one of the subprojects in the LLVM project. These components are also referred to as “LLVM.” 6 What About Flang? ● Started as a collaboration between DOE and NVIDIA/PGI. -

Linking + Libraries

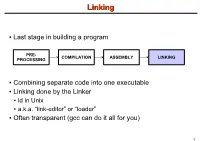

LinkingLinking ● Last stage in building a program PRE- COMPILATION ASSEMBLY LINKING PROCESSING ● Combining separate code into one executable ● Linking done by the Linker ● ld in Unix ● a.k.a. “link-editor” or “loader” ● Often transparent (gcc can do it all for you) 1 LinkingLinking involves...involves... ● Combining several object modules (the .o files corresponding to .c files) into one file ● Resolving external references to variables and functions ● Producing an executable file (if no errors) file1.c file1.o file2.c gcc file2.o Linker Executable fileN.c fileN.o Header files External references 2 LinkingLinking withwith ExternalExternal ReferencesReferences file1.c file2.c int count; #include <stdio.h> void display(void); Compiler extern int count; int main(void) void display(void) { file1.o file2.o { count = 10; with placeholders printf(“%d”,count); display(); } return 0; Linker } ● file1.o has placeholder for display() ● file2.o has placeholder for count ● object modules are relocatable ● addresses are relative offsets from top of file 3 LibrariesLibraries ● Definition: ● a file containing functions that can be referenced externally by a C program ● Purpose: ● easy access to functions used repeatedly ● promote code modularity and re-use ● reduce source and executable file size 4 LibrariesLibraries ● Static (Archive) ● libname.a on Unix; name.lib on DOS/Windows ● Only modules with referenced code linked when compiling ● unlike .o files ● Linker copies function from library into executable file ● Update to library requires recompiling program 5 LibrariesLibraries ● Dynamic (Shared Object or Dynamic Link Library) ● libname.so on Unix; name.dll on DOS/Windows ● Referenced code not copied into executable ● Loaded in memory at run time ● Smaller executable size ● Can update library without recompiling program ● Drawback: slightly slower program startup 6 LibrariesLibraries ● Linking a static library libpepsi.a /* crave source file */ … gcc .. -

The Xen Port of Kexec / Kdump a Short Introduction and Status Report

The Xen Port of Kexec / Kdump A short introduction and status report Magnus Damm Simon Horman VA Linux Systems Japan K.K. www.valinux.co.jp/en/ Xen Summit, September 2006 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 1 / 17 Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 2 / 17 Introduction to Kexec Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 3 / 17 Kexec allows you to reboot from Linux into any kernel. as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. It is like a reboot but it is indepedent of the system firmware...” Configuration help text in Linux-2.6.17 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 4 / 17 . as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. -

Using the DLFM Package on the Cray XE6 System

Using the DLFM Package on the Cray XE6 System Mike Davis, Cray Inc. Version 1.4 - October 2013 1.0: Introduction The DLFM package is a set of libraries and tools that can be applied to a dynamically-linked application, or an application that uses Python, to provide improved performance during the loading of dynamic libraries and importing of Python modules when running the application at large scale. Included in the DLFM package are: a set of wrapper functions that interface with the dynamic linker (ld.so) to cache the application's dynamic library load operations; and a custom libpython.so, with a set of optimized components, that caches the application's Python import operations. 2.0: Dynamic Linking and Python Importing Without DLFM When a dynamically-linked application is executed, the set of dependent libraries is loaded in sequence by ld.so prior to the transfer of control to the application's main function. This set of dependent libraries ordinarily numbers a dozen or so, but can number many more, depending on the needs of the application; their names and paths are given by the ldd(1) command. As ld.so processes each dependent library, it executes a series of system calls to find the library and make the library's contents available to the application. These include: fd = open (/path/to/dependent/library, O_RDONLY); read (fd, elfhdr, 832); // to get the library ELF header fstat (fd, &stbuf); // to get library attributes, such as size mmap (buf, length, prot, MAP_PRIVATE, fd, offset); // read text segment mmap (buf, length, prot, MAP_PRIVATE, fd, offset); // read data segment close (fd); The number of open() calls can be many times larger than the number of dependent libraries, because ld.so must search for each dependent library in multiple locations, as described on the ld.so(1) man page. -

A DSL for Resource Checking Using Finite State Automaton-Driven Symbolic Execution Code

Open Comput. Sci. 2021; 11:107–115 Research Article Endre Fülöp and Norbert Pataki* A DSL for Resource Checking Using Finite State Automaton-Driven Symbolic Execution https://doi.org/10.1515/comp-2020-0120 code. Compilers validate the syntactic elements, referred Received Mar 31, 2020; accepted May 28, 2020 variables, called functions to name a few. However, many problems may remain undiscovered. Abstract: Static analysis is an essential way to find code Static analysis is a widely-used method which is by smells and bugs. It checks the source code without exe- definition the act of uncovering properties and reasoning cution and no test cases are required, therefore its cost is about software without observing its runtime behaviour, lower than testing. Moreover, static analysis can help in restricting the scope of tools to those which operate on the software engineering comprehensively, since static anal- source representation, the code written in a single or mul- ysis can be used for the validation of code conventions, tiple programming languages. While most static analysis for measuring software complexity and for executing code methods are designed to detect anomalies (called bugs) in refactorings as well. Symbolic execution is a static analy- software code, the methods they employ are varied [1]. One sis method where the variables (e.g. input data) are inter- major difference is the level of abstraction at which the preted with symbolic values. code is represented [2]. Because static analysis is closely Clang Static Analyzer is a powerful symbolic execution related to the compilation of the code, the formats used engine based on the Clang compiler infrastructure that to represent the different abstractions are not unique to can be used with C, C++ and Objective-C. -

SMT-Based Refutation of Spurious Bug Reports in the Clang Static Analyzer

SMT-Based Refutation of Spurious Bug Reports in the Clang Static Analyzer Mikhail R. Gadelha∗, Enrico Steffinlongo∗, Lucas C. Cordeiroy, Bernd Fischerz, and Denis A. Nicole∗ ∗University of Southampton, UK. yUniversity of Manchester, UK. zStellenbosch University, South Africa. Abstract—We describe and evaluate a bug refutation extension bit in a is one, and (a & 1) ˆ 1 inverts the last bit in a. for the Clang Static Analyzer (CSA) that addresses the limi- The analyzer, however, produces the following (spurious) bug tations of the existing built-in constraint solver. In particular, report when analyzing the program: we complement CSA’s existing heuristics that remove spurious bug reports. We encode the path constraints produced by CSA as Satisfiability Modulo Theories (SMT) problems, use SMT main.c:4:12: warning: Dereference of null solvers to precisely check them for satisfiability, and remove pointer (loaded from variable ’z’) bug reports whose associated path constraints are unsatisfi- return *z; able. Our refutation extension refutes spurious bug reports in ˆ˜ 8 out of 12 widely used open-source applications; on aver- age, it refutes ca. 7% of all bug reports, and never refutes 1 warning generated. any true bug report. It incurs only negligible performance overheads, and on average adds 1.2% to the runtime of the The null pointer dereference reported here means that CSA full Clang/LLVM toolchain. A demonstration is available at claims to nevertheless have found a path where the dereference https://www.youtube.com/watch?v=ylW5iRYNsGA. of z is reachable. Such spurious bug reports are in practice common; in our I. -

The Linux 2.4 Kernel's Startup Procedure

The Linux 2.4 Kernel’s Startup Procedure William Gatliff 1. Overview This paper describes the Linux 2.4 kernel’s startup process, from the moment the kernel gets control of the host hardware until the kernel is ready to run user processes. Along the way, it covers the programming environment Linux expects at boot time, how peripherals are initialized, and how Linux knows what to do next. 2. The Big Picture Figure 1 is a function call diagram that describes the kernel’s startup procedure. As it shows, kernel initialization proceeds through a number of distinct phases, starting with basic hardware initialization and ending with the kernel’s launching of /bin/init and other user programs. The dashed line in the figure shows that init() is invoked as a kernel thread, not as a function call. Figure 1. The kernel’s startup procedure. Figure 2 is a flowchart that provides an even more generalized picture of the boot process, starting with the bootloader extracting and running the kernel image, and ending with running user programs. Figure 2. The kernel’s startup procedure, in less detail. The following sections describe each of these function calls, including examples taken from the Hitachi SH7750/Sega Dreamcast version of the kernel. 3. In The Beginning... The Linux boot process begins with the kernel’s _stext function, located in arch/<host>/kernel/head.S. This function is called _start in some versions. Interrupts are disabled at this point, and only minimal memory accesses may be possible depending on the capabilities of the host hardware. -

CUDA Flux: a Lightweight Instruction Profiler for CUDA Applications

CUDA Flux: A Lightweight Instruction Profiler for CUDA Applications Lorenz Braun Holger Froning¨ Institute of Computer Engineering Institute of Computer Engineering Heidelberg University Heidelberg University Heidelberg, Germany Heidelberg, Germany [email protected] [email protected] Abstract—GPUs are powerful, massively parallel processors, hardware, it lacks verbosity when it comes to analyzing the which require a vast amount of thread parallelism to keep their different types of instructions executed. This problem is aggra- thousands of execution units busy, and to tolerate latency when vated by the recently frequent introduction of new instructions, accessing its high-throughput memory system. Understanding the behavior of massively threaded GPU programs can be for instance floating point operations with reduced precision difficult, even though recent GPUs provide an abundance of or special function operations including Tensor Cores for ma- hardware performance counters, which collect statistics about chine learning. Similarly, information on the use of vectorized certain events. Profiling tools that assist the user in such analysis operations is often lost. Characterizing workloads on PTX for their GPUs, like NVIDIA’s nvprof and cupti, are state-of- level is desirable as PTX allows us to determine the exact types the-art. However, instrumentation based on reading hardware performance counters can be slow, in particular when the number of the instructions a kernel executes. On the contrary, nvprof of metrics is large. Furthermore, the results can be inaccurate as metrics are based on a lower-level representation called SASS. instructions are grouped to match the available set of hardware Still, even if there was an exact mapping, some information on counters.