Computer Science & Information Technology 98

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Totalmem),Form Factor,System on Chip,Screen Sizes,Screen Densities,Abis,Android SDK Versions,Opengl ES Versions

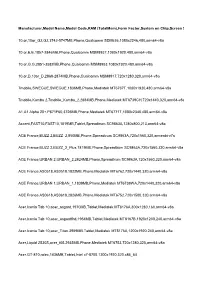

Manufacturer,Model Name,Model Code,RAM (TotalMem),Form Factor,System on Chip,Screen Sizes,Screen Densities,ABIs,Android SDK Versions,OpenGL ES Versions 10.or,E,E,2846MB,Phone,Qualcomm MSM8937,1080x1920,480,arm64-v8a 10.or,G,G,3603MB,Phone,Qualcomm MSM8953,1080x1920,480,arm64-v8a 10.or,D,10or_D,2874MB,Phone,Qualcomm MSM8917,720x1280,320,arm64-v8a 4good,A103,4GOOD_Light_A103,907MB,Phone,Mediatek MT6737M,540x960,240,armeabi- v7a 4good,4GOOD Light B100,4GOOD_Light_B100,907MB,Phone,Mediatek MT6737M,540x960,240,armeabi-v7a 7Eleven,IN265,IN265,466MB,Phone,Mediatek MT6572,540x960,240,armeabi-v7a 7mobile,DRENA,DRENA,925MB,Phone,Spreadtrum SC7731C,480x800,240,armeabi-v7a 7mobile,KAMBA,KAMBA,1957MB,Phone,Mediatek MT6580,720x1280,320,armeabi-v7a 7mobile,SWEGUE,SWEGUE,1836MB,Phone,Mediatek MT6737T,1080x1920,480,arm64-v8a A.O.I. ELECTRONICS FACTORY,A.O.I.,TR10CS1_11,965MB,Tablet,Intel Z2520,1280x800,160,x86 Aamra WE,E2,E2,964MB,Phone,Mediatek MT6580,480x854,240,armeabi-v7a Accent,Pearl_A4,Pearl_A4,955MB,Phone,Mediatek MT6580,720x1440,320,armeabi-v7a Accent,FAST7 3G,FAST7_3G,954MB,Tablet,Mediatek MT8321,720x1280,160,armeabi-v7a Accent,Pearl A4 PLUS,PEARL_A4_PLUS,1929MB,Phone,Mediatek MT6737,720x1440,320,armeabi-v7a Accent,SPEED S8,SPEED_S8,894MB,Phone,Mediatek MT6580,720x1280,320,armeabi-v7a Acegame S.A. -

Android Untrusted Detection with Permission Based Scoring Analysis

International Journal of Information Technology Convergence and Services (IJITCS) Vol.8, No.3/4, August 2018 ANDROID UNTRUSTED DETECTION WITH PERMISSION BASED SCORING ANALYSIS Jackelou Sulapas Mapa College of Information Technology,Saint Joseph Institute of Technology,Montilla Boulevard, Butuan City. ABSTRACT Android smart phone is one of the fast growing mobile phones and because of these it the one of the most preferred target of malware developer. Malware apps can penetrate the device and gain privileges in which it can perform malicious activities such reading user contact, misusing of private information such as sending SMS and can harm user by exploiting the users private data which is stored in the device. The study is about implementation of detecting untrusted on android applications, which would be the basis of all future development regarding malware detection. The smartphone users worldwide are not aware of the permissions as the basis of all malicious activities that could possibly operate in an android system and may steal personal and private information. Android operating system is an open system in which users are allowed to install application from any unsafe sites. However permission mechanism of and android system is not enough to guarantee the invulnerability of the application that can harm the user. In this paper, the permission scoring-based analysis that will scrutinized the installed permission and allows user to increase the efficiency of Android permission to inform user about the risk of the installed Android application, in this paper, the framework that would classify the level of sensitivity of the permission access by the application. -

Flare 1.5 Download

Flare 1.5 download LINK TO DOWNLOAD Flare Reviews. Popular Downloads. Windows XP Service Pack 3 SP 3 Windows XP Service Pack 3 Build FINAL; Minecraft Build anything you can imagine. Mozilla Firefox Firefox is a fast, full-featured Web browser. Just Cause 2 Just Cause 2; Outlook Express Flare can be downloaded from our website for free. This download was checked by our built-in antivirus and was rated as clean. This free software was originally created by Igor Kogan. The following versions: and are the most frequently downloaded ones by the program users/5(6). Community Edition (Open Source) The source code is available under the GPL on renuzap.podarokideal.ru need installers, code contributions or donations to support this edition. If you want to check out the project plans for future releases of the project you can view the roadmap. Full ChangeLog. The Flare Minecraft Hack has been available to the public since Minecraft version The Development Team includes KNOX, Gildfesh, Aarow, and Lead Designer, Mr. Taco on Tuesdays. It looks better and performs faster then any other client available. Then, download the firmware for your product below. Click on the “View PDF Instructions” link or watch the informational video for details. View PDF Instructions. Flare. Files. Date. Downloads. USA: Mon-Fri 9AM-5PM (PT) International Markets. ThopTV APK v Download for Android; World Cricket Championship 3 Mod APK {Unlimited Coins} Free; Hill Climb Racing Mod APK v {Unlimited Coins & Gems} MovieStarPlanet Mod APK v {Unlimited Coins, Diamonds} Hotel Story Mod APK v {Unlimited Coins & Diamonds} Avakin Life Mod APK v {Unlimited Avacoins, Money}. -

Conformity No. ESD-GEC Brand/Model Type of Equipment Grantee Date Issued

Conformity No. ESD-GEC Brand/Model Type of Equipment Grantee Date issued 1602752 ZTE MF920W+ GSM/WCDMA/LTE Wireless Router with WLAN ZTE Phils Inc 02/18/2016 1603668 ALCATEL---7048X GSM/WCDMA/LTE Mobile Phone with WLAN and Bluetooth TCT MOBILE PHILIPPINES, INC. 01/05/2016 1603669 Dell---T02H001 Portable Computer*-Tablet with GSM/WCDMA/LTE, WLAN, Bluetooth Dell, Inc. 01/05/2016 1603670 MOD hp---VCVRA-1504-00 Printer with Facsimile and WLAN Module HP PPS Philippines Inc. 12/22/2016 1603671 hp---HSTNN-I72C Tablet PC* with GSM/WCDMA/LTE, WLAN, Bluetooth and NFC Inventec Corporation 01/05/2016 1603672 O+ NOTEPAD Tablet PC with GSM/WCDMA/LTE, WLAN and Bluetooth Cognetics Inc 01/05/2016 1603673 Four-Faith F3836 LTE/WCDMA/GSM Modem/Router with WLAN One Commerce International Corporation 01/05/2016 1603674 ASUS_X013DA ASUS Phone with GSM/WCDMA/LTE,WLAN and Bluetooth ASUSTek Computer Inc 01/05/2016 1603675 ZH&K---ODYSSEY GLACIER GSM/WCDMA Mobile Phone with WLAN and Bluetooth ZH&K Commercial Cororation 01/05/2016 1603676 ZH&K---ODYSSEY ACE GSM/WCDMA Mobile Phone with WLAN and Bluetooth ZH&K Commercial Cororation 01/05/2016 1603677 ZH&K---ODYSSEY GLAMOUR GSM/WCDMA Mobile Phone with WLAN and Bluetooth ZH&K Commercial Cororation 01/05/2016 1603678 ZH&K ODYSSEY TYTAN GSM/WCDMA Mobile Phone with WLAN and Bluetooth ZH & K Commercial Corporation 01/05/2016 1603679 Lenovo S1La40 GSM/WCDMA//LTE Mobile Phone with WLAN and Bluetooth Lenovo Mobile Communications Technology Ltd 01/05/2016 1603680 ZH&K GEM Tablet PC with GSM/WCDMA, WLAN and Bluetooth ZH & K Commercial -

Manufacturer Device Model Consumers Count Apple Iphone

Manufacturer Device Model Consumers Count Apple iPhone 24,666,239 Apple iPad 13,155,907 samsung SM-J500M 1,079,744 Apple iPod touch 1,070,538 samsung SM-G531H 1,043,553 samsung SM-G935F 1,026,327 samsung SM-T113 894,096 samsung SM-J700M 888,680 motorola MotoG3 860,116 samsung SM-J700F 847,315 samsung SM-G920F 834,655 samsung SM-G900F 827,050 samsung SM-G610F 786,659 HUAWEI ALE-L21 783,180 OPPO A37f 701,488 samsung SM-G955U 699,321 samsung SM-G930F 685,195 samsung SM-J510FN 673,415 samsung SM-G950U 654,635 samsung SM-G530H 651,695 samsung SM-J710F 647,723 motorola Moto G (4) 640,091 samsung SM-T110 627,013 samsung SM-J200G 611,728 OPPO A1601 588,226 samsung SM-G925F 571,858 samsung SM-G930V 557,813 samsung SM-A510F 533,209 ZTE Z981 532,290 samsung GT-I9300 516,580 samsung SM-J320FN 511,109 Xiaomi Redmi Note 4 507,119 samsung GT-I9505 504,325 samsung GT-I9060I 488,253 samsung SM-J120H 472,748 samsung SM-G900V 458,996 Xiaomi Redmi Note 3 435,822 samsung SM-A310F 435,163 samsung SM-T560 435,042 motorola XT1069 433,667 motorola Moto G Play 422,147 LGE LG-K430 406,009 samsung GT-I9500 392,674 Xiaomi Redmi 3S 388,092 samsung SM-J700H 384,922 samsung SM-G532G 384,884 samsung SM-N9005 382,982 samsung SM-G531F 382,728 motorola XT1033 380,899 Generic Android 7.0 374,405 motorola XT1068 373,075 samsung SM-J500FN 372,029 samsung SM-J320M 366,049 samsung SM-J105B 351,985 samsung SM-T230 348,374 samsung SM-T280 347,350 samsung SM-T113NU 341,313 samsung SM-T350 338,525 samsung SM-G935V 337,090 samsung SM-J500F 332,972 samsung SM-J320F 329,165 motorola -

120009 My/Phone A868 Duo GSM/WCDMA Mobile Phone with WLAN and Bluetooth Mysolid Technologues & Devices Corp

Conformity no. ESD-GEC Brand/Model Type of Equipment Grantee Date issued 120009 my/phone A868 Duo GSM/WCDMA Mobile Phone with WLAN and Bluetooth MySolid Technologues & Devices Corp. 7/24/2012 0900001 Sony Ericsson C510a (AAD-3880010-BV) Tri-Band GSM/UMTS/HSDPA Mobile Phone w/ Bluetooth & 3.2MP Camera Sony Ericsson Mobile Comms. Int'l AB 3/11/2009 0900002 Sony Ericsson W705 (AAD-3880006-BV) Quad-Band GSM/UMTS/HSPA Mobile Phone w/ 802.11b/g Sony Ericsson Mobile Comms. Int'l AB 3/6/2009 0900003 Sony Ericsson W705a (AAD-3880005-BV Quad-Band GSM/UMTS/HSDPA Mobile Phone w/ 802.11b/g WLAN, Bluetooth, MP3 Player & 3.2Mpix Digital Camera Sony Ericsson Mobile Comms. Int'l AB 3/11/2009 0900004 Sony Ericsson W395 (type-AAB-1880014-BV) Quad-Band GSM Mobile Phone w/ Bluetooth Sony Ericsson Mobile Comms. Int'l AB 3/20/2009 0900005 Torque DTV100 Dual-Band GSM/GPRS Mobile Phone w/ Bluetooth and 0.3 MP Camera Omniforce Enteerprises 3/13/2009 0900006 Nokia 5730s-1 (RM-465)/ Nokia 5730 Quad-Band GSM/WCDMA FDD Mobile Phone w/ Bluetooth and WLAN Nokia Phils.,Inc. 3/18/2009 0900007 LG KF900 Quad-Band GSM/WCDMA 900/2100 Mobile w/ Bluetooth & WLAN Connectivity LG Electronics Phils.,Inc. 3/20/2009 0900008 LG GM200 Quad-Band GsM Mobile Phone with Bluetooth LG Electronics Phils.,Inc. 3/20/2009 0900011 Blackberry Storm 9500 (RCC51UW) Quad-Band GSM/GPRS/WCDMA/HSPA Mobile Phone Globe Telecom, Inc. 3/11/2013 0900011 Blackberry 9500 (RCC51UW) Quad-Band GSM /WCDMA FDD 1 HSPA Mobile Phone CP Communications, Inc. -

Elenco Device Android.Xlsx

Manufacturer,Model Name,Model Code,RAM (TotalMem),Form Factor,System on Chip,Screen Sizes,Screen Densities,ABIs,Android SDK Versions,OpenGL ES Versions 10.or,10or_G2,G2,3742-5747MB,Phone,Qualcomm SDM636,1080x2246,480,arm64-v8a 10.or,E,E,1857-2846MB,Phone,Qualcomm MSM8937,1080x1920,480,arm64-v8a 10.or,G,G,2851-3582MB,Phone,Qualcomm MSM8953,1080x1920,480,arm64-v8a 10.or,D,10or_D,2868-2874MB,Phone,Qualcomm MSM8917,720x1280,320,arm64-v8a 7mobile,SWEGUE,SWEGUE,1836MB,Phone,Mediatek MT6737T,1080x1920,480,arm64-v8a 7mobile,Kamba 2,7mobile_Kamba_2,2884MB,Phone,Mediatek MT6739CH,720x1440,320,arm64-v8a A1,A1 Alpha 20+,P671F60,3726MB,Phone,Mediatek MT6771T,1080x2340,480,arm64-v8a Accent,FAST10,FAST10,1819MB,Tablet,Spreadtrum SC9863A,1280x800,213,arm64-v8a ACE France,BUZZ 2,BUZZ_2,850MB,Phone,Spreadtrum SC9863A,720x1560,320,armeabi-v7a ACE France,BUZZ 2,BUZZ_2_Plus,1819MB,Phone,Spreadtrum SC9863A,720x1560,320,arm64-v8a ACE France,URBAN 2,URBAN_2,2824MB,Phone,Spreadtrum SC9863A,720x1560,320,arm64-v8a ACE France,AS0518,AS0518,1822MB,Phone,Mediatek MT6762,720x1440,320,arm64-v8a ACE France,URBAN 1,URBAN_1,1839MB,Phone,Mediatek MT6739WA,720x1440,320,arm64-v8a ACE France,AS0618,AS0618,2826MB,Phone,Mediatek MT6752,720x1500,320,arm64-v8a Acer,Iconia Tab 10,acer_asgard,1970MB,Tablet,Mediatek MT8176A,800x1280,160,arm64-v8a Acer,Iconia Tab 10,acer_asgardfhd,1954MB,Tablet,Mediatek MT8167B,1920x1200,240,arm64-v8a Acer,Iconia Tab 10,acer_Titan,3959MB,Tablet,Mediatek MT8176A,1200x1920,240,arm64-v8a Acer,Liquid Z530S,acer_t05,2935MB,Phone,Mediatek MT6753,720x1280,320,arm64-v8a -

LIST of GRANT of EQUIPMENT CONFORMITY (GEC) Conformity No

LIST OF GRANT OF EQUIPMENT CONFORMITY (GEC) Conformity No. ESD-GEC Brand/Model Marketing Name Integrated RF Module BAND GSM/GPS WCDMA LTE/BWA LORA Type of Equipment APPLICANT Date issued 1201074 35911 Freedom Alert System with DECT Technology-Base Unit Mannasoft technology Corporation 06/06/2012 ESD-GEC-2006285 ACER ONE 8 T2 900/1800/2400-2483.5/5150-5350/5470-5850 x x x Tablet Computer ACER PHILIPPINES INCORPORATED 05/10/2020 ESD-GEC-2106820 HITACHI CP-QGWG-E2S-1 CPTrans-MGW Quectel EG25-G 850/900/1800/1900/2400-2483.5 x x x Industrial Wireless Router Hitachi Industrial Equipment Systems Co Ltd 07/08/2021 ESD-GEC-2006418 SoftBand Robotics A00000101A01 Whiz 915 - 918.0 MHz x x x Automatic Cleaning Machine SoftBank Robotics Corp 11/27/2020 1503646 0+---8.37y Android GSM/WCDMA Mobile Phone with Wi-Fi and Bluetooth Cognetics Inc. 12/17/2015 1402562 1046D GSM Mobile Phone w/ Bluetooth TCT Mobile Philippines, Inc. 06/09/2014 1402494 225 (RM-1011) GSM/WCDMA Mobile Phone w/ WLAN and Bluetooth Nokia (Philippines), Inc. 05/12/2014 1402540 4032E GSM/WCDMA Mobile Phone w/ WiFi and Bluetooth TCT MOBILE PHILIPPINES, INC. 05/28/2014 1402495 5050X GSM/WCDMA/LTE Mobile Phone w/ 802.11 b.g.n WLAN, Bluetooth & NFC and ANT + TCT MOBILE PHILIPPINES 05/13/2014 1402363 6043D GSM/WCDMA Mobile Phone w/ 802.11 a/b/g/n WLAN and Bluetooth TCT Mobile Philippines, Inc. 03/11/2014 1402479 7D-501L GSM/WCDMA/LTE MediaPad X1 w/ 802.11 b.g.n WLAN, Bluetooth Huawei Technologies Philippines, Inc. -

We Now Accept Credit Cards

We Now Accept Credit Cards 02/02/2015 INTEL MINI PC - NUC KIT SRP LCD / LED TV SRP INKJET PRINTER SRP HP INK SRP INTEL NUC BOXDN2820FYKH0 2.4GHz 4TH GEN 6,500 EZY 24" (24PD35) LED TV HD 1080P 6,700 EPSON L120 PRINTER 4,995 HP 920 Black 826 INTEL NUC BOXD34010WYKH i3-4010U 4TH GEN 13,200 EZY 32" (32PD35) LED TV HD 1080P 9,600 EPSON STYLUS PHOTO T60 9,495 HP 920 XL CMY 635 INTEL PROCESSORS 3RD GENERATION - EZY 42" 42PSA300 LED TV HD 1080P 18,100 EPSON L800 INKJET PRINTER 13,800 HP 932 BLACK 945 INTEL CELERON G1620 2.7GHZ LGA 1155 1,990 EZY 50" 50K20/D LED TV HD 1080P 25,890 EPSON STYLUS OFFICE T1100 A3 12,995 HP 932 XL BLACK 1,495 INTEL PENTIUM G2020 2.9GHZ LGA 1155 2,450 SONY 40 LCD TV KLV-40BX420 25,999 EPSON L1300 PRINTER A3 18,995 HP 933 XL CMY 790 INTEL PENTIUM G2030 3.0GHZ LGA 1155 2,475 PRESTIZ 19" (19D700B) LED TV 4,990 CANON PIXMA iP2770 PRINTER 2,405 HP 650 BLK/COLOR 335 INTEL CORE i3 3240 3.40Ghz LGA 1155 4,955 PRESTIZ 24" (24D90K) LED TV 6,975 HP INK ADVANTAGE 1015 2,685 HP 678 CMYK/BLK 2515 385 INTEL MAINBOARDS LGA 1155 - PRESTIZ 32" (32EA2) LED TV 9,500 HP INK ADVANTAGE 2020HC 2,635 HP #46 CZ637AA 2020HC 460 ASUS H61M-E LGA1155, DDR3,VGA,LAN,USB 2,260 PRESTIZ 42" (42D900K) LED TV 17,590 HP OFFICEJET K7110 A3 8,450 HP #60 CC640WA B 660 ASUS H61M-C 1155,DDR3 ,8CH,GLAN 2,435 PRESTIZ 46" (46D700D) LED TV 19,390 ALL in ONE - HP #60 CC643WA C 780 BIOSTAR H61MGV3 1155,DDR3,VGA,6CH,GLAN 1,750 PRESTIZ 50" (50D900K) LED TV 25,999 CANON MP237 ALL IN ONE PRINTER 3,563 HP #61 CH561WA B 2050/3050/3060 660 GIGABYTE H61M-DS2 DDR3/INTEL HD/5.1CH/GLAN -

Список Мобильных Устройств, Работающих На Частотах Диапазона LTE 850 (Band 5 И Band 18)

Список мобильных устройств, работающих на частотах диапазона LTE 850 (band 5 и band 18) Источник: https://www.frequencycheck.com/models?commit=Search&q%5Bdevice_brand_id_eq%5D=&q% 5Bfrequency_bands_id_eq%5D=5&q%5Bfull_name_cont%5D=&utf8=%E2%9C%93 Обратите внимание! Для гарантированной работы сети 4G Билайн необходимо убедиться, что ваше устройство поддерживает частоту диапазона LTE 850 (band 5 и band 18). Это можно найти в технических характеристиках или на упаковке устройства. Данные актуальны на 31 января 2018 года. Device brand Name Acer Acer Liquid Jade Primo S58 LTE PA Dual SIM Acer Acer Liquid M330 LTE Dual SIM TM01 Acer Acer Liquid M330 LTE NA Dual SIM TM01 Acer Acer Liquid Z330 LTE NA Dual SIM T01 Acer Acer Liquid Z6 Plus Dual SIM TD-LTE Acer Acer Liquid Z6E Duo LTE Acer Acer Liquid Z6E LTE Akua Mobile Akua Mobile RS3 Dual SIM LTE Alcatel Alcatel A3 10 TD-LTE IN 9026T Alcatel Alcatel A30 Fierce LTE 5049Z Alcatel Alcatel A30 Plus LTE 5049S Alcatel Alcatel A5 LED Dual LTE 5085N Alcatel Alcatel A50 LTE Alcatel Alcatel Flash 2017 Dual SIM TD-LTE EMEA (TCL FL03) Alcatel Alcatel Flash Plus 2 Dual SIM TD-LTE 32GB Alcatel Alcatel One Touch Ideal LTE 4060A Alcatel Alcatel One Touch Idol 2S OT-6050F Alcatel Alcatel One Touch Idol 3 4.7 LTE 6039S Alcatel Alcatel One Touch Idol 3 5.5 LTE 6045F (TCL i806) Alcatel Alcatel One Touch Idol 3 5.5 LTE AM-H200 (TCL i806) Alcatel Alcatel One Touch Idol 4 LTE 6055U Alcatel Alcatel One Touch Idol 4 LTE Dual SIM 6055H Alcatel Alcatel One Touch Idol 4S LTE 6070O Alcatel Alcatel One Touch Idol 5S 6060S -

List of Grant of Equipment Conformity (Gec)

LIST OF GRANT OF EQUIPMENT CONFORMITY (GEC) Conformity No. ESD-GEC Brand/Model Marketing Name Integrated RF Module BAND GSM/GPS WCDMA LTE/BWA LORA Type of Equipment Grantee Date issued 0900001 Sony Ericsson C510a (AAD-3880010-BV) Tri-Band GSM/UMTS/HSDPA Mobile Phone w/ Bluetooth & 3.2MP Camera Sony Ericsson Mobile Comms. Int'l AB 3/11/2009 0900002 Sony Ericsson W705 (AAD-3880006-BV) Quad-Band GSM/UMTS/HSPA Mobile Phone w/ 802.11b/g Sony Ericsson Mobile Comms. Int'l AB 3/6/2009 0900003 Sony Ericsson W705a (AAD-3880005-BV Quad-Band GSM/UMTS/HSDPA Mobile Phone w/ 802.11b/g WLAN, Bluetooth, MP3 Player & 3.2Mpix Digital Camera Sony Ericsson Mobile Comms. Int'l AB 3/11/2009 0900004 Sony Ericsson W395 (type-AAB-1880014-BV) Quad-Band GSM Mobile Phone w/ Bluetooth Sony Ericsson Mobile Comms. Int'l AB 3/20/2009 0900005 Torque DTV100 Dual-Band GSM/GPRS Mobile Phone w/ Bluetooth and 0.3 MP Camera Omniforce Enterprises 3/13/2009 0900006 Nokia 5730s-1 (RM-465)/ Nokia 5730 Quad-Band GSM/WCDMA FDD Mobile Phone w/ Bluetooth and WLAN Nokia Phils.,Inc. 3/18/2009 0900007 LG KF900 Quad-Band GSM/WCDMA 900/2100 Mobile w/ Bluetooth & WLAN Connectivity LG Electronics Phils.,Inc. 3/20/2009 0900008 LG GM200 Quad-Band GsM Mobile Phone with Bluetooth LG Electronics Phils.,Inc. 3/20/2009 0900011 Blackberry Storm 9500 (RCC51UW) Quad-Band GSM/GPRS/WCDMA/HSPA Mobile Phone Globe Telecom, Inc. 3/11/2013 0900011 Blackberry 9500 (RCC51UW) Quad-Band GSM /WCDMA FDD 1 HSPA Mobile Phone CP Communications, Inc. -

PCX MOBILE | Smartphone and Tablet Price List November 18, 2015

PCX MOBILE | Smartphone and Tablet Price List November 18, 2015 Monthly Instalments SMARTPHONES SRP 3 Months 6 Months 12 Months CEL-ACER LIQUID E3 SINGLE SIM 4,990.00 1,713.23 N/A N/A CEL-ACER LIQUID E3 DUAL SIM 5,490.00 1,884.90 969.90 N/A CEL-ACER LIQUID E600 6,990.00 2,399.90 1,234.90 N/A CEL-ACER LIQUID E700 5,990.00 2,056.57 1,058.23 559.07 CEL-ACER LIQUID JADE 8,990.00 3,086.57 1,588.23 839.07 CEL-ACER LIQUID JADE Z 7,990.00 2,743.23 1,411.57 745.73 CEL-ACER LIQUID M220 2,490.00 854.90 N/A N/A CEL-ACER LIQUID X1 9,990.00 3,429.90 1,764.90 932.40 CEL-ACER LIQUID Z5 5,990.00 2,056.57 N/A N/A CEL-ACER LIQUID Z200 1,990.00 N/A N/A N/A CEL-ACER LIQUID Z220 2,990.00 N/A N/A N/A CEL-ACER LIQUID Z330 3,990.00 1,369.90 N/A N/A CEL-ACER LIQUID Z410 4,490.00 1,541.57 793.23 N/A CEL-ACER LIQUID Z500 6,990.00 2,399.90 1,234.90 N/A CEL-ACER LIQUID Z520 4,990.00 1,713.23 881.57 N/A CEL-ACER LIQUID Z630 6,990.00 2,399.90 1,234.90 N/A CEL-APPLE IPHONE 5C 16GB 19,990.00 N/A N/A N/A CEL-APPLE IPHONE 5S 32GB 33,490.00 N/A N/A N/A CEL-APPLE IPHONE 5S 16GB 31,990.00 10,983.23 5,651.57 2,985.73 CEL-APPLE IPHONE 6 16GB 35,490.00 12,184.90 6,269.90 3,312.40 CEL-APPLE IPHONE 6 64GB 41,490.00 14,244.90 7,329.90 3,872.40 CEL-APPLE IPHONE 6 128GB 47,490.00 16,304.90 8,389.90 4,432.40 CEL-APPLE IPHONE 6 PLUS 16GB 41,990.00 14,416.57 7,418.23 3,919.07 CEL-APPLE IPHONE 6 PLUS 64GB 47,990.00 16,476.57 8,478.23 4,479.07 CEL-ARC BASIC II 799.00 N/A N/A N/A CEL-ARC LITE 599.00 N/A N/A N/A CEL-ARC MOBILE MEMO 3,999.00 N/A N/A N/A CEL-ARC NITRO 400D 3,199.00 N/A N/A