Nvidia Video Decoder (Nvdec) Interface

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Nvidia Video Codec Sdk - Encoder

NVIDIA VIDEO CODEC SDK - ENCODER Application Note vNVENC_DA-6209-001_v14 | July 2021 Table of Contents Chapter 1. NVIDIA Hardware Video Encoder.......................................................................1 1.1. Introduction............................................................................................................................... 1 1.2. NVENC Capabilities...................................................................................................................1 1.3. NVENC Licensing Policy...........................................................................................................3 1.4. NVENC Performance................................................................................................................ 3 1.5. Programming NVENC...............................................................................................................5 1.6. FFmpeg Support....................................................................................................................... 5 NVIDIA VIDEO CODEC SDK - ENCODER vNVENC_DA-6209-001_v14 | ii Chapter 1. NVIDIA Hardware Video Encoder 1.1. Introduction NVIDIA GPUs - beginning with the Kepler generation - contain a hardware-based encoder (referred to as NVENC in this document) which provides fully accelerated hardware-based video encoding and is independent of graphics/CUDA cores. With end-to-end encoding offloaded to NVENC, the graphics/CUDA cores and the CPU cores are free for other operations. For example, in a game recording scenario, -

The Qumu AWS Live Encoder High Performance Video Processing for Live Streaming

The Qumu AWS Live Encoder High Performance Video Processing for Live Streaming Whether you’re broadcasting a CEO event, new product introduction or crisis Overview communication, the Qumu AWS Live Encoder makes live, enterprise-wide video communication efficient, reliable and secure. HIGH PERFORMANCE Deliver content via Apple HLS, RTMP, Microsoft Smooth The Qumu Live Encoder (QLE) from AWS to the Video Control Center (VCC) and Streaming, or transport streams. is a video processing engine that provides automated ingest of archives for video-on- Alternatively, create mezzanine real-time video and audio encoding for live demand viewing. deliverables for wrapping with streaming via the Qumu enterprise video separate packages to reduce The Linux-based system can also be controlled platform. Powered by AWS Elemental Live, network bandwidth. the QLE performs simultaneous processing of through an intuitive web interface to set up multiple video outputs, delivering the high- and monitor the QLE, eliminating the need to VERSATILE DEPLOYMENT quality, high-efficiency performance required use external remote control tools. The QLE Control the Linux-based system for formatting live video for any device. The allows users to configure broadcasts that can through an intuitive web interface QLE is designed to integrate seamlessly into publish to both internal (i.e., Qumu VideoNet or REST / XML APIs for quick and the Qumu delivery workflow, with the flexibility Edge) and external (i.e., Amazon CloudFront, simple integration into existing workflows. Unified control and to evolve as technology requires. The QLE Akamai) distribution points simultaneously. management reduces setup time, provides flexible input options from HD-SDI In addition, for customers requiring live simplifies maintenance tasks and and HDMI inputs to both local file and HLS or multitrack audio for live audio translations allows for centralized upgrades of MPEG Transport Stream network inputs. -

Referência Debian I

Referência Debian i Referência Debian Osamu Aoki Referência Debian ii Copyright © 2013-2021 Osamu Aoki Esta Referência Debian (versão 2.85) (2021-09-17 09:11:56 UTC) pretende fornecer uma visão geral do sistema Debian como um guia do utilizador pós-instalação. Cobre muitos aspetos da administração do sistema através de exemplos shell-command para não programadores. Referência Debian iii COLLABORATORS TITLE : Referência Debian ACTION NAME DATE SIGNATURE WRITTEN BY Osamu Aoki 17 de setembro de 2021 REVISION HISTORY NUMBER DATE DESCRIPTION NAME Referência Debian iv Conteúdo 1 Manuais de GNU/Linux 1 1.1 Básico da consola ................................................... 1 1.1.1 A linha de comandos da shell ........................................ 1 1.1.2 The shell prompt under GUI ......................................... 2 1.1.3 A conta root .................................................. 2 1.1.4 A linha de comandos shell do root ...................................... 3 1.1.5 GUI de ferramentas de administração do sistema .............................. 3 1.1.6 Consolas virtuais ............................................... 3 1.1.7 Como abandonar a linha de comandos .................................... 3 1.1.8 Como desligar o sistema ........................................... 4 1.1.9 Recuperar uma consola sã .......................................... 4 1.1.10 Sugestões de pacotes adicionais para o novato ................................ 4 1.1.11 Uma conta de utilizador extra ........................................ 5 1.1.12 Configuração -

GPU-Based Video Processing in the Context of TV Broadcasting

GPU-based Video Processing in the Context of TV Broadcasting DIPLOMARBEIT zur Erlangung des akademischen Grades Diplom-Ingenieur im Rahmen des Studiums Visual Computing eingereicht von Heinrich Fink Matrikelnummer 0425503 an der Fakultät für Informatik der Technischen Universität Wien Betreuung: Associate Prof. Dipl.-Ing. Dipl.-Ing. Dr.techn. Michael Wimmer Mitwirkung: Dipl.-Ing. Dr. Anton Fuhrmann Wien, 14.08.2013 (Unterschrift Verfasser) (Unterschrift Betreuung) Technische Universität Wien A-1040 Wien Karlsplatz 13 Tel. +43-1-58801-0 www.tuwien.ac.at GPU-based Video Processing in the Context of TV Broadcasting MASTER’S THESIS submitted in partial fulfillment of the requirements for the degree of Diplom-Ingenieur in Visual Computing by Heinrich Fink Registration Number 0425503 to the Faculty of Informatics at the Vienna University of Technology Advisor: Associate Prof. Dipl.-Ing. Dipl.-Ing. Dr.techn. Michael Wimmer Assistance: Dipl.-Ing. Dr. Anton Fuhrmann Vienna, 14.08.2013 (Signature of Author) (Signature of Advisor) Technische Universität Wien A-1040 Wien Karlsplatz 13 Tel. +43-1-58801-0 www.tuwien.ac.at Erklärung zur Verfassung der Arbeit Heinrich Fink Schwarzingergasse 1/7, 1020 Wien Hiermit erkläre ich, dass ich diese Arbeit selbständig verfasst habe, dass ich die verwen- deten Quellen und Hilfsmittel vollständig angegeben habe und dass ich die Stellen der Arbeit - einschließlich Tabellen, Karten und Abbildungen -, die anderen Werken oder dem Internet im Wortlaut oder dem Sinn nach entnommen sind, auf jeden Fall unter Angabe der Quelle als Entlehnung kenntlich gemacht habe. (Ort, Datum) (Unterschrift Verfasser) i Acknowledgements I thank my supervisor Michael Wimmer. I was working with him for many years at the Institute of Computer Graphics, TU Vienna. -

Vysoke´Ucˇenítechnicke´V Brneˇ

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Digital library of Brno University of Technology VYSOKE´ UCˇ ENI´ TECHNICKE´ V BRNEˇ BRNO UNIVERSITY OF TECHNOLOGY FAKULTA INFORMACˇ NI´CH TECHNOLOGII´ U´ STAV POCˇ ´ITACˇ OVY´ CH SYSTE´ MU˚ FACULTY OF INFORMATION TECHNOLOGY DEPARTMENT OF COMPUTER SYSTEMS AKCELERACE MIKROSKOPICKE´ SIMULACE DOPRAVY ZA POUZˇ ITI´ OPENCL DIPLOMOVA´ PRA´ CE MASTER’S THESIS AUTOR PRA´ CE ANDREJ URMINSKY´ AUTHOR BRNO 2011 VYSOKE´ UCˇ ENI´ TECHNICKE´ V BRNEˇ BRNO UNIVERSITY OF TECHNOLOGY FAKULTA INFORMACˇ NI´CH TECHNOLOGII´ U´ STAV POCˇ ´ITACˇ OVY´ CH SYSTE´ MU˚ FACULTY OF INFORMATION TECHNOLOGY DEPARTMENT OF COMPUTER SYSTEMS AKCELERACE MIKROSKOPICKE´ SIMULACE DOPRAVY ZA POUZˇ ITI´ OPENCL ACCELERATION OF MICROSCOPIC URBAN TRAFFIC SIMULATION USING OPENCL DIPLOMOVA´ PRA´ CE MASTER’S THESIS AUTOR PRA´ CE ANDREJ URMINSKY´ AUTHOR VEDOUCI´ PRA´ CE Ing. PAVOL KORCˇ EK SUPERVISOR BRNO 2011 Abstrakt S narastajúcim poètom vozidiel na na¹ich cestách sa èoraz väčšmi stretávame so súèasnými problémami dopravy, medzi ktoré by sme mohli zaradi» poèetnej¹ie havárie, zápchy a zvýše- nie vypú¹»aných emisií CO2, ktoré zneèis»ujú životné prostredie. Na to, aby sme boli schopní efektívne využíva» cestnú infra¹truktúru, nám môžu poslúžiť napríklad simulátory dopravy. Pomocou takýchto simulátorov môžme vyhodnoti» vývoj premávky za rôznych poèiatoè- ných podmienok a tým vedie», ako sa správa» a reagova» v rôznych situáciách dopravy. Táto práca sa zaoberá témou akcelerácia mikroskopickej simulácie dopravy za použitia OpenCL. Akcelerácia simulácie je dôležitá pri potrebe analyzova» veľkú sie» infra¹truktúry, kde nám bežné spôsoby implementácie simulátorov nestaèia. Pre tento úèel je možné použiť naprí- klad techniku GPGPU súèasných grafických kariet, ktoré sú schopné paralelne vykonáva» v¹eobecné výpoèty. -

New NVIDIA Geforce GT 430 Is the Perfect GPU for Digital Media Pcs

New NVIDIA GeForce GT 430 Is the Perfect GPU for Digital Media PCs Newest Addition to "Fermi" Family Provides Immense Performance and Unique 3D Features Not Offered by Integrated Graphics Solutions SANTA CLARA, CA -- NVIDIA today announced the latest addition to its Fermi class of graphics processing units (GPUs), the NVIDIA® GeForce® GT 430, which was specifically designed to provide the horsepower needed to power today's digital media PCs and provide the high definition video and audio experiences that desktop customers demand. The GeForce GT 430 utilizes the Company's latest 40nm GPU, codenamed GF108, which is already designed into a variety of desktop and notebook platforms from the world's leading OEMs, including Acer, ASUS, Dell, HP, Lenovo, MSI, Samsung, Sony and others. For customers looking for a viable desktop upgrade from lowly integrated solutions, the GeForce GT 430 is a major step up, delivering unparalleled experiences in photo and video editing, Blu-ray 3D, as well as the next generation of GPU-accelerated Web browsers and Web content. And, when combined with NVIDIA 3D Vision™ technology and a compatible display, the GT430 is also the only GPU in its class that allows consumers to experience their digital content in full stereoscopic 3D. Offering up to 1.5x the gaming performance of previous generation products, the GeForce GT 430 also adds support for DirectX 11 (DX11), the industry standard for game development. Most importantly, the GT 430 delivers unmatched out-of-the-box game compatibility(i) and playable frame rates in all of today's top 30 games(ii), when compared to integrated graphics solutions. -

Why You Need the EVGA E-Geforce 6800 GS

Why You Need the EVGA e-GeForce 6800 GS GeForce 6800 GS Profile NVIDIA’s announcement of a new GPU product hailing from the now legendary GeForce 6 series adds new fire to the lineup in the form of the GeForce 6800 GS. This powerful cast now reads as GeForce 6800 Ultra, GeForce 6800 GT, GeForce 6800, GeForce 6800 LE AND GeForce 6800 GS. As we know, the GeForce 6 series takes a step back to the more powerful and newer GeForce 7 series released recently. So why did NVIDIA release a new GPU for an older product line? Is it simply a holiday season marketing push, or does the answer have something to do with rival ATI? ATI expanded the RADEON X800 series product line with two new VPU products – the RADEON X800 GT and RADEON X800 GTO - just prior to their announcement of the new RADEON X1000 series. The former is an 8 pixel pipe product, while the latter is built with 12 pipes. Both are targeted for the mid-range market. These new “GT” VPUs place a lot of pressure to NVIDIA’s GeForce 6600 GT (8 pixel pipes) and GeForce 6800 (12 pixel pipes) and forces the company to respond with a new product to meet the challenge head-on. Many of NVIDIA fans might prefer new GeForce 7 products for the mid-range market, but NVIDIA still needs time to prepare them. In fact, NVIDIA’s older but more mature GeForce 6600 or GeForce 6800 series products can be improved to provide enough power to compete with the RADEON X800 GT and GTO. -

NVIDIA VIDEO TECHNOLOGIES Abhijit Patait, 3/20/2019 NVIDIA Video Technologies Overview Turing Video Enhancements AGENDA Video Codec SDK Updates Benchmarks Roadmap

NVIDIA VIDEO TECHNOLOGIES Abhijit Patait, 3/20/2019 NVIDIA Video Technologies Overview Turing Video Enhancements AGENDA Video Codec SDK Updates Benchmarks Roadmap 2 NVIDIA VIDEO TECHNOLOGIES 3 NVIDIA GPU VIDEO CAPABILITIES Decode HW* Encode HW* Formats: CPU • MPEG-2 Formats: • VC1 • H.264 • VP8 • H.265 • VP9 • Lossless • H.264 • H.265 Bit depth: • Lossless • 8 bit NVDEC Buffer NVENC • 10 bit Bit depth: • 8/10/12 bit Color** • YUV 4:4:4 Color** • YUV 4:2:0 • YUV 4:2:0 • YUV 4:4:4 CUDA Cores Resolution • Up to 8K*** Resolution • Up to 8K*** * See support diagram for previous NVIDIA HW generations ** 4:4:4 is supported only on HEVC for Turing; 4:2:2 is not natively supported on HW 4 *** Support is codec dependent Gamestream VIDEO CODEC SDK A comprehensive set of APIs for GPU- Video transcoding accelerated video encode and decode Remote desktop streaming NVENCODE API for video encode acceleration Intelligent video analytics NVDECODE API for video & JPEG decode acceleration (formerly called NVCUVID API) Independent of CUDA/3D cores on GPU for Video archiving pre-/post-processing Video editing 5 NVIDIA VIDEO TECHNOLOGIES Easy access to GPU DeepStream cuDNN, TensorRT, DALI video acceleration SDK cuBLAS, cuSPARSE VIDEO CODEC, OPTICAL FLOW SDK CUDA TOOLKIT Video Encode and Decode for Windows and Linux SOFTWARE APIs, libraries, tools, samples CUDA, DirectX, OpenGL interoperability NVIDIA DRIVER NVENC NVDEC CUDA Video encode Video decode High-performance computing on GPU HARDWARE 6 VIDEO CODEC SDK UPDATE 7 VIDEO CODEC SDK UPDATE SDK 7.x SDK 8.1 SDK 9.0 Pascal B-as-ref Turing 10-bit encode QP/emphasis map Multi-NVDEC FFmpeg 4K60 HEVC encode HEVC 4:4:4 decode ME-only for VR Reusable classes & Encode quality++ new sample apps HEVC B frames Quality++ SDK 8.0 10-bit transcode SDK 8.2 10/12-bit decode Decode + inference OpenGL optimizations Dec. -

Video Projector VP-15S1

Video Projector VP-15S1 VP-15S1!True HD 1080p DLP projector With the introduction of the VP-15S1 a wider audience will have access to top quality video projection. As a full HD 1920 x 1080 DLP projector, it fulfills all the demands of the new generation Blu-ray DiscTM , HD-DVD and HD Satellite TV. It features the the finest video technology from Gennum and the perfect parameters from Marantz. Even non-HD sources such as DVD or TV will look like high definition heaven. In addition, the high light output and the flexibility for installation make it an universal projector with an outstanding performce. Features Benefits TrueHD DLP video projector with 1920 x 1080 resolution Displays SD and HD sources with a significantly higher Excellent 1080p performance with over 2 million pixels light output and better contrast ratio at a native resolution Gennum GF9351 10-bit professional video processing of 1920 x 1080. Native contrast ratio of 10,000:1 Professional video processing technologies, delivering Custom made reference setting optics with extended zoom stunning video performance for all HD and SD video function sources Wide vertical lens shift (up to 165% - down to 85%) Supports high resolution media such as Blu-ray DiscTM Selectable dual iris system, 2x cat eye and HD-DVD Newly designed high speed, six segment color wheel Reliable, highly accurate lens for the best edge to edge 2x HDMI1.3a input linearity and image quality. Extensive picture adjustment possibilities Increased installation flexibility without reducing the Noise reducing die-cast -

Nvidia Video Codec Sdk - Decoder

NVIDIA VIDEO CODEC SDK - DECODER Application Note vNVDEC_DA-08097-001_v08 | July 2021 Table of Contents Chapter 1. NVIDIA Hardware Video Decoder.......................................................................1 1.1. Introduction............................................................................................................................... 1 1.2. NVDEC Capabilities...................................................................................................................1 1.3. What’s new................................................................................................................................ 2 1.4. NVDEC Performance................................................................................................................ 2 1.5. Programming NVDEC............................................................................................................... 4 1.6. FFmpeg Support....................................................................................................................... 4 NVIDIA VIDEO CODEC SDK - DECODER vNVDEC_DA-08097-001_v08 | ii Chapter 1. NVIDIA Hardware Video Decoder 1.1. Introduction NVIDIA GPUs contain a hardware-based decoder (referred to as NVDEC in this document) which provides fully accelerated hardware-based video decoding for several popular codecs. With complete decoding offloaded to NVDEC, the graphics engine and CPU are free for other operations. NVDEC supports much faster than real-time decoding which makes it suitable for transcoding scenarios -

NVIDIA Redefines the Notebook PC

NVIDIA Redefines The Notebook PC All information is under embargo until May 9, 2007 NVIDIA – Redefines The Notebook PC Windows Vista New Mainstream 3D applications Blu-ray & HD DVD Movies Hyper-realistic DX10 Games NVIDIA Confidential – Do not disclose until May 9, 2007. Slide# 2 Tremendous Notebook PC Growth 200,000,000 Source: Gartner 180,000,000 160,000,000 140,000,000 120,000,000 100,000,000 80,000,000 60,000,000 40,000,000 20,000,000 0 2004 2005 2006 2007 2008 2009 2010 Desktop PC Notebook PC Notebook PC projected to exceed Desktop PC shipments ! NVIDIA Confidential – Do not disclose until May 9, 2007. Slide# 3 NVIDIA Notebook Products Launching Today Enthusiast NVIDIA SLI GeForce 8600M GT Performance GeForce 8600M GS GeForce 8400M GT Mainstream GeForce 8400M GS GeForce 8400M G GeForce 7190M + nForce 650M Value GeForce 7150M + nForce 630M GeForce 7000M + nForce 610M NVIDIA Confidential – Do not disclose until May 9, 2007. Slide# 4 NVIDIA GeForce 8M Series Notebook GPUs Redefining the Notebook PC GeForce 8M Series – Key Features Industry Leading DX10 unified architecture Delivers up to 2x the performance of previous generation GPUS Revolutionary new PureVideo HD™ video processing engine Ultimate playback of HD DVD, Blu-ray, and HD movie downloads NVIDIA PowerMizer technology Intelligently balances user’s need for longer battery life and performance Delivered on MXM With all the benefits of “de-facto standard” modular graphics subsystem NVIDIA Confidential – Do not disclose until May 9, 2007. Slide# 6 GeForce 8M Series – Key Specifications -

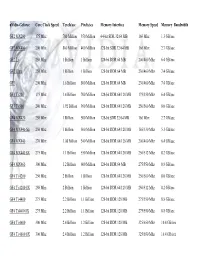

Nvidia-Geforce GF2 MX200 GF2 MX400 GF2 Ti GF2 Ultra

nVidia-Geforce Core Clock Speed Texels/sec Pixels/sec Memory Interface Memory Speed Memory Bandwidth GF2 MX200 175 Mhz 700 Million 350 Million 64-bit SDR 32/64 MB 166 Mhz 1.3 GB/sec GF2 MX400 200 Mhz 800 Million 400 Million 128-bit SDR 32/64 MB 166 Mhz 2.7 GB/sec GF2 Ti 250 Mhz 1 Billion 1 Billion 128-bit DDR 64 MB 200/400 Mhz 6.4 GB/sec GF2 Ultra 250 Mhz 1 Billion 1 Billion 128-bit DDR 64 MB 230/460 Mhz 7.4 GB/sec GF3 200 Mhz 1.6 Billion 800 Million 128-bit DDR 64 MB 230/460 Mhz 7.4 GB/sec GF3 Ti 200 175 Mhz 1.4 Billion 700 Million 128-bit DDR 64/128 MB 175/350 Mhz 6.4 GB/sec GF3 Ti 500 240 Mhz 1.92 Billion 960 Million 128-bit DDR 64/128 MB 250/500 Mhz 8.0 GB/sec GF4 MX420 250 Mhz 1 Billion 500 Million 128-bit SDR 32/64 MB 166 Mhz 2.7 GB/sec GF4 MX440-SE 250 Mhz 1 Billion 500 Million 128-bit DDR 64/128 MB 165/330 Mhz 5.3 GB/sec GF4 MX440 270 Mhz 1.08 Billion 540 Million 128-bit DDR 64/128 MB 200/400 Mhz 6.4 GB/sec GF4 MX440 8X 275 Mhz 1.1 Billion 550 Million 128-bit DDR 64/128 MB 256/512 Mhz 8.2 GB/sec GF4 MX460 300 Mhz 1.2 Billion 600 Million 128-bit DDR 64 MB 275/550 Mhz 8.8 GB/sec GF4 Ti 4200 250 Mhz 2 Billion 1 Billion 128-bit DDR 64/128 MB 250/500 Mhz 8.0 GB/sec GF4 Ti 4200 8X 250 Mhz 2 Billion 1 Billion 128-bit DDR 64/128 MB 256/512 Mhz 8.2 GB/sec GF4 Ti 4400 275 Mhz 2.2 Billion 1.1 Billion 128-bit DDR 128 MB 275/550 Mhz 8.8 GB/sec GF4 Ti4400 8X 275 Mhz 2.2 Billion 1.1 Billion 128-bit DDR 128 MB 275/550 Mhz 8.8 GB/sec GF4 Ti 4600 300 Mhz 2.4 Billion 1.2 Billion 128-bit DDR 128 MB 325/650 Mhz 10.4 GB/sec GF4 Ti 4600 8X 300 Mhz 2.4