A Framework for Multimedia Playback and Analysis of MPEG-2 Videos with FFmpeg
Anand Saggi, Iowa State University

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

PD7040/98 Philips Portable DVD Player

Philips Portable DVD Player, 18cm/7" LCD, 5-hr playtime, PD7040. Longer movie enjoyment on the go with USB for digital media playback. Enjoy your movies anytime, anyplace! The PD7040 portable DVD player features a 7"/18cm LCD swivel screen for a great viewing experience. You can indulge in up to 5 hours of DVD/DivX®/MPEG movies, MP3-CD/CD music and JPEG photos on the go.
Play your movies, music and photos on the go • DVD, DVD+/-R, DVD+/-RW, (S)VCD, CD compatible • DivX Certified for standard DivX video playback • MP3-CD, CD and CD-RW playback • View JPEG images from picture disc
Enrich your AV entertainment experience • 7" swivel color LCD panel for improved viewing flexibility • Enjoy movies in 16:9 widescreen format • Built-in stereo speakers
Extra touches for your convenience • Up to 5-hour playback with a built-in battery* • USB Direct for music and photo playback • Car mount pouch included for easy in-car use • Full Resume on Power Loss • AC adaptor, car adaptor and AV cable included
Highlights
MP3-CD, CD and CD-RW playback: MP3 is a revolutionary compression technology by which large digital music files can be made up to 10 times smaller without radically degrading their audio quality.
USB Direct: Simply plug in your USB device on the system and share your stored digital music and photos with your family and friends.
[...] where you have stopped the movie last time just by reloading the disc. Making your life a lot easier!

A History of Video Game Consoles

A History of Video Game Consoles, by Terry Amick, Gerald Long, James Schell and Gregory Shehan
Introduction
Today video games are a multibillion dollar industry. They are in practically all American households. They are a major driving force in electronic innovation and development. You would hardly guess this, though, from their modest beginnings. The first video games were played on mainframe computers in the 1950s through the 1960s (Winter, n.d.). Arcade games gave the general public its first glimpse of video games. Magnavox would produce the first home video game console featuring the popular arcade game Pong for the 1972 Christmas Season, released as Tele-Games Pong (Ellis, n.d.).
The First Generation: Magnavox Odyssey
Rushed into production, the original game did not even have a microprocessor. Games were selected by using toggle switches. At first sales were poor because people mistakenly believed you needed a Magnavox TV to play the game (GameSpy, n.d., para. 11). By 1975 annual sales had reached 300,000 units (Gamester81, 2012). Other manufacturers copied Pong and began producing their own game consoles, which promptly got them sued for copyright infringement (Barton & Loguidice, n.d.).
The Second Generation: Atari 2600
Atari released the 2600 in 1977. Although not the first, the Atari 2600 popularized the use of a microprocessor and game cartridges in video game consoles. The original device had an 8-bit 1.19 MHz 6507 microprocessor (“The Atari”, n.d.), two joysticks, a paddle controller, and two game cartridges. Combat and Pac-Man were included with the console. In 2007 the Atari 2600 was inducted into the National Toy Hall of Fame (“National Toy”, n.d.).

An Introduction to Analysis and Optimization with AMD CodeAnalyst™ Performance Analyzer

An introduction to analysis and optimization with AMD CodeAnalyst™ Performance Analyzer. Paul J. Drongowski, AMD CodeAnalyst Team, Advanced Micro Devices, Inc., Boston Design Center, 8 September 2008.
Introduction
This technical note demonstrates how to use the AMD CodeAnalyst™ Performance Analyzer to analyze and improve the performance of a compute-bound program. The program that we chose for this demonstration is an old classic: matrix multiplication. We'll start with a "textbook" implementation of matrix multiply that has well-known memory access issues. We will measure and analyze its performance using AMD CodeAnalyst. Then, we will improve the performance of the program by changing its memory access pattern.
1. AMD CodeAnalyst
AMD CodeAnalyst is a suite of performance analysis tools for AMD processors. Versions of AMD CodeAnalyst are available for both Microsoft® Windows® and Linux®. AMD CodeAnalyst may be downloaded (free of charge) from AMD Developer Central. (Go to http://developer.amd.com and click on CPU Tools.) Although we will use AMD CodeAnalyst for Windows in this tech note, engineers and developers can use the same techniques to analyze programs on Linux. AMD CodeAnalyst performs system-wide profiling and supports the analysis of both user applications and kernel-mode software. It provides five main types of data collection and analysis: • Time-based profiling (TBP), • Event-based profiling (EBP), • Instruction-based sampling (IBS), • Pipeline simulation (Windows-only feature), and • Thread profiling (Windows-only feature). We will look at the first three kinds of analysis in this note. Performance analysis usually begins with time-based profiling to identify the program hot spots that are candidates for optimization.
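The excerpt above does not show the note's code, so the following is only a sketch of what "changing its memory access pattern" could look like, assuming the fix is a loop interchange (that choice, and the function names `matmul_ijk` and `matmul_ikj`, are assumptions for illustration). Python lists do not reproduce the cache behaviour of C arrays, so this shows the access order only, not the speed-up.

```python
def matmul_ijk(a, b):
    """Textbook order: the inner loop walks down a column of b (strided access)."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            total = 0.0
            for k in range(m):
                total += a[i][k] * b[k][j]  # b is read column-wise
            c[i][j] = total
    return c


def matmul_ikj(a, b):
    """Interchanged order: the inner loop walks along one row of b and one row of c."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        row_c = c[i]
        for k in range(m):
            a_ik = a[i][k]
            row_b = b[k]
            for j in range(p):
                row_c[j] += a_ik * row_b[j]  # b and c are read row-wise
    return c
```

Both functions compute the same product; only the order in which elements of `b` are visited differs, which is the property a profiler such as the one described would be used to evaluate in a compiled language.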

Software Optimization Guide for AMD Family 15h Processors (PDF)

Software Optimization Guide for AMD Family 15h Processors. Publication No. 47414, Revision 3.06, Date: January 2012. Advanced Micro Devices. © 2012 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. The information contained herein may be of a preliminary or advance nature and is subject to change without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice. Trademarks: AMD, the AMD Arrow logo, and combinations thereof, AMD Athlon, AMD Opteron, 3DNow!, AMD Virtualization and AMD-V are trademarks of Advanced Micro Devices, Inc.

Forensic Analysis of the Nintendo 3DS NAND

Edith Cowan University Research Online, ECU Publications Post 2013, 2019. Forensic Analysis of the Nintendo 3DS NAND. Gus Pessolano; Huw O. L. Read; Iain Sutherland, Edith Cowan University; Konstantinos Xynos. Follow this and additional works at: https://ro.ecu.edu.au/ecuworkspost2013 Part of the Physical Sciences and Mathematics Commons. DOI: 10.1016/j.diin.2019.04.015
Pessolano, G., Read, H. O., Sutherland, I., & Xynos, K. (2019). Forensic analysis of the Nintendo 3DS NAND. Digital Investigation, 29, S61-S70. This Journal Article is posted at Research Online. https://ro.ecu.edu.au/ecuworkspost2013/6459
Digital Investigation 29 (2019) S61-S70. Contents lists available at ScienceDirect. Digital Investigation journal homepage: www.elsevier.com/locate/diin. DFRWS 2019 USA - Proceedings of the Nineteenth Annual DFRWS USA.
Forensic Analysis of the Nintendo 3DS NAND. Gus Pessolano (a), Huw O.L. Read (a, b, *), Iain Sutherland (b, c), Konstantinos Xynos (b, d). (a) Norwich University, Northfield, VT, USA; (b) Noroff University College, 4608 Kristiansand S., Vest Agder, Norway; (c) Security Research Institute, Edith Cowan University, Perth, Australia; (d) Mycenx Consultancy Services, Germany.
Keywords: Nintendo 3DS; Games console
Abstract: Games consoles present a particular challenge to the forensics investigator due to the nature of the hardware and the inaccessibility of the file system. Many protection measures are put in place to make it deliberately difficult to access raw data in order to protect intellectual property, enhance digital rights management of software and, ultimately, to protect against piracy. History has shown that many such protections on game consoles are circumvented with exploits leading to jailbreaking/rooting and allowing unauthorized software to be launched on the games system.

Making Speech Recognition Work on the Web Christopher J. Varenhorst

Making Speech Recognition Work on the Web, by Christopher J. Varenhorst. Submitted to the Department of Electrical Engineering and Computer Science on May 20, 2011, in partial fulfillment of the requirements for the degree of Masters of Engineering in Computer Science and Engineering at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, May 2011. © Massachusetts Institute of Technology 2011. All rights reserved.
Author: Department of Electrical Engineering and Computer Science, May 20, 2011. Certified by: James R. Glass, Principal Research Scientist, Thesis Supervisor. Certified by: Scott Cyphers, Research Scientist, Thesis Supervisor. Accepted by: Christopher J. Terman, Chairman, Department Committee on Graduate Students.
Abstract
We present an improved Audio Controller for the Web-Accessible Multimodal Interface toolkit, a system that provides a simple way for developers to add speech recognition to web pages. Our improved system offers increased usability and performance for users and greater flexibility for developers. Tests performed showed a 36% increase in recognition response time in the best possible networking conditions. Preliminary tests show a markedly improved user experience. The new Wowza platform also provides a means of upgrading other Audio Controllers easily.
Contents: 1 Introduction and Background; 1.1 WAMI - Web Accessible Multimodal Toolkit; 1.1.1 Existing Java applet; 1.2 SALT

Digital TV “Laboratory” Using Open Source Software

Digital TV “laboratory” using open source software
Objectives: Survey open source software, in particular what is available on Sourceforge.net, related to implementing the audiovisual signal processing operations that typically exist in digital TV production systems. Applications should be identified for: • video, audio and image acquisition • encoding in different formats (MPEG-2, MPEG-4, JPEG, etc.) • conversion between formats • pre- and post-processing (such as filtering) • editing • annotation. Install the programs and test their functionality.
Linux: Acquisition, Filters, Encoding :: VLC :: Xine :: Ffmpeg :: Kino (DV) :: VLC :: Transcode :: Tvtime Television Viewer (TV) :: Video4Linux Grab; Editing :: Mpeg4IP :: Kino (DV); Conversion :: Jashaka :: Kino :: Cinelerra :: VLC; Playback :: Freej :: VLC :: FFMpeg :: Effectv :: MJPEG Tools :: PlayerYUV :: Lives :: Videometer :: MPlayer; Annotation :: Xmovie :: Agtoolkit :: Video Squirrel
VLC (VideoLan Client): VLC - the cross-platform media player and streaming server. VLC media player is a highly portable multimedia player for various audio and video formats (MPEG-1, MPEG-2, MPEG-4, DivX, mp3, ogg, ...) as well as DVDs, VCDs, and various streaming protocols. It can also be used as a server to stream in unicast or multicast in IPv4 or IPv6 on a high-bandwidth network. http://www.videolan.org/
Kino (DV): Kino is a non-linear DV editor for GNU/Linux. It features excellent integration with IEEE-1394 for capture, VTR control, and recording back to the camera. It captures video to disk in Raw DV and AVI format, in both type-1 DV and type-2 DV (separate audio stream) encodings. http://www.kinodv.org/
Tvtime Television Viewer (TV): Tvtime is a high quality television application for use with video capture cards on Linux systems.

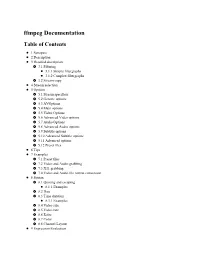

ffmpeg Documentation Table of Contents

ffmpeg Documentation Table of Contents 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding -

OM-Cube Project

OM-Cube project. V. Hiribarren, N. Marchand, N. Talfer. [email protected] - [email protected] - [email protected]
Abstract. The OM-Cube project is composed of several components like a minimal operating system, a multimedia player, an LCD display and an infra-red controller. They should be chosen to fit the hardware of an embedded system. Several other similar projects can provide information on the software that can be chosen. This paper aims to examine the different available tools to build the OM-Multimedia machine. The main purpose is to explore different ways to build an embedded system that fits the hardware and fulfills the project.
1 A Minimal Operating System
The operating system is the core of the embedded system, and therefore should be chosen with care. Because of its popularity, a Linux based system seems the best choice, but other open systems exist and should be considered. After having elected a system, all unnecessary components may be removed to get a minimal operating system.
1.1 A Linux Operating System
Using a Linux kernel has several advantages. As it’s a popular kernel, many drivers and documentation are available. Linux is an open source kernel; therefore it enables anyone to modify its sources and to recompile it. Using Linux in an embedded system requires adapting the kernel to the hardware and to the system needs. A simple method for building a Linux embedded system is to create a partition on a development host and to mount it on a temporary mount point. This partition is filled as one goes along and then, the final distribution is put on the target host [Fich02] [LFS].

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -
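The distinction between lossless and lossy coding, and the warning about repeated lossy encoding, can be illustrated with a toy sketch. The assumptions are loud ones: `zlib` stands in for a lossless codec, and a uniform quantizer (the hypothetical `lossy_roundtrip`) stands in for a lossy one; real audio and video codecs are far more elaborate.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Encode then decode with a lossless coder: the input comes back exactly."""
    return zlib.decompress(zlib.compress(data))


def lossy_roundtrip(samples, step):
    """Toy lossy codec: snap each sample to the nearest multiple of `step`.

    Encoding keeps only the quantization index; decoding multiplies it back,
    so each sample can be off by up to step / 2.
    """
    return [round(s / step) * step for s in samples]


original = [0, 3, 7, 12, 200]

# Lossless: nothing is lost, at the cost of a larger encoded size in general.
restored = list(lossless_roundtrip(bytes(original)))

# Lossy, one generation: small bounded error.
one_pass = lossy_roundtrip(original, 6)

# Lossy, two generations with different settings: errors can compound.
two_pass = lossy_roundtrip(lossy_roundtrip(original, 8), 6)
```

In this sketch the sample 12 survives a single pass at step 6 unchanged, but after a first pass at step 8 followed by a pass at step 6 it comes back as 18, which is the kind of damage from repeated lossy encoding that the passage describes.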

A Novel Edge-Preserving Mesh-Based Method for Image Scaling

A Novel Edge-Preserving Mesh-Based Method for Image Scaling. Seyedali Mostafavian and Michael D. Adams, Dept. of Electrical and Computer Engineering, University of Victoria, Victoria, BC V8P 5C2, Canada. [email protected] and [email protected]
Abstract: In this paper, we present a novel image scaling method that employs a mesh model that explicitly represents discontinuities in the image. Our method effectively addresses the problem of preserving the sharpness of edges, which has always been a challenge, during image enlargement. We use a constrained Delaunay triangulation to generate the model and an approximating function that is continuous everywhere except across the image edges (i.e., discontinuities). The model is then rasterized using a subdivision-based technique. Visual comparisons and quantitative measures show that our method can greatly reduce the blurring artifacts that can arise during image enlargement and produce images that look more pleasant to human observers, compared to the well-known bilinear and bicubic methods.
[...] no loss of image quality. One main problem in vector-based interpolation methods, however, is how to create a vector model which faithfully represents the raster image data and its important features such as edges. Among the many techniques to generate a vector image from a raster image, triangle mesh models have become quite popular. With a triangle-mesh model, the image domain is partitioned into a set of non-overlapping triangles called a triangulation. Then, the image intensity function is approximated over each of the triangles. A mesh-generation method is required to choose a good subset of sample points and to collect any critical data from the input [...]
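To make the blurring that the abstract refers to concrete, here is a minimal sketch of the bilinear baseline it names, not of the paper's mesh-based method. The function name `bilinear_resize`, the corner-aligned coordinate mapping and the list-of-rows image representation are assumptions chosen for illustration.

```python
def bilinear_resize(img, new_h, new_w):
    """Resize a grayscale image (list of rows of numbers) by bilinear interpolation."""
    old_h, old_w = len(img), len(img[0])
    out = []
    for y in range(new_h):
        # Map the output row to a fractional source row (corners aligned).
        sy = y * (old_h - 1) / (new_h - 1) if new_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, old_h - 1)
        fy = sy - y0
        row = []
        for x in range(new_w):
            sx = x * (old_w - 1) / (new_w - 1) if new_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, old_w - 1)
            fx = sx - x0
            # Blend the four neighbouring pixels, first horizontally, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A hard edge between a dark pixel (0) and a bright pixel (100).
edge = [[0, 100]]
enlarged = bilinear_resize(edge, 1, 3)
```

Enlarging the two-pixel edge to three pixels introduces an intermediate value halfway between dark and bright, so the sharp step becomes a ramp. That softened edge is the artifact an edge-preserving method aims to avoid.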

Ease Your Automation, Improve Your Audio, with Ffmpeg

Ease your automation, improve your audio, with FFmpeg. A talk by John Warburton, freelance newscaster for the More Radio network, lecturer in the Department of Music and Media in the University of Surrey, Guildford, England. Ease your automation, improve your audio, with FFmpeg. A talk by John Warburton, who doesn’t have a camera on this machine.
My use of Liquidsoap: I used it to develop a highly convenient, automated system suitable for in-store radio, sustaining services without time constraints, and targeted music and news services. It’s separate from my professional newscasting job, except I leave it playing when editing my professional on-air bulletins, to keep across world, UK and USA news, and for calming music. In this way, it’s incredibly useful, professionally, right now!
What is FFmpeg? It’s not: • “a command line program” • “a tool for pirating” • “a hacker’s plaything” (that’s what they said about GNU/Linux once!) • “out of date” • “full of patent problems”. It asks for: • Some technical knowledge of audio-visual containers and codecs • Some understanding of what makes up picture and sound files and multiplexes.
What is FFmpeg? It: • is a world-leading multimedia manipulation framework • is a gateway to codecs, filters, multiplexors, demultiplexors, and measuring tools • is exceedingly generous at accepting many flavours of audio-visual input • aims to achieve standards-compliant output, and often succeeds • gives the user both library-accessed and command-line-accessed toolkits • is generally among the fastest of its type • incorporates many industry-leading tools • is programmer-friendly • is cross-platform • is open source, by most measures of the term • is at the heart of many broadcast conversion and signal manipulation systems • is a viable Internet transmission platform.
Integration with Liquidsoap? (1.4.3) 1.