A Simple Trading Strategy Based on an Artificial Neural Network

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Free Stock Screener Page 1

Free Stock Screener, www.dojispace.com

Disclaimer: The information provided is not to be considered a recommendation to buy certain stocks and is provided solely as an information resource to help traders make their own decisions. Past performance is no guarantee of future success. It is important to note that no system or methodology has ever been developed that can guarantee profits or ensure freedom from losses. No representation or implication is being made that using The Shocking Indicator will provide information that guarantees profits or ensures freedom from losses. Copyright © 2005-2012. All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, without written prior permission from the author.

The Bullish Engulfing Pattern is one of the strongest patterns that generates a buying signal in candlestick charting and is one of my favorites. The following figure shows what the Bullish Engulfing Pattern looks like.

The following conditions must be met for a pattern to be a bullish engulfing:
1. The stock is in a downtrend (short term or long term).
2. The first candle is a red candle (down day) and the second candle must be white (up day).
3. The body of the second candle must completely engulf the first candle.

The following conditions strengthen the buy signal:
1. The trading volume is higher than usual on the engulfing day.
2. The engulfing candle engulfs multiple previous down days.
3. The stock gaps up or trades higher the next day after the bullish engulfing pattern is formed.
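The three required conditions can be sketched in a few lines of Python. This is only an illustration, not the screener's actual logic: the `Candle` type, the function names, and the `trend_lookback` parameter are hypothetical, "engulf" is interpreted here as body-to-body (open/close only, ignoring wicks), and the downtrend test is a deliberately crude assumption (the first candle closes below the close a few bars earlier).

```python
from dataclasses import dataclass


@dataclass
class Candle:
    """One daily bar; only the body (open/close) is used in this sketch."""
    open: float
    close: float


def is_bullish_engulfing(prev: Candle, curr: Candle) -> bool:
    """Conditions 2 and 3: a down day followed by an up day whose body
    covers the body of the down day (assumed body-to-body comparison)."""
    prev_is_down = prev.close < prev.open
    curr_is_up = curr.close > curr.open
    # For a down candle the body runs from close (low end) to open (high end).
    engulfs = curr.open <= prev.close and curr.close >= prev.open
    return prev_is_down and curr_is_up and engulfs


def bullish_engulfing_signal(candles, trend_lookback=3):
    """Condition 1 plus the pattern itself, checked on the last two candles.

    Assumption: "downtrend" means the first pattern candle closes below the
    close `trend_lookback` bars before it. Real screeners may use other tests.
    """
    if len(candles) < trend_lookback + 2:
        return False
    prev, curr = candles[-2], candles[-1]
    downtrend = prev.close < candles[-2 - trend_lookback].close
    return downtrend and is_bullish_engulfing(prev, curr)


falling = [Candle(10.0, 9.8), Candle(9.8, 9.5), Candle(9.5, 9.2), Candle(9.2, 9.0)]
print(bullish_engulfing_signal(falling + [Candle(8.9, 9.3)]))   # engulfing up day
print(bullish_engulfing_signal(falling + [Candle(9.05, 9.1)]))  # small up day
```

The strengthening conditions (volume, multiple engulfed days, follow-through the next day) are left out; they could be added as separate checks on top of the boolean signal.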

Copyrighted Material

INDEX
Page numbers followed by n indicate note numbers.

A
AB model. See Abreu-Brunnermeier model
Abreu-Brunnermeier (AB) model, 481–482
Absolute breadth index, 327–328
Absolute difference, 327–328
Accelerated trend lines, 65–66
Acceleration factor, 88, 89
Accumulation, 213
ACD method, 186
Achelis, Steven B., 214n1
Active month, 401
AD. See Chaikin Accumulation Distribution
Adaptive markets hypothesis, 12, 503
  implications of, 504
ADRs. See American depository receipts
ADSs. See American depository shares
Advance, 316
Advance-decline methods
  advance-decline line moving average, 322
  one-day change in, 322
  ratio, 328–329
  that no longer are profitable, 322
  to its 32-week simple moving average, 322–324
ADX. See Directional Movement Index
Alexander, Sidney, 494
Alexander’s filter technique, 494–495
American Association of Individual Investors (AAII), 520–525
American depository receipts (ADRs), 317
American Finance Association, 479, 493
Amplitude, 348
Analysis
  description of, 300
  fundamental, 473
Andrews, Dr.
Arnott, Robert, 391
Ascending triangle pattern, 140–141
Asks (offers), 8
Aspray, Thomas, 223
ATM. See Automated teller machine
ATR. See Average trading range; Average true range
At-the-money, 418
Average range, 79–80
Average trading range (ATR), 113
Average true range (ATR), 79–80
Ayers-Hughes double negative divergence analysis, 11
Ayres, Leonard P. (Colonel), 319–320

B
Bachelier, Louis, 493
Bailout, 159
Baltic Dry Index (BDI), 386
Bands, 118–121
  trading strategies and, 120–121, 216, 559
Bandwidth indicator, 121
Bar chart patterns, 125–157. See also Patterns
  behavioral finance and pattern recognition, 129–130
  classic, 134–149, 156
  computers and pattern recognition, 130–131
  learning objective statements, 125
  long-term, 155–156
  market structure and pattern recognition, 131
  overview, 125–126
  pattern description, 126–128
  profitability of, 133–134
Bar charts, 38–39

Trend Following Algorithms in Automated Derivatives Market Trading. Simon Fong, Yain-Whar Si, Jackie Tai

Expert Systems with Applications 39 (2012) 11378–11390. Journal homepage: www.elsevier.com/locate/eswa

Simon Fong, Yain-Whar Si, Jackie Tai. Department of Computer and Information Science, University of Macau, Macau

Keywords: Trend following; Automated trading system; Futures contracts; Mechanical trading

Abstract: Trend following (TF) is trading philosophy by which buying/selling decisions are made solely according to the observed market trend. For many years, many manifestations of TF such as a software program called Turtle Trader, for example, emerged in the industry. Surprisingly little has been studied in academic research about its algorithms and applications. Unlike financial forecasting, TF does not predict any market movement; instead it identifies a trend at early time of the day, and trades automatically afterwards by a pre-defined strategy regardless of the moving market directions during run time. Trend following trading has been popular among speculators. However it remains as a trading method where human judgment is applied in setting the rules (aka the strategy) manually. Subsequently the TF strategy is executed in pure objective operational manner. Finding the correct strategy at the beginning is crucial in TF. This usually involves human intervention in first identifying a trend, and configuring when to place an order and close it out, when certain conditions are met. In this paper, we evaluated and compared a collection of TF algorithms that can be programmed in a computer system for automated trading. In particular, a new version of TF called trend recalling model is presented.

FOREX WAVE THEORY.Pdf

FOREX WAVE THEORY: A Technical Analysis for Spot and Futures Currency Traders. JAMES L. BICKFORD. McGraw-Hill. New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto

Copyright © 2007 by The McGraw-Hill Companies. All rights reserved. Manufactured in the United States of America. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher. 0-07-151046-X. The material in this eBook also appears in the print version of this title: 0-07-149302-6.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps. McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. For more information, please contact George Hoare, Special Sales, at [email protected] or (212) 904-4069.

TERMS OF USE: This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. Use of this work is subject to these terms.

The Candlestick Forecaster

The Candlestick Forecaster, Samurai Edition, User Manual. Copyright © 2000 Highest Summit Technologies Sdn Bhd. All Rights Reserved.

LICENCE AGREEMENT: THE CANDLESTICK FORECASTER® software constitutes a CD having copyrighted computer software accompanied by a copyrighted user manual in which all copyrights and ownership rights are owned only by Highest Summit Technologies Sdn Bhd (HST). HST grants to you a non-exclusive license to use a copy of The Candlestick Forecaster software on a single computer and the terms of this grant is effective unless violated. You may call and discuss with us by telephone any questions about the installation and use of The Candlestick Forecaster software by calling our office at (603) 245-5877, fax us at (603) 245-6792 or email us at [email protected]. We reserve the right to discontinue technical support at anytime without notice to you.

You are not entitled to sub-license, rent, lease, sell, pledge or otherwise transfer or distribute the original copy of The Candlestick Forecaster software. Modification, disassembly, reverse engineering or creating derivative works based on the software or any portion thereof is expressly prohibited. Copying of the manual is also prohibited. Breach of these provisions automatically terminates this agreement and subjects you to further legal implications.

The Candlestick Forecaster is warranted for ninety (90) days from the date of purchase to be free of defects in materials and workmanship under normal use. To obtain replacement of any material under this warranty, you must return the inaccurate CD or copy of the manual to us within the warranty period or notify us in writing within the warranty period that you have found an inaccuracy in The Candlestick Forecaster software and then return the materials to us.

Forex Training Summary and Quiz – Stochastic Oscillator in Forex

LESSON 4: STOCHASTIC OSCILLATOR. Forex Training Summary and Quiz: Stochastic Oscillator in Forex

Stochastic Oscillators were developed in the late 1950s by George C. Lane and are used to help predict the future direction of an exchange rate. The Oscillator scale ranges from 0 to 100. When calculating the strength of a trend, the Stochastic Oscillator defines an uptrend as the period of time when rates remain equal to or higher than the previous close, while a downtrend is the period of time when rates remain equal to or lower than the previous close.

The Full Stochastic consists of two stochastic lines, %K and %D, where:
• %K tracks the current rate for the currency pair
• %D is a moving average based on the %K line; because it is an average of %K, it produces a "smoothed out" version of %K

The %K line is commonly referred to as the Fast Stochastic as it moves with changes in the spot rate, while the %D line, which is a moving average of the %K line, reacts more slowly to rate changes. For this reason, it is often referred to as the Slow Stochastic. Crossovers occur when the %K line intersects the %D line.
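The relationship between %K, %D, and crossovers can be made concrete with a small Python sketch. The excerpt does not give a formula, so this uses the standard definition, %K = 100 × (close − lowest low) / (highest high − lowest low) over a look-back window, with %D as a simple moving average of %K. The function names, the default periods, and the handling of a flat window (returning 50) are assumptions made for illustration.

```python
def stochastic_k(highs, lows, closes, period=14):
    """%K for each bar that has a full look-back window (values in 0..100)."""
    ks = []
    for i in range(period - 1, len(closes)):
        highest = max(highs[i - period + 1 : i + 1])
        lowest = min(lows[i - period + 1 : i + 1])
        span = highest - lowest
        # Assumption: a flat window (span == 0) is reported as the midpoint.
        ks.append(100.0 * (closes[i] - lowest) / span if span else 50.0)
    return ks


def sma(values, period):
    """Simple moving average; used here to smooth %K into %D."""
    return [
        sum(values[i - period + 1 : i + 1]) / period
        for i in range(period - 1, len(values))
    ]


def crossovers(k, d):
    """Indices (into d) where %K moves from one side of %D to the other."""
    offset = len(k) - len(d)  # d is shorter because of its own warm-up
    events = []
    for i in range(1, len(d)):
        before = k[offset + i - 1] - d[i - 1]
        after = k[offset + i] - d[i]
        if before <= 0 < after:
            events.append((i, "K crosses above D"))
        elif before >= 0 > after:
            events.append((i, "K crosses below D"))
    return events


k = [10.0, 20.0, 60.0, 40.0]
d = sma(k, 2)
print(d)
print(crossovers(k, d))
```

Because %D averages several %K values, it lags %K; that lag is what makes the two lines cross when the rate changes direction.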

Stochastic Pop & Drop Strategies

Establish a Trading Bias
• Establish a short-term trading bias with a long-term indicator.
• Traders look for bullish setups when the bias is bullish and bearish setups when the bias is bearish.
• Trading in the direction of this bias is like riding a bike with the wind at your back. The chances of success are higher when the bigger trend is in your favor.
• Use the weekly Stochastic Oscillator to define the trading bias.
• In particular, the trading bias is deemed bullish when the weekly 14-period Stochastic Oscillator is above 50 and rising, and vice versa for bearish bias.
• Use a 70-period daily Stochastic Oscillator so all indicators can be displayed on the chart. (This timeframe is simply five times the 14-day timeframe.)

Stochastic Pop Buy Signal
• 70-day Stochastic Oscillator is above 50
• 14-day Stochastic Oscillator surges above 80
• Stock rises on high volume and/or breaks consolidation resistance.
• Candle pattern confirmation

Stochastic Pop
• Once the bullish prerequisites are in place, a buy signal triggers when the 14-day Stochastic Oscillator surges above 80 and the stock breaks out on above average volume.
• Consolidation breakouts are preferred when using this strategy (i.e., box range).
• Do not ignore high volume signals that do not produce breakouts.
• Sometimes the initial high-volume surge is a precursor to a breakout.

[Chart slides: Box Range / Consolidation; Trending Market; Trending Market / Box Range; Stochastics]

Stochastic Drop Sell Signal
• 70-day Stochastic Oscillator is below 50
• 14-day Stochastic Oscillator plunges below 20
• Stock declines on high volume and/or breaks consolidation support.
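The buy and sell rules above can be written as a single decision function. The sketch below is a hedged reading of the slides, not a reference implementation: the function name and parameters are hypothetical, the oscillator values are assumed to be computed elsewhere, "surges above 80" and "plunges below 20" are interpreted as a crossing between the previous and current bar, and the candle pattern confirmation is left out.

```python
def pop_drop_signal(stoch70, stoch14_prev, stoch14, volume, avg_volume,
                    breakout=False):
    """Return "buy", "sell", or None for the latest bar.

    stoch70      : 70-day Stochastic Oscillator (trading bias filter)
    stoch14_prev : 14-day Stochastic Oscillator on the previous bar
    stoch14      : 14-day Stochastic Oscillator on the current bar
    breakout     : True if price broke consolidation resistance (for a buy)
                   or support (for a sell); how that is detected is not
                   specified by the slides and is left to the caller.
    """
    # "High volume and/or breaks consolidation": either one confirms.
    confirmed = volume > avg_volume or breakout

    # Pop: bullish bias, and the 14-day line crosses up through 80.
    if stoch70 > 50 and stoch14_prev <= 80 < stoch14 and confirmed:
        return "buy"
    # Drop: bearish bias, and the 14-day line crosses down through 20.
    if stoch70 < 50 and stoch14_prev >= 20 > stoch14 and confirmed:
        return "sell"
    return None


print(pop_drop_signal(60, 75, 85, volume=2_000_000, avg_volume=1_000_000))
print(pop_drop_signal(40, 25, 15, volume=2_000_000, avg_volume=1_000_000))
print(pop_drop_signal(60, 85, 90, volume=2_000_000, avg_volume=1_000_000))
```

Requiring a crossing rather than a level means a bar that is already above 80 does not trigger a second buy; whether that matches the intended strategy is a judgment call.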

Data Visualization and Analysis

HTML5 Financial Charts: Data visualization and analysis. Xinfinit’s advanced HTML5 charting tool is also available separately from the managed data container and can be licensed for use with other tools and used in conjunction with any editor. It includes a comprehensive library of over sixty technical indicators; additional ones can easily be developed and added on request. Features Analysis Tools Trading from Chart Trend Channel Drawing Instrument Selection Circle Drawing Chart Duration Rectangle Drawing Chart Intervals Fibonacci Patterns Chart Styles (Line, OHLC etc.) Andrews’ Pitchfork Comparison Regression Line and Channel Percentage (Y-axis) Up and Down arrows Log (Y-axis) Text box Show Volume Save Template Show Data values Load Template Show Last Value Save Show Cross Hair Load Show Cross Hair with Last Show Min / Max Show / Hide History panel Show Previous Close Technical Indicators Show News Flags Zooming Data Streaming Full Screen Print Select Tool Horizontal Divider Trend tool Volume by Price Horizontal Line Drawing Book Volumes Features Technical Indicators Acceleration/Deceleration Oscillator Elliott Wave Oscillator Accumulation Distribution Line Envelopes Aroon Oscillator Fast Stochastic Oscillator Aroon Up/Down Full Stochastic Oscillator Average Directional Index GMMA Average True Range GMMA Oscillator Awesome Oscillator Highest High Bearish Engulfing Historical Volatility Bollinger Band Width Ichimoku Kinko Hyo Bollinger Bands Keltner Indicator Bullish Engulfing Know Sure Thing Chaikin Money Flow Lowest Low Chaikin Oscillator

Trading Strategies Using Stochastic

ANALYSIS TOOLS. By Ng Ee Hwa, ChartNexus Market Strategist. TRADING STRATEGIES USING STOCHASTIC

In the aftermath of the global market correction following the big drop in the Chinese stock market indices in Feb 2007, investors have returned to the markets with a vengeance pushing the indices to scale new heights almost daily. However with recent moves by the Chinese authorities to cool the bullishness through measures such as the increase in stamp duties, the Chinese stock markets have again dropped significantly in the first few days of June 2007. This example of wild swings in the market sentiment can cause serious damage to the retail investor’s pocket, especially those uneducated in the forces at play in the stock market. Consequently it is vital that investors and traders are well-equipped in technical analysis tools to time the market effectively. This article will look at one of the tools in Technical Analysis indicators called Stochastic, a momentum indicator that shows clear bullish and bearish signals.

Stochastic is a momentum oscillator developed by George C. Lane in the late 1950s. Lane noticed that in an up trending stock, prices will usually make higher highs and the daily closing price will tend to accumulate near the extreme highs of the “look back” periods. Similarly, a down trending stock demonstrated the same behavior of which the daily closing price tends to […]

[…] oscillators that oscillate in a fixed range, an overbought and oversold condition can be specified. Based on his trading experience, Lane defined the overbought and oversold region for the Stochastic value to be above 80 and below 20 respectively. He deemed that a Stochastic value above 80 or below 20 may signal that a price trend reversal may be imminent.
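Lane's overbought and oversold regions translate directly into a threshold check. The following Python sketch labels each Stochastic value and reports the bars on which the oscillator enters a region; the function names are hypothetical, and treating exactly 80 or 20 as neutral follows the "above 80" and "below 20" wording in the excerpt.

```python
def stochastic_zone(value, overbought=80.0, oversold=20.0):
    """Classify one Stochastic reading using the 80/20 regions."""
    if value > overbought:
        return "overbought"
    if value < oversold:
        return "oversold"
    return "neutral"


def zone_entries(values, overbought=80.0, oversold=20.0):
    """List (index, zone) for each bar where the oscillator enters a region.

    Entering a region is only a hint that a reversal may be near; it is
    not treated as a trade signal in this sketch.
    """
    events = []
    previous = None
    for i, value in enumerate(values):
        zone = stochastic_zone(value, overbought, oversold)
        if zone != "neutral" and zone != previous:
            events.append((i, zone))
        previous = zone
    return events


readings = [50, 85, 90, 70, 15, 10, 30]
print(zone_entries(readings))
```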

Technical-Analysis-Bloomberg.Pdf

TECHNICAL ANALYSIS Handbook. 2003 Bloomberg L.P. All rights reserved.

There are two principles of analysis used to forecast price movements in the financial markets: fundamental analysis and technical analysis. Fundamental analysis, depending on the market being analyzed, can deal with economic factors that focus mainly on supply and demand (commodities) or valuing a company based upon its financial strength (equities). Fundamental analysis helps to determine what to buy or sell. Technical analysis is solely the study of market, or price action through the use of graphs and charts. Technical analysis helps to determine when to buy and sell. Technical analysis has been used for thousands of years and can be applied to any market, an advantage over fundamental analysis. Most advocates of technical analysis, also called technicians, believe it is very likely for an investor to overlook some piece of fundamental information that could substantially affect the market. This fact, the technician believes, discourages the sole use of fundamental analysis. Technicians believe that the study of market action will tell all; that each and every fundamental aspect will be revealed through market action. Market action includes three principal sources of information available to the technician: price, volume, and open interest. Technical analysis is based upon three main premises: 1) Market action discounts everything; 2) Prices move in trends; and 3) History repeats itself.

This manual was designed to help introduce the technical indicators that are available on The Bloomberg Professional Service. Each technical indicator is presented using the suggested settings developed by the creator, but can be altered to reflect the users’ preference.

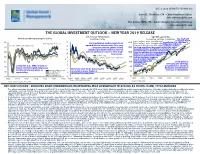

The Global Investment Outlook – New Year 2019 Release U.S

DEC 4 2018 (UPDATED TO NOV 30). Daniel E. Chornous, CFA, Chief Investment Officer, [email protected]. Eric Savoie, MBA, CFA, Senior Analyst, Investment Strategy, [email protected]. THE GLOBAL INVESTMENT OUTLOOK – NEW YEAR 2019 RELEASE

[Chart: U.S. 10-year T-Bond yield, equilibrium range. Last Plot: 2.99%. Current Range: 2.12% - 3.90% (Mid: 3.01%).] Our equilibrium model suggests an upward bias to interest rates for a very long time into the future should inflation premiums and real rates of interest ultimately return to their long-term norms.

[Chart: S&P 500 equilibrium, normalized earnings & valuations. Nov. '18 Range: 2141 - 3563 (Mid: 2852). Nov. '19 Range: 2218 - 3691 (Mid: 2954). Current (30-November-18): 2760.] The S&P 500 is slightly below equilibrium (i.e. the band’s midpoint), and many other markets continue to show significant discounts to their fair value. A significant rise in inflation and interest rates will eventually reduce the sustainable level for valuations, but normalizing P/E’s and corporate profitability to reflect current levels for these critical variables suggests stock market valuations are reasonable, but certainly not “cheap” in all locations.

[Chart: Global purchasing managers' indices. ISM Peak Aug 2018: 61.3. Previous ISM Peak Feb 2011: 59.2.] Led by the U.S., PMIs remain at levels consistent with expansion, but the trend everywhere is […]

Solid gains in revenues and earnings propelled U.S. stocks in particular, but threats to both are accumulating. But in the near-term, the pressure […]

A Machine Learning Approach

Master Thesis, Software Engineering. Thesis no: MSE […]. August […]. A Machine learning approach in financial markets. Christian Ewö. Department of Software Engineering and Computer Science, Blekinge Institute of Technology, Box 520, SE – 372 25 Ronneby, Sweden

This thesis is submitted to the Department of Software Engineering and Computer Science at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering. The thesis is equivalent to 20 weeks of full time studies.

Contact Information. Author: Christian Ewö. Address: Solvägen 11, s-39351 Kalmar, SWEDEN. E-mail: [email protected]. Phone: +46 739803781. External advisor: Dr. Peter Andras, The University of Newcastle upon Tyne. Address: Claremont Tower, School of Computing Science, University of Newcastle. Phone: +44 191 2227946. University advisor: Prof. Paul Davidsson, Department of Software Engineering and Computer Science. Internet: www.bth.se/ipd. Phone: +46 457 38 50 00. Fax: +46 457 271 25

ABSTRACT: In this work we compare the prediction performance of three optimized technical indicators with a Support Vector Machine Neural Network. For the indicator part we picked three commonly used indicators: Relative Strength Index, Moving Average Convergence Divergence and Stochastic Oscillator. For the Support Vector Machine we used a radial-basis kernel function and regression mode. The techniques were applied to financial time series taken from the Swedish stock market. The comparison and the promising results should be of interest both to finance practitioners using the techniques and to software companies and others considering implementing the techniques in their products.
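As a concrete illustration of one of the indicators named in the abstract, here is a minimal Python sketch of the Relative Strength Index. It is not the thesis's implementation, which is not shown in the excerpt: the function name is hypothetical, the variant uses plain averages of gains and losses over the window (often called Cutler's RSI) rather than Wilder's exponential smoothing, and returning 50 for a completely flat window is an assumption.

```python
def rsi_simple(closes, period=14):
    """RSI over the last `period` price changes, using simple averages.

    RSI = 100 - 100 / (1 + RS), where RS = total gains / total losses
    over the window (the 1/period factors cancel in the ratio).
    """
    if len(closes) < period + 1:
        raise ValueError("need at least period + 1 closing prices")

    # Pair each close with the next one to get the last `period` changes.
    changes = [b - a for a, b in zip(closes[-period - 1 : -1], closes[-period:])]
    gains = sum(c for c in changes if c > 0)
    losses = sum(-c for c in changes if c < 0)

    if losses == 0:
        # Assumption: no movement at all maps to the midpoint, pure gains to 100.
        return 50.0 if gains == 0 else 100.0
    rs = gains / losses
    return 100.0 - 100.0 / (1.0 + rs)


print(rsi_simple([1, 2, 1, 2, 1], period=4))
```

Values near 100 indicate that recent changes were mostly gains, and values near 0 that they were mostly losses.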